The big picture: Samsung said the drive will be available this quarter in both form factors but didn’t mention pricing. Given its massive capacities and enterprise-class positioning, buyers can likely expect to shell out a small fortune for it.

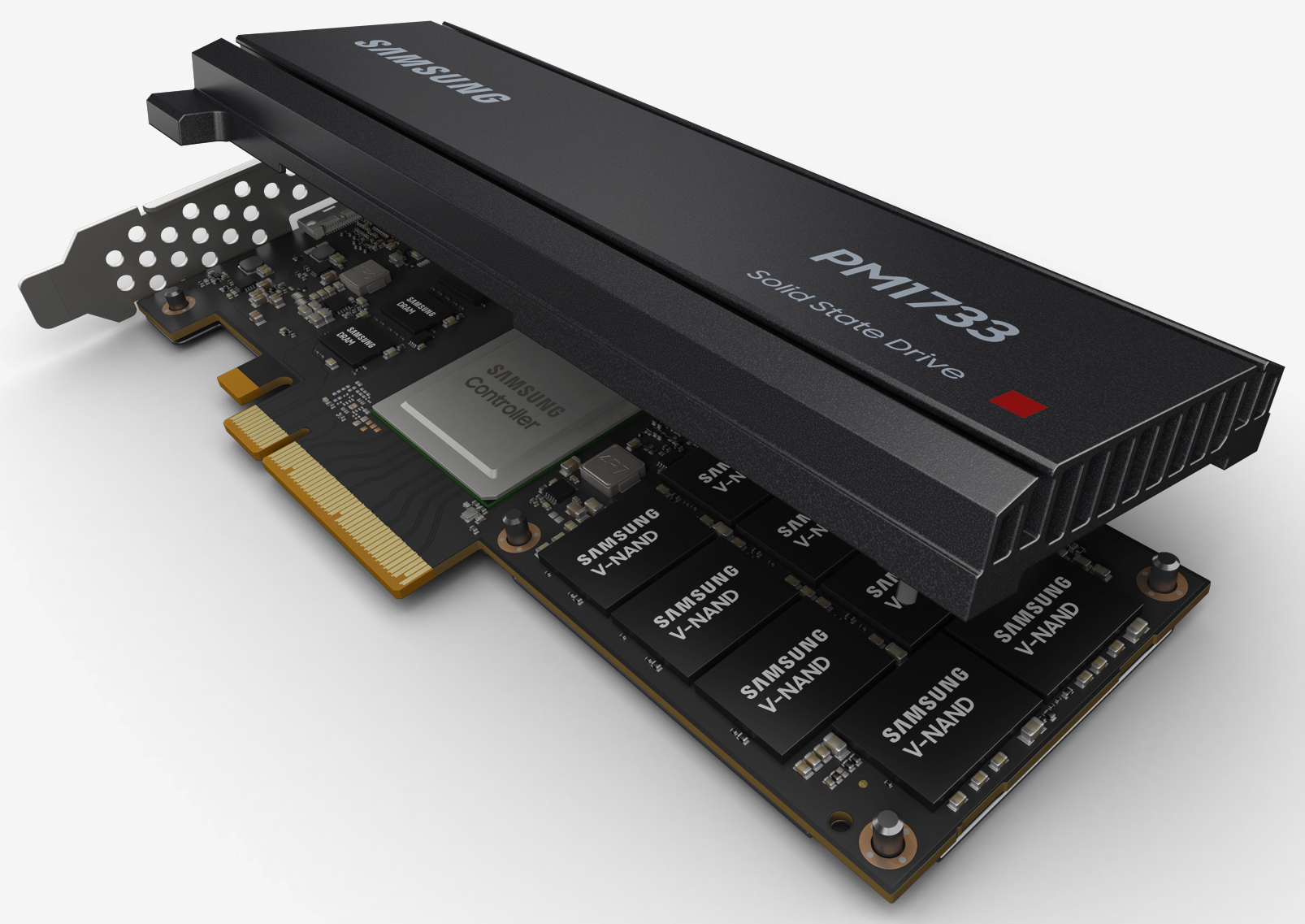

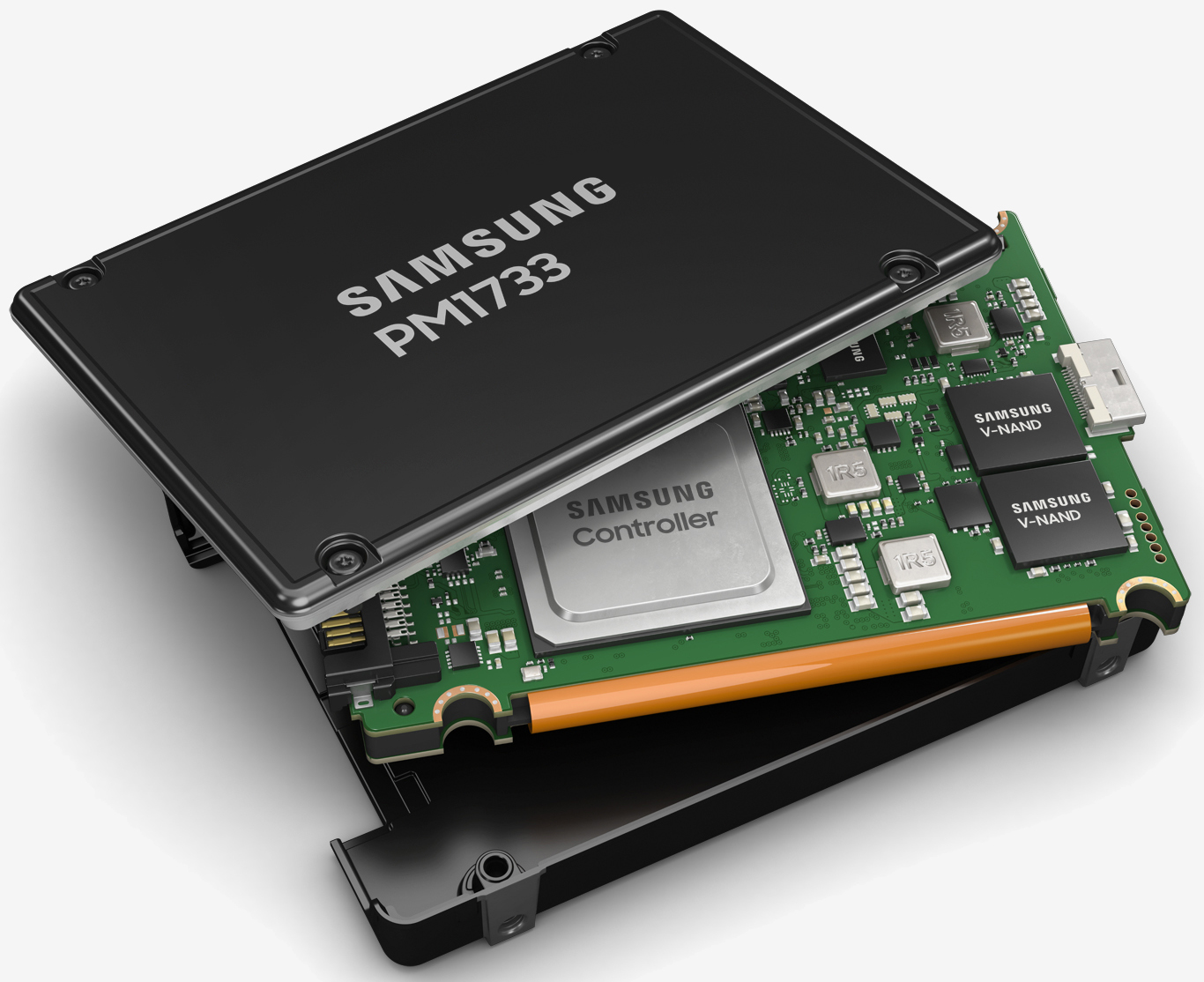

Samsung on Friday announced the PM1733 PCIe Gen4 Solid State Drive (SSD), an enterprise-class drive that offers the “highest performance of any SSD on the market today.”

Built using fifth-generation 512Gb TLC V-NAND, the PM1733 will be offered in both U.2 (Gen 4 x4) and HHHL (Gen 4 x8) form factors in maximum capacities of 30.72TB and 15.36TB, respectively. It is backward compatible with the older PCIe 3.0 interface, but running it on that platform will severely limit overall performance.

Speaking of performance, Samsung said the drive offers sequential read speeds of 8.0GB/s and random read performance of 1,500,000 IOPS. Additional specs haven’t been revealed, although Samsung did say the drive will have double the throughput of current Gen 3 SSDs.
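For a rough sense of why the interface matters, the theoretical link bandwidth can be worked out from the per-lane transfer rates in the PCIe 3.0 and 4.0 specifications (8 GT/s and 16 GT/s, both with 128b/130b encoding). The sketch below is a back-of-the-envelope calculation, not Samsung's figures: the function name `link_gb_per_s` is hypothetical, and real-world throughput is lower once protocol overhead is accounted for.

```python
# Theoretical one-direction PCIe link bandwidth, before protocol overhead.
# Per-lane transfer rates (GT/s) come from the PCIe 3.0 and 4.0 specs.
GT_PER_S = {3: 8.0, 4: 16.0}

# Both generations use 128b/130b line encoding: 128 payload bits per 130 sent.
ENCODING = 128 / 130


def link_gb_per_s(gen: int, lanes: int) -> float:
    """Return the raw link bandwidth in GB/s (hypothetical helper)."""
    # GT/s * encoding efficiency = usable Gb/s per lane; divide by 8 for GB/s.
    return GT_PER_S[gen] * ENCODING / 8 * lanes


for gen, lanes in [(3, 4), (3, 8), (4, 4), (4, 8)]:
    print(f"PCIe {gen}.0 x{lanes}: {link_gb_per_s(gen, lanes):.2f} GB/s")
```

By this estimate a Gen 4 link carries twice the bandwidth of a Gen 3 link of the same width, which lines up with Samsung's "double the throughput" claim. It also puts a Gen 4 x4 link just under 8GB/s, so the headline sequential read figure presumably applies to the x8 HHHL card, although Samsung hasn't broken the numbers out by form factor here.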

Interestingly enough, the PM1733 also features dual port capabilities “to support storage as well as server applications.”

In related news, Samsung has also provided its full line-up of RDIMM and LRDIMM dynamic random access memory (DRAM) for AMD’s EPYC 7002 generation processors. AMD announced its second-gen EPYC processors earlier this week.