The big picture: The server business is expected to reach new revenue heights in the next few years, and this growth is not driven solely by AI workload acceleration. According to market research company Omdia, powerful GPU co-processors are spearheading the shift toward a fully heterogeneous computing model.

Omdia anticipates that yearly server unit shipments will decline by up to 20 percent by the end of 2023, even as revenues are expected to grow by six to eight percent. The company's recent Cloud and Data Center Market Update report describes a data center market being reshaped by demand for AI servers. This, in turn, is fostering a broader transition to the hyper-heterogeneous computing model.

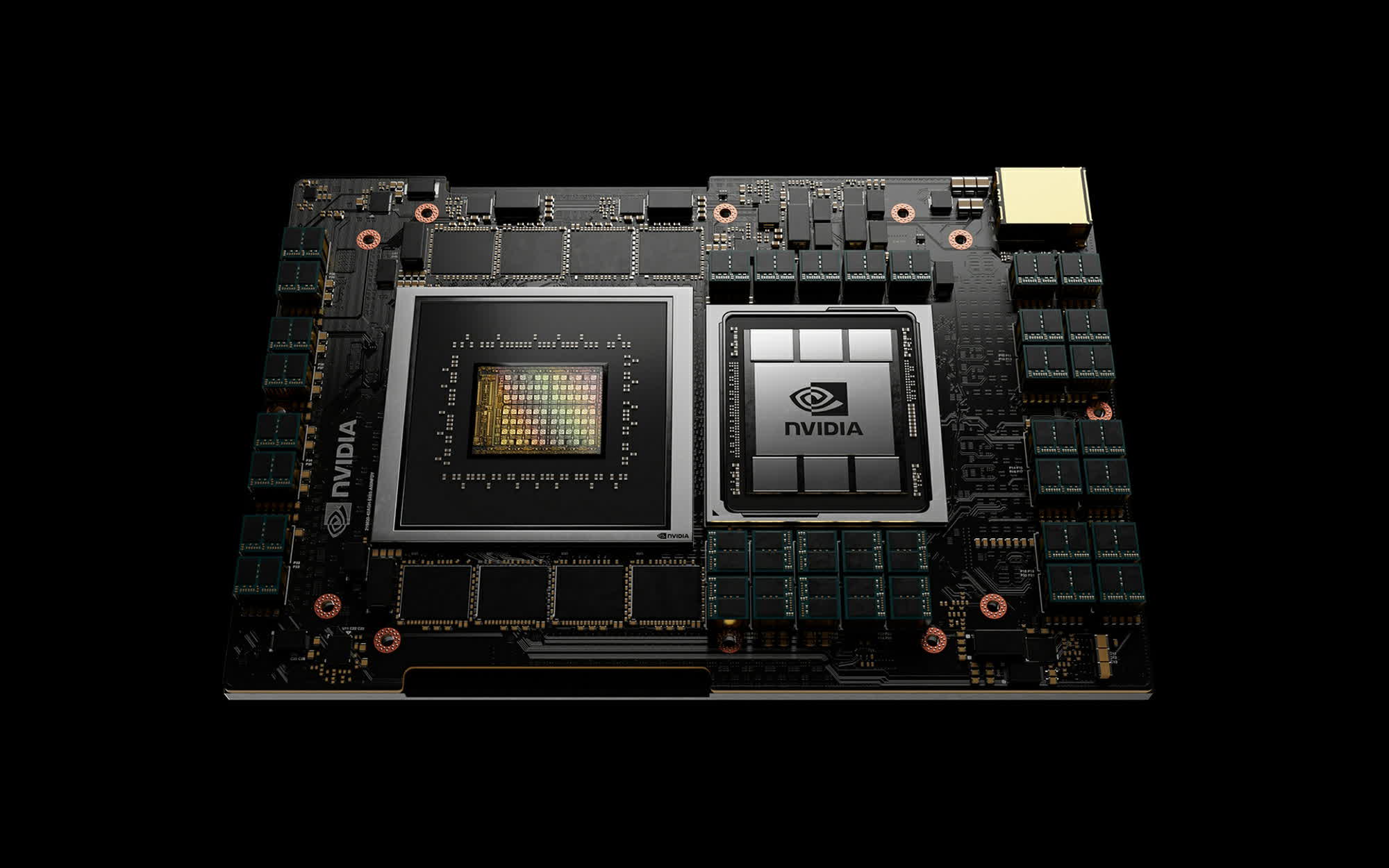

Omdia has coined the term "hyper-heterogeneous computing" to describe a server configuration equipped with co-processors designed to optimize specific workloads, whether AI model training or other specialized applications. According to Omdia, Nvidia's DGX, featuring eight H100 or A100 GPUs, has emerged as the most popular AI server to date and is particularly effective for training chatbot models.

In addition to Nvidia's offerings, Omdia highlights Amazon's Inferentia 2-based servers as popular AI accelerators. These servers are equipped with custom-built co-processors designed to accelerate AI inferencing workloads. Other co-processors contributing to the hyper-heterogeneous computing trend include Google's Video Coding Units (VCUs) for video transcoding and Meta's video processing servers, which leverage the company's Scalable Video Processors.

In this new hyper-heterogeneous computing scenario, manufacturers are increasing the number of costly silicon components installed in their server models. According to Omdia's forecast, CPUs and specialized co-processors will constitute 30 percent of data center spending by 2027, up from less than 20 percent in the previous decade.

Currently, media processing and AI take the spotlight in most hyper-heterogeneous servers. However, Omdia anticipates that other ancillary workloads, such as databases and web servers, will have their own co-processors in the future. Solid-state drives with computational storage components can be viewed as an early form of in-hardware acceleration for I/O workloads.

Based on Omdia's data, Microsoft and Meta currently lead among hyperscalers in the deployment of server GPUs for AI acceleration. Each company is expected to receive 150,000 Nvidia H100 GPUs by the end of 2023, three times as many as Google, Amazon, or Oracle are deploying.

The demand for AI acceleration servers from cloud companies is so high that original equipment manufacturers like Dell, Lenovo, and HPE are facing delays of 36 to 52 weeks in obtaining enough H100 GPUs from Nvidia to fulfill their clients' orders. Omdia notes that powerful data centers equipped with next-gen co-processors are also driving increased demand for power and cooling infrastructure.

https://www.techspot.com/news/100984-server-shipments-2023-down-but-revenue-growing.html