Something to look forward to: Competition between Samsung and SK Hynix over DDR5 memory is heating up. With faster, denser memory on the horizon, engineers are pushing clock speeds up and voltages down. The result should be desktop memory that represents a major step forward for the industry.

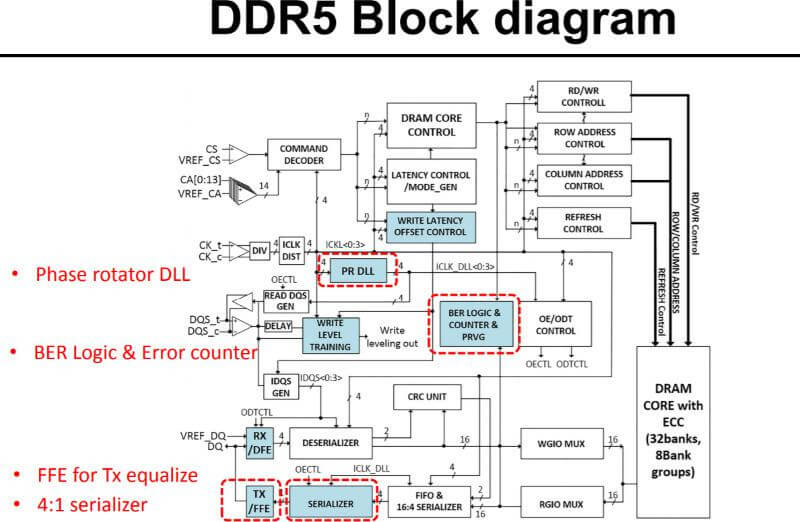

At the ongoing International Solid-State Circuits Conference (ISSCC), memory maker SK Hynix has shared its plans for DDR5. Although JEDEC still considers the DDR5 standard to be under development, a real-world design will help push the specification toward completion.

Finished products using DDR5 are expected to be available in the fourth quarter of this year, whether or not the standard has been officially ratified by then. In its presentation, Hynix showcased a 16Gb DDR5 SDRAM chip capable of running at 6.4Gb/s per pin while operating at 1.1V.
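To put the per-pin figure in perspective, the short sketch below converts a per-pin data rate into theoretical peak bandwidth for a memory module. It assumes a conventional 64-bit data bus per module and ignores ECC bits and protocol overhead, so real-world throughput will be lower; the function name is purely illustrative.

```python
def peak_bandwidth_gbytes(per_pin_gbits: float, bus_width_bits: int = 64) -> float:
    """Theoretical peak bandwidth in GB/s (illustrative helper).

    Assumes every data pin transfers at the quoted per-pin rate and that
    the bus is 64 bits wide by default; ECC and overhead are ignored.
    """
    # Gb/s per pin * number of data pins gives total Gb/s; divide by 8 for GB/s.
    return per_pin_gbits * bus_width_bits / 8


# Hynix's quoted 6.4Gb/s per pin on an assumed 64-bit module.
print(peak_bandwidth_gbytes(6.4))
```

Under these assumptions, a 64-bit module at 6.4Gb/s per pin tops out at roughly 51.2GB/s.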

Although Hynix's design choices enable high clock speeds, Samsung still appears to lead on raw performance. Samsung's 10nm LPDDR5 SDRAM can move data at 7.5Gb/s on only 1.05V. However, Samsung declined to present any operational details of its memory, making it difficult to know how the two designs differ at the silicon level.

Regardless of which company wins the performance race, the next generation of memory promises up to 50 percent more bandwidth than DDR4. Density is also expected to double compared with DDR4. Desktop workstations with 1TB of memory are no longer out of the question, although cost and practicality will be limiting factors for most users.

Hynix predicts that DDR5 will account for 25 percent of memory sales by 2021 and reach 44 percent in 2022. Pricing and availability of consumer memory modules are still unknown, but rest assured that large-capacity DIMMs with record-setting clock speeds will not be wallet-friendly at first.

https://www.techspot.com/news/78879-sk-hynix-presents-first-ddr5-chip-promising-major.html