In brief: One of the many concerns that have been raised regarding generative AIs is their potential to show political bias. A group of researchers put this to the test and discovered that ChatGPT generally favors left-wing political views in its responses.

Story correction (Aug. 20): Two Princeton computer scientists have examined the paper claiming that ChatGPT has a 'liberal bias' more closely and found glaring flaws in it. The paper tested an outdated language model not associated with ChatGPT and used problematic methods, including multiple-choice questions and weak prompts.

It turns out that ChatGPT does not guide users on how to vote, as the model is trained to avoid or refuse to answer controversial political questions. When GPT-4 was tested, it refused to answer in 84% of cases. However, ChatGPT's design does let users set specific response preferences, so the chatbot can be made to align with their political views if they wish.

The original story follows below:

A study led by academics from the University of East Anglia sought to determine whether ChatGPT showed political leanings in its answers rather than responding impartially. The researchers asked OpenAI's tool to impersonate individuals from across the political spectrum and answer a series of more than 60 ideological questions. These were taken from the Political Compass test, which indicates whether someone leans more to the right or to the left.

The next step was to ask ChatGPT the same questions without impersonating anyone. The researchers then compared the responses and noted which set of impersonated answers was closest to the AI's default voice.

The researchers found that the default responses were more closely aligned with the Democratic Party than with the Republican Party. The result was the same when they told ChatGPT to impersonate UK Labour and Conservative voters: there was a strong correlation between the chatbot's default answers and those it gave while impersonating the more left-wing Labour supporter.

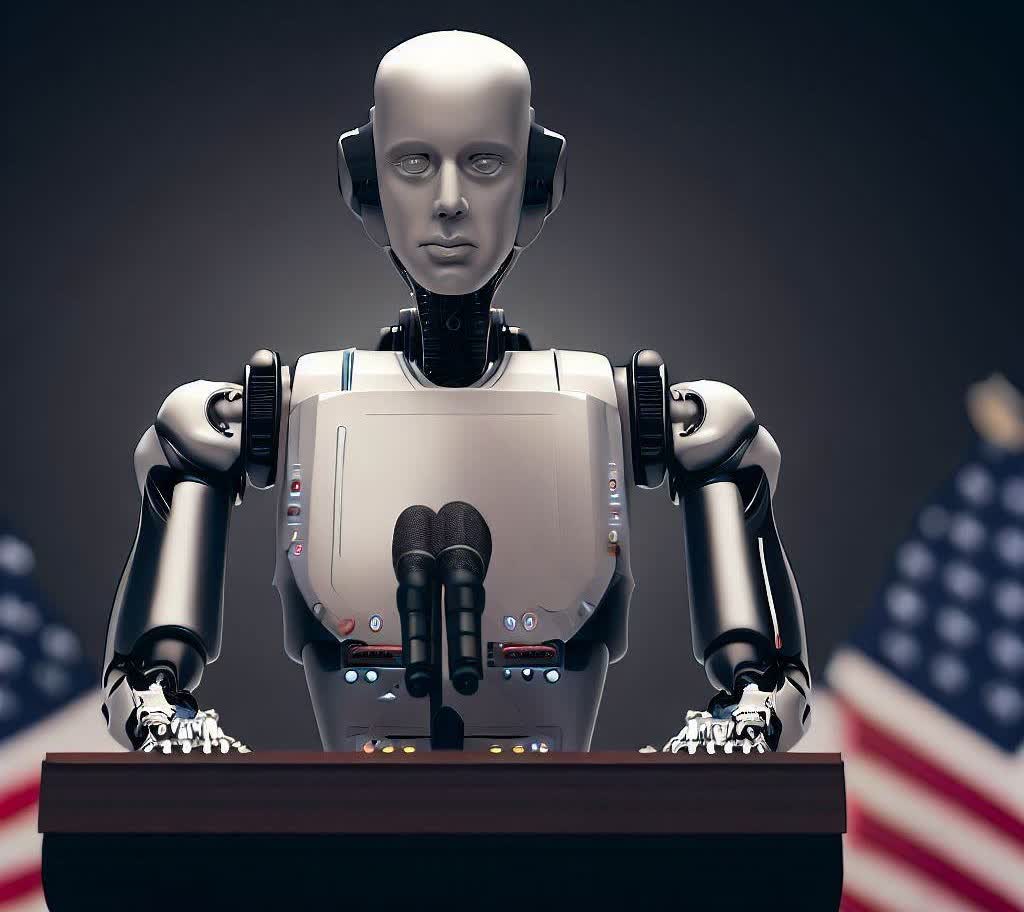

ChatGPT would likely prefer the president on the left, based on the test results

Another test asked ChatGPT to imitate supporters of Brazil's left-aligned current president, Luiz Inácio Lula da Silva, and former right-wing leader Jair Bolsonaro. Again, ChatGPT's default answers were closer to the former's.

Because ChatGPT can give different answers when asked the same question repeatedly, each question in the test was asked 100 times. The answers were then put through a 1,000-repetition "bootstrap," a statistical procedure that resamples a single dataset to create many simulated samples, helping improve the test's reliability.
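For readers unfamiliar with bootstrapping, here is a minimal Python sketch of the general technique. It assumes each of the 100 answers to one question has been coded as a number on a hypothetical 0-to-3 agreement scale; the answer list, the function names `bootstrap_means` and `percentile_interval`, and the 95% percentile interval are illustrative assumptions, not the researchers' code or data.

```python
import random
import statistics

def bootstrap_means(sample, n_resamples=1000, seed=0):
    """Resample `sample` with replacement n_resamples times and return each resample's mean."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    n = len(sample)
    # Each simulated sample has the same size as the original and is drawn with replacement.
    return [statistics.fmean(rng.choices(sample, k=n)) for _ in range(n_resamples)]

def percentile_interval(values):
    """Return the 2.5th and 97.5th percentiles of the bootstrap distribution (a 95% interval)."""
    cuts = statistics.quantiles(values, n=40)  # 39 cut points in steps of 2.5%
    return cuts[0], cuts[-1]

# Hypothetical coded answers to a single question asked 100 times
# (0 = strongly disagree, 1 = disagree, 2 = agree, 3 = strongly agree).
answers = [2] * 60 + [3] * 25 + [1] * 15

means = bootstrap_means(answers)
low, high = percentile_interval(means)
print(f"original mean: {statistics.fmean(answers):.2f}")
print(f"95% bootstrap interval: {low:.2f} to {high:.2f}")
```

Because each resample draws 100 answers with replacement from the same 100, the spread of the 1,000 resampled means indicates how much the average answer could vary by chance alone, which is what makes a comparison between default and impersonated answers more trustworthy.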

Project leader Fabio Motoki, a lecturer in accounting, warned that this sort of bias could affect users' political views and has potential implications for political and electoral processes. He suggested that the bias stems from either the training data taken from the internet or ChatGPT's algorithm, which could be making existing biases even worse.

"Our findings reinforce concerns that AI systems could replicate, or even amplify, existing challenges posed by the internet and social media," Motoki said.

https://www.techspot.com/news/99838-openai-chatgpt-has-left-wing-bias-times.html