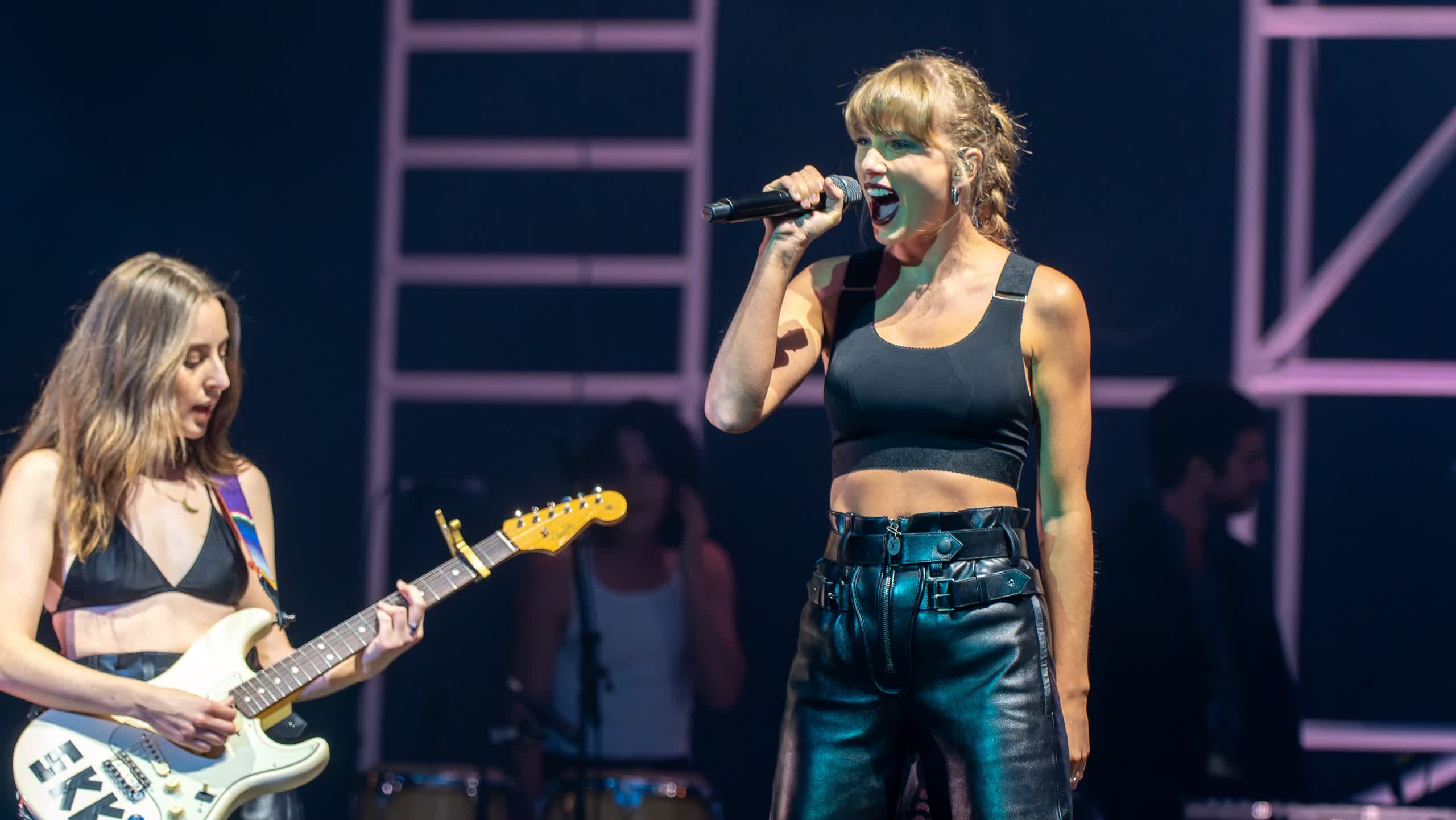

TL;DR: World-famous pop star Taylor Swift has become the latest victim of deepfake pornography this week, after AI-generated images of her were shared tens of millions of times on social media platforms. With that, deepfakes are back in the legislative consciousness. Congressional representatives and even the White House have now weighed in on the matter.

Explicit images of singer-songwriter Taylor Swift, 34, were shared on X this week, garnering over 27 million views and 260,000 "likes" before the account that posted the images was shut down. That did little to stop the spread though, as the images have continued to circulate and have reportedly been viewed over 40 million times.

Responding to the incident, X has been actively removing the images and has disabled searches for Taylor Swift on the platform to try to contain the spread. In a statement, it said, "We're closely monitoring the situation to ensure that any further violations are immediately addressed, and the content is removed."

But on-platform moderation may not be enough. Now, members of the US Congress and even the White House have weighed in on the issue. US Representative Joe Morelle has said that deepfake images "can cause irrevocable emotional, financial, and reputational harm – and unfortunately, women are disproportionately impacted."

Democratic Representative Yvette Clarke said, "What's happened to Taylor Swift is nothing new. For years, women have been targets of deepfakes [without] their consent. And [with] advancements in AI, creating deepfakes is easier & cheaper."

On Friday, White House Press Secretary Karine Jean-Pierre called the images "alarming" and said in a statement, "While social media companies make their own independent decisions about content management, we believe they have an important role to play in enforcing their own rules to prevent the spread of misinformation, and non-consensual, intimate imagery of real people."

What's happened to Taylor Swift is nothing new. For yrs, women have been targets of deepfakes w/o their consent. And w/ advancements in AI, creating deepfakes is easier & cheaper. This is an issue both sides of the aisle & even Swifties should be able to come together to solve.

– Yvette D. Clarke (@RepYvetteClarke) January 25, 2024

As Representative Clarke noted, this is not a new issue. But with such a high-profile target, the problem has cut through to the public discourse and may now become a target for legislation.

In the UK, sharing explicit deepfakes was made illegal under the Online Safety Act in October 2023. Pornhub, a major online provider of adult media, has banned deepfakes on its platform since 2018.

Ms. Swift has yet to comment publicly on the incident.

Whether or not this latest high-profile incident leads to legislative changes, it's clear that AI content is already causing issues for lawmakers. Just this week we reported on the first known instance of AI-generated messaging being used to suppress voter turnout, after a fake President Biden called New Hampshire residents and urged them not to vote.

https://www.techspot.com/news/101669-us-lawmakers-weigh-deepfakes-after-explicit-taylor-swift.html