Editor's take: Like almost everyone in tech today, we have spent the past year trying to wrap our heads around "AI": what it is, how it works, and what it means for the industry. We are not sure that we have any good answers, but a few things have become clear. Maybe AGI (artificial general intelligence) will emerge, or we'll see some other major AI breakthrough, but focusing too much on those possibilities risks overlooking the very real – but also very mundane – improvements that transformer networks are already delivering.

Part of the difficulty in writing this piece is that we are stuck in something of a dilemma. On the one hand, we do not want to dismiss the advances of AI. These new systems are important technical achievements. They are not toys suited only for generating pictures of cute kittens dressed in the style of Dutch masters contemplating a plate of fruit, as in the picture shown below (generated by Microsoft Copilot). They should not be easily dismissed.

Editor's Note:

Guest author Jonathan Goldberg is the founder of D2D Advisory, a multi-functional consulting firm. Jonathan has developed growth strategies and alliances for companies in the mobile, networking, gaming, and software industries.

On the other hand, the overwhelming majority of the public commentary about AI is nonsense. No one we have spoken with who is actually doing work in the field today thinks we are on the cusp of Artificial General Intelligence (AGI). Maybe we are just one breakthrough away, but we cannot find anyone who really believes that is likely. Despite this, the general media is filled with stories that conflate generative AI and AGI, with all kinds of wild, baseless opinions on what this means.

Setting aside all the noise, and there is a lot of noise, what we have seen over the past year has been the rise of Transformer-based neural networks. We have been using probabilistic systems in compute for years, and transformers are a better, or at least more economical, method for performing that compute.

This is important because it opens up the problem space that we can tackle with our computers. So far this has largely fallen in the realm of natural language processing and image manipulation. These are important, sometimes even useful, but they apply to what is still a fairly small piece of user experience and applications. Computers that can efficiently process human language will be very useful, but that does not equate to some kind of universal compute breakthrough.

This does not mean that "AI" only provides a small amount of value, but it does mean that much of that value will come in ways that are fairly mundane. We think this value should be broken into two buckets – generative AI experiences and low-level improvements in software.

Take the latter – improvements in software. This sounds boring – it is – but that does not mean it is unimportant. Every major software and Internet company today is bringing transformers into their stacks. For the most part, this will go totally unnoticed by users.

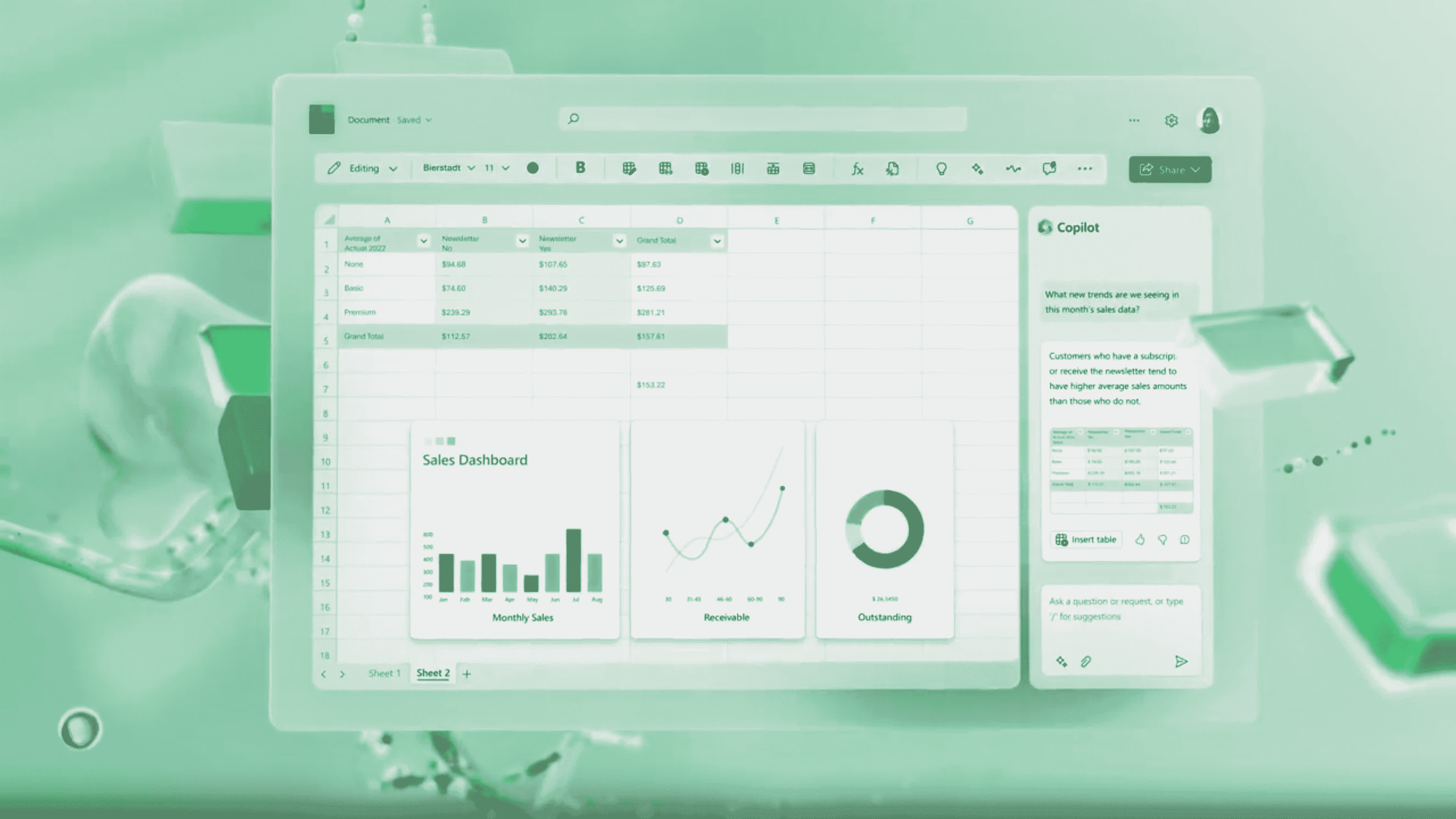

Security companies can make their products a little bit better at detecting threats. CRM systems may get a little better at matching user requests to useful results. Chip companies will improve processor branch prediction by some amount. All of these are tiny gains, 10% or 20% boosts in performance, or reductions in cost. And that is OK; that is still tremendous value when compounded across all the software out there. For the moment, we think the vast bulk of "AI" gains will come in these unremarkable but useful forms.

Generative AI may turn out to be more significant. Maybe. Part of the problem we have today with this field is that much of the tech industry is waiting to see what everyone else will do on this front.

In all their recent public commentary, every major processor company has pointed to Microsoft's upcoming AI update as a major catalyst for adoption of AI semis. We imagine Microsoft may have some really cool features to add to MS Word, PowerPoint and Visual Basic. Sure, go ahead and impress us with AI Excel. But that is a lot of hope to hang on a single company, especially a company like Microsoft that is not well known for delivering great user interfaces.

For their part, Google seems to be a deer in the headlights when it comes to transformers, ironic given that they invented them. When it comes down to it, everyone is really waiting for Apple to show us all how to do it right. So far, they have been noticeably quiet about generative AI. Maybe they are as confused as everyone else, or maybe they just do not see the utility yet.

Apple has had neural processors in their phones for years. They were very quick to add transformer support to M Series CPUs. It does not seem right to say they are falling behind in AI, when maybe they are just lying in wait.

Taking this back to semiconductors, it may be tempting to build big expectations and elaborate scenarios of all the ways in which AI will drive new business. Hence the growing amount of commentary about AI PCs and the market for inference semiconductors. We are not convinced; it is not clear any of those companies will really be able to build massive markets in these areas.

Instead, we tend to see the advent of transformer-based AI systems in much simpler terms. The rise of transformers largely seems to mean a transfer of influence and value-capture to Nvidia at the expense of Intel in the data center. AMD can carve out its share of this transfer, and maybe Intel can stage the comeback-of-all-comebacks, but for the foreseeable future there is no need to complicate things.

That said, maybe we are getting this all wrong. Maybe there are big gains just hovering out there, some major breakthrough from a research lab or deca-unicorn pre-product startup. We will not rule out that possibility. Our point here is just that we are already seeing meaningful gains from transformers and other AI systems. All those "under the fold" improvements in software are already significant, and we should not agonize over waiting for the emergence of something even bigger.

Some would argue that AI is a fad, the next bubble waiting to burst. We are more upbeat than that, but it is worth thinking through what the downside case for AI semis might look like...

We are fairly optimistic about the prospects for AI, albeit in some decidedly mundane places. But we are still in the early days of this transition, with many unknowns. We are aware that there is a strain of thinking among some investors that we are in an "AI bubble". The hard form of that thesis holds that AI is just a passing fad, and that once the bubble deflates the semis market will revert to the status quo of two years ago.

Somewhere between the extremes of "AI is so powerful it will end the human race" and "AI is a useless toy" sits a much milder downside case for semiconductors.

As far as we can gauge right now, the consensus seems to hold that the market for AI semis will be modestly additive to overall demand. Companies will still need to spend billions on CPUs and traditional compute, but now also need AI capabilities, necessitating the purchase of GPUs and accelerators.

At the heart of this case is the market for inference semis. As AI models percolate into widespread usage, the bulk of AI demand will fall in this area, actually making AI useful to users. There are a few variations within this case. Some CPU demand will disappear in the transition to AI, but not a large stake. And investors can debate how much of inference will be run in the cloud versus the edge, and who will pay for that capex. But this is essentially the base case. Good for Nvidia, with lots of inference market left over for everyone else in a growing market.

The downside case really comes in two forms. The first centers on the size of that inference market. As we have mentioned a few times, it is not clear how much demand there is going to be for inference semis. The most glaring problem is at the edge. As much as users today seem taken with generative AI, willing to pay $20+/month for access to OpenAI's latest, the case for having that generative AI done on device is not clear.

People will pay for OpenAI, but will they really pay an extra dollar to run it on their device rather than the cloud? How will they even be able to tell the difference? Admittedly, there are legitimate reasons why enterprises would not want to share their data and models with third parties, which would require on-device inference. On the other hand, this seems like a problem solved by a bunch of lawyers and a tightly worded License Agreement, which is surely much more affordable than building up a bunch of GPU server racks (if you could even find any to buy).

All of which goes to say that companies like AMD, Intel and Qualcomm, which are building big expectations for on-device AI, are going to struggle to charge a premium for their AI-ready processors. On their latest earnings call, Qualcomm's CEO framed the case for AI-ready Snapdragon as providing a positive uplift for mix shift, which is a polite way of saying limited price increases for a small subset of products.

The market for cloud inference should be much better, but even here there are questions as to the size of the market. What if models shrink enough that they can be run fairly well on CPUs? This is technically possible. The preference for GPUs and accelerators is at heart an economic case, but change a few variables and CPU inference is probably good enough for many workloads. This would be catastrophic, or at least very bad, for expectations across all the processor makers.

Probably the scariest scenario is one in which generative AI fades as a consumer product. Useful for programming and authoring catchy spam emails, but little else. This is the true bear case for Nvidia, not some nominal share gains by AMD, but a lack of compelling use cases. This is why we get nervous at the extent to which all the processor makers seem so dependent on Microsoft's upcoming Windows refresh to spark consumer interest in the category.

Ultimately, we think the market for AI semis will continue to grow, driving healthy demand across the industry. Probably not as much as some hope, but far from the worst-case, "AI is a fad" camp.

It will take a few more cycles to find the interesting use cases for AI, and there is no reason to think Microsoft is the only company that can innovate here. All of which places us firmly in the middle of expectations – long-term structural demand will grow, but there will be ups and downs before we get there, and probably no post-apocalyptic zombies to worry about.