What just happened? During its fiscal Q2 2024 earnings call, Micron Technology CEO Sanjay Mehrotra announced that the company has sold out its entire supply of HBM3E high-bandwidth memory for 2024. Mehrotra added that "the overwhelming majority" of the company's 2025 output has already been allocated.

Micron is reaping the benefits of being first out of the gate with HBM3E memory (HBM3 Gen 2 in Micron-speak), with much of its output going into Nvidia's AI accelerators. According to the company, its new memory will be used extensively in Nvidia's new H200 GPU for artificial intelligence and high-performance computing (HPC). Mehrotra expects HBM to bring Micron "several hundred million dollars" in revenue this year alone.

According to analysts, tech companies relied heavily on Nvidia's A100 and H100 chips to train their AI models last year, and the H200 is expected to follow in their footsteps as the most popular GPU for AI applications in 2024. It is expected to be used extensively by tech giants Meta and Microsoft, which have already deployed hundreds of thousands of Nvidia AI accelerators.

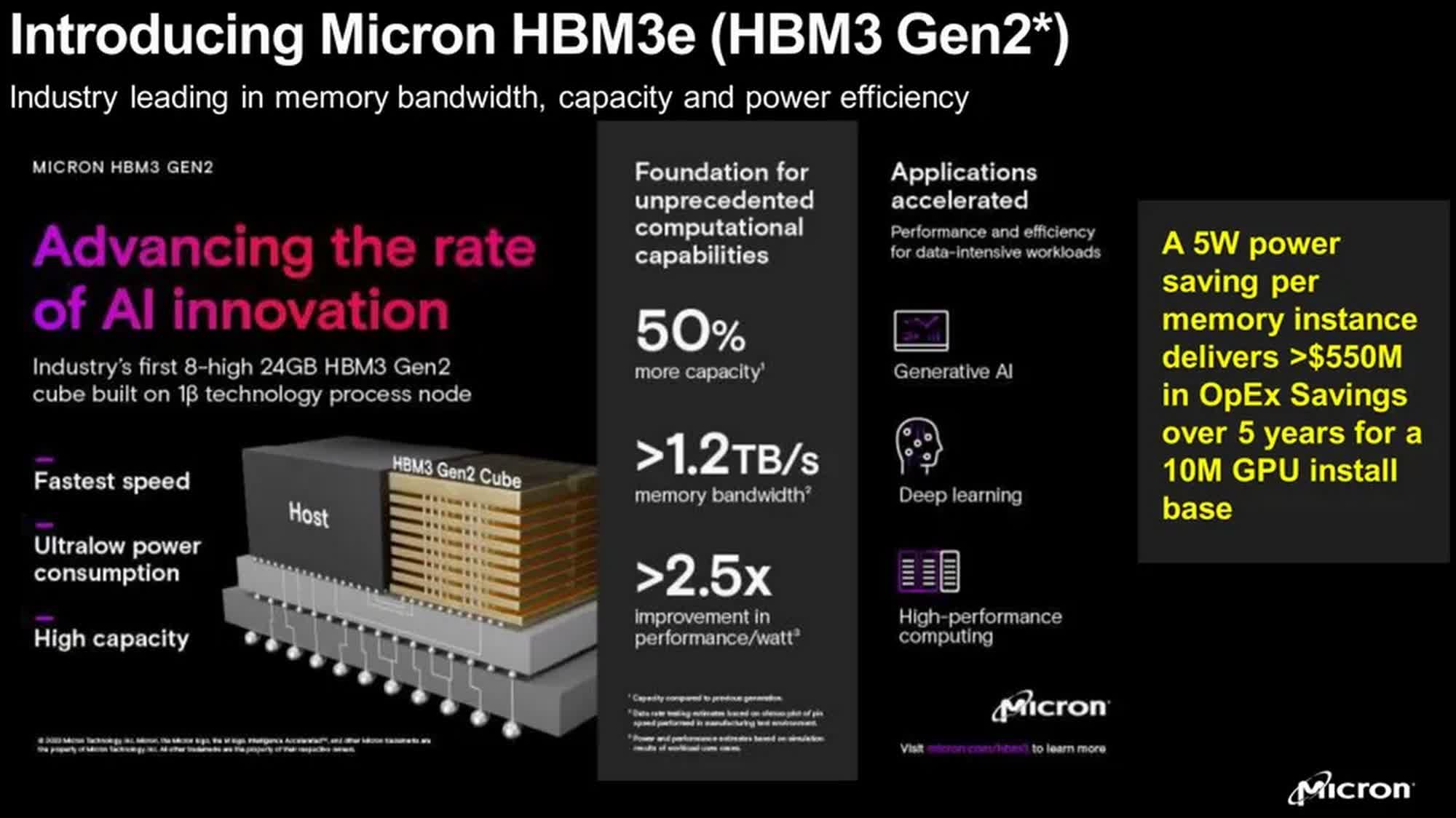

As noted by Tom's Hardware, Micron's first HBM3E products are 24GB 8-Hi stacks with a 1024-bit interface, a 9.2 GT/s data transfer rate and 1.2 TB/s of peak bandwidth. The H200 will use six of these stacks in a single module to offer 141GB of high-bandwidth memory.
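The 1.2 TB/s figure follows directly from the interface width and transfer rate, and the stack count sets the raw capacity. The short sketch below reproduces that arithmetic using only the numbers quoted above. It computes theoretical peak bandwidth (no protocol overhead), and the constant names are illustrative. It deliberately stops at per-stack bandwidth, since the speed at which Nvidia actually runs the memory on the H200 isn't stated here. Note that six 24GB stacks add up to 144GB of raw capacity, slightly more than the 141GB the H200 offers.

```python
# Back-of-the-envelope HBM3E arithmetic using the figures quoted in the article.
# Constant names are illustrative; the values come from the text above.
INTERFACE_WIDTH_BITS = 1024   # data bits per HBM3E stack
DATA_RATE_GTPS = 9.2          # giga-transfers per second on each pin
STACK_CAPACITY_GB = 24        # capacity of one 8-Hi stack
STACKS_PER_H200 = 6           # stacks used by the H200


def peak_bandwidth_gb_s(width_bits: int, rate_gtps: float) -> float:
    """Theoretical peak bandwidth in GB/s: bits per transfer x transfers/s / 8."""
    return width_bits * rate_gtps / 8


per_stack_gb_s = peak_bandwidth_gb_s(INTERFACE_WIDTH_BITS, DATA_RATE_GTPS)
raw_capacity_gb = STACK_CAPACITY_GB * STACKS_PER_H200

print(f"Peak bandwidth per stack: {per_stack_gb_s:.1f} GB/s (~{per_stack_gb_s / 1000:.1f} TB/s)")
print(f"Raw capacity of six stacks: {raw_capacity_gb} GB")
```

Running it prints roughly 1177.6 GB/s (about 1.2 TB/s) per stack and 144 GB of raw capacity.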

Beyond the 8-Hi stacks, Micron has already started sampling 12-Hi HBM3E cubes that are said to increase DRAM capacity per cube by 50 percent, to 36GB. This would allow customers like Nvidia to pack more memory onto each GPU, making those GPUs more capable AI-training and HPC tools. Micron says it expects to ramp up production of its 12-layer HBM3E stacks throughout 2025, meaning the 8-layer design will be its bread and butter this year. Micron also said it is looking to deliver further gains in capacity and performance with HBM4 in the coming years.
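The 50 percent figure is what you get by going from eight stacked DRAM dies to twelve. The minimal sketch below assumes (our assumption, not stated above) that the 12-Hi cube uses the same per-die density as the 8-Hi stack. It derives the per-die capacity from the 24GB 8-Hi figure and scales it to 12 layers. The six-stack GPU total is a purely hypothetical configuration for illustration, not an announced product.

```python
# Capacity arithmetic for 8-Hi vs 12-Hi HBM3E stacks.
# Assumption: both stack heights use DRAM dies of the same density.
STACK_8HI_GB = 24      # capacity of an 8-Hi stack, from the article
LAYERS_8HI = 8
LAYERS_12HI = 12
HYPOTHETICAL_STACKS_PER_GPU = 6  # illustrative only, mirrors the H200's stack count

per_die_gb = STACK_8HI_GB / LAYERS_8HI                   # capacity of one DRAM die
stack_12hi_gb = per_die_gb * LAYERS_12HI                 # capacity of a 12-Hi stack
increase_pct = (stack_12hi_gb / STACK_8HI_GB - 1) * 100  # gain over the 8-Hi stack
hypothetical_gpu_gb = stack_12hi_gb * HYPOTHETICAL_STACKS_PER_GPU

print(f"Per-die capacity: {per_die_gb:.0f} GB")
print(f"12-Hi stack: {stack_12hi_gb:.0f} GB (+{increase_pct:.0f}%)")
print(f"Hypothetical six 12-Hi stacks: {hypothetical_gpu_gb:.0f} GB raw")
```

Under that assumption each die holds 3 GB, a 12-Hi stack reaches 36 GB, and six such stacks would provide 216 GB of raw capacity.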

The company is also working on high-capacity server DIMMs. It recently completed validation of what it calls the industry's first mono-die-based 128GB server DRAM module, which is said to provide 20 percent better energy efficiency and over 15 percent better latency "compared to competitors' 3D TSV-based solutions."