Why it matters: In a recent interview, Intel revealed more about its upcoming AI-powered image reconstruction technology, including what hardware it will support and how it compares to competing efforts from AMD and Nvidia. The interview follows Intel's initial presentation of XeSS last week. The chipmaker compared XeSS most closely to Nvidia's DLSS but outlined some key differences, particularly in how each is implemented in individual games.

Correction (8/25/21): We've updated the original headline and opening paragraph of this article to fix a mistake. We apologize for the error.

When games first started implementing Nvidia's DLSS in 2018, its AI had to be trained for each individual game. When Nvidia later rolled out DLSS 2.0, it was based on a more general neural network used across games. In an interview with Wccftech, Intel principal engineer Karthik Vaidyanathan confirmed the company is already at a similar stage with XeSS, referencing the real-time demo from last week's presentation.

"XeSS has never seen that demo. It was never trained on that demo," Vaidyanathan said. "All the content in that scene that you saw was not used as a part of our training process." He confirmed this should help Intel and developers roll out XeSS faster. A developer still has to integrate XeSS into the game engine itself, though; it can't be enabled at the driver level.

In its initial presentation, Intel revealed that XeSS won't be limited to the specialized hardware of its upcoming Arc GPUs. A second version will run on any GPU that supports the DP4a instruction, and developers will implement it through the same API they use for Intel's hardware. That covers many AMD and Nvidia GPUs, as well as Intel integrated graphics chips, from the last few years.
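For readers unfamiliar with DP4a: it is a GPU instruction that takes two 32-bit words, treats each as four packed 8-bit integers, multiplies them lane by lane, and adds the sum to a 32-bit accumulator. The Python sketch below only emulates that arithmetic for the signed variant; the function names are illustrative, and it says nothing about how XeSS itself uses the instruction.

```python
def to_int8(byte):
    """Interpret an 8-bit value (0..255) as a signed integer in [-128, 127]."""
    return byte - 256 if byte >= 128 else byte


def pack_int8x4(lanes):
    """Pack four signed 8-bit integers into one 32-bit word (lane 0 in the low byte)."""
    word = 0
    for i, lane in enumerate(lanes):
        word |= (lane & 0xFF) << (8 * i)
    return word


def unpack_int8x4(word):
    """Split a 32-bit word back into four signed 8-bit lanes."""
    return [to_int8((word >> (8 * i)) & 0xFF) for i in range(4)]


def dp4a(a_word, b_word, acc):
    """Emulate signed DP4a: 4-lane int8 dot product added to a 32-bit accumulator."""
    a = unpack_int8x4(a_word)
    b = unpack_int8x4(b_word)
    total = acc + sum(x * y for x, y in zip(a, b))
    # Wrap the result to a signed 32-bit integer, as hardware would.
    total &= 0xFFFFFFFF
    return total - (1 << 32) if total >= (1 << 31) else total


a = pack_int8x4([1, 2, 3, 4])
b = pack_int8x4([5, 6, 7, 8])
print(dp4a(a, b, 10))  # 1*5 + 2*6 + 3*7 + 4*8 + 10
```

Neural networks quantized to 8-bit integers spend most of their time on exactly this kind of dot product, which is why a single instruction for it matters on GPUs that lack dedicated matrix hardware.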

In the interview, Intel further confirmed that the DP4a version should work on any GPU that supports Shader Model 6.4, though it recommends Shader Model 6.6. However, Intel isn't currently planning a fallback to FP16 or FP32, and XeSS running on an Nvidia RTX card won't make use of Nvidia's Tensor cores.

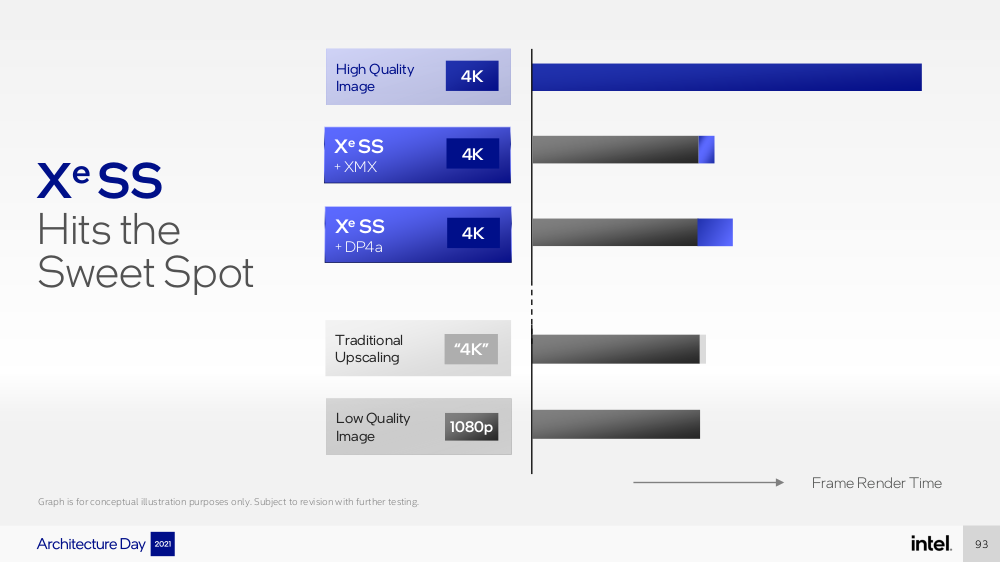

Intel also confirmed that, like DLSS and AMD's FSR, XeSS will have different quality modes and will work at different resolutions. The demo in the presentation only showed it upscaling a 1080p image to 4K – equivalent to what Nvidia and AMD call "performance mode."
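To put the demo's numbers in perspective, here is a quick back-of-the-envelope calculation of the upscaling factor, assuming the standard 1920×1080 and 3840×2160 frame sizes for 1080p and 4K. The helper name is purely illustrative.

```python
def scale_factors(src, dst):
    """Return (width scale, height scale, pixel-count scale) between two resolutions."""
    src_w, src_h = src
    dst_w, dst_h = dst
    return dst_w / src_w, dst_h / src_h, (dst_w * dst_h) / (src_w * src_h)


# 1080p rendered internally, 4K output (assumed standard frame sizes).
print(scale_factors((1920, 1080), (3840, 2160)))
```

In other words, the upscaler renders one quarter of the final pixels and reconstructs the rest.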

According to Vaidyanathan, Intel trains the XeSS AI on what he estimates is the equivalent of 32K resolution, compared with 16K for DLSS, though he bases that number on samples per pixel. "We train with reference images that have 64 samples per pixel," he said. "I wouldn't call it 32k because what we are doing is effectively all those samples are contributing to the same pixel... But yeah, effectively 32k pixels is what we use to train."
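The 32K figure can be roughly reproduced with some simple arithmetic. The sketch below rests on two assumptions not stated in the interview excerpt: that the reference images target a 4K (3840×2160) frame, and that the 64 samples per pixel are treated as an 8×8 grid. The function name is hypothetical.

```python
import math


def effective_resolution(width, height, samples_per_pixel):
    """Frame size if every sample were its own pixel, assuming a square sample grid."""
    side = math.isqrt(samples_per_pixel)
    if side * side != samples_per_pixel:
        raise ValueError("samples_per_pixel must be a perfect square")
    return width * side, height * side


# Assumed 4K target with 64 samples per pixel (8 x 8 per pixel).
print(effective_resolution(3840, 2160, 64))
```

Under those assumptions, the result is 30,720 pixels wide, in the ballpark of the "32K" shorthand. As Vaidyanathan notes, the samples all contribute to the same output pixel, so this is an equivalence rather than a literal image size.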

XeSS will not use Microsoft's DirectML. "...for our implementation we need to really push the boundaries of the implementation and the optimization and we need a lot of custom capabilities, custom layers, custom fusion, things like that to extract that level of performance," Vaidyanathan said. "And in its current form, DirectML doesn't meet those requirements."

Intel already confirmed in its earlier presentation that XeSS will eventually be open source.