Bottom line: Apple is likely correct when it claims the M1 Ultra is the most powerful consumer-grade desktop chip, but that's partly because it's hard to compare an SoC with 114 billion transistors against anything currently available in the x86 space. Early benchmarks suggest performance-per-watt is stellar, but GPU performance falls short of dedicated GPUs like Nvidia's RTX 3090.

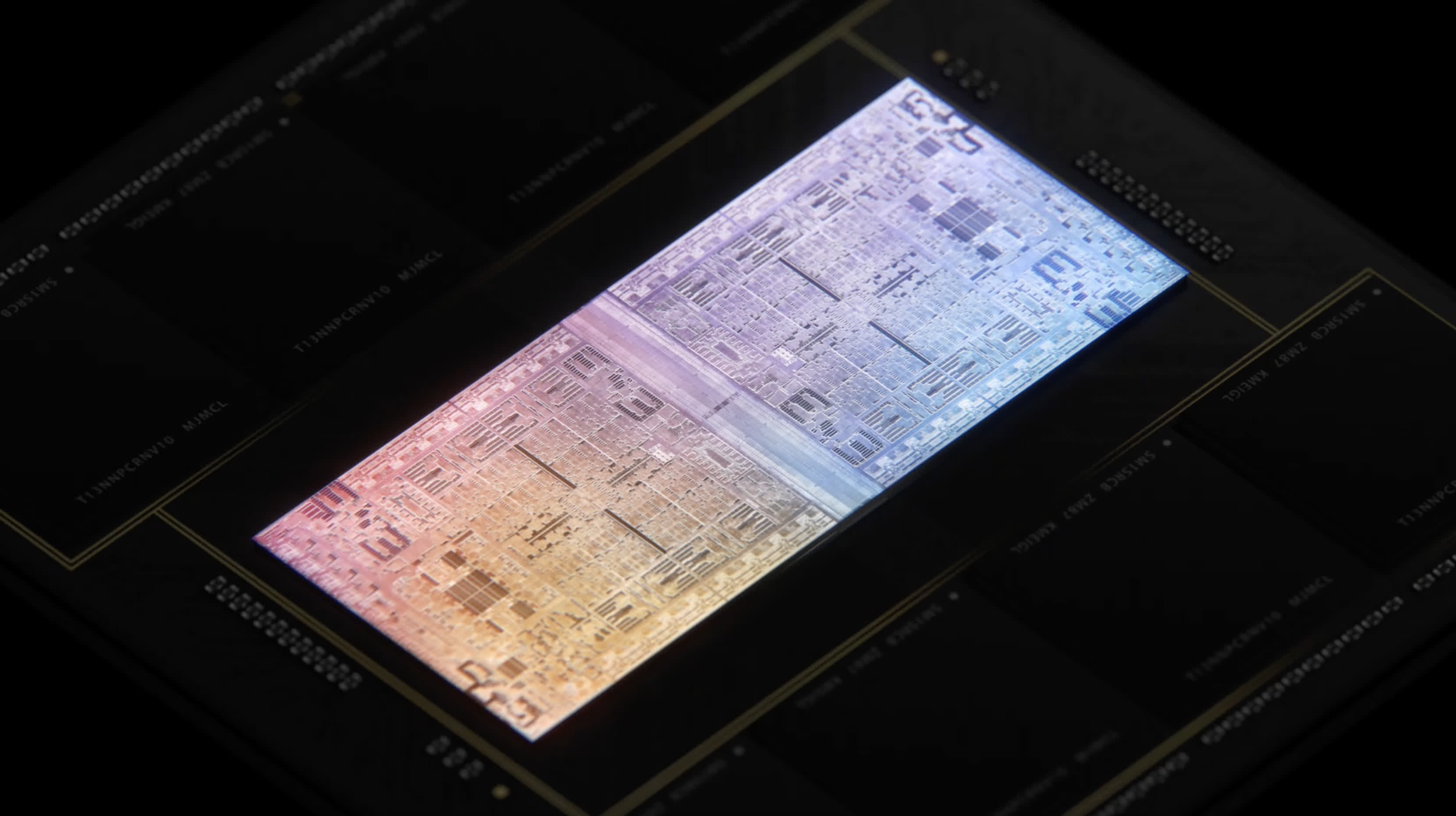

When Apple introduced its M1 Ultra chipset, it made a big deal about its performance and energy efficiency, extolling the benefits of the chiplet design and the UltraFusion packaging and interconnect technology that made it possible.

To be fair, a lot of engineering clearly went into both the hardware and software aspects of the new chipset, as Apple essentially fused together two M1 Max chips and paired them with a bunch of high-bandwidth unified memory. It also made the two chips recognizable in software as a single chip, which will no doubt simplify app development.

However, as with many of Apple's performance claims (and, for that matter, those of any company competing in the hardware space), the launch figures don't tell the full story. Companies like to cherry-pick benchmark results to make their products look better than the competition, which is why independent reviews are an important resource to consult before deciding what works for you.

Apple chose to compare the M1 Ultra's performance against Intel's Core i9-12900K and Nvidia's RTX 3090, two of the fastest and most power-hungry consumer parts in the desktop space at the moment. During the launch event, the company claimed its new chipset could slightly beat the RTX 3090 while consuming far less power, but it didn't say which benchmarks were used.

Now that the first independent reviews are out, things are starting to come into focus. The Verge ran a series of benchmarks including NPBench Python and Geekbench, as well as some Puget and gaming tests, and the results paint a mixed picture.

The M1 Ultra's CPU definitely outperforms the M1 Max's, as well as the 28-core Intel Xeon W found in the specced-out Mac Pro, but its GPU doesn't quite reach RTX 3090 levels of compute performance.

The Verge used a PC equipped with an Intel Core i9-10900, 64 gigabytes of RAM, and an Nvidia RTX 3090 GPU and obtained a Geekbench 5 Compute score of over 215,000 points. By comparison, the M1 Ultra in the Mac Studio was only able to muster a little over 83,000 points, or 102,156 points when using Metal.
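For a rough sense of scale, the gap can be expressed as a simple ratio. The short Python sketch below uses the figures quoted above, treating "over 215,000" and "a little over 83,000" as exactly 215,000 and 83,000 (an assumption, since those are rounded values), so the results are approximations. It also assumes the scores are directly comparable, even though the article doesn't say which compute API produced the RTX 3090 result.

```python
# Geekbench 5 Compute scores as quoted in the article.
# Assumption: "over 215,000" and "a little over 83,000" are treated as exact,
# so the ratios printed below are approximate.
RTX_3090_SCORE = 215_000
M1_ULTRA_SCORE = 83_000
M1_ULTRA_METAL_SCORE = 102_156


def ratio(a: float, b: float) -> float:
    """Return how many times larger score a is than score b."""
    return a / b


for label, score in [
    ("M1 Ultra", M1_ULTRA_SCORE),
    ("M1 Ultra (Metal)", M1_ULTRA_METAL_SCORE),
]:
    # Print the RTX 3090's lead as a multiplier, rounded to two decimals.
    print(f"RTX 3090 vs {label}: {ratio(RTX_3090_SCORE, score):.2f}x")
```

With these inputs, the RTX 3090 comes out roughly 2.6 times ahead of the non-Metal result and about 2.1 times ahead of the Metal result.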

That's still an impressive result. The Verge also looked at gaming performance in Shadow of the Tomb Raider. Apple is notorious for not optimizing its hardware for gaming workloads, and the M1 Ultra is no exception: while it achieved a respectable 108 frames per second at 1080p and 96 frames per second at 1440p, Nvidia's dedicated GPU held a lead of 18 to 31 percent.

Image credit: The Verge

A lot of that difference could be down to the 100-watt power envelope of the M1 Ultra GPU and the way it shares memory bandwidth with the CPU. For reference, the RTX 3090 alone has a TGP of 350 watts, and the Core i9-12900K can add up to 241 watts on top of that. We saw a similar story with other Apple Silicon chipsets such as the M1 Pro, which is great in terms of performance-per-watt but struggles to keep up with more power-hungry hardware from the x86 space.

The key takeaway from the M1 Ultra benchmarks we've seen so far is that Apple created a small desktop computer that approaches the level of performance found in the significantly larger, more expensive Mac Pro. The $6,199 specced-out Mac Studio may seem like an expensive bit of kit, but for professionals who depend on macOS and apps optimized for Apple Silicon it might look like a bargain next to a slightly more powerful, $14,000 Mac Pro.

https://www.techspot.com/news/93829-apple-m1-ultra-performance-beast-but-definitely-not.html