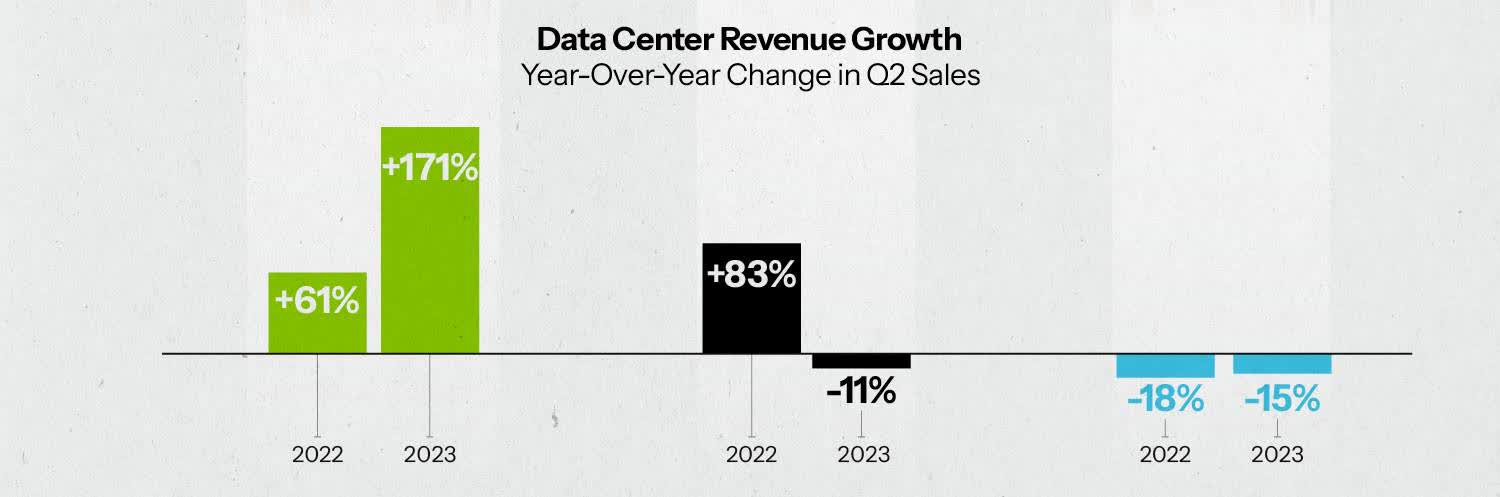

Why it matters: Data center and AI-related hardware is becoming nothing short of a cash cow for chipmakers able to supply the needed resources. Nvidia maintains a strong hold on the market, while companies such as AMD, Intel, Google, and Amazon continue ramping up operations in hopes of cashing in. According to recent reports, OpenAI and Microsoft may be the latest companies looking to enter the growing AI chip race and reduce their reliance on third-party AI computing resources.

A new report from Reuters outlines OpenAI's plans to develop its own AI chips and secure a share of the lucrative AI chip market. Although there is no indication that the company has formally committed to building its own chips, sources close to OpenAI say it has begun identifying potential acquisition targets to help turn those plans into reality. According to the report, OpenAI is weighing several approaches, ranging from building its own AI chips to diversifying its supplier network beyond Nvidia.

OpenAI isn't the only company looking to reduce its heavy reliance on third-party resources. According to a separate report from The Information, OpenAI backer Microsoft could unveil its own AI chip, codenamed Athena, as early as next month. The announcement could be one of the highlights of this year's Microsoft Ignite conference.

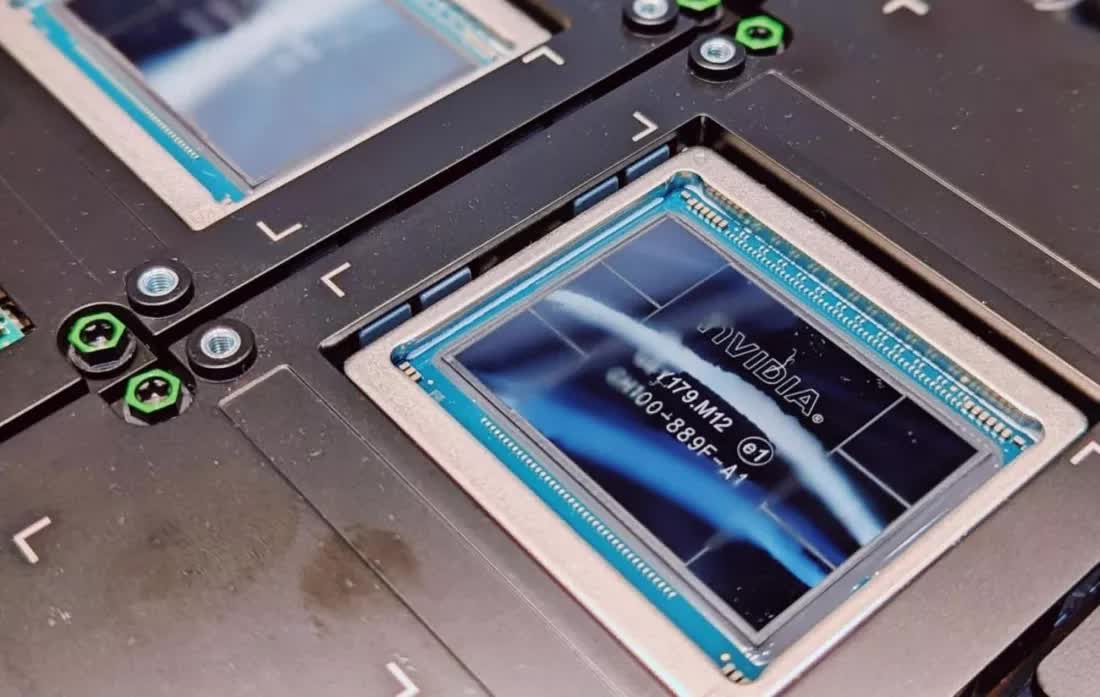

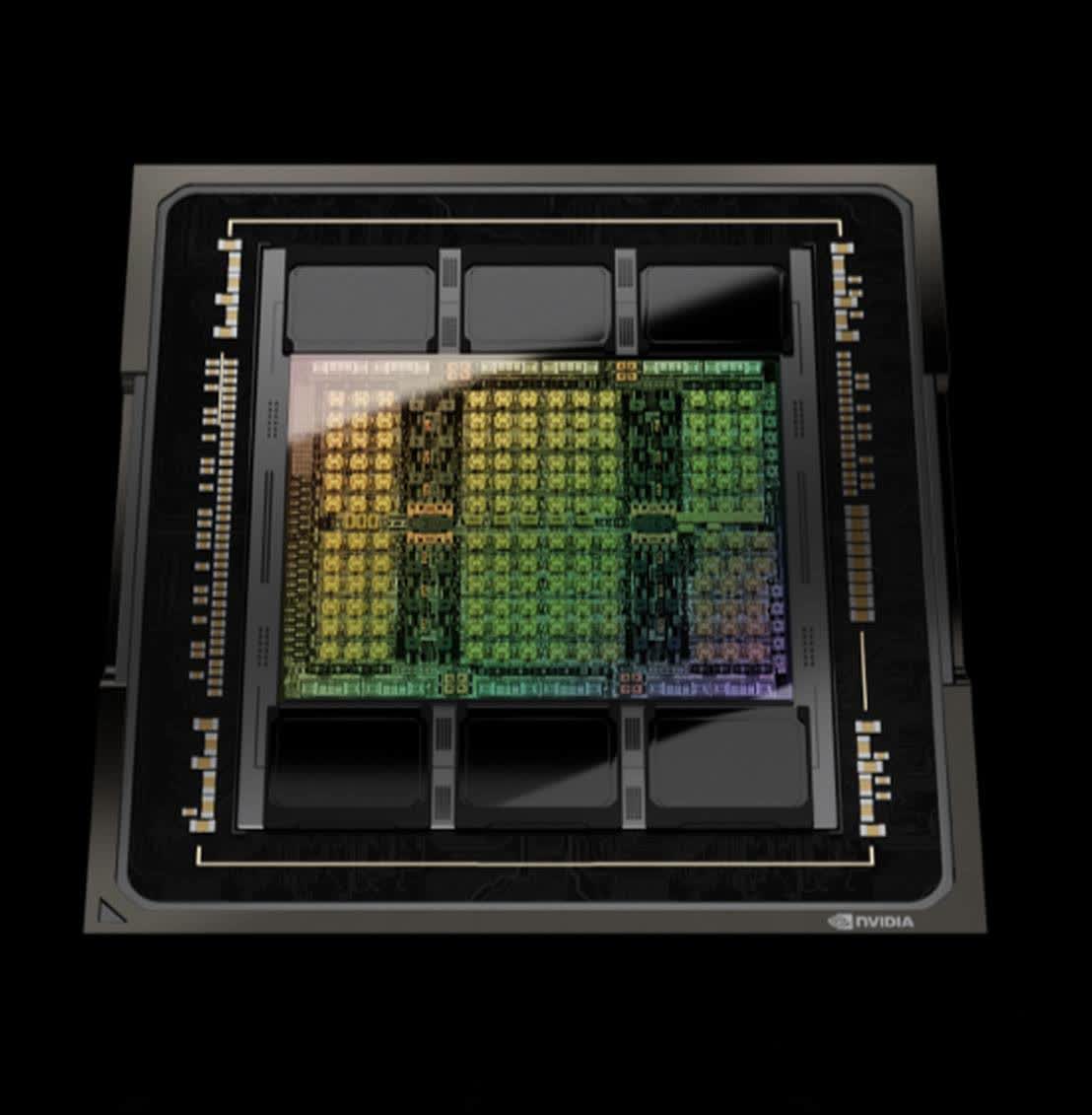

Nvidia's early investment in AI and its existing GPU capabilities have positioned it as the clear leader in today's AI boom. According to visualcapitalist.com, Team Green already holds more than 70% of the $150 billion AI market, a market that continues to grow. And with AMD and Intel lagging behind in the AI and data center segments, there is still plenty of room for competitors to break in with new, cost-effective solutions.

Nvidia's AI success rests on more than its established GPUs and its newest Grace Hopper chips. The company offers AI resources ranging from hardware to software and enterprise tools for data analytics, security, and AI modeling.

There's certainly room for new offerings and innovation in the rapidly expanding AI market; however, any real challenge to Nvidia's AI chip dominance is likely still several years away. Even then, Nvidia's well-integrated combination of hardware and software will be a tough opponent to contend with.

Image credit: Jenna Ross and Sam Parker

https://www.techspot.com/news/100419-microsoft-openai-making-moves-enter-ai-chip-market.html