The big picture: A key selling point of solid-state drives is that they are less failure-prone than HDDs. Data from recent years began to cast doubt on that assumption, but at least one new long-term analysis suggests it may hold true as time marches on.

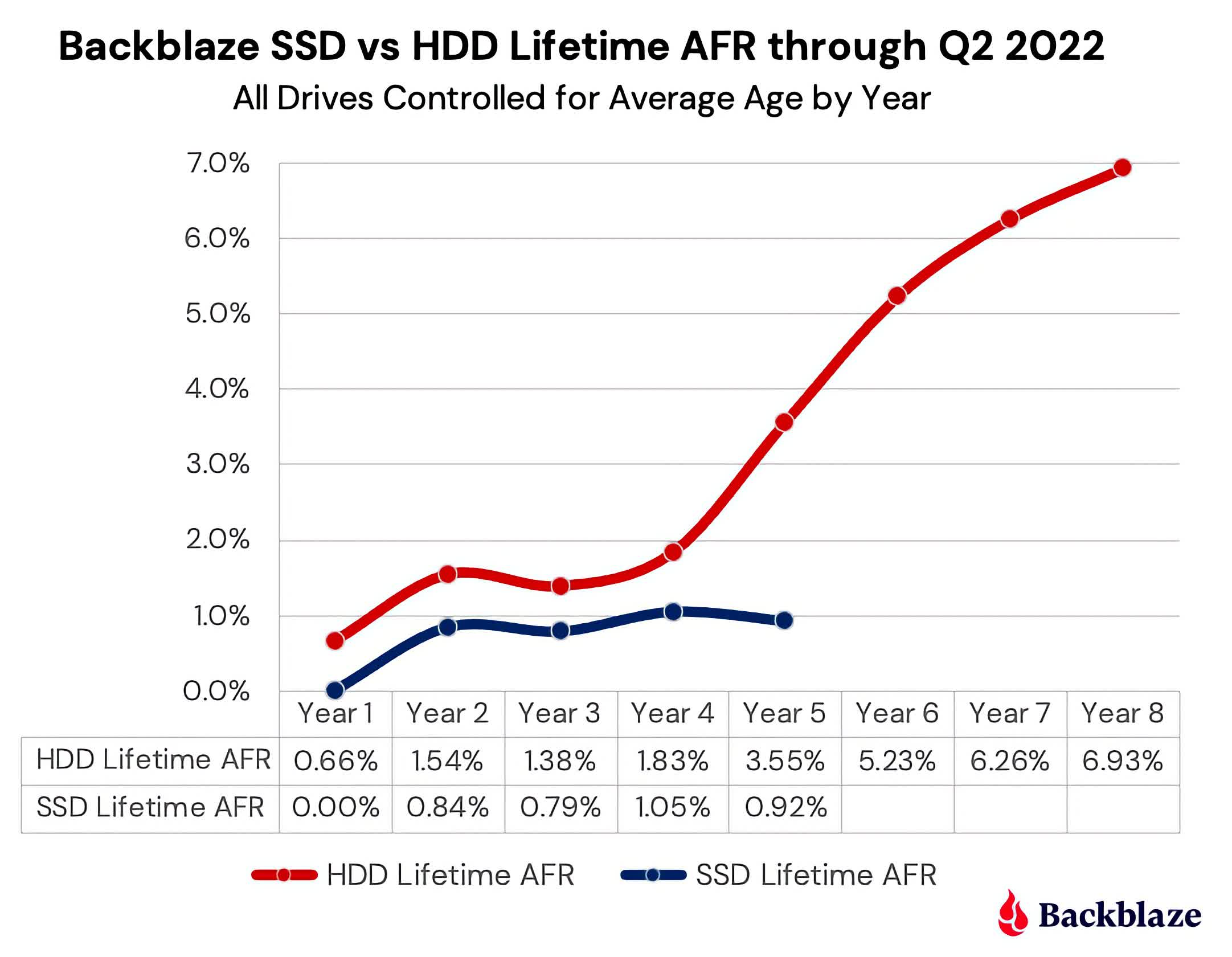

Backblaze's 2022 mid-year review of storage failure rates shows that SSDs may indeed be more reliable than HDDs over time. The new report paints a better picture for SSDs than the company's analysis from last year.

As a cloud storage and backup provider, Backblaze relies heavily on both SSDs and HDDs, and it has long made a habit of recording and publishing the drives' failure rates, which are always interesting to follow. These periodic findings put solid-state technology's claims of superior durability over traditional hard drives to the test.

Last year's report showed similar age-adjusted failure rates for both types of storage. Backblaze's HDDs and SSDs both saw failure rates increase by around one percent after a year of operation before leveling off in the subsequent two years. That is where the SSD data ended, because Backblaze did not incorporate SSDs into its analyses until 2018, while its HDD failure rate data extends back to 2014.

The last data point for SSDs was interesting because year five is where HDD failure rates begin to climb steeply, reaching over six percent by year seven. Another year of findings would reveal whether SSDs would see the same increase in failures.

So far, data from Q1 and Q2 2022 shows SSDs holding strong during their fifth year of service, opening a significant gap with HDDs. More data in the coming years will give a clearer picture of any reliability advantage SSDs hold over hard disks, but this new report suggests solid-state tech is more durable in the long run.

SSDs may not be uniformly better than HDDs, however. Last month, the University of Wisconsin-Madison and the University of British Columbia published a report claiming that SSDs could produce twice the CO2 emissions of HDDs.

The findings have almost nothing to do with how consumers use their storage, as SSDs likely use less energy than HDDs in operation. Rather, manufacturing SSDs is more energy-intensive than manufacturing hard drives. To address the problem, the study suggested extending SSD lifespans so that manufacturers would need to build fewer drives. HDDs also still hold their price-per-GB advantage over SSDs.

https://www.techspot.com/news/95966-backblaze-data-shows-ssd-have-lower-failure-rates.html