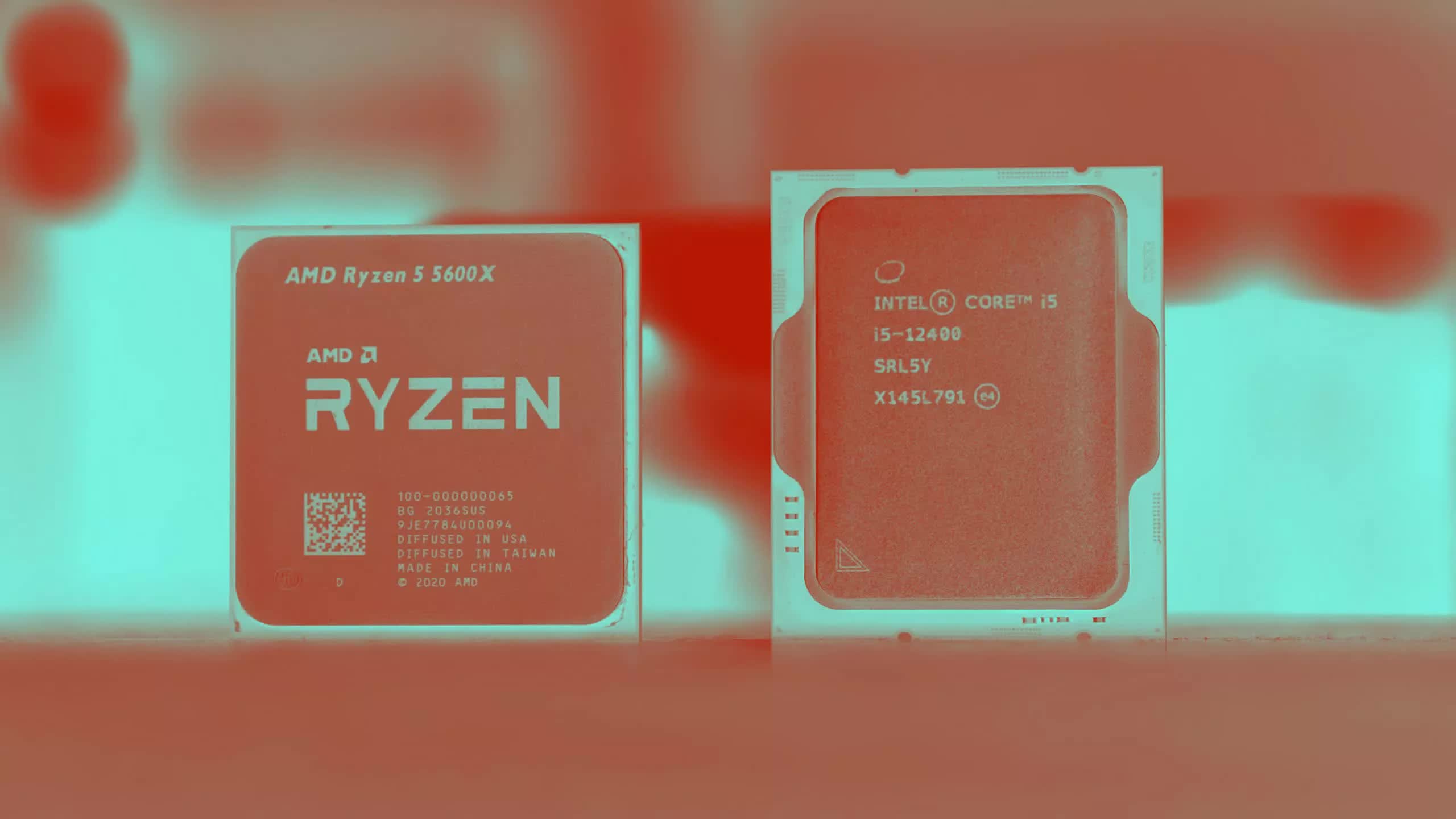

In this article, we'll take a look at how L3 cache capacity affects gaming performance. More specifically, we'll be examining AMD's Zen 3-based Ryzen processors in a "for science" type of feature.


CPU Cache vs. Cores: AMD Ryzen Edition

- Thread starter Steve


WhiteLeaff

Posts: 470 +856

It would be more useful if the clock speeds were equalized.

I don't understand why you limited yourself to presenting data we already know.


gamerk2

Posts: 971 +985

As I noted years ago: there's a point where adding more cores is no longer going to improve performance, simply because it's impossible to make the code more parallel. And it's not at all shocking that the majority of titles start hitting that wall in the 6-8 core range. After that, you're chasing incremental gains (and can even see minimal losses due to cache coherency and scheduling concerns).

So yeah, these new 12x2 core CPUs that come out at the high end are great... but you really don't need them when a basic 8-core with a ton of cache will run circles around them in the majority of tasks.
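The diminishing returns described in this post are what Amdahl's law predicts. The Python sketch below is only an illustration under stated assumptions: the 80% parallel fraction is a hypothetical figure, not measured from any game, and the model ignores the cache-coherency and scheduling overheads the post mentions, which would make real-world scaling worse.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup over one core when only `parallel_fraction` of the work
    can be spread across cores (Amdahl's law; no overheads modeled)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if cores < 1:
        raise ValueError("cores must be >= 1")
    serial = 1.0 - parallel_fraction  # share of work stuck on one thread
    return 1.0 / (serial + parallel_fraction / cores)


if __name__ == "__main__":
    # Hypothetical assumption: 80% of a game's per-frame CPU work parallelizes.
    p = 0.8
    for n in (1, 6, 8, 12, 16, 24):
        print(f"{n:2d} cores: {amdahl_speedup(p, n):.2f}x")
    # Upper bound reached only with infinitely many cores.
    print(f"ceiling: {1 / (1 - p):.2f}x")
```

With the assumed 80% parallel fraction, going from 8 to 16 cores raises the ideal speedup from about 3.33x to 4.00x, roughly 20% more, and no core count can exceed the 5x ceiling set by the serial 20%.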


WhiteLeaff

Posts: 470 +856

> As I noted years ago: there's a point where adding more cores is no longer going to improve performance, simply because it's impossible to make the code more parallel. And it's not at all shocking that the majority of titles start hitting that wall in the 6-8 core range. After that, you're chasing incremental gains (and can even see minimal losses due to cache coherency and scheduling concerns).
>
> So yeah, these new 12x2 core CPUs that come out at the high end are great... but you really don't need them when a basic 8-core with a ton of cache will run circles around them in the majority of tasks.

More cores help to compile shaders quickly, though.

Very interesting! I was thinking of going for a 5800X3D from my 5600X but given this and the 5600X3D review, I will go for the 5600X3D... next time I happen to drive by a Micro Center.

Thank you Steve


bluetooth fairy

Posts: 235 +166

> Very interesting! I was thinking of going for a 5800X3D from my 5600X but given this and the 5600X3D review, I will go for the 5600X3D... next time I happen to drive by a Micro Center.
>
> Thank you Steve

Excuse me, but what GPU are you using with the 5600X, and what games are you usually playing?

bluetooth fairy

Posts: 235 +166

As a side note: if the tested configurations are 6c/12t and 8c/16t, it would be better to show that on the charts, because 6c/6t != 6c/12t in gaming, especially considering AMD's SMT implementation. That's probably why 12 threads are still enough for gaming.

--------

PS: As far as I can recall, 6c/12t was comparable to 8c/8t. But that's a story for another article, I guess.



MarcusNumb

Posts: 155 +300

Another sign that AMD is doing a good job with its CPUs; hopefully one day it can do as well with its GPUs.

> Excuse me, but what GPU are you using with the 5600X, and what games are you usually playing?

I am using a 4070 Ti. I play mostly action games, but lately BG3. Cyberpunk... it's kind of a mess with this setup. I am hoping the new CPU will help with stuttering/1% lows. But knowing me, I'll get obsessed with some 2D game and then it won't even matter, haha. Heat Signature will be the death of me.

So let's downgrade our monitors to 1080p to put our 4090s to good use on our EOL systems.

I'm just kidding. I know why it's done this way. At least the 720p benchmarks are gone with the 4090. Still, these tests seem to produce interesting results until they meet reality, where Intel 10th-gen Comet Lake and AMD Zen 3 rigs are rarely paired with the highest-end GPU.

And even if they are, the natural habitat for a high-cost RTX 4090 is a high-cost 4K/5K monitor and maybe, just maybe, a high-refresh WQHD one (but then you most likely overpaid for your GPU).

Anyway, if you benchmark realistic setups, those differences narrow and become boring very fast. So for me this is not an interesting test. Your mileage may vary.


ScottSoapbox

Posts: 1,048 +1,824

> So let's downgrade our monitors to 1080p to put our 4090s to good use on our EOL systems.
>
> I'm just kidding. I know why it's done this way. At least the 720p benchmarks are gone with the 4090. Still, these tests seem to produce interesting results until they meet reality, where Intel 10th-gen Comet Lake and AMD Zen 3 rigs are rarely paired with the highest-end GPU.
>
> And even if they are, the natural habitat for a high-cost RTX 4090 is a high-cost 4K/5K monitor and maybe, just maybe, a high-refresh WQHD one (but then you most likely overpaid for your GPU).
>
> Anyway, if you benchmark realistic setups, those differences narrow and become boring very fast. So for me this is not an interesting test. Your mileage may vary.

Nice story bro.

I found this test very interesting.

Knot Schure

Posts: 431 +206

No one needs more than 60fps, which all these CPUs easily hit. If you think you need more than that, you've been suckered into a never-ending numbers game. The article isn't helping with that, suggesting that cheaper Ryzens are useless because you can't get 150fps in Game X on the 4090 you'll never own or use at 1080p.

That said, it's good to have some innovation in the CPU space. The gains from a large L3 are impressive. It's just pointless unless you've been convinced you need triple-digit frame rates.


> Nice story bro.
>
> I found this test very interesting.

Oh, that's great, bro, thanks for sharing.

bluetooth fairy

Posts: 235 +166

> I am using a 4070 Ti. I play mostly action games, but lately BG3. Cyberpunk... it's kind of a mess with this setup. I am hoping the new CPU will help with stuttering/1% lows. But knowing me, I'll get obsessed with some 2D game and then it won't even matter, haha. Heat Signature will be the death of me.

I played the CP2077 add-on on a 4070 mobile with 6 Alder Lake cores. Performance was acceptable at 1080p, but without path tracing.

But BG3 seems to be another story, especially in Act 3. I'm not sure extra cache can make it much better, though it's very scene-dependent. Steve's data doesn't show BG3 running badly on the 5600X; 79 fps 1% lows is just fine.

But the 5600X can bottleneck the 4070 Ti, and there's certainly double-digit percentage performance left on the table.


Mr Majestyk

Posts: 2,058 +1,903

> As I noted years ago: there's a point where adding more cores is no longer going to improve performance, simply because it's impossible to make the code more parallel. And it's not at all shocking that the majority of titles start hitting that wall in the 6-8 core range. After that, you're chasing incremental gains (and can even see minimal losses due to cache coherency and scheduling concerns).
>
> So yeah, these new 12x2 core CPUs that come out at the high end are great... but you really don't need them when a basic 8-core with a ton of cache will run circles around them in the majority of tasks.

So you only game. Are you also claiming the 7950X/X3D won't beat a 7800X/X3D in productivity? Not all of us just game.

> No one needs more than 60fps

You are missing out on much smoother gameplay at 60Hz. My monitor is used for both work and gaming, and sometimes when I switch its input from my work computer to my personal one, it forgets that it can display at 144Hz, and I end up with a 60Hz monitor. It's easy to fix, but I only ever notice it's at 60Hz when I boot up a game and see lag, tearing, or blur where I'd otherwise never expect to see it.

gamerk2

Posts: 971 +985

> So you only game. Are you also claiming the 7950X/X3D won't beat a 7800X/X3D in productivity? Not all of us just game.

Outside of tasks that scale basically forever, the difference due to core count is still minimal.

Nobina

Posts: 4,505 +5,507

> No one needs more than 60fps, which all these CPUs easily hit. If you think you need more than that, you've been suckered into a never-ending numbers game. The article isn't helping with that, suggesting that cheaper Ryzens are useless because you can't get 150fps in Game X on the 4090 you'll never own or use at 1080p.
>
> That said, it's good to have some innovation in the CPU space. The gains from a large L3 are impressive. It's just pointless unless you've been convinced you need triple-digit frame rates.

60 FPS looks like 30 FPS after you've been playing at a high refresh rate for a short time. I don't need more than 60 in most cases, but in competitive FPS games it's night and day.

> PS: As far as I can recall, 6c/12t was comparable to 8c/8t. But that's a story for another article, I guess.

Agreed, looking forward to a new article, something like "CPU Threads vs. L3 Cache": if Intel drops HT in its new Arrow Lake cores, the effect on gaming seems to be very... nontrivial. A deeper investigation is needed to cover this complicated topic: how many threads does gaming require, 12 or maybe 8, with HT (SMT) or without? And there are also P (LP) and E cores now, which complicates things even more... Are E-cores completely useless for gaming? (They seem to only help lower CPU power/thermals when the P-cores' performance isn't fully utilized.)

> Agreed, looking forward to a new article, something like "CPU Threads vs. L3 Cache": if Intel drops HT in its new Arrow Lake cores, the effect on gaming seems to be very... nontrivial. A deeper investigation is needed to cover this complicated topic: how many threads does gaming require, 12 or maybe 8, with HT (SMT) or without? And there are also P (LP) and E cores now, which complicates things even more... Are E-cores completely useless for gaming? (They seem to only help lower CPU power/thermals when the P-cores' performance isn't fully utilized*.)

There will probably be a few games that actually get some performance from E-cores. The majority of games probably ignore them unless Intel gives E-cores more cache and/or better memory performance. That hardly makes any sense.

However, even considering a CPU with E- and P-cores is simply a waste. This kind of anomaly only exists because Intel had to lower power consumption at any cost to be competitive with AMD. Too bad a hybrid CPU is not good for anything useful, not even multithreading. Those who disagree, feel free to show an Intel server CPU with a hybrid architecture. If servers are not for multithreading, then what is? Well, at least the hybrid crap looks good in benchmarks if you ignore power consumption.

* No, it goes like this: if Intel Thread Director considers a process a background process, its threads go to the E-cores. That makes absolutely no sense, of course.


fb020997

Posts: 37 +12

I hope AMD will be able to deliver 3D V-Cache models WITHOUT gimping clock speeds next time.

It's a difficult thing to do, because the cache sits directly on top of the CCD, so the heat generated by the cores has to travel through the 3D V-Cache. And the thick IHS, kept for cooler compatibility, makes it even harder.

Endymio

Posts: 2,673 +2,702

> You are missing out on much smoother gameplay... I only ever notice it's at 60Hz when I boot up a game and see lag, tearing, or blur where I'd otherwise never expect to see it.

I'm not a gamer, but "tearing and blur" aren't due to a low frame rate, but rather to a mismatch between the update rates of your graphics card and monitor.

I won't speak for the population at large, but when I moved from 60 to 75Hz I saw a noticeable improvement in smoothness... but going from 75 to 144Hz was essentially imperceptible.

> I'm not a gamer, but "tearing and blur" aren't due to a low frame rate, but rather to a mismatch between the update rates of your graphics card and monitor.
>
> I won't speak for the population at large, but when I moved from 60 to 75Hz I saw a noticeable improvement in smoothness... but going from 75 to 144Hz was essentially imperceptible.

I suspect the bug that causes it to run at 60Hz also disables the monitor's adaptive sync capabilities. There's stuttering and other things as well.

When you moved from 75 to 144Hz, were you getting significantly more than 75fps? If not, you probably wouldn't notice the difference. Also, you mentioned you are not a gamer, so perhaps your applications simply wouldn't benefit from the high refresh rate. Some games don't either, such as Civ.
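The arithmetic behind this question can be sketched in Python. This is a simplified model and an assumption on our part: it treats the number of distinct frames shown per second as the lower of the game's frame rate and the panel's refresh rate, and it ignores adaptive sync, frame pacing, and input latency.

```python
def frame_interval_ms(rate_hz: float) -> float:
    """Time between successive refreshes (or frames) in milliseconds."""
    if rate_hz <= 0:
        raise ValueError("rate_hz must be positive")
    return 1000.0 / rate_hz


def new_frames_per_second(game_fps: float, refresh_hz: float) -> float:
    """Simplified model (assumption): the panel can't show more distinct frames
    per second than its refresh rate, nor more than the game renders."""
    return min(game_fps, refresh_hz)


if __name__ == "__main__":
    for hz in (60, 75, 144):
        print(f"{hz:3d} Hz -> {frame_interval_ms(hz):5.2f} ms per refresh")
    # Hypothetical scenario: the game only renders about 75 fps.
    for hz in (75, 144):
        shown = new_frames_per_second(75, hz)
        print(f"75 fps on a {hz} Hz panel -> {shown:.0f} new frames/s")
```

Under this model, going from 60 to 75Hz shortens the refresh interval by about 3.3 ms (16.67 to 13.33 ms), and going from 75 to 144Hz by about 6.4 ms (13.33 to 6.94 ms), but the second step only shows new frames more often if the game actually renders above 75fps.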


TechSpot is dedicated to computer enthusiasts and power users.
