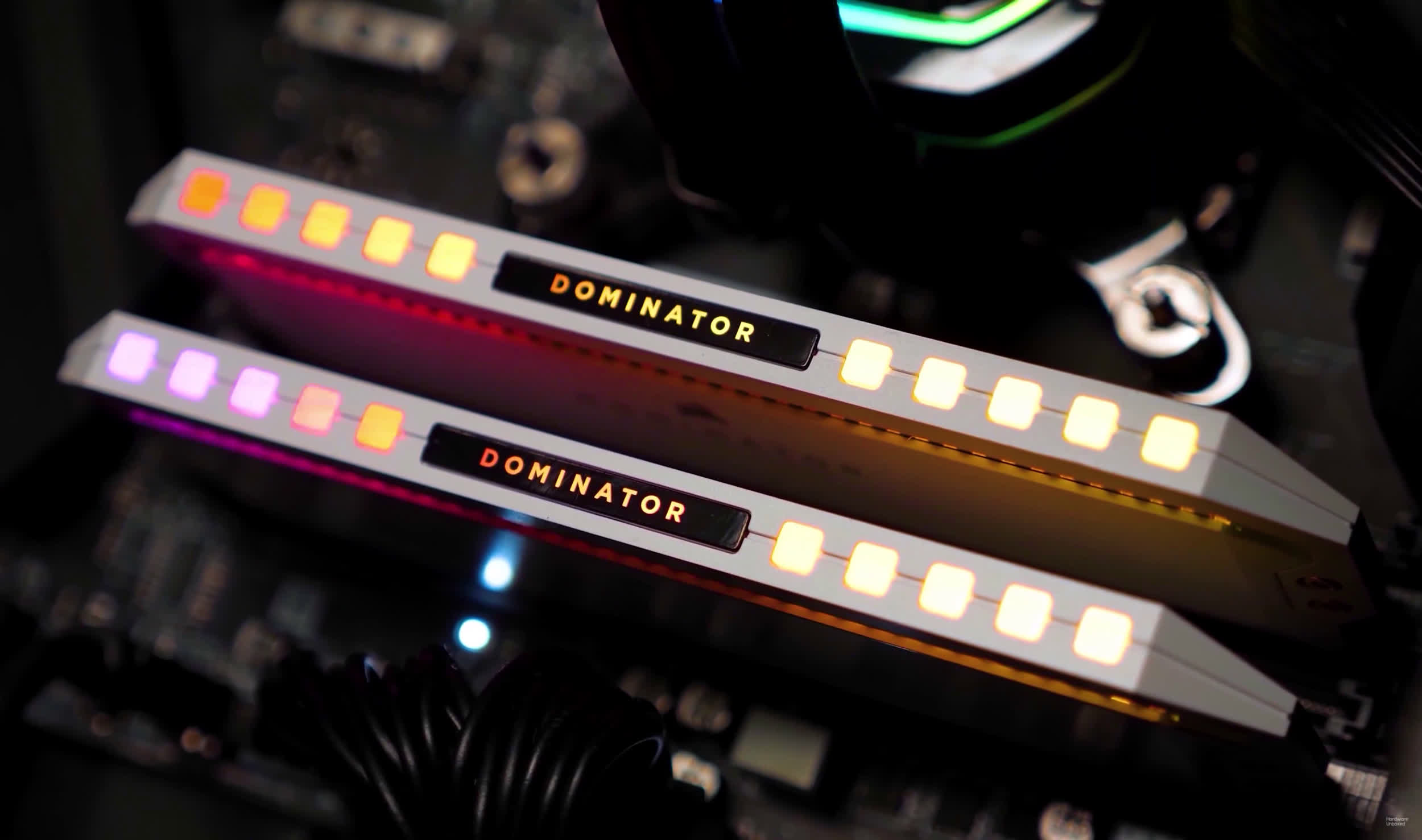

It's time we take a fresh look at DDR4 vs DDR5 memory performance using the Core i9-14900K. We're benchmarking with fast DDR5-7200 and DDR4-4000 memory, noting that these days DDR5 is cheaper than DDR4.
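For a rough sense of how far apart the two kits are on paper, here is a minimal sketch of theoretical peak bandwidth. It assumes a dual-channel setup with a 64-bit (8-byte) data path per channel; the function name and defaults are illustrative, and real-world throughput and latency will differ from these ceilings.

```python
def peak_bandwidth_gbs(transfer_rate_mts: int, channels: int = 2, bus_bytes: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s: transfers per second x bytes per transfer x channels."""
    return transfer_rate_mts * 1e6 * bus_bytes * channels / 1e9


# Assumed configuration: two channels, 8 bytes per transfer per channel.
ddr4 = peak_bandwidth_gbs(4000)  # DDR4-4000
ddr5 = peak_bandwidth_gbs(7200)  # DDR5-7200

print(f"DDR4-4000: {ddr4:.1f} GB/s")
print(f"DDR5-7200: {ddr5:.1f} GB/s")
print(f"Ratio: {ddr5 / ddr4:.2f}x")
```

The on-paper gap is far larger than the single-digit gaming uplift discussed in the comments, since games are rarely limited by raw bandwidth alone.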

DDR5 vs. DDR4 Gaming Performance

- Thread starter Steve

Atmajaya2020

Posts: 172 +108

Theinsanegamer

Posts: 5,452 +10,234

Not surprising to see the difference grow. As more games grow in memory usage, that difference will only increase.

The only reason we likely haven't seen further growth is the Xbox Series S, which is a tombstone around game development. Its tiny, slow RAM pool restricts anything published on Xbox from embracing the full capabilities of a modern memory bus. Halo vs TLOU is a great example: the latter was made for PS5, then ported to PC, and is harder on RAM.

We likely won't see the full effect until the PS6/Xbone series x/s2.

Lowhangingfruit

Posts: 20 +51

Interestingly, in a role reversal, DDR4 memory now costs quite a bit more than DDR5 (!)

A single exclamation is not sufficient for that sentence. Everything is upside down right now in regards to pricing, with many last gen components costing more than current gen. You probably weren't even expecting that when you started planning this article. If DDR4 were to cost 20-30% less than DDR5 then we'd be talking about a more difficult decision.

LetTheWookieWin

Posts: 158 +340

Interestingly, in a role reversal, DDR4 memory now costs quite a bit more than DDR5 (!)

Disagree. This might be true for the fastest memory kits as used in this article, but wasn’t my experience.

I just bought a new MSI Z790 DDR4 motherboard for $205, where the DDR5 version was $220. For memory modules, I was able to buy 64GB of Corsair Vengeance DDR4-3600 for $119, while a Corsair Vengeance DDR5-6400 64GB kit was $205. That's 72% more for the memory ($86), or about $100 more once the board is included, for maybe a 4-7% average uplift.
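For anyone who wants to check that arithmetic, here is a quick sketch using only the four prices quoted in the post. The helper name `premium` is made up for illustration.

```python
def premium(cheaper: float, pricier: float) -> tuple[float, float]:
    """Return (absolute difference, percentage premium over the cheaper option)."""
    diff = pricier - cheaper
    return diff, 100 * diff / cheaper


# Prices as quoted in the post above.
ddr4_board, ddr5_board = 205, 220
ddr4_kit, ddr5_kit = 119, 205

mem_diff, mem_pct = premium(ddr4_kit, ddr5_kit)
platform_diff, platform_pct = premium(ddr4_board + ddr4_kit, ddr5_board + ddr5_kit)

print(f"Memory only: ${mem_diff:.0f} more ({mem_pct:.0f}%)")
print(f"Board + memory: ${platform_diff:.0f} more ({platform_pct:.0f}%)")
```

The 72% figure applies to the memory kits alone; across board plus memory the premium works out to roughly 31%.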

hahahanoobs

Posts: 5,224 +3,076

Spending the money to upgrade memory and mobo for 7% doesn't make sense to me. Just keep what you have.

Did you run Gear 1 mode for the DDR4? I doubt you did because you didn't even mention it in your article. If you didn't run your DDR4 with Gear 1 mode then those benchmarks, as well as this article, are meaningless. As far as I know, most motherboards run DDR4 memory with Gear 2 mode by default.

ScottSoapbox

Posts: 1,048 +1,824

Spending the money to upgrade memory and mobo for 7% doesn't make sense to me. Just keep what you have.

True.

I think this comparison is more useful for someone building a new system for how much DDR5 is worth over DDR4 (as both are widely available currently).

yRaz

Posts: 6,413 +9,490

True.

I think this comparison is more useful for someone building a new system for how much DDR5 is worth over DDR4 (as both are widely available currently).

They're both actually pretty cheap now, too. You can get "optimal" speeds for both for not much over the base speeds. If you're building a completely new system, the speed difference is more than the cost difference at this point. If it was this time last year then that'd be a vastly different answer, but things have settled down now in the memory space.

ChipBoundary

Posts: 50 +34

For casual gaming DDR4 is very sufficient.

Yeah, it is, but if you're building a new system DDR5 is cheaper or equivalent price and performs slightly better.

ChipBoundary

Posts: 50 +34

Did you run Gear 1 mode for the DDR4? I doubt you did because you didn't even mention it in your article. If you didn't run your DDR4 with Gear 1 mode then those benchmarks, as well as this article, are meaningless. As far as I know, most motherboards run DDR4 memory with Gear 2 mode by default.

Then you'd be absolutely wrong. The typical setting is "auto" on all the brands. It determines the best gear based on memory speed. ASRock does it this way, and so do the other major brands. Also, there is no gear 1 on Raptor lake. It's 2 and 4. They have different "gear ratios" from previous generations.

Theinsanegamer

Posts: 5,452 +10,234

Yeah, it is, but if you're building a new system DDR5 is cheaper or equivalent price and performs slightly better.

If you're a casual gamer, it won't. Because your GPU is nowhere near 4090 level, and your CPU is not a 14900K.

Future proofing would be a better argument.

Spending the money to upgrade memory and mobo for 7% doesn't make sense to me. Just keep what you have.

Those who upgrade every 1-2 years don't care about "sense"; they buy the fastest they can whenever possible. A 7% increase is enough to justify it to them.

For everyone else CPUs have long stopped being an upgrade thing and have become something that you use until it fails, like PSUs or cases. Really, only the GPU needs to be updated frequently anymore.

MetXallica

Posts: 6 +4

Then you'd be absolutely wrong. The typical setting is "auto" on all the brands. It determines the best gear based on memory speed. ASRock does it this way, and so do the other major brands. Also, there is no gear 1 on Raptor lake. It's 2 and 4. They have different "gear ratios" from previous generations.

That's funny, because I've been running Gear 1 DDR4 3600 CL14 on my MSI Tomahawk z690 with Raptor Lake since release... "13700K"
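Since the gear modes keep coming up, here is a rough sketch of what the ratios mean in clock terms. It assumes the commonly described behavior on recent Intel platforms: the memory clock is half the data rate, and the memory controller runs at 1/1, 1/2, or 1/4 of that clock in Gear 1, 2, or 4. The function name is illustrative, and which gears a given CPU and board actually support is not something this sketch checks.

```python
def controller_clock_mhz(data_rate_mts: int, gear: int) -> float:
    """Memory controller clock in MHz for a given data rate and gear ratio."""
    if gear not in (1, 2, 4):
        raise ValueError("gear must be 1, 2, or 4")
    memory_clock = data_rate_mts / 2  # DDR transfers twice per clock
    return memory_clock / gear


print(controller_clock_mhz(3600, 1))  # DDR4-3600 in Gear 1
print(controller_clock_mhz(3600, 2))  # DDR4-3600 in Gear 2
print(controller_clock_mhz(7200, 2))  # DDR5-7200 in Gear 2
```

Under these assumptions, DDR4-3600 in Gear 1 and DDR5-7200 in Gear 2 put the controller at the same clock, while DDR4-3600 in Gear 2 halves it, which is why the gear setting matters for a DDR4 comparison.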

LetTheWookieWin

Posts: 158 +340

Yeah, it is, but if you're building a new system DDR5 is cheaper or equivalent price and performs slightly better.

See my earlier post. Comparing DDR5-6400 vs DDR4-3600, DDR4 was substantially cheaper.

Bruno M. Villar

Posts: 62 +21

200 fps - DDR5

190 fps - DDR4⠀⠀

⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⢀⣀⣠⣤⣤⣀⣀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀

⠀⠀⠀⠀⠀⠀⠀⢀⣠⣴⣶⣾⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣷⣦⣄⠀⠀⠀⠀⠀⠀⠀⠀

⠀⠀⠀⠀⢀⣤⣾⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣦⣀⠀⠀⠀⠀⠀

⠀⠀⠀⣠⣿⣿⣿⣿⣿⣿⣿⣿⠿⠟⠟⠛⠛⠛⠻⠻⠿⣿⣿⣿⣿⣿⣿⣿⣿⣦⡀⠀⠀⠀

⠀⠀⣴⣿⣿⣿⣿⣿⣿⠟⠉⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠉⠻⣿⣿⣿⣿⣿⣿⣿⡄⠀⠀

⠀⣰⣿⣿⣿⣿⣿⣏⠅⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠢⠹⣿⣿⣿⣿⣿⣿⣿⡄⠀

⢠⣿⣿⣿⣿⣿⡷⠈⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⢻⣿⣿⣿⣿⣿⣿⣿⠀

⢸⣿⣿⣿⣿⣿⠁⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⢹⣿⣿⣿⣿⣿⣿⣿⡇

⡿⣿⣿⣿⣿⡺⢀⡴⠿⠟⣶⣂⣄⠀⠀⠀⠀⠀⢀⣠⣴⣶⠾⠿⢶⣼⣿⣾⣿⣿⣿⣿⣿⣿

⣿⣿⣿⣿⣿⢳⠃⠀⠀⠀⠀⠈⠉⠁⠀⠀⠀⠀⠈⠁⠁⠀⢀⠀⠀⣹⣿⣿⣿⣿⣿⣿⣿⣿

⣿⣿⣿⣿⣿⣘⣠⣾⢿⣟⣾⠲⣄⠀⠀⠀⢸⡀⠀⡠⠖⣻⣻⡿⢷⣽⣿⣿⣿⣿⣿⣿⣿⣿

⣿⣿⣿⣿⣿⠜⠋⠑⠠⠍⠁⠈⠀⠀⠀⠀⠈⠠⠀⠀⠑⠈⠩⠅⠊⢹⣿⣿⣿⣿⣿⣿⣿⣿

⣿⣿⣿⣿⡟⠀⠀⠀⠀⠀⠀⠀⠀⢠⠄⠀⠸⡎⠀⠀⠀⠀⠀⠀⠀⠀⠻⣛⣿⣿⣿⣿⣿⣿

⣿⣿⣿⣿⣿⠀⠀⠀⠀⠀⠀⠀⣰⢁⣤⠀⣰⡄⢣⠀⠀⠀⠀⠀⠀⠀⠀⢉⣿⣿⢹⣿⣿⣿

⣿⣿⣿⣿⣯⢷⠀⠀⠀⠀⠀⠀⠈⠈⠀⠈⠀⠈⠈⠀⠀⠀⠀⠀⠀⠀⠀⣾⡧⢋⣼⡿⣿⣿

⣿⣿⣿⣿⣿⡇⠀⠠⠀⠀⠀⠀⠀⠀⠀⠀⠀⡀⠀⠀⠀⠀⠀⠀⠀⠀⠈⢻⣀⣽⣿⣿⣿⣿

⣿⣿⣿⣿⣿⣿⠀⠀⡁⠀⠰⣦⠥⢌⣉⣩⡩⠤⠬⣕⢲⠄⠀⢨⠀⠀⠀⣸⣿⣿⣿⣿⣿⣿

⣿⣿⣿⣿⣿⣿⡀⠀⠁⠀⠀⠀⠁⠚⠀⠃⠂⠓⠉⠈⠀⠀⠀⣸⠀⠀⢴⣿⣿⣿⣿⣿⣿⣿

⣿⣿⣟⣿⣿⣿⣷⣄⠠⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⣴⠃⠀⡤⠺⣿⣿⣿⣿⣿⣿⣿

⠸⣿⣿⣿⣿⣿⣿⡇⠈⠃⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⣠⠧⠚⠁⠀⠀⢿⣿⣿⣿⣿⣿⡿

⠀⠙⣿⣿⣿⣿⡟⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠻⣿⣿⣿⡿⠃

⠀⠀⠀⠉⠉⠁⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠉⠉⠀⠀

hahahanoobs

Posts: 5,224 +3,076

If you're a casual gamer, it won't. Because your GPU is nowhere near 4090 level, and your CPU is not a 14900K.

Future proofing would be a better argument.

Those who upgrade every 1-2 years don't care about "sense"; they buy the fastest they can whenever possible. A 7% increase is enough to justify it to them.

For everyone else CPUs have long stopped being an upgrade thing and have become something that you use until it fails, like PSUs or cases. Really, only the GPU needs to be updated frequently anymore.

Those who upgrade every year wouldn't buy the DDR4 board version for 12th gen+. They would pay the premium for a DDR5 board and memory.

I'm talking about the ones that went DDR4+12th gen+ to save money.

If all you care about is FPS and price, go DDR4. The human eye cannot perceive anything beyond about 60 fps. All of these people shooting for 100+ are just wasting money. You literally cannot see the difference. Same as how we can't really visually spot the differences between 4K and 8K. Buy a nice 4K monitor that does at least 60 FPS and build your computer to run that. The tests I want to see are loading times, how fast apps and games open, how smoothly they operate. Show the difference between DDR4 and DDR5 on speed, even between similarly priced parts.

If all you care about is FPS and price, go DDR4. The human eye cannot perceive anything beyond about 60 fps. All of these people shooting for 100+ are just wasting money. You literally cannot see the difference. Same as how we can't really visually spot the differences between 4K and 8K. Buy a nice 4K monitor that does at least 60 FPS and build your computer to run that. The tests I want to see are loading times, how fast apps and games open, how smoothly they operate. Show the difference between DDR4 and DDR5 on speed, even between similarly priced parts.

https://www.techspot.com/article/2769-why-refresh-rates-matter/

SilverCider

Posts: 89 +26

If all you care about is FPS and price, go DDR4. The human eye cannot perceive anything beyond about 60 fps. All of these people shooting for 100+ are just wasting money. You literally cannot see the difference. Same as how we can't really visually spot the differences between 4K and 8K. Buy a nice 4K monitor that does at least 60 FPS and build your computer to run that. The tests I want to see are loading times, how fast apps and games open, how smoothly they operate. Show the difference between DDR4 and DDR5 on speed, even between similarly priced parts.

You'll find that the human eye cannot perceive anything beyond about 24 fps.

yRaz

Posts: 6,413 +9,490

You'll find that the human eye cannot perceive anything beyond about 24 fps.

This is backwards, it takes about 15-20fps to create the illusion of motion to our eyes. Our eyes do not have a frame rate. As we get older, the amount of frames we can perceive drops. Depending on age, the amount of frames we can perceive is between 40 and 90. As a general rule, the frame rate of a display should be twice our perceivable frame rate. At 37 years old I don't get any benefit from anything higher than 100-120 fps so I'm going to make the assumption that I can perceive about 50fps. I had a teenager sit down in front of my PC and he immediately noticed my "low refresh rate" 120hz display but you can put a 120 and 240 next to each other and I can't tell the difference.
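One way to put numbers on the refresh-rate argument is to look at frame times instead of frame rates. This is just a sketch of the arithmetic (1000 ms divided by the rate); it says nothing about what any individual can perceive, and the list of rates is picked from the ones mentioned in this thread.

```python
def frame_time_ms(fps: float) -> float:
    """Time each frame stays on screen, in milliseconds."""
    return 1000 / fps


rates = [24, 60, 120, 144, 240]
for prev, cur in zip(rates, rates[1:]):
    saved = frame_time_ms(prev) - frame_time_ms(cur)
    print(f"{prev} -> {cur} fps: {frame_time_ms(cur):.2f} ms per frame, {saved:.2f} ms shorter")
```

Each step up shaves fewer milliseconds off the frame time than the one before it: going from 60 to 120 fps saves about 8.3 ms per frame, while 120 to 240 saves only about 4.2 ms.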

yRaz

Posts: 6,413 +9,490

DDR5 looks more like a marketing gimmick imo. Or could be that it's still in early stages. The performance we're seeing now is not worth it imo.

Currently, I agree. Right now, DDR4 is the best it's ever going to be and I feel we still have another 2ish years of DDR5 development before we see it peak. Although, the cost difference now is really about $20-30 for comparable sets so I wouldn't let that stop me if I was doing a new build. Not like it was a year ago where there was 100% cost difference for a slower set of DDR5 and that was if it was even in stock at the time.

Skjorn

Posts: 828 +696

Then you'd be absolutely wrong. The typical setting is "auto" on all the brands. It determines the best gear based on memory speed. ASRock does it this way, and so do the other major brands. Also, there is no gear 1 on Raptor lake. It's 2 and 4. They have different "gear ratios" from previous generations.

No Gear 1 for DDR5*. Gear 1 still exists for DDR4. I'm not sure what the actual limit is for G1D4 but I think it's 4000MHz for 13th gen i7.

I know for a fact 11th gen was 3200MHz for i7 and 3600MHz for i9.

If all you care about is FPS and price, go DDR4. The human eye cannot perceive anything beyond about 60 fps. All of these people shooting for 100+ are just wasting money. You literally cannot see the difference. Same as how we can't really visually spot the differences between 4K and 8K. Buy a nice 4K monitor that does at least 60 FPS and build your computer to run that. The tests I want to see are loading times, how fast apps and games open, how smoothly they operate. Show the difference between DDR4 and DDR5 on speed, even between similarly priced parts.

You got a source for the 60fps claim? Cause right now it looks like you just pulled it out your rear end.

This is backwards, it takes about 15-20fps to create the illusion of motion to our eyes. Our eyes do not have a frame rate. As we get older, the amount of frames we can perceive drops. Depending on age, the amount of frames we can perceive is between 40 and 90. As a general rule, the frame rate of a display should be twice our perceivable frame rate. At 37 years old I don't get any benefit from anything higher than 100-120 fps so I'm going to make the assumption that I can perceive about 50fps. I had a teenager sit down in front of my PC and he immediately noticed my "low refresh rate" 120hz display but you can put a 120 and 240 next to each other and I can't tell the difference.

90 is about the minimum I think that's "fluid" enough. I can tell the difference between 120 and 144. I got someone a 240Hz screen and I can tell the difference from my own 144 screen.

Boy, you act like you're 97 and not 37. There are professional athletes out there your age hitting 100mph fastballs and returning tennis serves that are faster.

You'll find that the human eye cannot perceive anything beyond about 24 fps.

I don't feel comfortable with you on the road.

yRaz

Posts: 6,413 +9,490

90 is about the minimum I think that's "fluid" enough. I can tell the difference between 120 and 144. I got someone a 240Hz screen and I can tell the difference from my own 144 screen.

Boy, you act like you're 97 and not 37. There are professional athletes out there your age hitting 100mph fastballs and returning tennis serves that are faster.

It's my experience that response time is more important at high refresh rates than the actual refresh rate. I've seen 240hz displays that look like they're 60hz and I've seen some OLED panels running at 60hz that look much higher than that. But with all things being equal, I can't tell the difference between 120 and 240. I remember looking at a 144hz OLED once and thinking that it looked like a glasses-free 3D display.

People put far too much on refresh rate alone. I find ultra-high refresh rate displays absolutely *****ic. "Using this 10,000 FPS camera you can see that at 360hz this pixel is blurry but at 540hz it isn't". I remember lamenting the transition to LCDs from CRTs because ghosting was an issue until about 2008-2009 and you needed a TN panel, so you had to deal with dithering to get decent 60hz performance. IPS wasn't entirely sorted out until around 2012-2013 and I'd argue that VA panels still have issues to this day. Frankly, I don't know why they still make VA panels; OLEDs have made them irrelevant.

If they can bring the cost down of OLEDs using things like printing then I wouldn't even care about things like burn in. I'd just buy a new display every 2-3 years for $300.
