What just happened? Over three months after Nvidia announced the feature was on its way, DLSS has finally come to Call of Duty: Warzone. According to Nvidia, turning on the company’s Deep Learning Super Sampling, assuming you have an RTX 20-Series or newer, will bring up to 70% faster performance in the F2P battle royale title.

At its CES keynote in January, Nvidia said Warzone would be following in CoD Black Ops Cold War’s footsteps by introducing support for DLSS. While the amount of difference it makes does vary from game to game, team green claims you can expect up to a 70 percent frames per second improvement in Warzone, though that’s probably an optimistic figure.

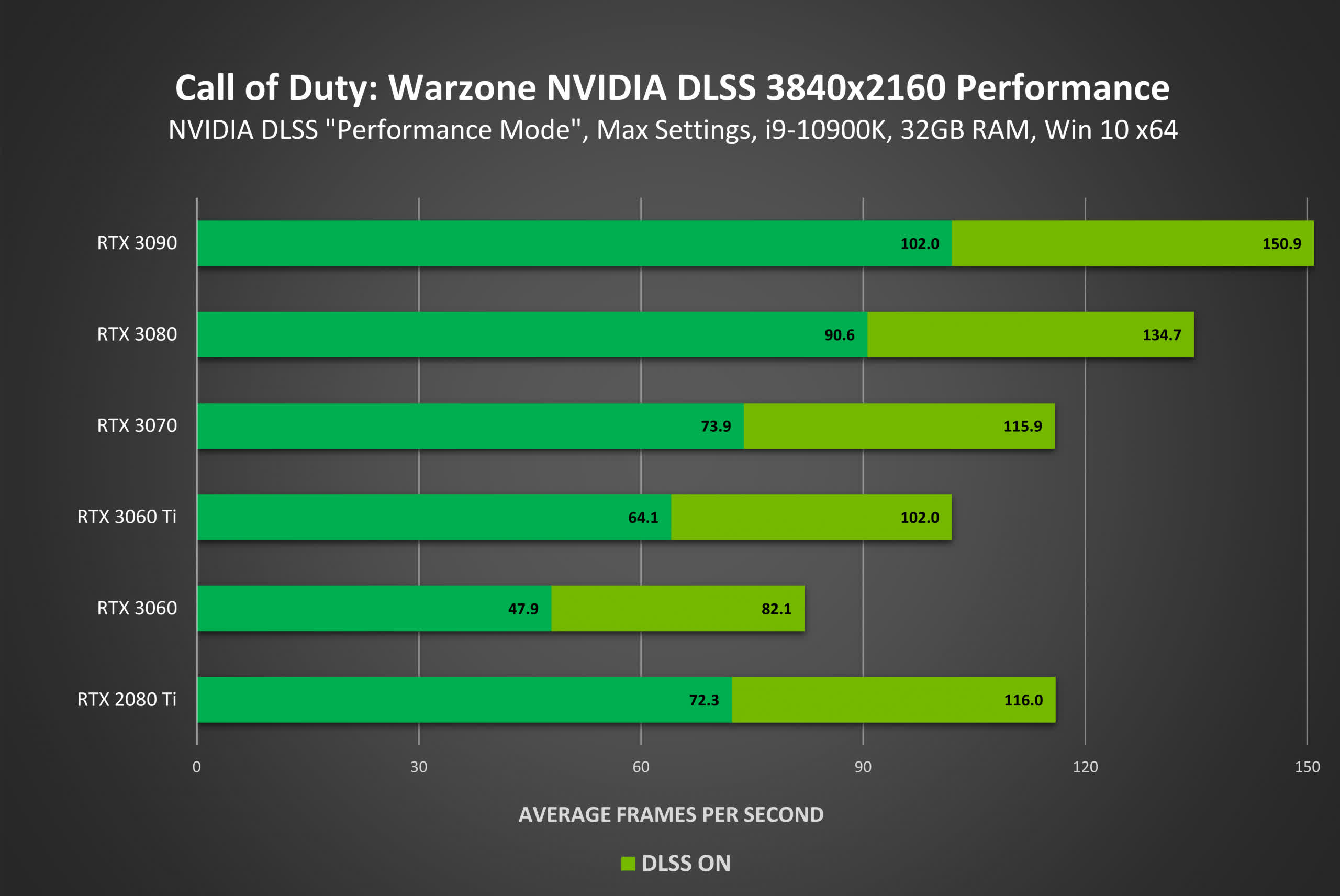

According to the company’s charts, enabling DLSS while using an RTX 3060 (4K, max settings, i9-10900K) will boost Warzone's framerate from 47.9 fps to 82.1 fps. The absolute gain is even greater if you’re lucky enough to own an RTX 3090, which moves from 102 fps to 150.9 fps, although the percentage uplift is smaller (about 48% versus 71% on the RTX 3060).
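For anyone who wants to check how those chart figures relate to the "up to 70%" headline, here is a quick Python sketch. It uses only the four fps values quoted above; the helper name `uplift` is our own, purely for illustration, and nothing here comes from Nvidia.

```python
def uplift(before_fps: float, after_fps: float) -> tuple[float, float]:
    """Return (absolute gain in fps, relative gain in percent)."""
    gain = after_fps - before_fps
    return gain, gain / before_fps * 100


# Figures as quoted from Nvidia's chart (4K, max settings, i9-10900K):
# (fps with DLSS off, fps with DLSS on)
results = {
    "RTX 3060": (47.9, 82.1),
    "RTX 3090": (102.0, 150.9),
}

for gpu, (dlss_off, dlss_on) in results.items():
    gain, pct = uplift(dlss_off, dlss_on)
    print(f"{gpu}: +{gain:.1f} fps ({pct:.1f}% faster)")

# RTX 3060: +34.2 fps (71.4% faster)
# RTX 3090: +48.9 fps (47.9% faster)
```

So the RTX 3060 result is where the roughly 70% figure comes from, while the RTX 3090 gains more frames in absolute terms but less in percentage terms.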

VideoCardz writes that some players who have already enabled DLSS in Warzone are reporting visible artifacts and ghosting, but stability should improve with future updates.

DLSS has seen vast improvements since its arrival and is becoming an option in more titles. We heard earlier this month that the Unity game engine would be receiving support by the end of the year, and there’s an Unreal Engine 4 plugin that makes implementing DLSS in games a lot easier for devs. This plugin has already been used in the System Shock remake demo.

In addition to adding DLSS, the 25.2GB Warzone Season 3 update adds new content and a slew of bug fixes.

https://www.techspot.com/news/89412-dlss-finally-arrives-cod-warzone-nvidia-claims-up.html