In most discussions about generative AI, people tend to focus on what tools like ChatGPT, Midjourney, Google Bard and Bing Chat can do. And it's easy to understand why – these impressive capabilities have turned the tech and business worlds on their ear. They're also likely what has led 88% of US businesses to already start using GenAI tools – one of the many unexpected facts uncovered in a new study by TECHnalysis Research.

What that study also found, however, is that while people are undoubtedly excited about the possibilities that the current generation of GenAI tools offers, they also have a number of concerns about features and capabilities that are not currently included, as well as about how the products are packaged and priced.

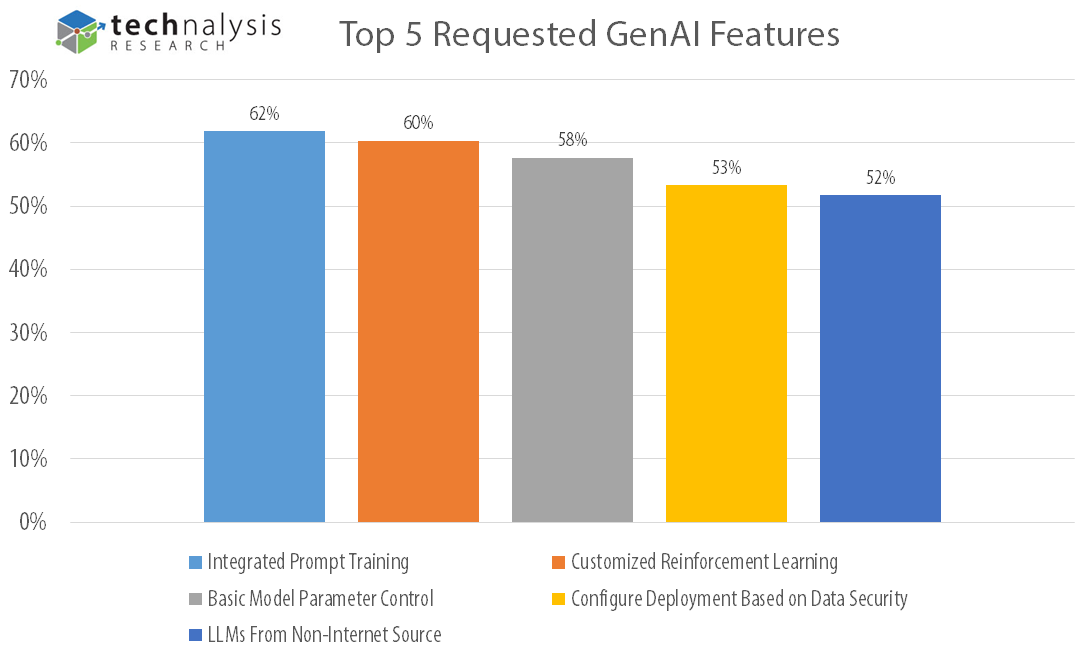

As part of the survey, in which 1,000 IT decision makers participated, respondents were asked about functions they would like to see added to GenAI tools. As Figure 1 illustrates, there were five options that more than half of the survey takers would like to see included in future iterations...

Fig. 1

Leading the list is integrated prompt training, which 62% of respondents requested. Given the critical role that prompts play in the effective use of GenAI tools, this doesn't seem terribly surprising, yet few if any current products include it.

There's certainly been a lot of discussion about "prompt engineers" being an exciting new job category that GenAI tools are enabling, but for GenAI adoption to go mainstream it only makes sense to make sure that everyone who uses these tools can learn how to get the best possible results from them. The ability to create effective prompts shouldn't be limited to specialists, so let's hope vendors quickly respond to this request and start to integrate these capabilities into new versions of their tools.

The ability to have customized reinforcement learning was second, which is interesting on several levels. First, it reflects the desire to have customized foundation models that can integrate human feedback, such as with Reinforcement Learning from Human Feedback (RLHF), which is a relatively new capability for improving the quality and accuracy of GenAI models. Its selection by 6 in 10 survey takers reflects a somewhat surprising degree of sophistication on the part of respondents. At the same time, it's still a little-known capability, which might suggest a level of potential confusion or misunderstanding on the part of respondents – a point that seemed apparent from answers to other technical questions. What does seem clear is that the desire to customize, in multiple ways, the GenAI models that organizations work with is high on the priority list for most survey takers.

In a separate question, the IT decision makers who participated in the study were asked about their use of watermarking, or otherwise notifying people who see the output from these tools that it was created by GenAI. A whopping 91% said they did so for various combinations of content (or other generated output) for internal or external purposes. Once again, however, few of the current-generation GenAI tools have mechanisms for automatically integrating some type of watermarking – a clear opportunity for vendors to improve.

When it comes to how GenAI models are packaged and sold, there is still a wide variety of opinions and expectations, along with a fair amount of confusion. Even something as simple as whether a vendor was selling a standalone GenAI model, an application or service powered by a GenAI model, or some other variation on these themes was not easily understood. (Nor is it in the current messaging around many GenAI tools, for that matter.)

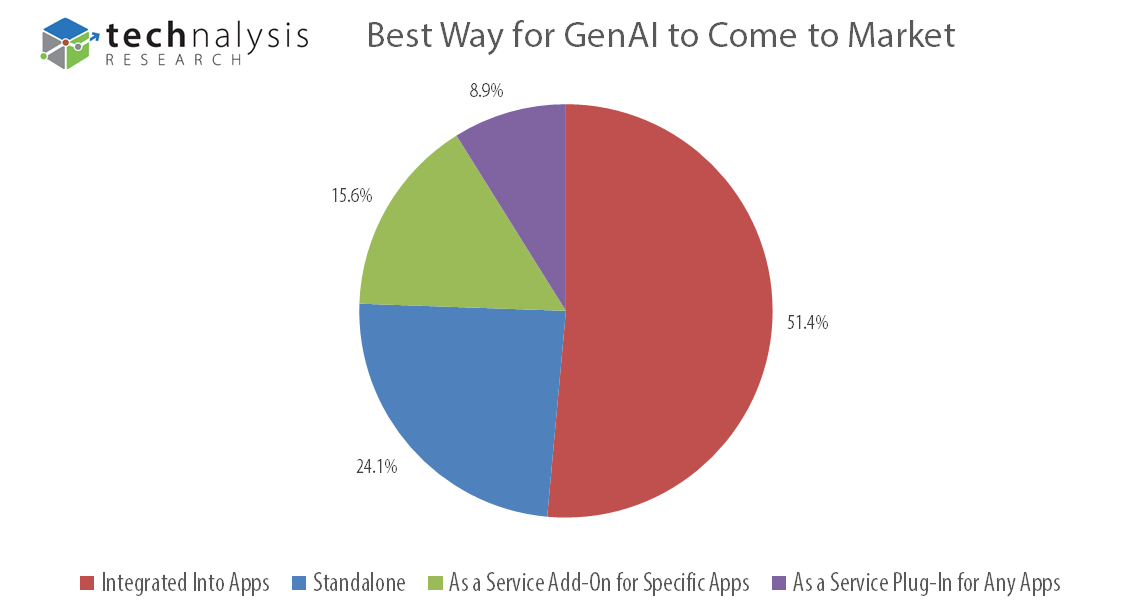

When respondents were specifically asked what they thought was the best way for GenAI tools to be brought to market, there was no clear answer, as Figure 2 shows.

Fig. 2

The largest group, at just over 51%, believes that GenAI capabilities should be integrated into existing applications, but about a fourth believe they should be standalone, and the remaining portion think they should be sold in an as-a-service model for either specific apps or any app. These are all very different approaches, and it's likely we'll see vendors experimenting with several different methods.

A related question asked whether core foundation models should be integrated into each app or shared across multiple apps, with options for a single shared model across all apps or a limited number of models used in multiple apps. In this case, about 55% said each app should have its own foundation model, while 35% thought there should be a single "master" model that gets used across multiple apps, and the remaining 10% believed there should be multiple models that are shared across multiple apps.

Again, there are lots of permutations to think through from all this data, but one thing that's very clear is that GenAI vendors have a huge educational and marketing challenge on their hands to explain exactly what they're offering, what it works with (or doesn't), and how to integrate it with other tools.

There's little doubt that the excitement around GenAI is real and, as the study illustrates, it's starting to be deployed for a wide variety of applications in industries of all types. At the same time, it's still very early in the evolution and understanding of GenAI tools. As a result, vendors are going to have to do a great deal of work (likely using GenAI to help explain GenAI!) to clearly position what their tools are capable of doing, and customers are going to need to be clear about what they're looking for and what they need to achieve their goals. Eventually, this should all be straightforward, but for now, it's going to be interesting times.

Bob O'Donnell is the founder and chief analyst of TECHnalysis Research, LLC, a technology consulting firm that provides strategic consulting and market research services to the technology industry and professional financial community. You can follow him on Twitter @bobodtech

Image credit: Andrea De Santis