Something to look forward to: Now that we're into year 2 of the GenAI phenomenon, it's time to start digging into the second- and third-order implications of what this revolution may actually bring about. One question that has kept evolving over the past year is the potential impact that generative AI will have on hardware devices such as PCs, smartphones, tablets, and wearables.

Initially, it appeared that these computing devices would play a minor role since the early GenAI applications and services were cloud-based and accessed like any typical website or app. The assumption was that the computing requirements for these kinds of workloads far exceeded the capabilities of even the most advanced personal computing systems, and could only be handled by cloud-based mega data centers.

However, this perspective began to shift last year due to various developments and the rapid pace of evolution and advancement in the GenAI domain. Notably, the introduction of several foundation models with fewer than 10 billion parameters, capable of operating within the memory and compute limits of personal devices, marked a significant change.

Versions of Meta's Llama2, Google's Gemini, Stability AI's Stable Diffusion, and others have all been demonstrated running on PCs or phones. This sparked speculation about efforts by companies like Microsoft, Apple, and others to integrate this technology into everyday devices by 2024.

Technological advancements such as model quantization and pruning, along with concepts like retrieval augmented generation (RAG), have shifted focus towards running foundation models and GenAI applications on devices. This approach has now become widely accepted.
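To give a rough sense of why quantization matters here, the following is a minimal back-of-the-envelope sketch, not a description of how any particular vendor or model does it. The function name `weight_memory_gb` and the 7-billion-parameter figure are illustrative assumptions, and the estimate covers only the storage for the weights themselves, ignoring activations, caches, and runtime overhead.

```python
# Rough estimate of the memory needed just to hold a model's weights.
# Assumption: ignores activations, the KV cache, and runtime overhead.
# The function name and the 7B example size are hypothetical.


def weight_memory_gb(num_params: int, bits_per_weight: int) -> float:
    """Return weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    params = 7_000_000_000  # illustrative 7-billion-parameter model
    for bits in (16, 8, 4):
        print(f"{bits:>2}-bit weights: {weight_memory_gb(params, bits):.1f} GB")
```

Under these assumptions, a 7-billion-parameter model needs about 14 GB for its weights at 16-bit precision, 7 GB at 8-bit, and 3.5 GB at 4-bit, which is the difference between exceeding and fitting within the memory of many PCs and high-end phones.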

At Samsung's Galaxy S24 launch event, we observed some of the first real-world implementations of on-device AI, including real-time translation features. This year and the next are likely to bring many more examples of products utilizing on-device AI.

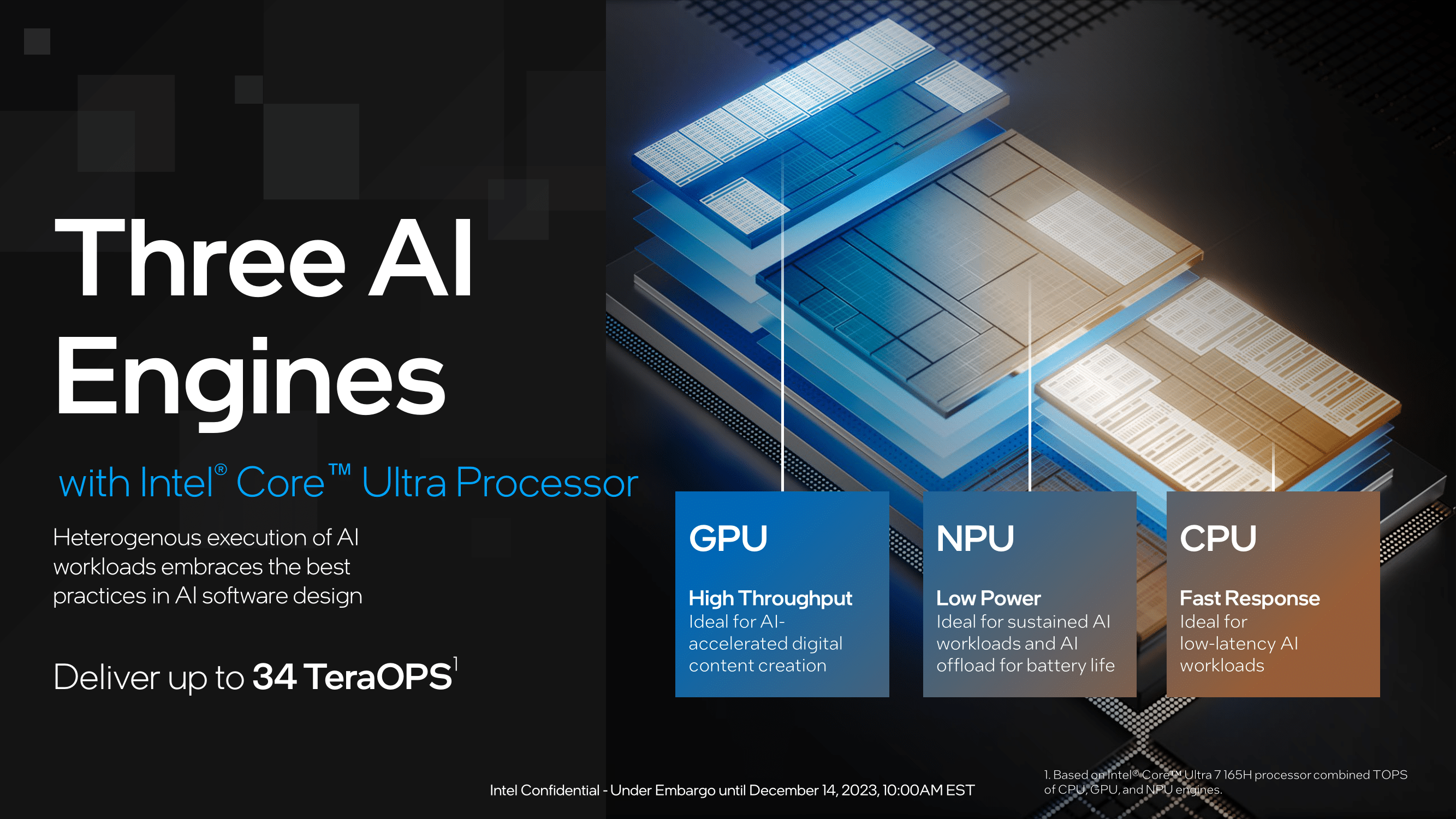

Furthermore, the introduction of AI hardware acceleration capabilities in new semiconductors has been a highlight. Qualcomm got things started with demos of GenAI models running on Snapdragon 8 Gen 3 mobile processors. The company continued that theme with the announcement of its Snapdragon X Elite PC processor, slated for release in mid-2024. AMD debuted the Ryzen 7040 PC SoC with its first Ryzen NPU and brought out the updated 8040 more recently. Finally, Intel closed out 2023 with the launch of its long-awaited Core Ultra SoC, which is its first to incorporate a dedicated AI accelerator.

Just as impressive as this flurry of activity on the semiconductor side is the accelerated pace at which new launches are expected in the coming months. Both AMD and Intel are expected to have more powerful PC chips with better AI accelerators before the end of the year. For its part, Microsoft has also hinted at several new capabilities coming to Windows PCs that will leverage them. And even though it has been remarkably silent on the topic to date, Apple is anticipated to have GenAI-related news on both hardware and software fronts around the time of its WWDC event, which typically happens in early June.

Taken together, all these advances already reflect a profound impact on the device world. And yet, I'd argue that they only represent the tip of the iceberg. First, on the overall market, there are some encouraging early signs that AI PCs and phones will reinvigorate the sales of these recently floundering categories. The biggest impact likely won't come until the second half of 2024 and perhaps not until 2025, but after a difficult last year or two, that's great news.

We should also expect some dramatic improvements in the overall usability and capabilities of our devices because of GenAI. Adding things like truly usable and reliable speech and gesture inputs can open a whole range of new applications and reduce the frustrations that many people have with their current devices. It will also enable entirely new types of devices, particularly in the wearables world, where the dependence on screens for a UI will start to diminish. Across all devices, this will require things like higher quality microphones, more and better sensors, and new and easier ways of connecting with peripherals and other devices.

I also expect to see new types of software architectures, such as distributed applications that perform some of their work on the cloud and some on-device. In fact, I believe this hybrid AI concept will prove to be one of the primary means of running GenAI applications on devices for the next several years, particularly until there's a larger installed base of devices with powerful, dedicated AI co-processors.

Eventually, that is just what we'll have. While we may initially call these new devices AI PCs or AI smartphones, they will soon just be PCs and smartphones once again, and the AI capabilities will be inherent and assumed.

It's very much like how graphics and GPUs came into being. Initially no PCs (or smartphones) had dedicated graphics chips, and it was a big deal when the first GPUs started to be incorporated into them. Now, every device has some level of integrated graphics acceleration and a few – like gaming PCs – still have standalone dedicated GPUs for more demanding needs.

I believe almost the exact same thing will happen with NPUs and AI acceleration. Nearly every device will have some level of AI acceleration within two to three years, but there will continue to be some that use dedicated AI processors for more advanced applications.

In the meantime, we'll need to figure out how we think about, talk about, and categorize these new types of GenAI-influenced computing devices. There's little doubt that things may get confusing for a while, but it's also clear that we are headed into some interesting and exciting times in the world of PCs, smartphones, tablets, and wearables.

Bob O'Donnell is the founder and chief analyst of TECHnalysis Research, LLC, a technology consulting firm that provides strategic consulting and market research services to the technology industry and professional financial community. You can follow him on X @bobodtech.

https://www.techspot.com/news/101728-opinion-how-generative-ai-impact-our-devices.html