Lmao

This is the guy who literally got cut off by Nvidia for not covering their cards well enough... He's always had a heavy AMD bias (but even with that he's still a reasonable person and isn't a delusional fanboy).

The article just goes to show that it's almost impossible not to admit that Nvidia has done a better job once again overall across features, future tech, pricing, and availability.

All of it is showing without question that Nvidia is the best option.

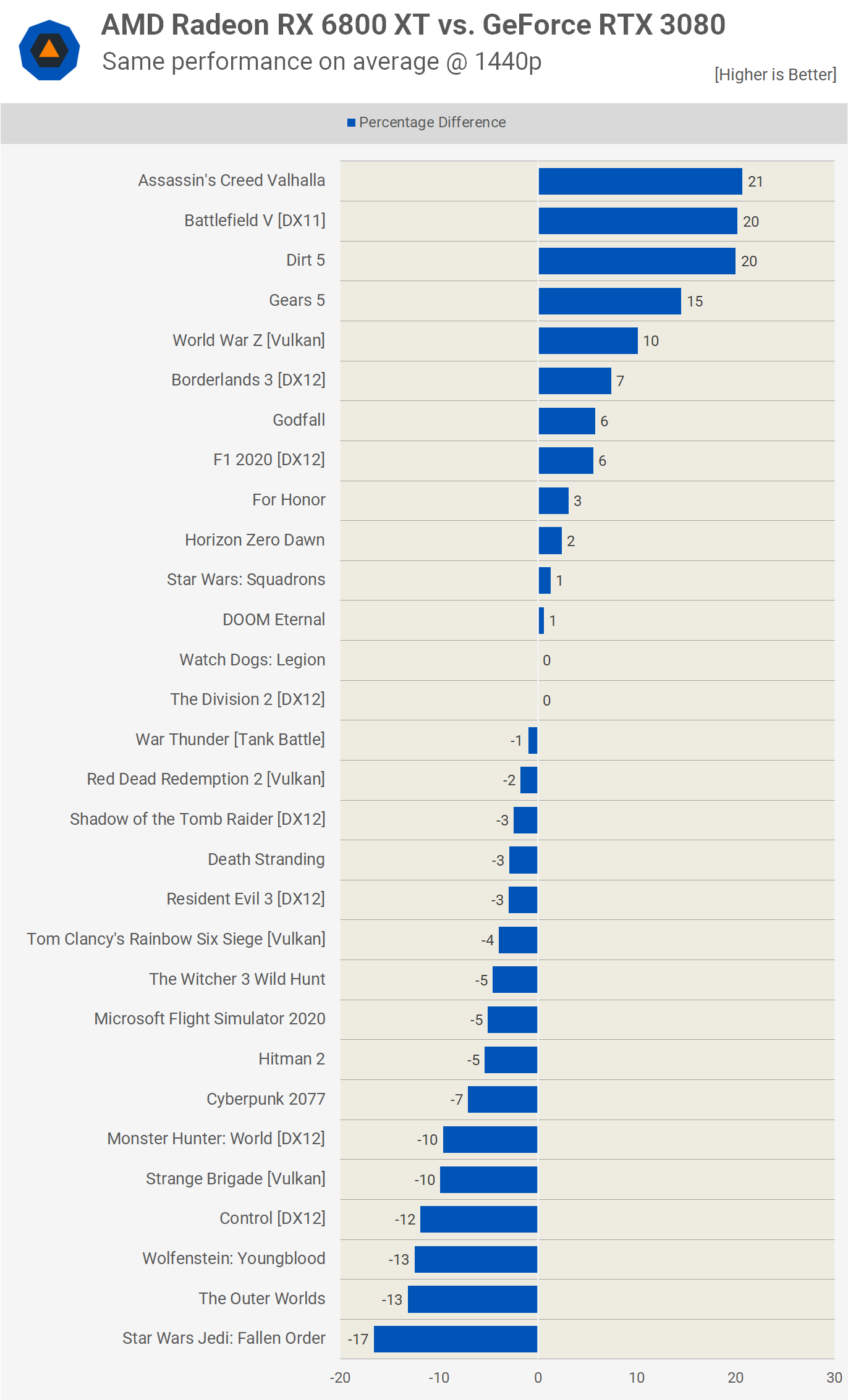

What I find interesting is that the 6800 XT's additional VRAM doesn't seem to help. The 6800 XT is clearly a better performer at 1080P. But, as you increase resolution, the 3080 erases that advantage. One would think that additional frame buffer would benefit higher resolutions, but apparently not. Maybe 10GB is enough for 4K, at least for now.

That's because a bunch of AMD fanboys have convinced themselves over the years that the extra RAM Radeon throws at them (in the past, to make up some of the lost "value" compared to higher-performing Nvidia cards) is the be-all and end-all deciding factor for "future proofing". It isn't what they want to believe it is.

It's been clear for a while that speed of access is much more important, and though a decent amount of RAM is obviously needed, the "budget" most have convinced themselves they NEED has been bloated for a long time.

They see things like RAM usage in their favorite game and automatically think that, since it's full or close to it, they need more for future, more demanding titles.

Reality is that many programs over-reserve RAM and aren't technically "using" anywhere near that full amount.

Nvidia did their homework and have proven that 10GB for 4K is relatively fine for the most part.

And these benches back that up.

Would love to buy an RTX3080. Refuse to pay scalper prices, and I want a selection of models, not being forced into just buying whatever is available. Nvidia will have probably shuffled the model pack by the time you can freely buy them.

It reminds me of the Fermi GTX480 where after the initial availability of cards there was zero stock right up until the GTX580 replaced it 6 months later. Mainly because in that case the yield of those chips was so horrendous they barely made any of them. I have to question the yield and production speed of this at Samsung, four months after launch.

Lots of factors are involved, like a gaming market explosion and crypto again, but I would also probably say this is another result of Turing's failure to have a big impact on the market. Lots of people went to Pascal, then they didn't move on to RTX 2000 Turing because it wasn't a good enough step.

Now that Ampere is a significant step, every man and his Pascal dog wants an upgrade.

I personally made the decision early on not to be greedy and to just take whatever 3080 I could get. It took me 8 hours on launch day, but I landed an MSRP card (not the one I wanted). Still, I didn't mind.

I used that card until I could get a better, preferred card, and when I did I was easily able to sell that one for enough to pay for my new one. I did the same to land on the actual 3080 I wanted from the get-go back in December, and again was able to sell my second used card easily (in under 60 seconds) with enough return to pay for the card I had just got.

Ultimately the market is so stressed that nearly any card is a good choice and can easily be replaced without sunk costs if you happen to get what you want later.

And once again TS gives a "summary" that only includes 4k. 3% of gamers game at 4k.

Very poorly done.

He covered 4K and 1440p; the rest of the results are there and speak for themselves. Does he need to hold your hand?

It's quite clear the 3080 is a better choice for almost everyone (except for a 1080p gamer who doesn't stream and has no interest in RT or DLSS).

Budget HDR monitors are terrible and look worse with HDR on. This is a known fact; plenty of reviews show you need to spend decent money to get a screen that has a decent HDR implementation.

The best HDR you can experience is on an LG OLED display. They may be TVs, but they blow anything in the monitor world out of the water for HDR.

Not to mention HDR is still a mess in Windows/PC, whereas it's almost universal and a given on TV/console devices and content.

I went LG OLED for my primary display and will never go back to a standard monitor ever again.

4K/120 with VRR and proper HDR is all most would ever need.

As a proud owner of a Sapphire RX 6800 XT Nitro+, you can call me a little biased. But note that my previous card was an RTX 3070. I see the 6800 XT as a big upgrade, personally. I was never too interested in ray tracing; I saw it more as a gimmick than a feature.

While DLSS 2.0 has improved, you are still taking a performance and visual loss with it on. Take Cyberpunk as an example. I completed the game with ray tracing on, with DLSS set to Quality. The game struggled to hit 60 frames with my 3070. DLSS still needs a lot of work IMO. For fast-paced games, most will just opt to turn it off for better performance.

Why the lack of VRAM? 8 GB for the 3070, and a whole 10 GB for the 3080? Another reason why I went with a 6800 XT over the 3080 was out of concern for a lack of VRAM over time. While we are not seeing an issue currently, give it a year or two and I believe these cards will start to have issues.

My 6800 XT overclocks like a beast, boosting past 2700 MHz at times, outperforming the 3080 and even the 3090 at times. I was one of the lucky ones, able to get a card. But if I had both sitting side by side and had to pick, I'd still choose the 6800 XT.

Some are calling the OP biased against Nvidia? I think it's fair and balanced as much as possible. Perhaps a little leaning towards Nvidia's favor. I appreciate the objectivity.

Saying Nvidia is still better, after the review did its best to keep Nvidia's advantages out of these numbers (like moving DLSS/RT to a separate article), just shows that Nvidia is clearly the winner.

When Nvidia gets handicapped in the things that absolutely are their main focus and they still come out on top, that just speaks to the value.

Steve has always leaned towards an AMD bias, but he's also not a delusional person/shill.

You say you got your 6800 XT Nitro+ but what you didn't say is what you paid...

More than likely (I can actually guarantee) you didn't pay anything near the $649 MSRP they "claim".

You can be biased (most people are, especially when they spent the money you did to acquire something that's so hard to get), but objectively the 3080 overall has been the better card, from value to cost to availability.

Enjoy what you like and convince yourself any which way you want/need, that's fine... It's actually rational.

But at the end of the day Steve's conclusion isn't one that can really be argued with, and with it coming from the guy known as the Nvidia fighter and AMD supporter that he is, we know it's likely only a personal opinion that could argue against it.