The 7900 XT was going for $705 last week during the Prime Day and Newegg FantasTech sales. I think that's a fantastic deal, and with 20GB you won't need to upgrade for a long while.

Basically, you forgot to add the previous generation of AMD GPUs to the possible choices. For pure rasterization performance, they are the way to go:

The best sub-$/€200 GPU: RX 6600 / Intel Arc A750

The best sub-$250 GPU: RX 6650 XT / RX 7600

The best $300-ish GPU: RX 6700 XT

The best sub-$450 GPU: RX 6800

The best $500-ish GPU: RX 6800 XT

The best sub-$600 GPU: RX 6950 XT

If someone prefers DLSS and frame generation, doesn't care about the best bang for the buck, and is an Ngreedia fanboy, they can go ahead and buy their products.

According to the buffoon MLID, we're expecting to see 2 or possibly 2 RDNA3 cards in September. There's a big price gap between $300 and $700, and there should be at least 3 cards to fill that hole. I feel like AMD will follow Ngreedia's policy and won't give us anything substantial or worthwhile over RDNA2.

7700 12GB - $349 - 6750 XT with lower power draw?

7700 XT 12GB - $429 - 6800-ish performance?

7800 16GB - $479 - 6800 XT with lower power draw?

7800 XT 16GB - $579 - 6950 XT with lower power draw?

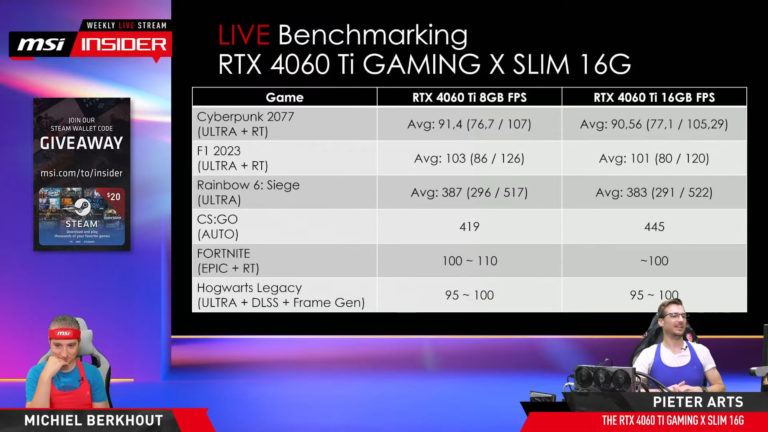

AMD could literally demolish the 4060 Ti and 4070 in the "mid-range": rebrand the 6750 XT with ~8% tweaks and charge $350, rebrand the 6800 XT 16GB for $450, and the 6950 XT for $600, all with RDNA3 features and lower power draw.

I will buy one of these if it comes to fruition.