Forward-looking: Are you hoping the worldwide chip shortage will come to an end soon? Sadly, the current situation of demand far outweighing supply is predicted to last for another three or four quarters, followed by a further one or two quarters before inventories reach normal levels. If that's accurate, it'll be sometime in 2022 before the industry gets back to normal.

We've seen pretty much every market where the end product uses a chip suffer in the global shortage, with automobiles, PC hardware, and games consoles hit hardest.

The biggest issue has been Covid-19 and the stay-at-home orders. With most of the world suddenly switching to remote working, and home-based entertainment products becoming the norm as everything from bars to cinemas pauses trading, demand for PCs, laptops, TVs, consoles, etc. reached unprecedented levels.

Exacerbating the issue are increasingly complex manufacturing processes that make chips harder to produce, the larger number of chips in every device, logistical problems, and package shortages. The China/US trade war also saw companies trying to stockpile chips in advance, putting further pressure on manufacturers.

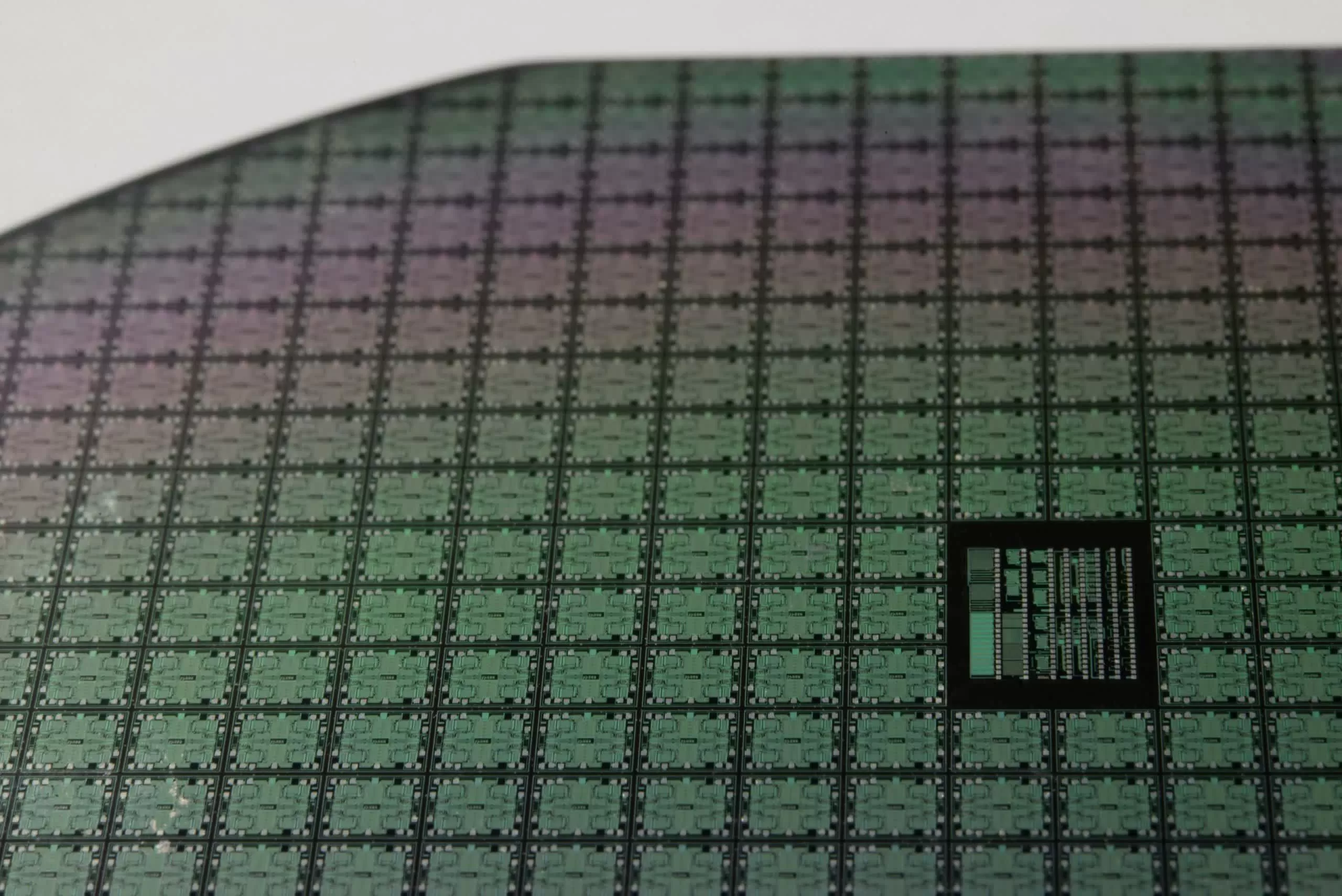

Wafer capacity leaders

| Company | Monthly wafer manufacturing capacity | Total global capacity share |
| --- | --- | --- |
| Samsung | 3.1 million | 14.7% |
| TSMC | 2.7 million | 13.1% |
| Micron Technology | 1.9 million+ | 9.3% |
| SK Hynix | ~1.85 million | 9% |
| Kioxia | 1.6 million | 7.7% |
| Intel | 884,000 | ~4.1% |

With production already struggling to meet demand over the previous two years, the problem came to a head in 2020.

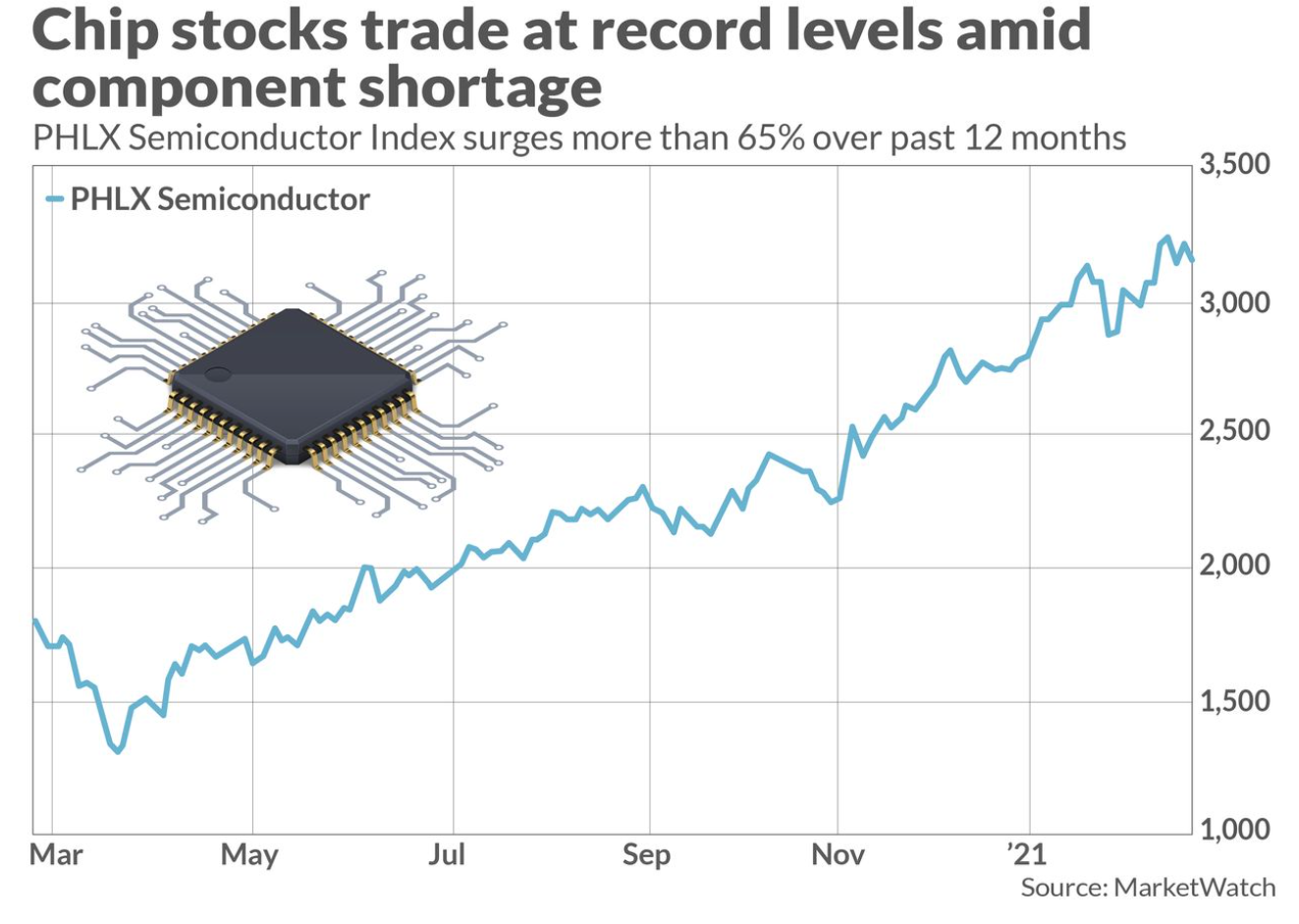

MarketWatch writes that normality is still a long way off. "We believe semi companies are shipping 10% to 30% below current demand levels and it will take at least 3-4 quarters for supply to catch up with demand and then another 1-2 quarters for inventories at customers/distribution channels to be replenished back to normal levels," said Harlan Sur, an analyst with J.P. Morgan.
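To see how Sur's ranges point to 2022, the arithmetic can be sketched in a few lines of Python. This is only an illustration: the starting quarter (Q1 2021) is an assumption based on when the forecast appears to have been made, and the helper `add_quarters` is a hypothetical name, not something taken from the analyst note.

```python
def add_quarters(year, quarter, n):
    """Return the (year, quarter) that falls n quarters after the given one."""
    index = year * 4 + (quarter - 1) + n
    return index // 4, index % 4 + 1


# Assumption: the estimate is counted from Q1 2021 (not stated in the quote).
START = (2021, 1)

# Sur's ranges: 3-4 quarters for supply to catch up with demand,
# then another 1-2 quarters for inventories to be replenished.
supply_catch_up = (3, 4)
inventory_refill = (1, 2)

earliest = add_quarters(*START, supply_catch_up[0] + inventory_refill[0])
latest = add_quarters(*START, supply_catch_up[1] + inventory_refill[1])

print(f"Back to normal between Q{earliest[1]} {earliest[0]} "
      f"and Q{latest[1]} {latest[0]}")
```

Under that assumed starting point, the combined range of four to six quarters lands in 2022, which matches the estimate at the top of the article. A later starting quarter would push the window out accordingly.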

Susquehanna Financial analyst Christopher Rolland said chip shortages would worsen heading into spring, as economies open up following lockdown easing and continuing vaccine rollouts. Lead times, the length of time between an order being placed and its delivery, are entering a "danger zone" of more than 14 weeks, the longest they've been since 2018.

"We do not see any major correction on the horizon, given ongoing supply constraints as well as continued optimism about improving demand in 2H21," wrote Stifel analyst Matthew Sheerin. "We remain more concerned with continued supply disruptions, and increased materials costs, than we do an imminent multi-quarter inventory correction."

While all this might be good news for chipmakers who have seen their stock prices soar, it's leaving consumers frustrated as attempts to buy the latest products prove fruitless. Wafer producers increased their output 40 percent in December, and the Biden administration is getting involved, but this problem isn't going away anytime soon.

https://www.techspot.com/news/88727-global-chip-shortages-expected-last-2022.html