In a nutshell: Could Nvidia's dominance in the AI and high-performance computing hardware segment in China face a challenge from Huawei? According to the chairman of a Chinese AI company, the sanctioned tech giant now has a GPU that offers similar performance to Nvidia's A100 GPU.

Liu Qingfeng, the founder, chairman, and president of Chinese AI firm iFlytek, which supplies voice recognition software, made the claim at the 19th Summer Summit of the 2023 Yabuli China Entrepreneurs Forum (via IT Home).

Liu said Huawei has made major strides in GPUs and has now reached performance parity with Nvidia's A100.

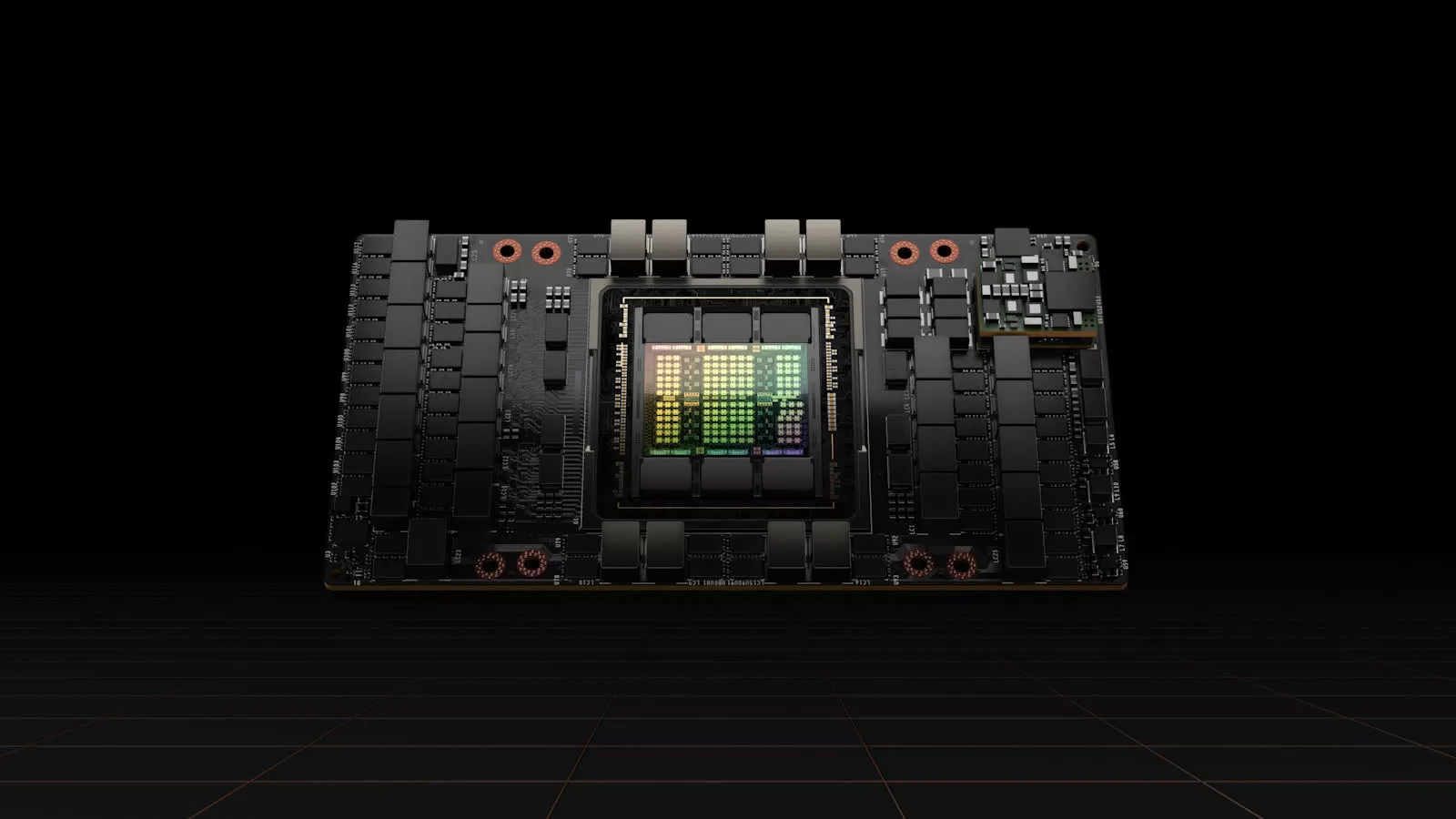

Tens of thousands of Team Green's A100 GPUs, which cost around $10,000 each, were used in a supercomputer to train ChatGPT. Only the Hopper H100 sits above it in Nvidia's AI product stack.

A domestic company producing GPUs equivalent to the A100 would be a major step for China. US sanctions prevent Nvidia from selling the A100 to firms in the country, which led Nvidia to create a cut-down, China-specific version, the A800. Its interconnect speed is 400 GB/s, down from the A100's 600 GB/s. The scarcity of the A100 has also fueled a black market for Nvidia AI chips in China, where they sell for double their MSRP and come without warranties.

Liu added that three Huawei board members are working with a special team at iFlytek. He said China's AI field has no problems with algorithms, but its computing power is held back by its dependence on Nvidia.

Elsewhere, Liu said iFlytek will release a new general-purpose AI model to compete with ChatGPT. It will launch in both English and Chinese versions. While it won't initially be on the same level as OpenAI's product, the company says it should be able to match the GPT-4 LLM by the first half of next year.

iFlytek officially released its Spark cognitive large-scale model in May. It has seven core capabilities: text generation, language understanding, knowledge-based question answering, logical reasoning, mathematical ability, coding ability, and multimodal capability. The company also announced a partnership with Huawei this month to design all-in-one machines for AI.

https://www.techspot.com/news/99948-huawei-alleged-have-ai-gpu-matches-nvidia-a100.html