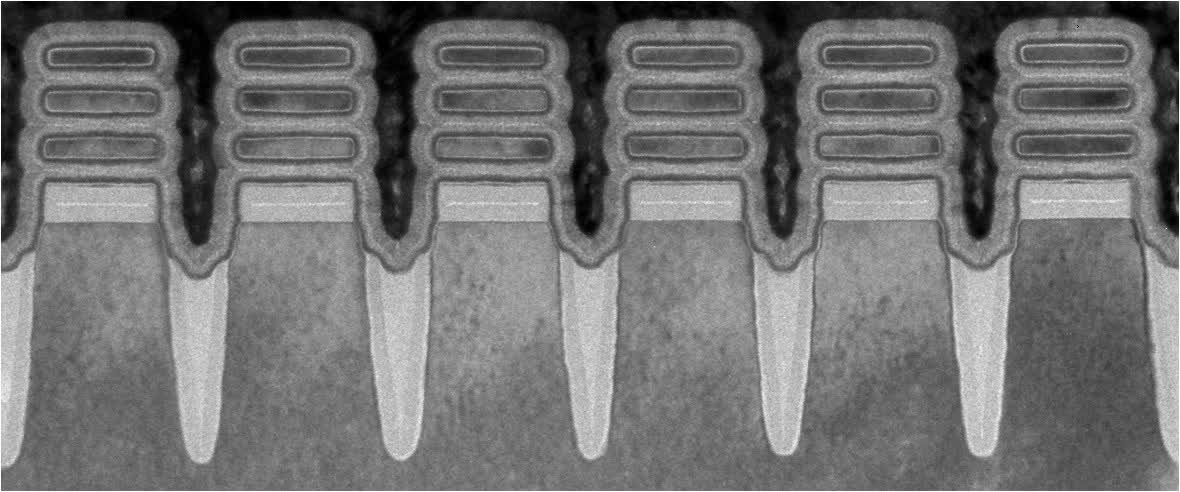

The big picture: IBM has unveiled what it calls the world’s first 2-nanometer (nm) chip technology. According to the company, the new 2nm design is projected to deliver 45 percent higher performance than today’s most advanced 7nm chips at the same power level. Alternatively, it can be configured to match the performance of today’s 7nm chips while consuming 75 percent less energy.

IBM predicts that smartphones built on the new chip technology could get up to four times the battery life of 7nm-based handsets. Laptops with 2nm chips could handle tasks like language translation faster, while self-driving vehicles could gain faster object detection and shorter reaction times, making them safer to use.
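The "four times" battery figure lines up with the 75 percent energy saving quoted earlier. Here is a back-of-the-envelope check under a simplifying assumption (ours, not IBM's): battery runtime $t$ scales inversely with the chip's energy use $E$ for the same workload, and the chip accounts for the phone's entire power draw.

$$
E_{2\text{nm}} = (1 - 0.75)\,E_{7\text{nm}} = 0.25\,E_{7\text{nm}}
\quad\Longrightarrow\quad
\frac{t_{2\text{nm}}}{t_{7\text{nm}}} = \frac{E_{7\text{nm}}}{E_{2\text{nm}}} = \frac{1}{0.25} = 4
$$

In a real handset the display, radios and other components also draw power, so the 4x figure works best as a ceiling. That fits IBM's "up to" wording.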

As AnandTech’s Dr. Ian Cutress reminds us, however, the “2nm” label doesn’t correspond to an actual 2nm measurement on the chip. As he explains:

> In the past, the dimension used to be an equivalent metric for 2D feature size on the chip, such as 90nm, 65nm, and 40nm. However with the advent of 3D transistor design with FinFETs and others, the process node name is now an interpretation of an ‘equivalent 2D transistor’ design.
>
> Some of the features on this chip are likely to be low single digits in actual nanometers, such as transistor fin leakage protection layers, but it’s important to note the disconnect in how process nodes are currently named.

The real talking point, then, is transistor density. IBM expects the new technology to fit as many as 50 billion transistors on a chip the size of a fingernail.

IBM, as you may know, doesn't manufacture chips in volume. As Cutress highlights, it is a research operation: its goal with 2nm and other technologies is to develop them, patent them and license them to partners like Intel and Samsung, and it is very good at that. Earlier this year, IBM topped the US patent list for the 28th year in a row, with more than 9,100 patents granted.

A chief engineer at IBM told Cutress that products based on the new technology could reach the market in late 2024, with higher volumes following in 2025.

https://www.techspot.com/news/89579-ibm-new-2nm-chip-design-promises-higher-performance.html