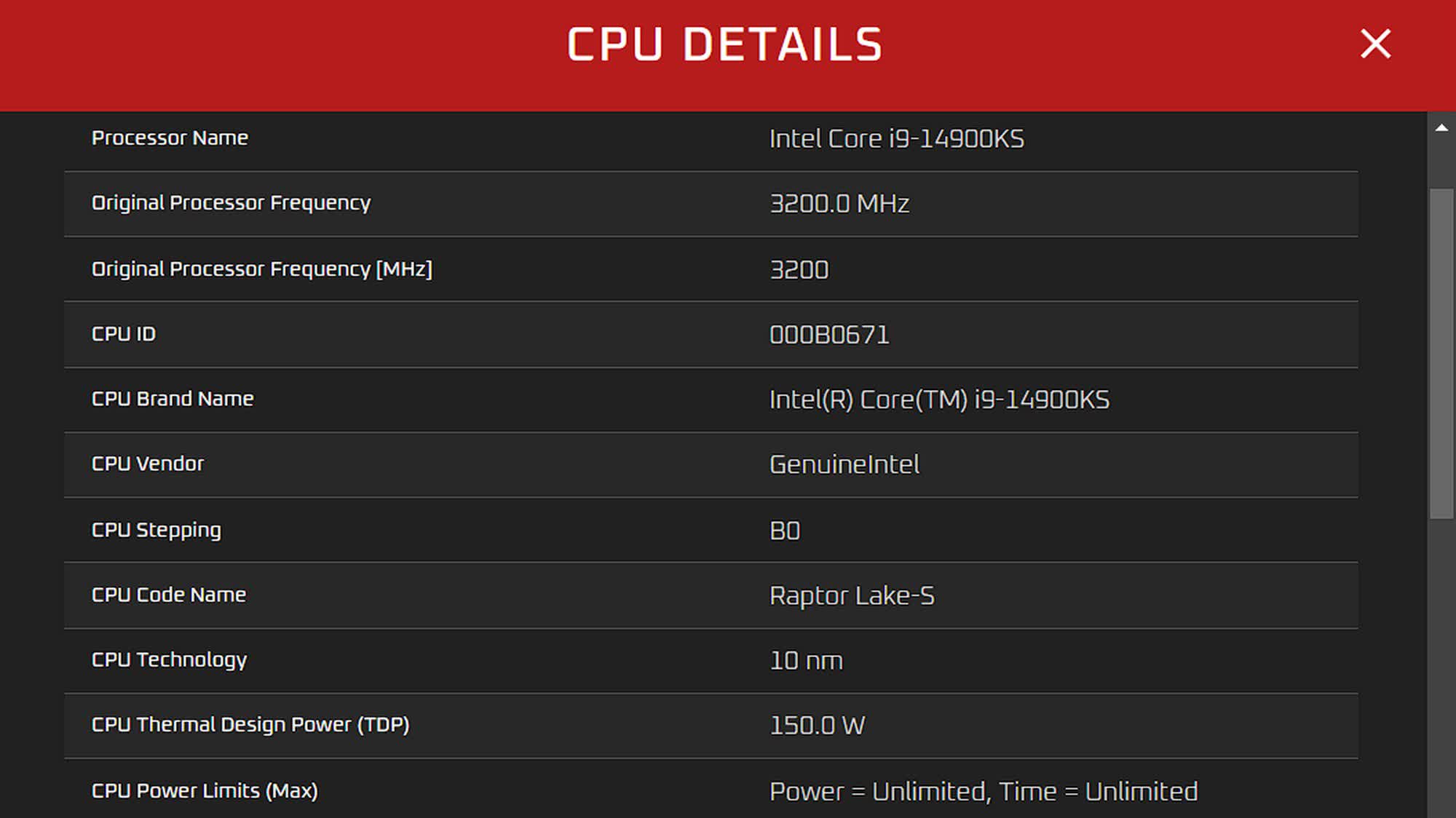

The big picture: Intel is expected to launch its flagship Core i9-14900KS Limited Edition CPU later this year, and a recent leak appears to reveal some of its key technical specifications. The forthcoming chip is believed to be a slightly enhanced version of the Core i9-14900K, which was introduced last year as part of the Raptor Lake Refresh lineup.

The Core i9-14900KS specifications were disclosed when the chip appeared in the OCCT database. According to the listing, the 14900KS will have 24 cores, comprising eight P-cores and 16 E-cores, and will support 32 threads, making it identical to the existing 14900K in core and thread count.

The new chip is reported to feature a 3.2 GHz base clock, mirroring that of the 14900K. Other notable specifications of the 14900KS include 36 MB of L3 cache and 32 MB of L2 cache, which align with the specifications of the 14900K.

The listing also reveals a few upgrades over the standard 14900K, including a 6.2 GHz boost clock, which is 200 MHz higher than that of the existing variant. The upcoming chip will also feature a 150W base TDP, a 25W increase over the 14900K's 125W. Peak power consumption, however, could reach a substantial 410W, with an average power draw of 330W.
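To put those differences in perspective, the short sketch below works out the absolute and percentage changes between the two chips. It uses only the figures reported in the listing; the 14900K's 6.0 GHz boost clock is inferred from the "200 MHz higher" statement, and the dictionary keys and the `spec_deltas` helper are illustrative names, not part of any Intel or OCCT tool.

```python
# Figures as reported in the leaked listing (unconfirmed by Intel).
LEAKED_14900KS = {"boost_ghz": 6.2, "base_tdp_w": 150}

# 14900K values; the 6.0 GHz boost is inferred from "200 MHz higher".
CORE_14900K = {"boost_ghz": 6.0, "base_tdp_w": 125}


def spec_deltas(new, old):
    """Return (absolute change, percent change) for each spec in `new`."""
    deltas = {}
    for key in new:
        diff = new[key] - old[key]
        percent = 100 * diff / old[key]
        deltas[key] = (round(diff, 3), round(percent, 1))
    return deltas


deltas = spec_deltas(LEAKED_14900KS, CORE_14900K)
for name, (diff, percent) in deltas.items():
    print(f"{name}: +{diff} ({percent}% higher)")
```

By this arithmetic, the reported boost clock is roughly 3% higher than the 14900K's, while the base TDP rises by 20%.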

Due to the high power consumption, the 14900KS is expected to run fairly hot, reaching temperatures of up to 101 degrees Celsius. These high temperatures suggest that users will need to invest in premium cooling solutions to ensure they can fully leverage the capabilities of their flagship CPU without experiencing thermal throttling.

As of now, there's no official word on when Intel will release the new Raptor Lake Refresh CPU, but it's likely to be sooner rather than later. Online speculation suggests that it could be priced around the $800 mark, similar to the 13900KS, but we can't confirm this until Intel officially announces it. Either way, the 14900KS is expected to be a powerhouse, and we eagerly await its official debut.

https://www.techspot.com/news/101867-intel-core-i9-14900ks-leak-reveals-62-ghz.html