Something to look forward to: On Thursday, Intel released design documents detailing its Gen11 SoC graphics architecture. The information posted on its website outlines what to expect from Gen11, which the company said last December would be twice as fast as the previous Gen9 integrated graphics engine.

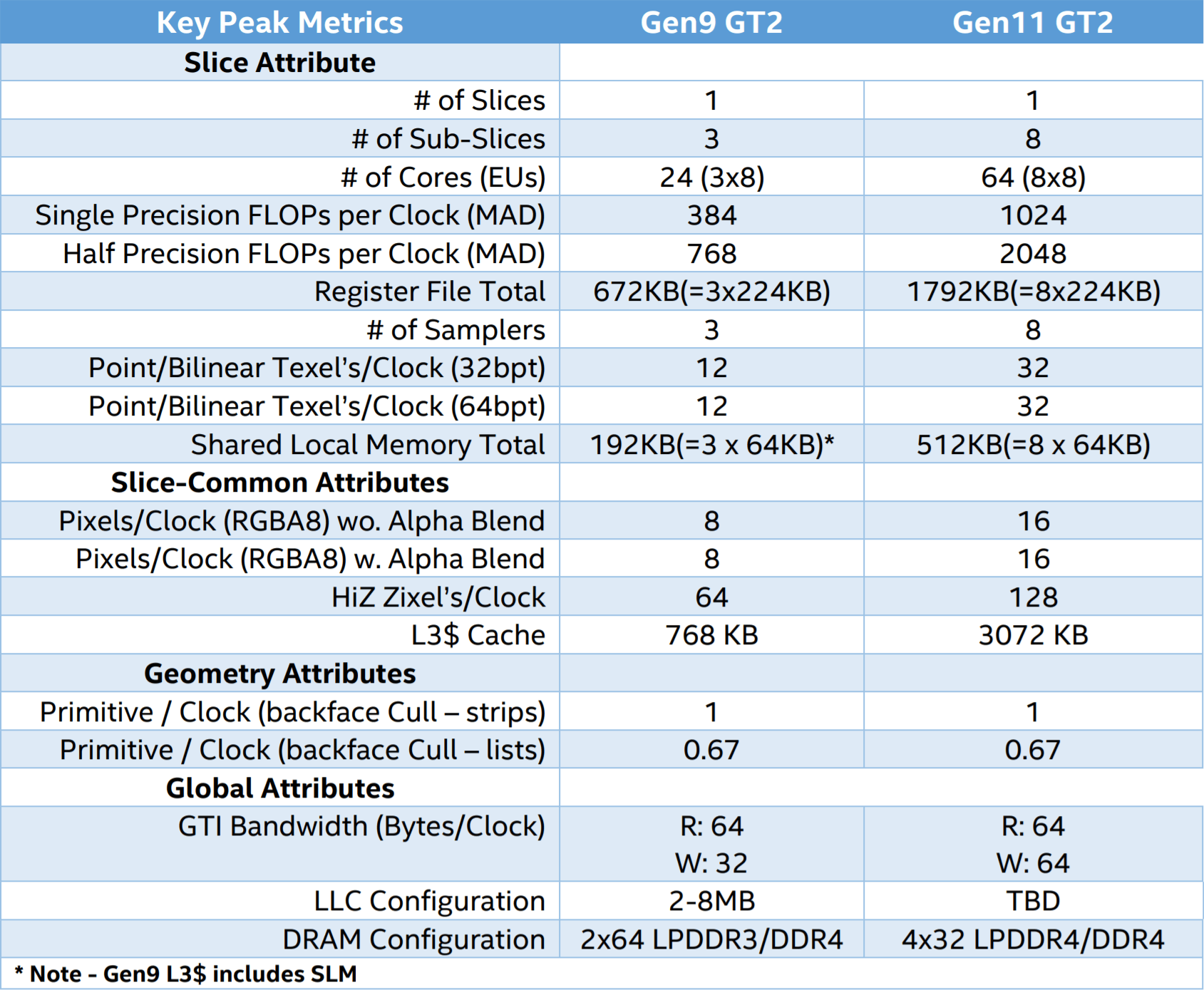

The new architecture will make its first appearance in Intel’s upcoming 10nm Ice Lake processors. The chip maker said it is aiming for one teraflop of 32-bit and two teraflops of 16-bit floating-point performance with the new engine.

According to Intel, Gen11 will be built on its 10nm process with third-generation FinFET technology, and it will support all common APIs as well as Adaptive Sync. The architecture's memory interface allows for up to 4x32-bit LPDDR4/DDR4.

Intel raised the number of sub-slices, each of which houses eight execution units (EUs), from three in Gen9 to eight in Gen11, for a total of 64 EUs. That is a considerable improvement over the previous 24 in Skylake chips. The new engine will process two pixels per clock.
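The EU counts and the teraflop targets can be sanity-checked with some quick arithmetic. The sketch below is only a back-of-the-envelope estimate: the sub-slice and EU figures come from the article, while the per-EU throughput (16 FP32 operations per clock, doubled for FP16) and the 1 GHz clock are assumptions chosen for illustration, not numbers Intel has confirmed here.

```python
# Figures taken from the article.
EUS_PER_SUBSLICE = 8
GEN9_SUBSLICES = 3
GEN11_SUBSLICES = 8

# Assumptions for illustration only (not stated in the article):
# each EU retires 16 FP32 operations per clock (counting a fused
# multiply-add as two operations) and twice that for FP16.
ASSUMED_FP32_OPS_PER_EU_PER_CLOCK = 16
ASSUMED_FP16_OPS_PER_EU_PER_CLOCK = 32
ASSUMED_CLOCK_GHZ = 1.0  # hypothetical GPU clock


def total_eus(subslices, eus_per_subslice=EUS_PER_SUBSLICE):
    """Total execution units for a given number of sub-slices."""
    return subslices * eus_per_subslice


def peak_tflops(eus, ops_per_eu_per_clock, clock_ghz):
    """Theoretical peak: EUs x ops/clock x GHz gives GFLOPS; divide by 1000 for TFLOPS."""
    return eus * ops_per_eu_per_clock * clock_ghz / 1000.0


gen9_eus = total_eus(GEN9_SUBSLICES)
gen11_eus = total_eus(GEN11_SUBSLICES)
fp32_tflops = peak_tflops(gen11_eus, ASSUMED_FP32_OPS_PER_EU_PER_CLOCK, ASSUMED_CLOCK_GHZ)
fp16_tflops = peak_tflops(gen11_eus, ASSUMED_FP16_OPS_PER_EU_PER_CLOCK, ASSUMED_CLOCK_GHZ)

print(f"Gen9 EUs: {gen9_eus}, Gen11 EUs: {gen11_eus}")
print(f"Estimated peak at {ASSUMED_CLOCK_GHZ} GHz: "
      f"{fp32_tflops:.3f} TFLOPS FP32, {fp16_tflops:.3f} TFLOPS FP16")
```

Under those assumptions, 64 EUs land right around the one-teraflop FP32 and two-teraflop FP16 marks Intel is targeting, though actual clocks and sustained throughput will determine real-world results.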

Interestingly, the CPU and GPU will share the last-level cache (LLC). Intel explains that this makes more effective use of memory bandwidth because data does not have to be copied back and forth between the two units. Of course, this is all theoretical, so we’ll have to wait to see if this bears out.

The Gen11 SoC will support coarse pixel shading (CPS) and position-only shading (POSH) to reduce power and bandwidth demands.

CPS reduces the shading rate in portions of the screen where the loss of detail is less noticeable. This method can improve frame rates while reducing rendering overhead.

“[We can use] this technique to lower the total overall power requirements or hit specific frame rate targets by decreasing the shading resolution while preserving the fidelity of the edges of geometry in the scene,” Intel said.

The method is best used on objects that are far from the camera, are in motion, or are on the visual periphery.
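To make the idea concrete, here is a minimal software sketch of coarse shading, assuming a toy `shade` function and a hypothetical rule that treats the outer ring of the screen as periphery. It is not Intel's hardware implementation, and it does not model the edge-preserving behavior Intel describes; it only shows how shading once per 2x2 block cuts the number of shader invocations.

```python
BLOCK = 2  # coarse regions are shaded once per 2x2 pixel block


def shade(x, y):
    """Stand-in for an expensive pixel shader (hypothetical)."""
    return (x * 31 + y * 17) % 256


def render(width, height, is_coarse):
    """Render an image, shading coarse blocks once and other blocks per pixel.

    is_coarse(bx, by) receives a block's top-left pixel and returns True
    if that block may be shaded at the reduced rate.
    Returns the image and the number of shader invocations.
    """
    image = [[0] * width for _ in range(height)]
    invocations = 0
    for by in range(0, height, BLOCK):
        for bx in range(0, width, BLOCK):
            ys = range(by, min(by + BLOCK, height))
            xs = range(bx, min(bx + BLOCK, width))
            if is_coarse(bx, by):
                # One shader call, replicated across the whole block.
                value = shade(bx, by)
                invocations += 1
                for y in ys:
                    for x in xs:
                        image[y][x] = value
            else:
                # Full rate: one shader call per pixel.
                for y in ys:
                    for x in xs:
                        image[y][x] = shade(x, y)
                        invocations += 1
    return image, invocations


def periphery(bx, by, width=8, height=8, border=2):
    """Hypothetical rule: blocks in the outer ring of the screen are coarse."""
    return bx < border or by < border or bx >= width - border or by >= height - border


_, full_rate_calls = render(8, 8, lambda bx, by: False)
_, coarse_calls = render(8, 8, periphery)
print(f"Full rate: {full_rate_calls} shader calls, with CPS: {coarse_calls}")
```

On this 8x8 example, only the central 4x4 region is shaded per pixel, so the shader runs 28 times instead of 64.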

POSH is a tile-based rendering technology. It reduces the required bandwidth by dividing the image into rectangular regions, or tiles, and then rendering them individually. Tiling helps stem the extra write bandwidth that comes with overdrawing pixels.
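The general idea behind tiling can be sketched in a few lines. The example below is a generic illustration of splitting a frame into rectangular tiles and sorting geometry into them, not a description of Intel's POSH pipeline; the function names and the use of bounding boxes in place of real triangles are assumptions made for brevity.

```python
def make_tiles(width, height, tile_w, tile_h):
    """Split the frame into rectangular tiles as (x0, y0, x1, y1), max-exclusive."""
    tiles = []
    for y0 in range(0, height, tile_h):
        for x0 in range(0, width, tile_w):
            tiles.append((x0, y0, min(x0 + tile_w, width), min(y0 + tile_h, height)))
    return tiles


def overlaps(box, tile):
    """True if two max-exclusive rectangles share at least one pixel."""
    bx0, by0, bx1, by1 = box
    tx0, ty0, tx1, ty1 = tile
    return bx0 < tx1 and bx1 > tx0 and by0 < ty1 and by1 > ty0


def bin_boxes(boxes, tiles):
    """Map each tile to the indices of the bounding boxes that touch it."""
    return {tile: [i for i, box in enumerate(boxes) if overlaps(box, tile)]
            for tile in tiles}


# Hypothetical scene: three primitives, represented by screen-space bounding boxes.
boxes = [(1, 1, 3, 3), (2, 2, 6, 6), (5, 5, 7, 7)]
tiles = make_tiles(8, 8, 4, 4)
bins = bin_boxes(boxes, tiles)

for tile, members in bins.items():
    print(f"tile {tile}: primitives {members}")
```

Once geometry is binned this way, each tile can be rendered on its own from a small working set, and overdrawn pixels can be resolved inside the tile before the final result is written out, which is where the bandwidth saving comes from.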

Intel’s Gen11 integrated graphics should be a considerable step up from the previous generation. If you want to dig into all the nitty-gritty details, Intel has posted a white paper on its website.

Intel has Ice Lake Core processors slated for release around the 2019 holiday season.

https://www.techspot.com/news/79326-intel-quietly-posted-details-gen-11-graphics-architecture.html