What just happened? Intel has posted a perplexing image of an enormous, socketed graphics processor. No one knew what it was until Intel’s Raja Koduri called it the “father of all,” an in-house name for a specific Xe GPU. Intel Xe is an upcoming family of GPUs that spans nearly every market you can imagine.

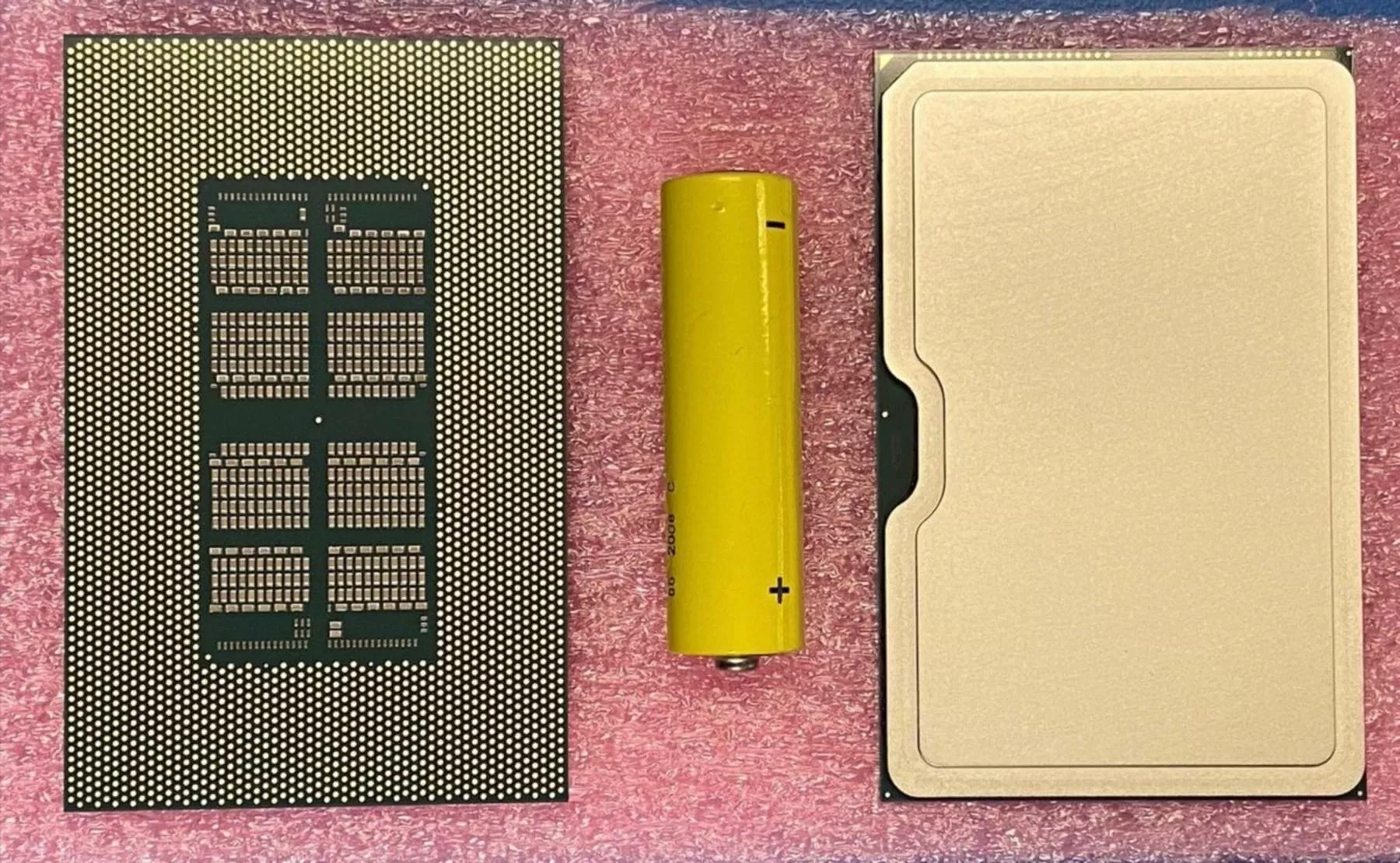

This graphics processor is strange. As the image shows, from the outside it looks more like a CPU than a GPU: it sits in a socket with almost three thousand pins and has an integrated heat spreader.

Inside, it’s like nothing we’ve seen before. Using the AA battery for scale, the package measures about 4,000 mm². Even if only half of that is active silicon, that’s roughly 2,000 mm², nearly three times the 754 mm² die of the RTX 2080 Ti. It has “tens of billions” of transistors, while the RTX 2080 Ti has just under twenty billion.
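The die-size comparison in the paragraph above is simple arithmetic. The short sketch below reproduces it, assuming the article’s ~4,000 mm² package estimate, its hypothetical 50% active-area fraction, and the published 754 mm² die area of the TU102 GPU in the RTX 2080 Ti. The constant names and the `area_ratio` helper are illustrative only.

```python
# Rough die-area comparison (a sketch, not a measurement).
# Assumptions: PACKAGE_AREA_MM2 is the article's estimate scaled against an
# AA battery; ACTIVE_FRACTION is the article's hypothetical "half that size";
# TU102_AREA_MM2 is the published die area of the RTX 2080 Ti's GPU.

PACKAGE_AREA_MM2 = 4000.0  # estimated package area
ACTIVE_FRACTION = 0.5      # hypothetical share of the package that is silicon
TU102_AREA_MM2 = 754.0     # RTX 2080 Ti (TU102) die area


def area_ratio(package_mm2: float, active_fraction: float, reference_mm2: float) -> float:
    """Return the estimated active silicon area divided by a reference die area."""
    return package_mm2 * active_fraction / reference_mm2


ratio = area_ratio(PACKAGE_AREA_MM2, ACTIVE_FRACTION, TU102_AREA_MM2)
print(f"{ratio:.2f}x the RTX 2080 Ti die")
```

Running it prints `2.65x the RTX 2080 Ti die`, close to but a little under three times. Because the 50% active-area figure is only a guess, the result is best read as a ballpark comparison.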

But what sort of GPU is it? For now, the processor eludes classification because the information Intel has provided is contradictory. Koduri previously said that the “father of all” is an Xe HP (high performance) product. He later mentioned that the chip supports BF16, a very niche AI acceleration compute format. BF16 is a confirmed, exclusive feature of Xe HPC (high performance compute), so this chip could instead be an Xe HPC product...

This was called the “baap of all” by our team....The “Baahubali of all” is baking as well. Let’s hope the wait will be shorter than what @ssrajamouli put us through for @BaahubaliMovie :) https://t.co/psWsIL5rnp

— Raja Koduri (@Rajaontheedge) May 2, 2020

Baap means father in Hindi, and Baahubali is the heroic title character of S. S. Rajamouli’s epic film series, which Koduri references in his tweet. Xe is being developed in India.

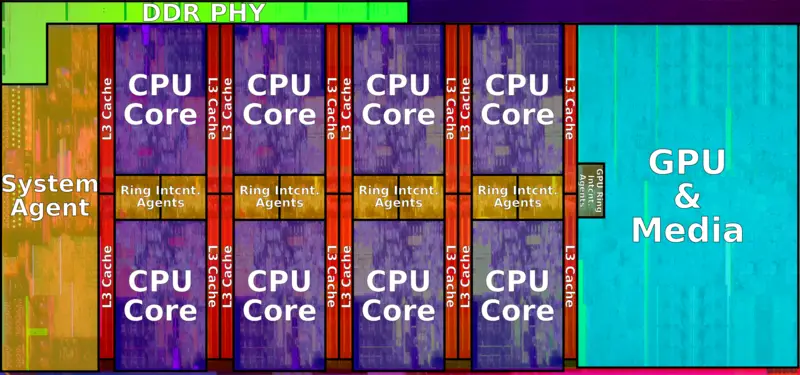

The difference between the two is substantial. Xe HP is oriented towards gaming and workstations, and leaks pin its core counts at between 1024 and 4096. Xe HPC is for servers and is optimized for AI and scientific work. The only HPC product under development, codenamed Ponte Vecchio, is said to have 1024 cores, yet it is by far the most powerful accelerator Intel is developing, thanks to its complex memory subsystem and the variety of cores and operations it supports. You can read more about this in our in-depth breakdown on Xe.

While neither HP nor HPC can be ruled out definitively, the latter seems the better fit for the mystery processor. It would be quite strange to prototype a gaming/workstation processor in a socketed format, whereas Ponte Vecchio has already been depicted in what could be a socket.

Follow-up story: Intel’s Raja Koduri confirms that massive ‘father of all’ GPU is aimed at the data center

Early schematics and presentations also suggest that Ponte Vecchio could be unprecedentedly large, owing to its high-footprint RAMBO cache and onboard HBM2 (or HBM2E) memory. There is no reason to expect an Xe HP product to be so large.

Whatever this GPU turns out to be, the “father of all” will undoubtedly have the mother of all price tags, so you’d better start saving. Xe HP is due sometime this year, and Ponte Vecchio should be ready in late 2021.

https://www.techspot.com/news/85073-intel-tweets-photo-biggest-weirdest-gpu-ever-made.html