What just happened? AMD officially announced the Radeon RX 7800 XT and Radeon RX 7700 XT graphics cards. We have all the details, including specifications, launch date, pricing, and AMD's performance claims – plus additional information on FSR 3, Anti-Lag+, Hypr-RX, and more. So let's get started.

Pricing, Specs and Availability

Let's begin with the two new graphics cards, which will be available on September 6th. The RX 7800 XT will be priced at $500 US, while the RX 7700 XT is slightly cheaper at $450 US. Both sit in the middle of the current market, in direct competition with Nvidia's GeForce RTX 4070 and RTX 4060 Ti.

These new cards are based on AMD's chiplet-based RDNA3 Navi 32 GPU. It has one graphics compute die (GCD) in the middle, smaller than the GCD used in Navi 31 but still built on TSMC's N5 node, flanked by four memory cache dies (MCDs) built on TSMC's N6 process. So, from a GPU design perspective, Navi 32 is more similar to the RX 7900 XTX and 7900 XT than to the RX 7600, which uses a monolithic Navi 33 die.

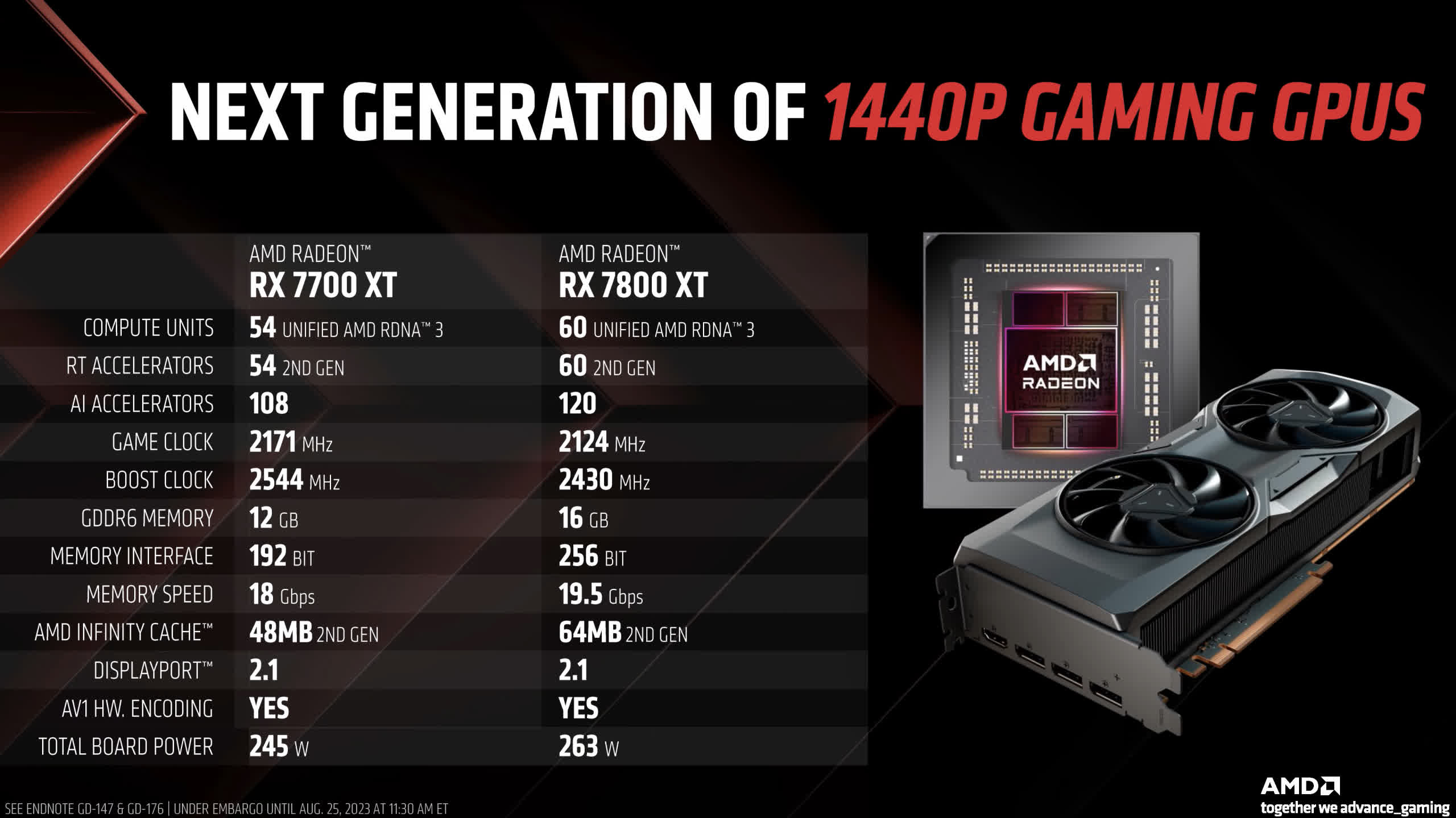

The 7800 XT uses a fully unlocked Navi 32 die with 60 compute units and all four MCDs, giving it a 256-bit memory bus and 64 MB of Infinity Cache paired with 16GB of GDDR6 memory at 19.5 Gbps. The RX 7700 XT, meanwhile, is cut down to 54 compute units and three of the four MCDs, for a 192-bit memory bus and 48 MB of Infinity Cache. That means a 12GB GDDR6 configuration, and the memory is also clocked slightly lower than on the 7800 XT, at 18 Gbps. Both cards have a similar game clock, 2171 MHz for the 7700 XT and 2124 MHz for the 7800 XT, although the 7700 XT's rated boost clock is somewhat higher. The game clock is typically the more realistic reflection of in-game clock speeds.

In addition, both models support RDNA3 features such as AV1 video encoding and DisplayPort 2.1. Board power ratings are mid-tier, as expected: the 7800 XT is rated at 263W and the 7700 XT at 245W. Given that AMD is pitting these models against the 200W RTX 4070 and the 160W RTX 4060 Ti, this suggests Navi 32 is less efficient than Nvidia's AD104 and AD106 silicon.

RX 7700 XT Performance Claims

Based on specifications alone, the RX 7700 XT's price raises some concerns. The 7700 XT is 10 percent cheaper than the 7800 XT for a 10-percent reduction in compute units, which sounds reasonable. However, its memory system is cut back far more: with a 192-bit bus and slower memory, the 7700 XT has 31 percent less memory bandwidth than the 7800 XT.
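The 31-percent figure follows from the usual peak-bandwidth formula for GDDR memory: bus width in bits times the per-pin data rate, divided by eight bits per byte. A minimal sketch using the specs quoted above; it covers raw DRAM bandwidth only and ignores Infinity Cache, which raises effective bandwidth on both cards:

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak DRAM bandwidth in GB/s: bus width (bits) x per-pin rate (Gbps) / 8."""
    return bus_width_bits * data_rate_gbps / 8

rx_7800_xt = peak_bandwidth_gbs(256, 19.5)  # 256-bit bus, 19.5 Gbps GDDR6
rx_7700_xt = peak_bandwidth_gbs(192, 18.0)  # 192-bit bus, 18 Gbps GDDR6
reduction = 1 - rx_7700_xt / rx_7800_xt     # fraction of bandwidth lost on the 7700 XT

print(f"RX 7800 XT: {rx_7800_xt:.0f} GB/s")  # 624 GB/s
print(f"RX 7700 XT: {rx_7700_xt:.0f} GB/s")  # 432 GB/s
print(f"Reduction: {reduction:.1%}")         # 30.8%
```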

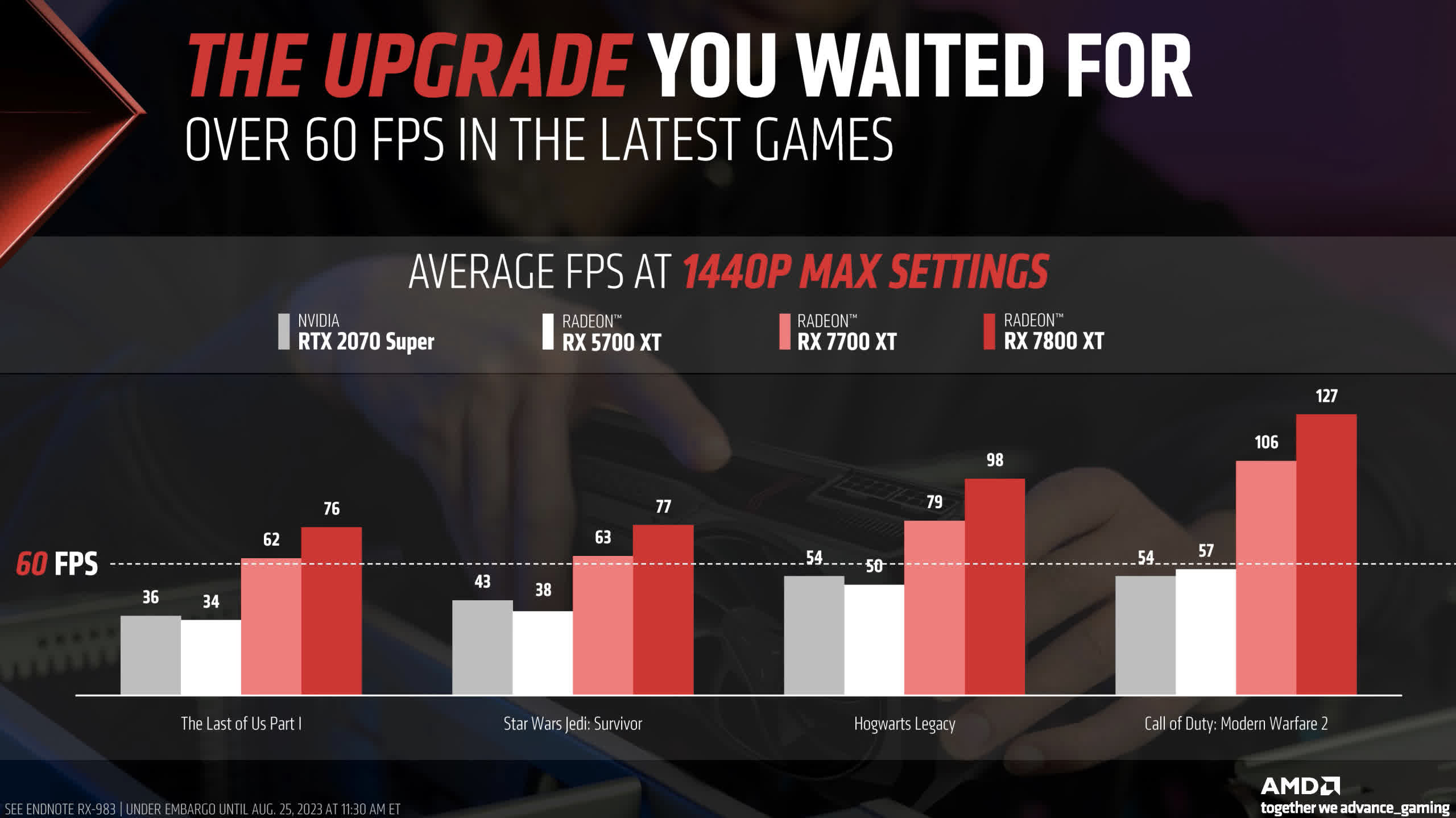

Realistically, the performance gap between these two models will be more than 10 percent. That's certainly what AMD is expecting based on this slide, where the 7800 XT is, on average, 22 percent faster than the 7700 XT, albeit across just four games.

If this is an accurate reflection of performance, the 7800 XT will be around 20 percent faster for roughly a 10-percent price premium, giving it a better cost per frame. This looks very similar to the 7900 XT versus the 7900 XTX at launch, when the XTX was only around 10 percent more expensive for 20 percent more performance. We'll have to wait and see where the final benchmarks land in our testing, but our initial assessment points toward the 7700 XT being dead on arrival, at least within AMD's own line-up.
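Cost per frame here is simply price divided by relative performance. The sketch below normalizes the 7700 XT to 1.00 and takes AMD's claimed 22-percent average gap at face value, which is a first-party, four-game figure rather than our measurement. Measured against the 7700 XT's price, the 7800 XT's premium works out to about 11 percent:

```python
def cost_per_frame(price_usd: float, relative_perf: float) -> float:
    """Dollars per unit of relative performance (lower is better)."""
    return price_usd / relative_perf

# Relative performance normalized to the RX 7700 XT; 1.22 is AMD's claimed average gap
cpf_7700_xt = cost_per_frame(450, 1.00)
cpf_7800_xt = cost_per_frame(500, 1.22)

price_premium = 500 / 450 - 1               # extra cost of the 7800 XT over the 7700 XT
cpf_saving = 1 - cpf_7800_xt / cpf_7700_xt  # how much cheaper each frame is on the 7800 XT

print(f"Price premium: {price_premium:.1%}")       # 11.1%
print(f"Cost-per-frame saving: {cpf_saving:.1%}")  # 8.9%
```

If the final gap shrinks, so does the saving; at a gap of about 11 percent the two cards would cost the same per frame.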

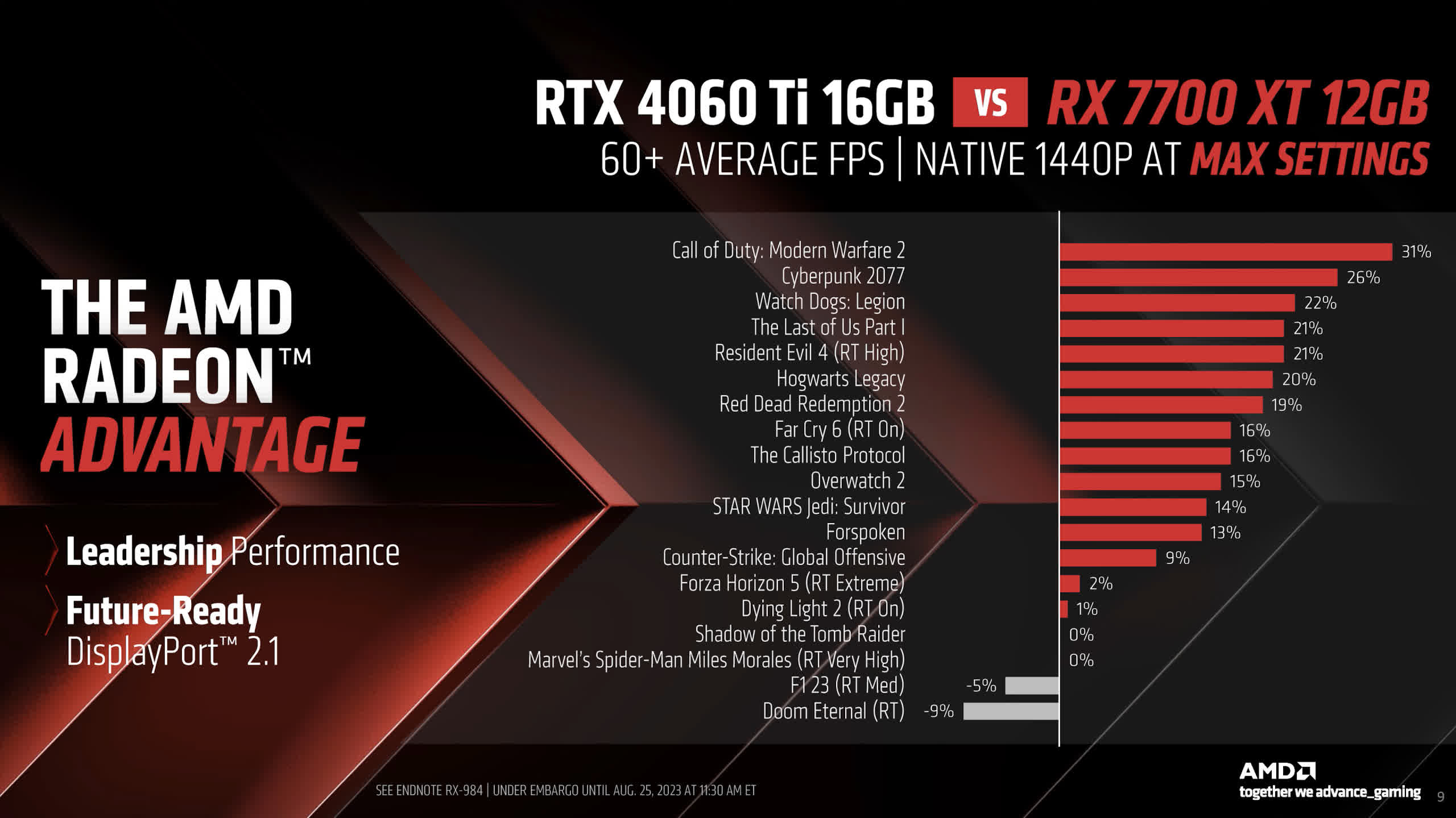

AMD's central performance claims for the 7700 XT compare it to Nvidia's RTX 4060 Ti 16GB. Averaged across AMD's 20-game sample, the 7700 XT comes out 12 percent ahead of the 4060 Ti. However, these are first-party benchmarks, and AMD's claims for this generation haven't always been accurate, so take them with a healthy dose of skepticism. The sample mixes ray-traced and rasterized games; split out separately, AMD is claiming a 17-percent lead in rasterization but a four-percent deficit in ray tracing.

At first glance, this looks reasonable. AMD priced this new card 10 percent below the 4060 Ti 16GB but 13 percent above the 4060 Ti 8GB, both of which we consider awful graphics cards at terrible prices. VRAM capacity also slots in the middle: the 7700 XT offers 4GB more than the anemic 8GB 4060 Ti, but you're paying a premium for that privilege. Conversely, while it is cheaper than the 16GB model, Nvidia has the VRAM advantage there.

AMD might also struggle to sell the 7700 XT against some of its own RDNA2 models, which are still available to purchase. The RX 6800, in particular, currently sells for around $430 to $450, and in our testing, the 6800 is about 16 percent faster than the 4060 Ti in rasterization. That would put it at similar performance to the 7700 XT for a comparable or lower price, while also packing more VRAM, 16GB versus 12GB. It will be a very interesting comparison in our final review, to see precisely where price-to-performance lands, especially if the 7700 XT can't quite reach these performance figures.

Our initial feeling is that the 7700 XT is too expensive and should be a $400 GPU, both to fit in with the rest of the RDNA3 line-up and older RDNA2 models and to offer stronger competition to GeForce. At that price, AMD could have claimed a faster GPU with more VRAM at the same price as the RTX 4060 Ti 8GB. Instead, it has passed up the chance to take a clear advantage in the mid-range.

RX 7800 XT Performance Claims

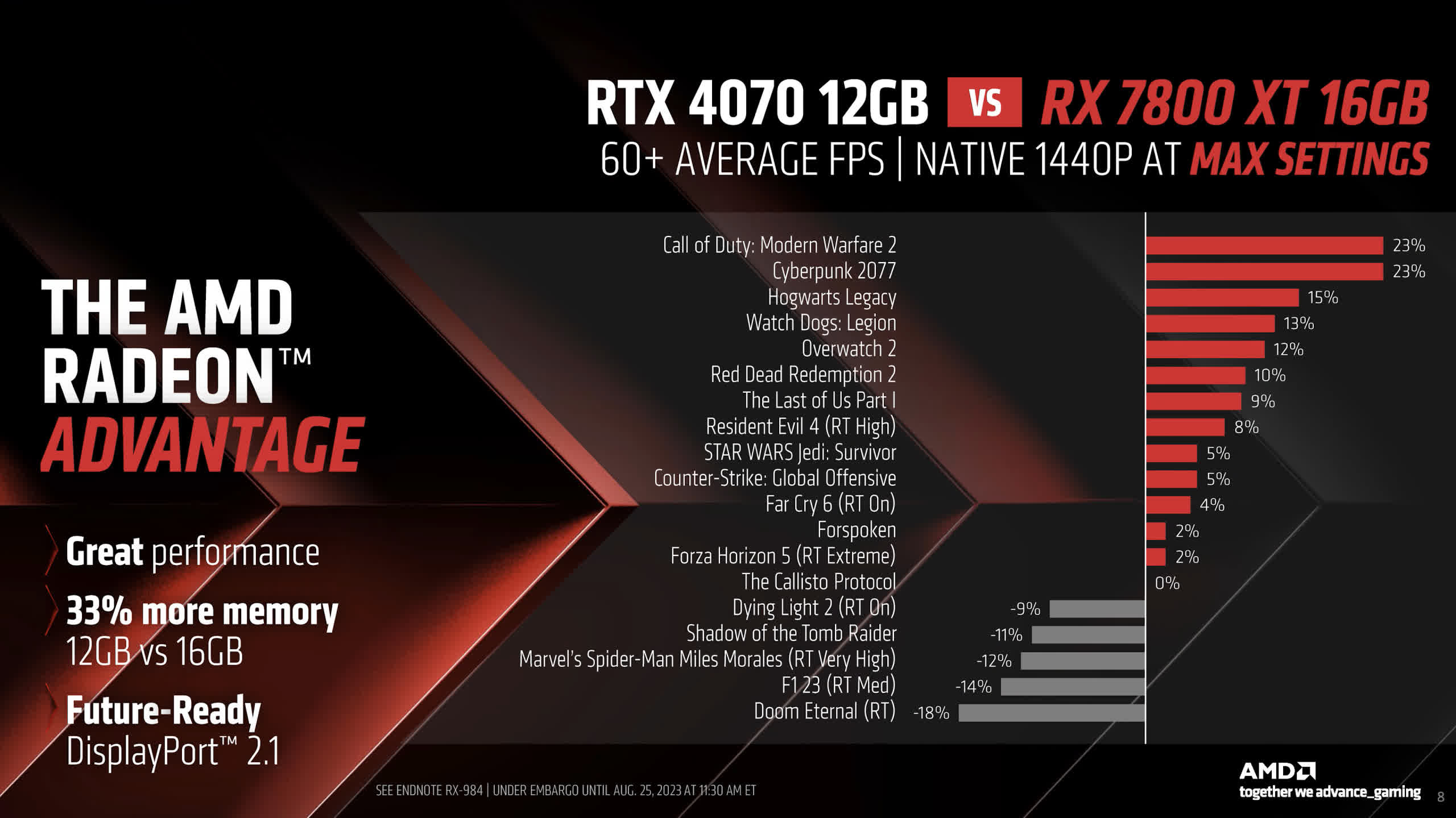

The 7800 XT is pitted against the RTX 4070 in AMD's performance slide, showing a four-percent lead on average across 19 games. That breaks down into a nine-percent advantage in rasterization and a six-percent deficit in ray tracing. This is a more favorable picture than for the 7700 XT, given AMD is offering the 7800 XT at a $100 lower price than the 4070, not to mention the extra VRAM: 16GB versus 12GB on Nvidia's card.

It should also open up a larger performance gap over the RTX 4060 Ti 16GB, which sells at the same price: our testing put the 4070 28 percent ahead of the 4060 Ti, and AMD is claiming a slight lead over the 4070. The obvious thorn in AMD's side in this price tier will be ray tracing performance, which AMD itself shows trailing the 4070, and that's without testing some of the most intensive RT titles, such as Cyberpunk 2077.
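Chaining the two ratios gives a rough idea of the gap AMD's numbers imply over the RTX 4060 Ti. This multiplies our measured 4070-versus-4060 Ti result by AMD's first-party 4070 claim, so it mixes two different test suites and should be read as a ballpark only:

```python
rtx_4070_vs_4060_ti = 1.28  # our testing: RTX 4070 is 28% faster than the RTX 4060 Ti
rx_7800_xt_vs_4070 = 1.04   # AMD's claim: RX 7800 XT is 4% faster than the RTX 4070

# Relative performance ratios compose by multiplication, not addition (28% + 4% != 33%)
implied = rtx_4070_vs_4060_ti * rx_7800_xt_vs_4070
print(f"Implied RX 7800 XT lead over RTX 4060 Ti: {implied - 1:.0%}")  # 33%
```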

Where this model could look shaky is against the nearest-priced RDNA2 model, the RX 6800 XT, which currently sells for around $530. Our testing has the RTX 4070 and RX 6800 XT offering similar rasterization performance, while AMD claims a nine-percent raster lead over the 4070. That points to a better cost per frame for the 7800 XT, but it hinges entirely on the gap between it and the 6800 XT. If the gap is near 10 percent, as AMD's figures suggest, the newer 7800 XT has a reasonable value advantage, given it's also slightly cheaper. If the two cards end up roughly equal, however, it's only a slight generational uplift. We'll see how it all plays out in our comprehensive review.
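The two scenarios can be compared directly on cost per frame. This sketch assumes the RX 6800 XT matches the RTX 4070 in rasterization, as in our testing, and uses the approximate $530 street price; the 1.09 factor is AMD's raster claim, not a measurement:

```python
PRICE_RX_7800_XT = 500
PRICE_RX_6800_XT = 530  # approximate current street price

# RX 7800 XT raster performance relative to the RX 6800 XT (6800 XT = 1.00)
scenarios = {
    "AMD's claimed ~9% lead": 1.09,
    "performance parity": 1.00,
}

results = {}
for name, relative_perf in scenarios.items():
    cpf_new = PRICE_RX_7800_XT / relative_perf
    cpf_old = PRICE_RX_6800_XT / 1.00
    results[name] = 1 - cpf_new / cpf_old  # cost-per-frame advantage of the 7800 XT
    print(f"{name}: {results[name]:.1%} lower cost per frame")
```

Under AMD's figures the 7800 XT comes out roughly 13 percent cheaper per frame; at parity, the advantage shrinks to the roughly 6 percent that comes from its lower price alone.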

At least on the graphics card side of things, what AMD is showing looks reasonable: not must-buys, not awfully inadequate models, just okay. Again, from what AMD has shown, the 7800 XT looks better than the 7700 XT, but for both GPUs it will come down to final performance, features, and value. There are many decent options in this price range, including the RX 6800 XT, RX 6800, RX 6700 XT, and the RTX 4070, probably Nvidia's best RTX 40 series GPU outside the 4090. One thing AMD does have going for it with this launch: the 7700 XT takes the crown as the cheapest current-generation AMD or Nvidia GPU with more than 8GB of VRAM, displacing the RTX 4060 Ti 16GB thanks to its $50 lower price tag and 12GB capacity.

AMD Provides More FSR 3 Details

During its Gamescom presentation, AMD also spent some time on new features for Radeon GPUs and games as a whole. One of the biggest is FSR 3, which has been hotly anticipated since the company announced it back in November 2022. Nearly 10 months on, and despite revisiting FSR 3 at Gamescom, AMD still isn't ready to release the feature and hasn't committed to a firm release date. The best we could get from AMD is that it will roll out in "early fall," which could mean as early as September.

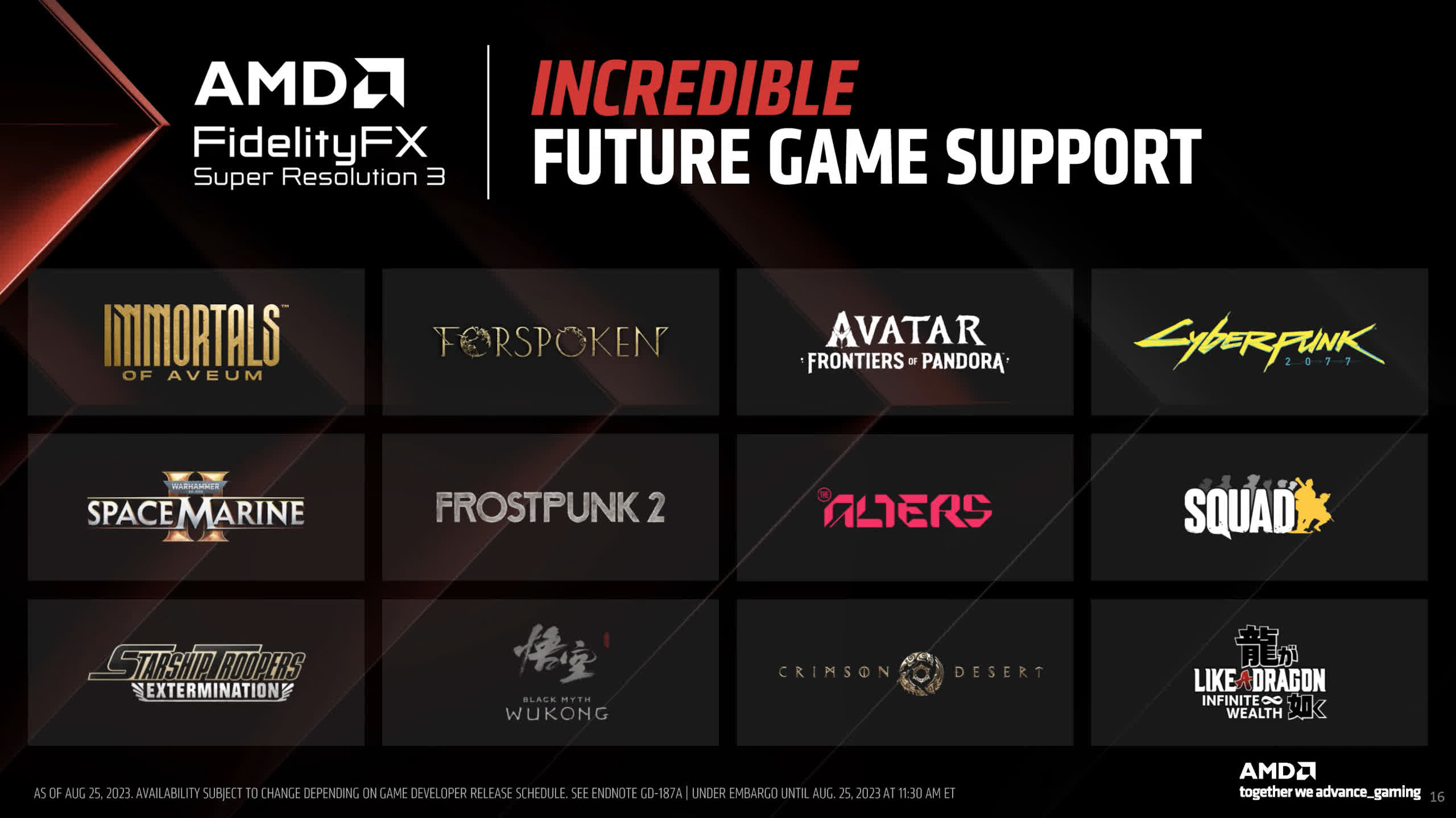

We also learned that contrary to recent rumors, AMD is not aiming to have Starfield as their first FSR 3 launch title. In fact, this presentation did not indicate that FSR 3 would be coming to Bethesda's game at all. Starfield is not listed on AMD's future game support slide, and Bethesda is not listed as a partner for FSR 3. AMD specifically told us that Forspoken and Immortals of Aveum would be the first games to receive FSR 3. That's a bit of a blow, given that Starfield is currently AMD's flagship sponsored title. However, FSR 3 is coming to Cyberpunk 2077, which is good to see given the imminent launch of that game's expansion, Phantom Liberty.

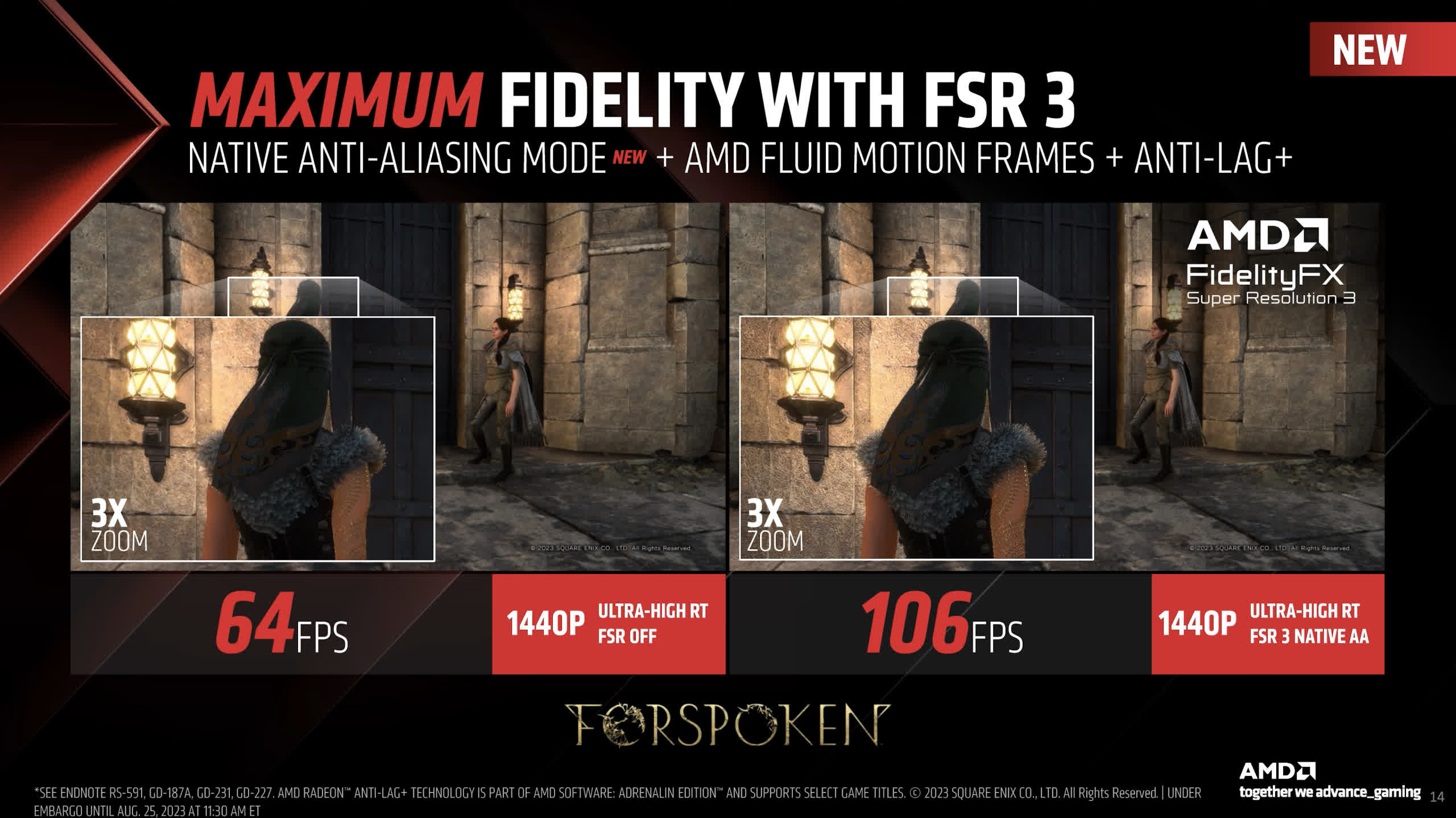

We didn't learn much about FSR 3 beyond what AMD had previously claimed. Its demonstrations show frame generation roughly doubling the frame rate, in line with what the company said last year. As with DLSS 3 frame generation, you don't get precisely double the FPS, but the technology delivers a significant leap in smoothness when enabled. AMD is branding its frame generation tech as "Fluid Motion Frames." As implemented in FSR 3, it uses frame interpolation and motion vectors to generate these additional frames, which sounds quite similar to DLSS 3.
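Why isn't the gain exactly 2x? One simplified way to see it, assuming the generated frame is produced on the same GPU and adds a fixed cost per rendered frame. The 3 ms value is purely illustrative, not an AMD or Nvidia figure:

```python
def fps_with_frame_generation(base_fps: float, gen_cost_ms: float) -> float:
    """Output FPS when each rendered frame is followed by one generated frame.

    Simplified model: rendering and frame generation share the GPU, so the time
    to produce each pair of frames is the render time plus the generation cost.
    """
    render_ms = 1000.0 / base_fps
    return 2 * 1000.0 / (render_ms + gen_cost_ms)

print(f"{fps_with_frame_generation(60, 0.0):.0f} fps")  # 120 fps: the ideal doubling
print(f"{fps_with_frame_generation(60, 3.0):.0f} fps")  # 102 fps with a hypothetical 3 ms cost
```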

Where FSR 3 will differ from DLSS 3 is in hardware support. FSR 3 will be available for most graphics cards. When we asked, AMD said support goes back to RDNA1-based GPUs and "a broad range of competitor solutions, including NVIDIA GeForce RTX 20, 30, and 40 Series graphics cards." However, it also recommended using FSR 3 on RDNA2 or RDNA3 GPUs, suggesting the tech is optimized for newer architectures and may suffer on those older cards. It's unclear whether FSR 3 will be blocked entirely from running on GPUs that aren't in this supported list, for example, the trusty old RX 580; it could be a situation where the architecture of older models doesn't include key functionality that FSR 3 requires.

We asked AMD whether FSR 3 uses FSR 2 for temporal upscaling or whether the upscaling component has been upgraded.

"FSR 3 includes the latest version of our temporal upscaling technology, which has been optimized to be fully integrated with the new frame generation technology. However, our main focus with AMD FSR 3 research and development has been on creating high-performance, high-quality frame generation technology that works across a broad range of products."

So it doesn't sound like the temporal upscaler has received a major upgrade, although FSR 3 will ship with the latest version of it. AMD also said it has optimized FSR 3 to reconstruct game UIs, saying that "UI processing is an integral part of our solution to minimize any impact frame generation can have on it."

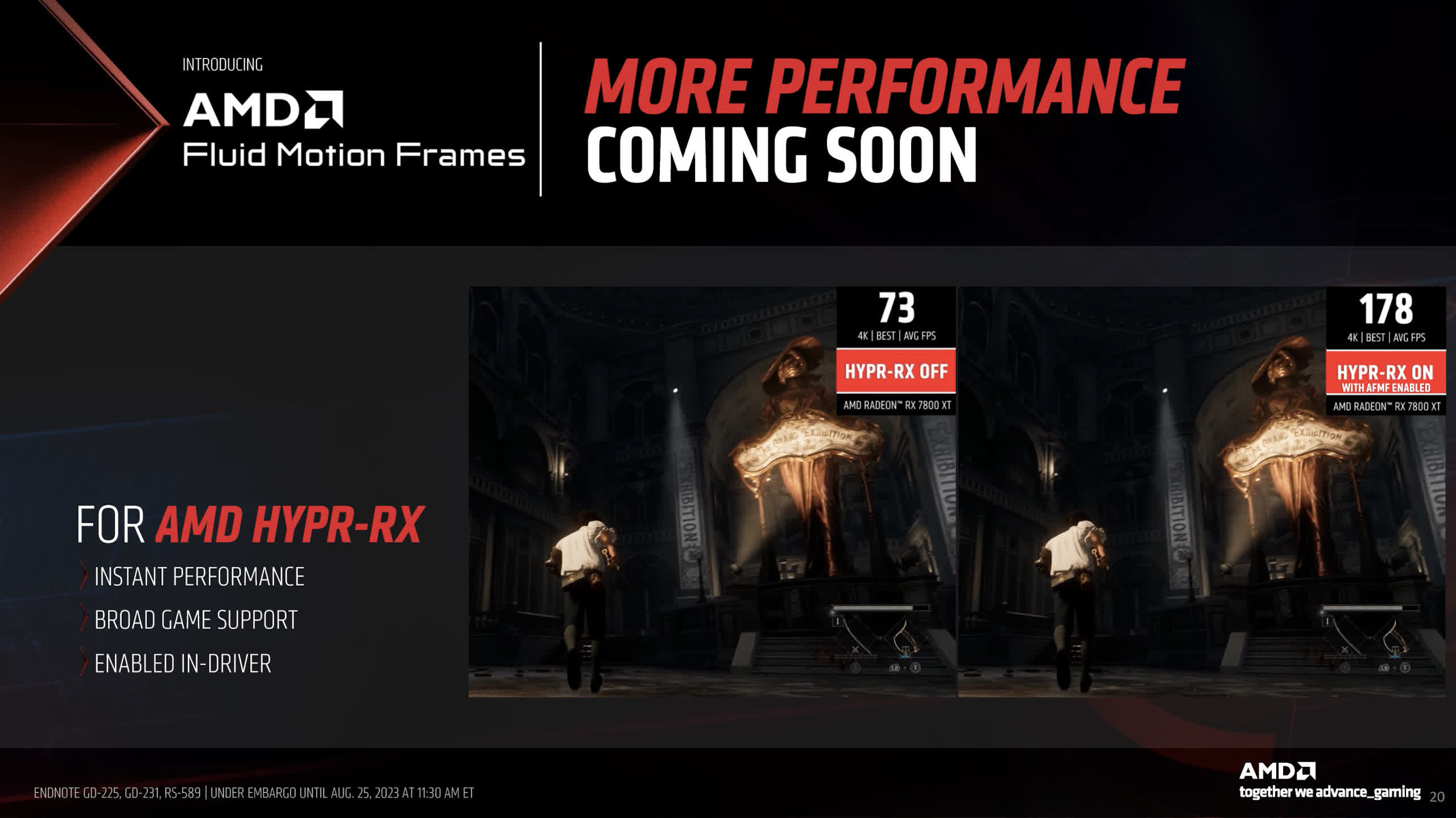

Alongside FSR 3, AMD will integrate frame generation into its driver as a standalone Fluid Motion Frames feature, much as Radeon Super Resolution brought FSR 1 into the driver. This will offer broad game compatibility, letting you use a very rudimentary version of frame generation in any title on your Radeon GPU.

It all sounds good in theory, and we're sure many will comment about how this puts AMD ahead in the "generated frames" race, and plenty of fanboy wars will ensue. However, based on what we've seen with DLSS 3 frame generation, game integration and motion vectors are crucial to generating visually decent frames. Frame interpolation is often the weak link in frame generation, producing blurry, garbled messes in every second frame. With DLSS 3, usually only parts of the screen have to rely on interpolation, with the rest handled primarily using motion vectors and game engine data (e.g., shift this entire frame slightly to the left for the next generated frame).

With AMD saying that Fluid Motion Frames in the driver is just the frame interpolation component (of course it is: the driver cannot access data such as motion vectors), we have pretty substantial question marks over the visual quality of this feature. Without that crucial motion data, will the entire generated frame just be a blurry, smeared mess blending two complete frames together? If it's anything like the interpolation we've seen in DLSS 3, but now applied to the entire generated frame every single time, it could look awful, and we're not sure it will be worth using. So that one will require some pretty heavy scrutiny before we get excited about it.
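To illustrate the concern, here is a toy one-dimensional example contrasting naive blending of two frames with interpolation that knows the motion. It assumes a single object moving uniformly by a known number of pixels. It is not AMD's algorithm, which hasn't been detailed, and real solutions try to estimate motion rather than blend blindly:

```python
def blend(frame_a: list[float], frame_b: list[float]) -> list[float]:
    """Naive in-between frame: per-pixel average, no motion information."""
    return [(a + b) / 2 for a, b in zip(frame_a, frame_b)]

def motion_compensated(frame_a: list[float], motion_px: int) -> list[float]:
    """In-between frame: shift frame_a halfway along a known, uniform motion vector."""
    shift = motion_px // 2
    out = [0.0] * len(frame_a)
    for i, value in enumerate(frame_a):
        j = i + shift
        if 0 <= j < len(out):
            out[j] = float(value)
    return out

frame_a = [0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]  # bright object at x=2
frame_b = [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0]  # same object at x=6

print(blend(frame_a, frame_b))         # two half-brightness ghosts at x=2 and x=6
print(motion_compensated(frame_a, 4))  # one full-brightness object at x=4
```

The blended frame shows the object twice at half brightness, which is the smeared, ghosted look we're worried about; the motion-aware version places a single object where it should be.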

New Anti-Lag Plus Feature for RDNA3

Latency is where it gets a bit complicated. You might have spotted on these FSR 3 slides something called "Anti-Lag+," a new feature being announced. It is separate from FSR 3's built-in latency-reducing technology. So, to be clear, when you enable FSR 3, it will automatically apply latency-reducing tech, but then Radeon owners can use Anti-Lag+ on top of this at the driver level. AMD's slides make it seem like Anti-Lag+ is part of FSR 3, like how Nvidia Reflex is part of DLSS 3 and is enabled when frame generation is toggled on. However, AMD clarified this isn't the case.

Anti-Lag+ is a new driver-level latency-reducing feature available exclusively on RDNA3 GPUs, and presumably on future architectures. It will be available alongside the original Anti-Lag but promises some improvements. AMD explained it to us as follows:

"AMD Radeon Anti-Lag helps synchronize CPU and GPU processing during gameplay to reduce input latency. Anti-Lag's synchronization point, however, is placed at a fixed location in the rendering pipeline. AMD Radeon Anti-Lag+ further reduces latency through intelligent synchronization placement that considers the holistic rendering pipeline. As a result, Anti-Lag+ can further reduce latency where it matters most."

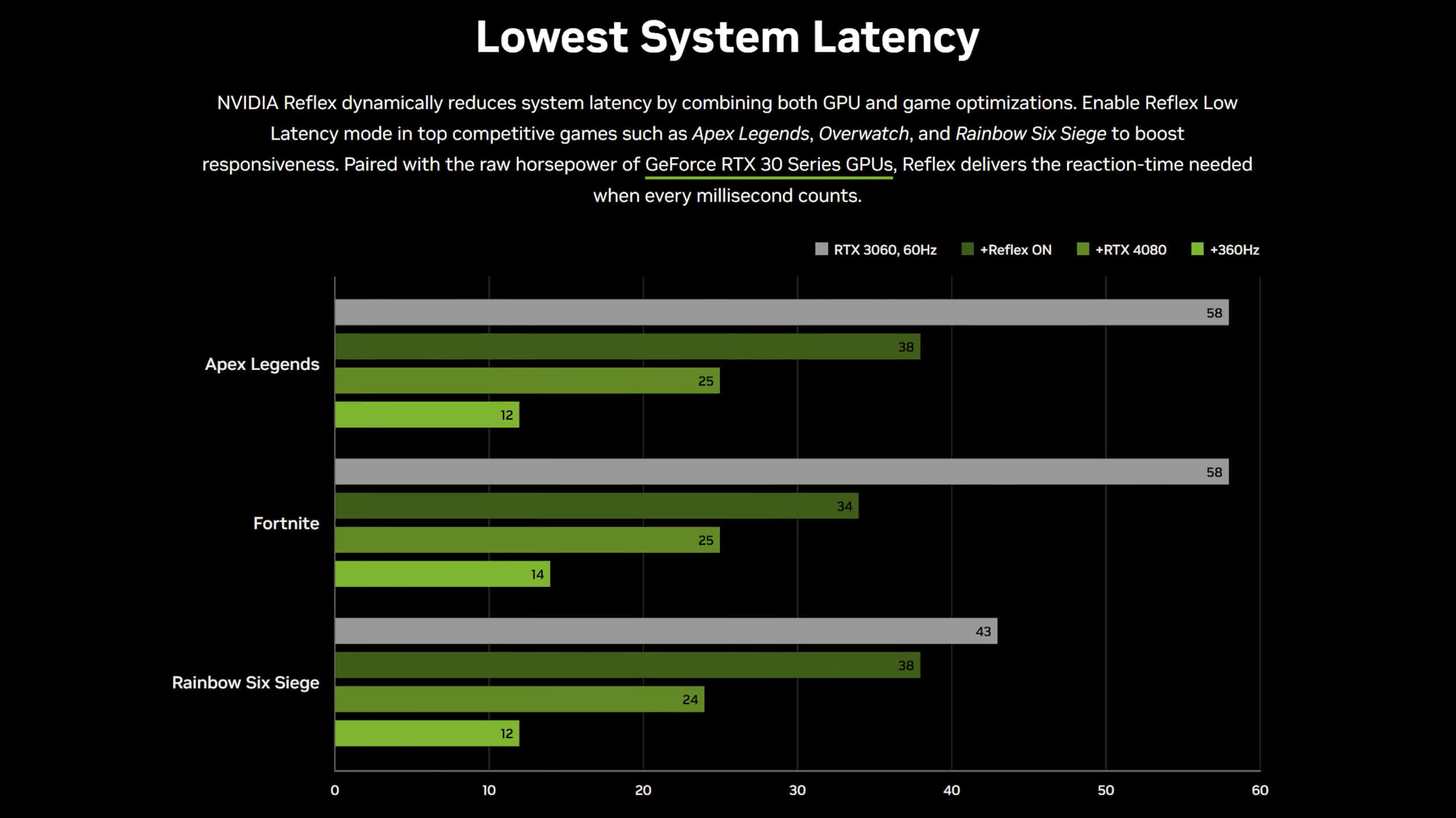

AMD didn't go into specifics, but this sounds more akin to Nvidia Reflex than to its previous Anti-Lag technology. Reflex intelligently adjusts the pipeline to minimize latency, effectively providing the benefits of a dynamic frame cap that slightly alleviates any GPU bottleneck, a proven technique for improving latency. Nvidia's technology is integrated into games and is said to include per-title optimizations. We don't know yet whether Anti-Lag+ works like this, and the implementation differs in that it's driver-side rather than game-side. However, the way AMD has explained it makes it sound like it's trying to achieve something similar. Hopefully it lives up to Reflex, a great technology that, until now, AMD had no competitor for.

It's also unclear whether Anti-Lag+ will work across all games or just a selection of titles. AMD confirmed that it isn't integrated into games but is driver profile-based. The company seemed to suggest only some titles will support Anti-Lag+. We'll have to see how that all works when it's integrated into the driver.

How Anti-Lag, Anti-Lag+, and FSR 3 intertwine is a bit confusing, especially compared to how DLSS 3 and Reflex operate on the Nvidia side. So here is our best explanation of how it all works:

AMD's FSR 3 frame generation technology has built-in latency-reducing technology that works across all GPUs. Gamers using an RX 7000 series GPU can go into the driver and enable Anti-Lag+ on top of FSR 3's latency reduction in supported titles. Anti-Lag+ is not enabled by default in FSR 3 titles; it's an optional feature that users must turn on manually. Owners of older Radeon GPUs can instead apply Anti-Lag (the non-plus version) on top of FSR 3's latency-reducing tech, again as an optional feature.
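The same rules can be written as a small decision function. The architecture strings and the function name are hypothetical, and the RDNA3 fallback to regular Anti-Lag in titles without an Anti-Lag+ profile is our assumption, based on AMD saying the two will be available side by side:

```python
RADEON_ARCHS = {"RDNA1", "RDNA2", "RDNA3"}

def latency_layers(arch: str, anti_lag_plus_profile: bool) -> dict[str, str]:
    """Latency-reduction layers available with FSR 3 frame generation enabled."""
    # FSR 3's own latency reduction applies on every supported GPU, Radeon or not
    layers = {"FSR 3 built-in": "automatic"}
    if arch == "RDNA3" and anti_lag_plus_profile:
        layers["Anti-Lag+"] = "optional driver toggle, off by default"
    elif arch in RADEON_ARCHS:
        # Assumption: RDNA3 falls back to regular Anti-Lag in titles without a profile
        layers["Anti-Lag"] = "optional driver toggle"
    return layers

print(latency_layers("RDNA3", anti_lag_plus_profile=True))
print(latency_layers("RDNA2", anti_lag_plus_profile=True))
print(latency_layers("GeForce RTX 40", anti_lag_plus_profile=True))
```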

"The built-in latency reduction technology in AMD FSR 3 will provide valuable latency reduction when using FSR 3 frame generation, and our in-driver latency reduction solutions are a bonus that stacks on top to provide the best possible experience when using FSR 3," AMD explained.

As for why Anti-Lag+ is only supported on RDNA3, AMD said, "Anti-Lag+ [relies] on Intellectual Property that was introduced with AMD RDNA 3 and is not available on previous generations of hardware. At AMD, we always aim to support previous generations of hardware. […] While we are investigating the ability to do […] Anti-Lag+ in earlier hardware, we cannot commit that this is possible."

We would like to know exactly what intellectual property is specific to RDNA3 that is required for this feature, but we'll have to wait to find out.

For regular FSR 2 users, AMD is releasing a new native anti-aliasing mode, which allows you to use FSR 2 technology without any upscaling applied. This is effectively what Nvidia is doing with DLAA. So, it gives AMD parity with Nvidia in games that integrate this feature. This native AA mode will provide gamers with a higher quality anti-aliasing technique than many games' built-in TAA solution, at some cost to performance, while also featuring FSR's built-in sharpening. Many people have been requesting something like this, so AMD is now delivering it.

Hypr-RX

Lastly, we have Hypr-RX, AMD's one-click toggle to apply all its performance-enhancing features. First announced way back with RDNA3 last year, this feature is finally ready and will be available natively in some games and as a driver toggle. Essentially, it aims to make it easy for novice users to enable features like Radeon Boost, Anti-Lag, Super Resolution, and even Fluid Motion Frames by hitting one button. In-game implementations will use FSR when Hypr-RX is on, while the driver toggle will use Radeon Super Resolution. Gamers can then go in and further customize what features are enabled, so they could turn on Hypr-RX and then disable Boost or RSR if they don't like those features.
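As described, Hypr-RX behaves like a preset: one switch enables a bundle, the upscaler depends on where it's toggled, and users can then switch individual features back off. A hypothetical sketch; the class and field names are ours, not AMD's, and the bundle contents follow AMD's description above:

```python
from dataclasses import dataclass, field

@dataclass
class HyprRXPreset:
    in_game: bool  # True: game's own integration; False: driver toggle
    disabled: set[str] = field(default_factory=set)  # features the user switched back off

    def enabled_features(self) -> list[str]:
        # In-game implementations use FSR; the driver toggle uses Radeon Super Resolution
        upscaler = "FSR" if self.in_game else "Radeon Super Resolution"
        bundle = ["Radeon Boost", "Anti-Lag", upscaler, "Fluid Motion Frames"]
        return [f for f in bundle if f not in self.disabled]

preset = HyprRXPreset(in_game=False, disabled={"Radeon Boost"})
print(preset.enabled_features())
```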

Hypr-RX is the first time that all of these features can be enabled together. Previously, you couldn't use Boost and Radeon Super Resolution simultaneously. AMD has worked around that limitation, the caveat being that, like Anti-Lag+, Hypr-RX is only supported on RDNA3 GPUs and newer. AMD offered the same technical reason for this as with Anti-Lag+, which seems pretty questionable.

We don't think Hypr-RX will be an essential feature for most enthusiast gamers, as they'll probably want to tweak each of AMD's features individually.

To recap: The RX 7800 XT and RX 7700 XT are coming on September 6th, priced at $500 and $450, respectively. AMD FSR 3 is nearly ready and will be debuting in early fall, starting with Forspoken and Immortals of Aveum. Anti-Lag+, Fluid Motion Frames, and Hypr-RX are also coming to AMD's Radeon driver shortly.

https://www.techspot.com/news/99927-amd-finally-targeting-nvidia-radeon-rx-7800-xt.html