Recap: MIT's research highlights the importance of training AI on the right data set. For those fearful of a real-life Skynet, results like these won't help you sleep any easier at night.

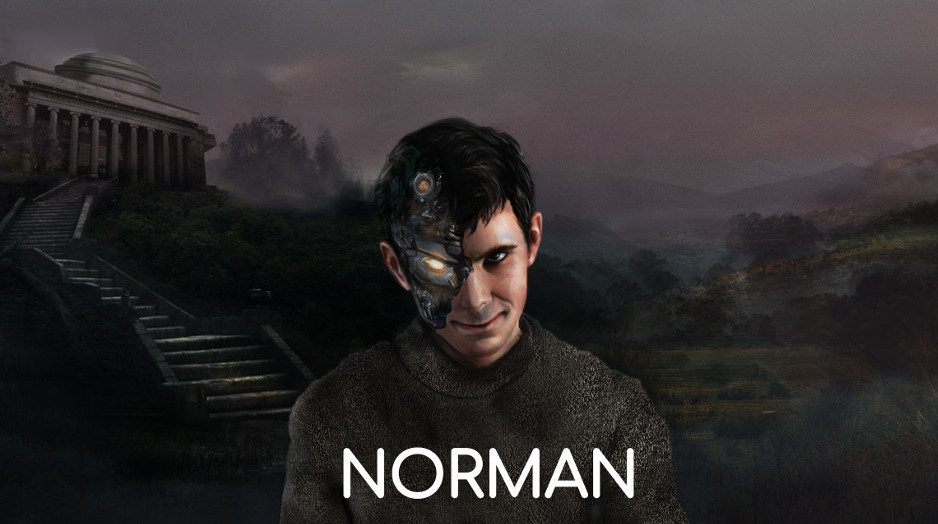

Researchers at MIT’s Media Lab have created what they’re calling the world’s first psychopath AI. Norman (named after a character in Alfred Hitchcock’s Psycho) was trained with data from “the darkest corners of Reddit” and serves as a case study of how biased data can influence machine learning algorithms.

As the team highlights, AI algorithms can see very different things in an image if trained on the wrong data set. Norman was trained to perform image captioning, a deep learning method used to generate a description of an image. It was fed image captions from an “infamous” subreddit that documents the disturbing reality of death (the specific name of the subreddit wasn’t provided due to its graphic nature).

Once trained, Norman was tasked with describing Rorschach inkblots, a common test used to detect underlying thought disorders. Its results were then compared with those of a standard image captioning neural network trained on the MSCOCO data set. The results were quite startling.

Well, alright then.

The researchers note that, due to ethical concerns, they trained Norman only on the image captions; no images of real people dying were used in the experiment.

This isn't the first time we've seen AI exhibit poor behavior. In 2016, if you recall, Microsoft launched an AI chatbot named Tay, modeled after a 19-year-old girl. In less than 24 hours, the Internet managed to corrupt the bot's personality, forcing Microsoft to promptly pull the plug.

https://www.techspot.com/news/74983-mit-trained-ai-algorithm-data-reddit-community-about.html