In context: Intel's Arc A770 is just one step down from the Arc Limited Edition desktop graphics card, and gamers are hoping it will be powerful enough to rival Nvidia's RTX 3070 Ti and AMD's RX 6700 XT. Early benchmarks suggest the Intel part will be weaker than either of the two, but there's still hope since Team Blue appears to be burning the midnight oil to get the drivers right before the much-awaited summer launch.

Intel's mobile Arc A-series GPU launch was largely a paper launch, with only a couple of laptop models available on the South Korean market at the time of writing. The company managed to generate some enthusiasm amid a troubled GPU market, but the drivers for Team Blue's dedicated graphics solutions appear to be far from ready, with some features having a significant impact on overall performance.

This has delayed the launch of the desktop Arc graphics cards by months, but that hasn't stopped models like the A770 from making an appearance online. Although this is supposed to be one of the higher-end Alchemist GPUs, early OpenCL benchmarks suggest it will more likely compete with mainstream GPUs like AMD's RX 6600 XT and Nvidia's RTX 3050.

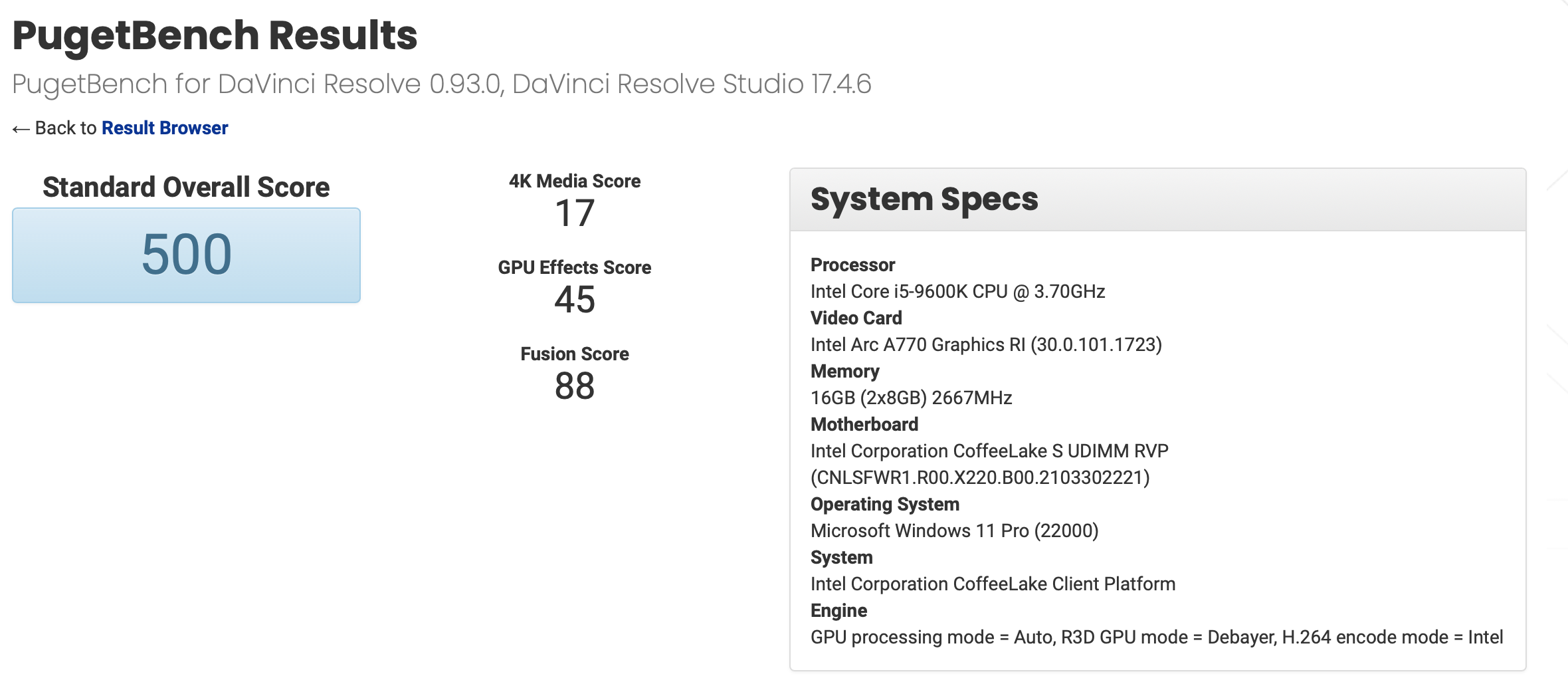

The A770 GPU has also surfaced in Puget Bench's database with a new benchmark result for DaVinci Resolve workloads. Interestingly, the test system uses an older Intel Core i5-9600K CPU, and the motherboard designation suggests this is an internal development kit. There are two scores, 39 and 45 points, suggesting the Arc A770 is capable of roughly half the performance of an RTX 3070.

These results should be taken with a grain of salt, but there is one interesting detail that suggests Intel is hard at work trying to improve the drivers for Arc GPUs. The driver installed on the test system is designated as version 30.0.101.1723, newer than what's publicly available for Arc mobile GPUs as well as Intel Xe integrated graphics.

Intel has the difficult task of working on game-specific optimizations for a large number of PC titles that already run well on AMD and Nvidia hardware. The company says it will prioritize the top 100 most popular games for the desktop Arc GPU launch and then expand the list of certified titles from there.

If early benchmarks are any indication, Intel will also have to price its Arc GPUs aggressively to spur adoption.

https://www.techspot.com/news/94355-more-benchmarks-intel-arc-a770-desktop-gpu-surface.html