What just happened? Concerned that artificial intelligence seems to be advancing at a rapid pace, potentially threatening more human jobs? Then here's some news that could add to those concerns. A team of Microsoft researchers has announced a new AI that can accurately mimic a human voice from a mere three-second-long audio sample.

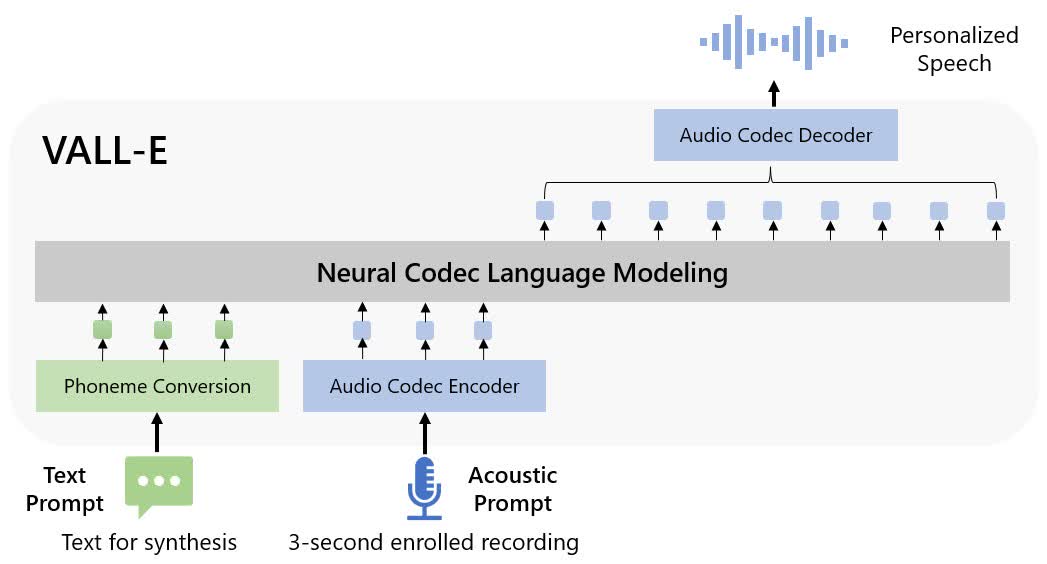

Microsoft's voice AI tool, called Vall-E, is trained on "discrete codes derived from an off-the-shelf neural audio codec model" as well as 60,000 hours of speech—100 times more than existing systems—from more than 7,000 speakers, most of which come from LibriVox public domain audiobooks.

Ars Technica reports that Vall-E builds on a technology called EnCodec that Meta announced in October 2022. Vall-E works by analyzing a person's voice, breaking down the information into components, and using its training to synthesize how the voice would sound if it were speaking different phrases. Even after hearing just a three-second sample, Vall-E can replicate a speaker's timbre and emotional tone.
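For a rough feel of what "discrete codes" means here, the toy sketch below applies residual vector quantization, the general technique EnCodec uses to turn audio into integer codes. It is not Microsoft's or Meta's code. The function names, the random codebooks, and the four-number "frame" are all hypothetical stand-ins. A real codec learns its codebooks and runs a neural encoder and decoder around this step.

```python
import random


def nearest(codebook, vec):
    """Index of the codebook entry closest to vec (squared Euclidean distance)."""
    return min(
        range(len(codebook)),
        key=lambda i: sum((c - v) ** 2 for c, v in zip(codebook[i], vec)),
    )


def rvq_encode(frame, codebooks):
    """Encode one frame as a list of integer codes, one per codebook stage."""
    residual = list(frame)
    codes = []
    for codebook in codebooks:
        idx = nearest(codebook, residual)
        codes.append(idx)
        # Whatever this stage failed to capture is handed to the next stage.
        residual = [r - c for r, c in zip(residual, codebook[idx])]
    return codes


def rvq_decode(codes, codebooks):
    """Rebuild an approximate frame by summing the chosen entry from each stage."""
    out = [0.0] * len(codebooks[0][0])
    for idx, codebook in zip(codes, codebooks):
        out = [o + c for o, c in zip(out, codebook[idx])]
    return out


def make_codebooks(n_stages, size, dim, seed=0):
    """Hypothetical random codebooks; a real codec learns these from data."""
    rng = random.Random(seed)
    return [
        [[rng.uniform(-1, 1) / (2 ** stage) for _ in range(dim)] for _ in range(size)]
        for stage in range(n_stages)
    ]


codebooks = make_codebooks(n_stages=4, size=16, dim=4)
frame = [0.3, -0.7, 0.1, 0.5]  # made-up stand-in for one encoded audio frame
codes = rvq_encode(frame, codebooks)
print("codes:", codes)
print("reconstruction:", rvq_decode(codes, codebooks))
```

The point of the exercise is that a slice of sound ends up as a handful of small integers. A model can then learn to predict such integers in much the same way a language model predicts words.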

Microsoft have announced their AI "VALL-E"

Using a 3-second sample of human speech, it can generate super-high-quality text-to-text speech from the same voice. Even emotional range and acoustic environment of the sample data can be reproduced. Here are some examples. pic.twitter.com/ExoS2VWO6d

Tuvok @ NaughtyDog (@TheCartelDel), January 7, 2023

"Experiment results show that Vall-E significantly outperforms the state-of-the-art zero-shot TTS system [AI that recreates voices it's never heard] in terms of speech naturalness and speaker similarity," states the research paper, available on arXiv, the preprint server hosted by Cornell University. "In addition, we find VALL-E could preserve the speaker's emotion and acoustic environment of the acoustic prompt in synthesis."

You can hear examples of Vall-E recreating voices on GitHub. Many are genuinely amazing, sounding almost identical to the speaker despite being based on such a short audio sample. A few are slightly more robotic and sound a bit closer to traditional text-to-voice software, but they're still impressive, and we can expect the AI to improve over time.

Microsoft's researchers believe Vall-E could find use as a text-to-voice tool, a way of editing speech, and an audio creation system by combining it with other generative AIs such as GPT-3.

As with all AIs, there are concerns about the potential misuse of Vall-E. Impersonating public figures such as politicians is one example, especially if the tool is used alongside deepfakes. It could also trick people into believing they are talking to family, friends, or officials, leading them to hand over sensitive data. There's also the fact that some security systems use voice identification. As for its impact on jobs, Vall-E would likely be a cheaper alternative to hiring voice actors.

Addressing the risks of Vall-E being misused, the researchers said these could be mitigated. "It is possible to build a detection model to discriminate whether an audio clip was synthesized by Vall-E. We will also put Microsoft AI Principles into practice when further developing the models."

Masthead: Ociacia

https://www.techspot.com/news/97217-new-microsoft-ai-can-accurately-mimic-human-voice.html