At the Game Developers Conference this week in San Francisco, Imagination Technologies announced a groundbreaking new family of mobile-oriented consumer GPUs. Known as 'Wizard', the GPU line expands on the company's 'Rogue' designs by adding a dedicated ray tracing unit (RTU), giving game developers the flexibility to produce mobile games with real-time ray-traced lighting effects.

The first of the Wizard GPUs is the GR6500, which is a variation of Imagination's Series6XT GX6450 Rogue hardware. This means we're getting a Unified Shading Cluster Array (USCA) with 128 ALUs, which at the GPU's reference clock speed of 600 MHz will provide around 150 GFLOPS of peak FP32 throughput.
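The "around 150 GFLOPS" figure can be reproduced with a quick back-of-the-envelope calculation. The sketch below assumes each ALU retires one fused multiply-add per clock, counted as two floating-point operations; that assumption is the usual convention for peak figures and is not stated in the announcement. The function and parameter names are illustrative only.

```python
def peak_gflops(alus: int, clock_hz: float, flops_per_alu_per_clock: int = 2) -> float:
    """Theoretical peak FP32 throughput in GFLOPS.

    flops_per_alu_per_clock=2 is an assumption: one fused multiply-add
    per ALU per clock, counted as two floating-point operations.
    """
    return alus * flops_per_alu_per_clock * clock_hz / 1e9


# GR6500 figures quoted in the article: 128 ALUs at 600 MHz.
gr6500_gflops = peak_gflops(alus=128, clock_hz=600e6)
print(f"{gr6500_gflops:.1f} GFLOPS")
```

Under that assumption the result lands at roughly 153.6 GFLOPS, consistent with the rounded figure above. It is a theoretical ceiling, not a measure of real-world performance.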

The dedicated RTU is a non-programmable, stand-alone processing unit that performs all ray tracing tasks without the need for the USCA's ALUs. Rasterization, an important graphical task performed by the USCA, won't lose compute performance to the RTU, although there could still be an overall performance hit from the extra memory bandwidth the RTU may need.

With the GR6500 clocked at 600 MHz, the RTU will be capable of 300 million rays per second, 24 billion node tests per second, and 100 million dynamic triangles per second.
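To put those peak rates in perspective, they can be converted into per-clock and per-pixel budgets. The sketch below is only an illustration: the resolutions and the 60 fps target are hypothetical choices rather than figures from Imagination, and it assumes the quoted peak rates could be sustained, which real workloads rarely manage.

```python
CLOCK_HZ = 600e6          # reference clock quoted for the GR6500
RAYS_PER_S = 300e6        # peak rays per second
NODE_TESTS_PER_S = 24e9   # peak node tests per second
TRIANGLES_PER_S = 100e6   # peak dynamic triangles per second


def per_clock(rate_per_s: float, clock_hz: float = CLOCK_HZ) -> float:
    """Convert a per-second peak rate into a per-clock-cycle rate."""
    return rate_per_s / clock_hz


def rays_per_pixel(width: int, height: int, fps: float,
                   rays_per_s: float = RAYS_PER_S) -> float:
    """Average ray budget per pixel per frame at a given resolution and frame rate."""
    rays_per_frame = rays_per_s / fps
    return rays_per_frame / (width * height)


print(f"rays per clock:       {per_clock(RAYS_PER_S):.2f}")
print(f"node tests per clock: {per_clock(NODE_TESTS_PER_S):.0f}")
print(f"node tests per ray:   {NODE_TESTS_PER_S / RAYS_PER_S:.0f}")

# Hypothetical targets: 720p and 1080p at 60 frames per second.
for w, h in [(1280, 720), (1920, 1080)]:
    print(f"{w}x{h} @ 60 fps: {rays_per_pixel(w, h, 60):.2f} rays per pixel")
```

At these assumed targets the budget works out to roughly five rays per pixel at 720p and a little over two at 1080p, which suggests why the hardware is pitched at selective effects such as shadows and reflections rather than fully ray-traced scenes.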

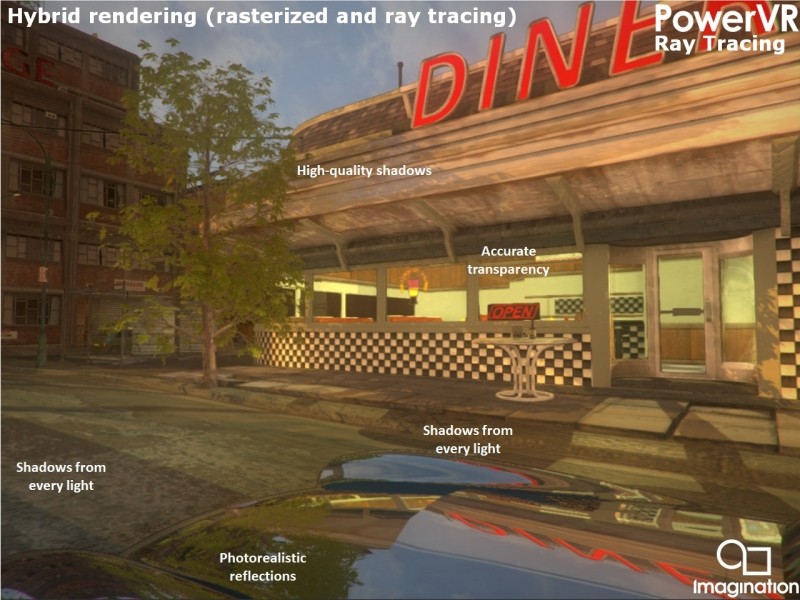

By having a dedicated ray tracer in the GR6500, advanced lighting effects can be hardware accelerated, producing more realistic graphics than we're used to on mobile hardware. Specifically, a game that uses ray tracing can have high-quality shadows cast by every light source, much more accurate transparency, and photorealistic reflections.

The inclusion of these sorts of effects is up to game developers, who need a game engine that supports Imagination's new hardware. Luckily, the company has announced that Unity's new 5.x game engine will support its ray tracing technology, although this is just one step in a larger push to get OEMs and software engineers supporting ray tracing.

One thing that Imagination didn't reveal was the additional power cost of the RTU. We're already looking at a GPU that will be integrated into battery-powered devices, where efficiency is key, so let's hope the cost of utilizing the RTU isn't huge.

https://www.techspot.com/news/56044-new-powervr-wizard-mobile-gpu-will-ray-trace-in-real-time.html