Big quote: "The current experience at these resolutions is not where we want them." Nvidia is working hard to improve DLSS, especially at lower resolutions, after gamers shared blurry screenshots of games running with the feature enabled.

Nvidia has acknowledged that Deep Learning Super Sampling (DLSS), the feature it introduced with the RTX series of graphics cards, is not yet perfect. In a Q&A post, Andrew Edelsten, Nvidia's technical director of deep learning, said that DLSS at resolutions below 4K will receive significant attention.

Gamers playing the latest Battlefield V update, which added DLSS support, have reported occasionally seeing blurry frames. In response, Nvidia said, "We have seen the screenshots and are listening to the community’s feedback about DLSS at lower resolutions, and are focusing on it as a top priority." Additional neural network training should improve image quality, but training the networks for 1080p gaming will take a little longer. As a side note, TechSpot's full take on the Battlefield V DLSS update will go live tomorrow morning.

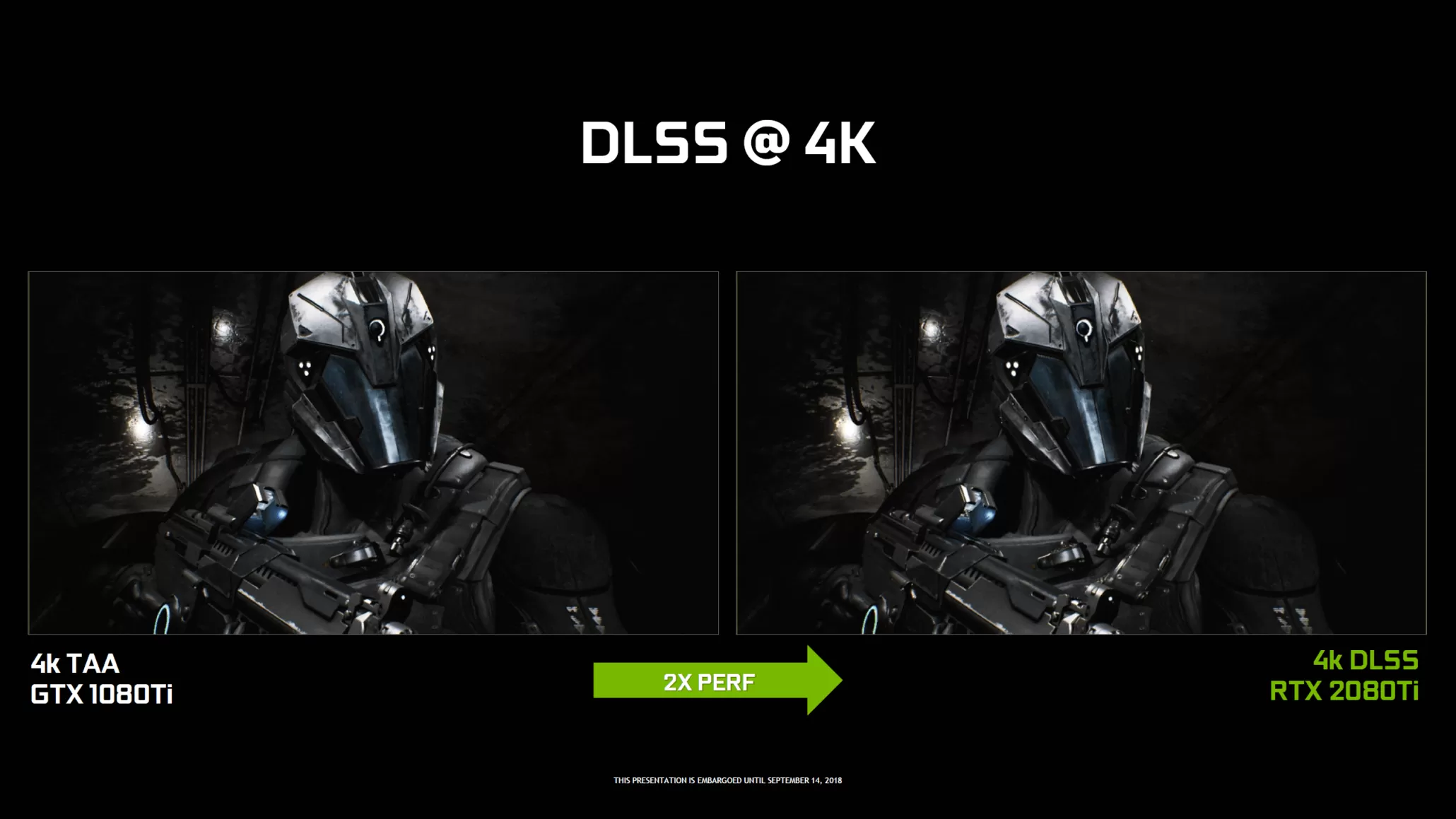

At 4K, Nvidia has 3.5 to 5.5 million pixels available as input to its DLSS algorithms, compared to a maximum of 1.5 million pixels at 1080p. With less source information, it is far more difficult to produce a final frame that looks good to the human eye. Going forward, Nvidia will focus on improving DLSS at 1920x1080 and on ultrawide monitors running 3440x1440 or similar resolutions.
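To put the quoted pixel counts in perspective, here is a small back-of-the-envelope sketch. It assumes "4K" means a 3840x2160 output frame and "1080p" means 1920x1080; the input figures are the ones Nvidia quoted, and because only a maximum was given for 1080p, that value is used for both bounds.

```python
# Back-of-the-envelope comparison of the DLSS input pixel figures quoted above.
# Assumption: "4K" means 3840x2160 output and "1080p" means 1920x1080 output.

OUTPUT_RES = {"4K": (3840, 2160), "1080p": (1920, 1080)}

# Quoted DLSS input pixel counts as (min, max). Only a maximum was given for
# 1080p, so it is used as both bounds here.
INPUT_PIXELS = {"4K": (3.5e6, 5.5e6), "1080p": (1.5e6, 1.5e6)}


def native_pixels(name: str) -> int:
    """Number of pixels in the final output frame."""
    width, height = OUTPUT_RES[name]
    return width * height


def input_share(name: str) -> tuple[float, float]:
    """Fraction of the output pixels covered by the quoted input range."""
    low, high = INPUT_PIXELS[name]
    total = native_pixels(name)
    return low / total, high / total


def input_advantage() -> tuple[float, float]:
    """How many times more input pixels 4K has than the 1080p maximum."""
    low_4k, high_4k = INPUT_PIXELS["4K"]
    max_1080p = INPUT_PIXELS["1080p"][1]
    return low_4k / max_1080p, high_4k / max_1080p


if __name__ == "__main__":
    for name in OUTPUT_RES:
        low, high = input_share(name)
        print(f"{name}: {native_pixels(name):,} output pixels, "
              f"input covers {low:.0%} to {high:.0%} of them")
    low, high = input_advantage()
    print(f"4K provides {low:.1f}x to {high:.1f}x more input pixels than 1080p")
```

With these figures, the 4K input is roughly 2.3 to 3.7 times the 1080p maximum in absolute terms, even though the 1080p input covers a larger share (about 72%) of its own output frame. The absolute amount of source detail, not the ratio, is what the network has to work with.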

For the time being, there are cases where TAA (temporal anti-aliasing) may look slightly better than DLSS. The trade-off is that TAA draws on multiple frames and can cause ghosting or flickering in fast-moving scenes. DLSS largely eliminates ghosting and flicker, but it is not objectively better in every game or combination of settings.
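To show why reusing previous frames can smear motion, here is a deliberately simplified 1D sketch of temporal accumulation, the history-blending idea behind TAA. It is a toy under stated assumptions, not how any shipping game implements TAA: it skips the motion-vector reprojection and history clamping that real implementations use to limit ghosting, and the blend factor `alpha` is a hypothetical value.

```python
# Simplified 1D illustration of temporal accumulation, the core idea behind TAA.
# Assumption: no motion-vector reprojection or history clamping, which real TAA
# implementations use to reduce exactly the ghosting this toy exaggerates.


def render_frame(width: int, object_pos: int) -> list[float]:
    """Render a 1D 'frame': one bright pixel (1.0) on a black background."""
    return [1.0 if x == object_pos else 0.0 for x in range(width)]


def taa_accumulate(history, current, alpha=0.1):
    """Blend the new frame into the history buffer (exponential moving average).

    alpha is the weight given to the current frame; the rest comes from history.
    """
    if history is None:
        return list(current)
    return [alpha * c + (1.0 - alpha) * h for c, h in zip(current, history)]


def simulate(width: int = 8, frames: int = 4, alpha: float = 0.1) -> list[float]:
    history = None
    for t in range(frames):
        # The object moves one pixel to the right every frame (fast motion).
        history = taa_accumulate(history, render_frame(width, t), alpha)
    return history


if __name__ == "__main__":
    print([round(v, 3) for v in simulate()])
```

After four frames of a bright pixel moving one step per frame, the spot where it started remains brighter than the spot where it currently is, leaving a fading trail behind it: the ghosting described above, exaggerated here because nothing rejects stale history.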

Besides the upcoming Battlefield V update, Metro Exodus is also getting an update that was not ready in time for the game's launch. Nvidia is training its neural networks across a larger portion of the game and is also addressing feedback about problems with HDR functionality.

In this case, Nvidia has earned a shout-out for publicly acknowledging the problems in a timely manner and committing to fix them.

https://www.techspot.com/news/78799-nvidia-acknowledges-dlss-shortcomings-working-fix-them.html