Why it matters: Nvidia has announced that DLSS is now available in two additional games: Crysis Remastered and the System Shock remake demo. Both are beautiful games that should benefit immensely from the upscaling tech, especially Crysis Remastered, which, like the original, is tough on hardware.

The first game in the Crysis series debuted in 2007 and was a visual masterpiece. It was also very taxing on PC hardware of the era, resulting in the popular “But can it run Crysis?” meme. The remastered version launched on September 18, 2020, on PlayStation 4, Xbox One and PC and, after some patch work, seems closer to what gamers were expecting from the release.

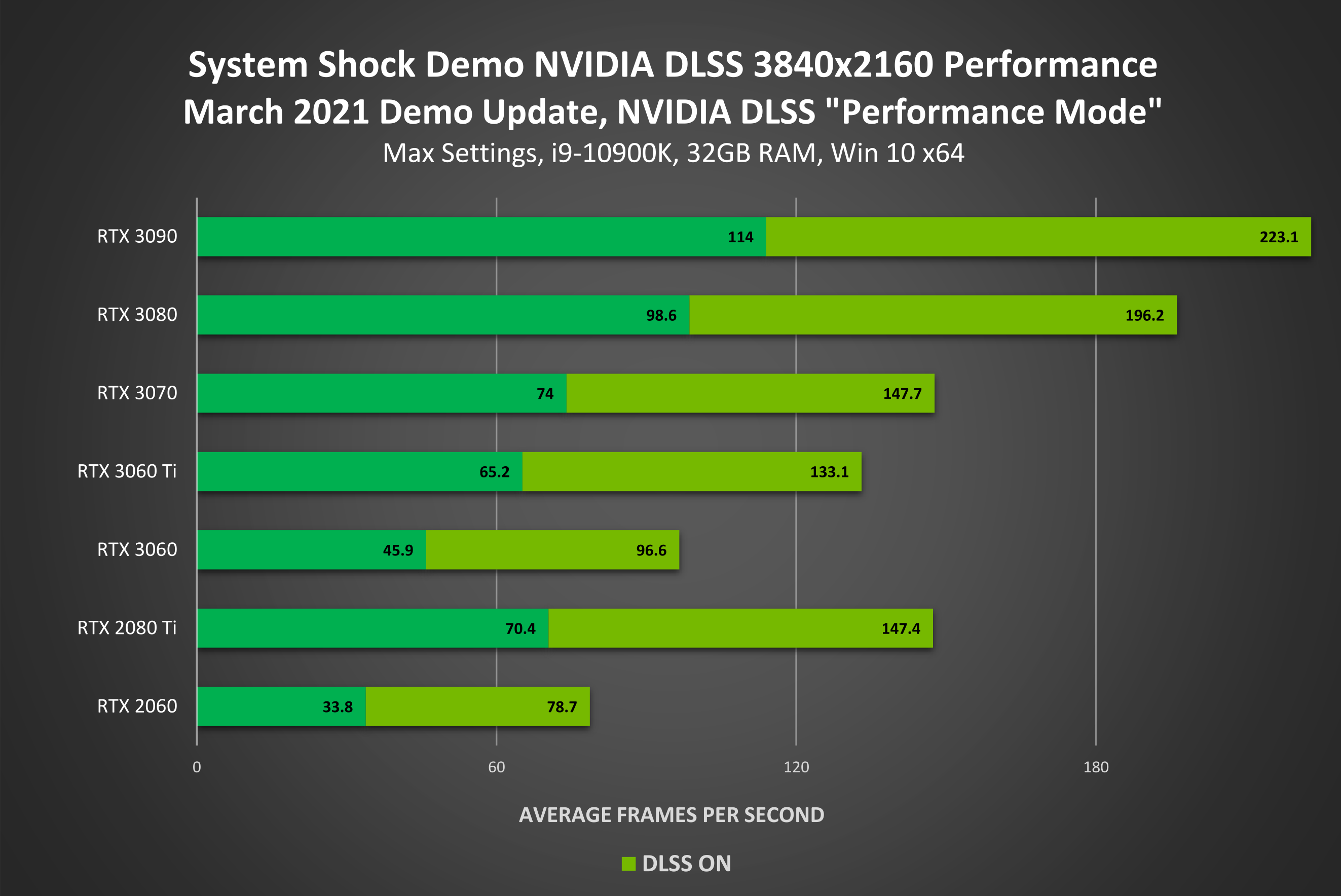

The System Shock remake demo, meanwhile, dropped last month courtesy of Nightdive Studios. It gives players a glimpse of what the developer has been working on over the past five years and what to expect when the full game launches this summer.

The System Shock demo implements DLSS through Nvidia's plugin for Unreal Engine 4. Matthew Kenneally, lead engineer at Nightdive Studios, said the team was able to use the plugin to add DLSS support to System Shock “over the weekend.”

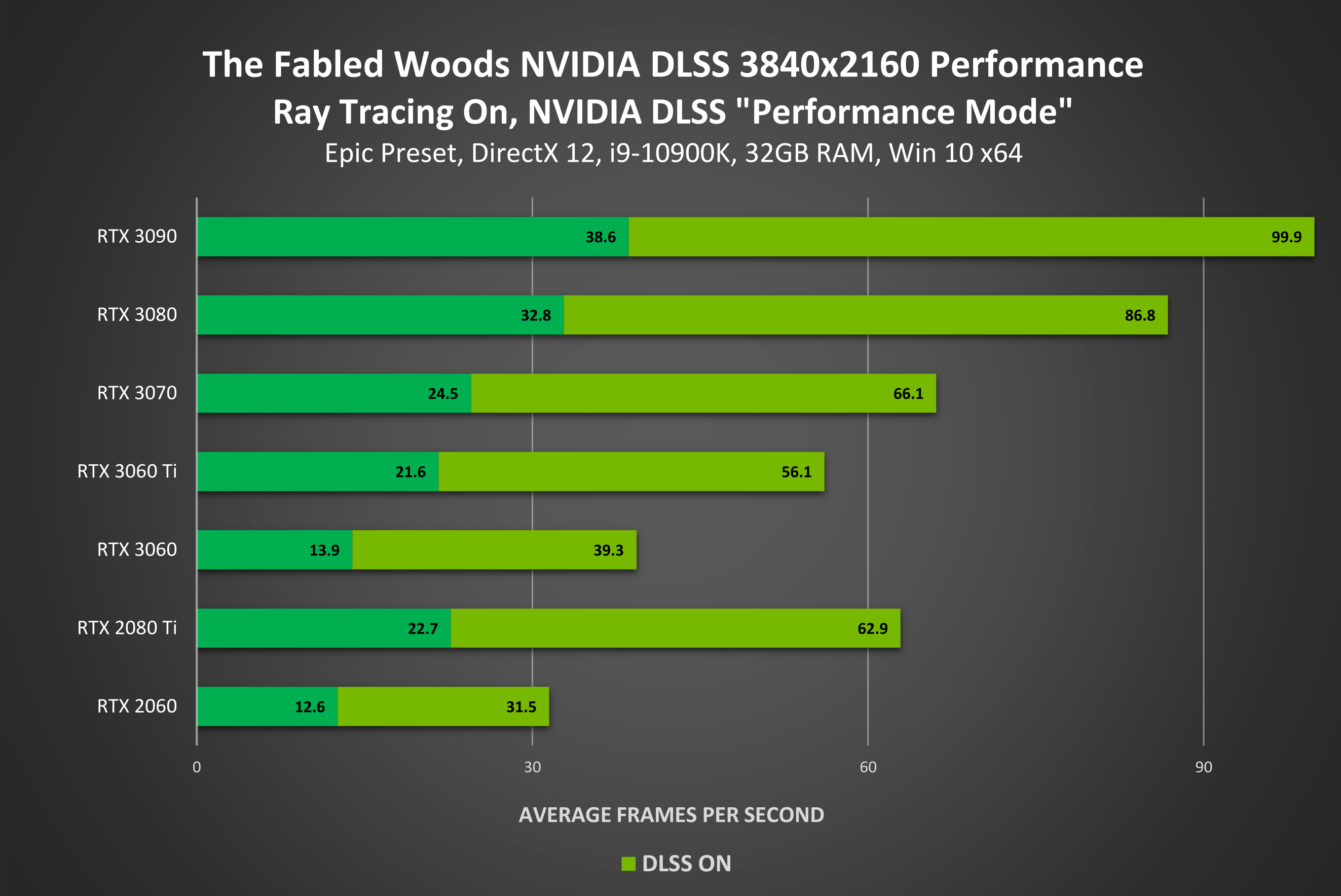

Nvidia further noted that when The Fabled Woods drops on March 25, it’ll be DLSS-ready. This “dark and mysterious narrative short story” was developed by CyberPunch Studios and is being published by Headup. There’s a free demo of the game over on Steam if you’re interested in giving it a spin.

A full list of games that support DLSS can be found here.

https://www.techspot.com/news/88961-nvidia-dlss-added-crysis-remastered-system-shock-remake.html