In brief: Nvidia confirmed that it created a new 12-pin power connector to replace the commonly used 8-pin connector. The company said the new connector is smaller than its predecessor but is able to carry more power.

Nvidia on Wednesday published a video in which it explained some of the design philosophies that went into creating the thermal solution for its upcoming Ampere family of GPUs.

As Nvidia thermal architect David Haley recounts, the first law of thermodynamics states that energy cannot be created or destroyed in a closed system.

Thus, to get more performance out of a GPU, you need to bring in more power and then effectively dissipate the extra heat that power turns into. To do that, Nvidia’s engineering team used computational fluid dynamics tools to simulate how air flows through a system. From there, they tweaked the PCB design, created a new spring system to attach the cooler to the card, relocated the cooling fans, and adjusted the software that controls the fans.
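To put a rough number on the power-in, heat-out argument, here is a minimal back-of-the-envelope sketch. It assumes that essentially all of the electrical power a card draws ends up as heat and that air alone carries that heat away at steady state. The function names, the wattages, and the temperature rise are illustrative assumptions, not figures from Nvidia, and a real cooler design relies on full CFD simulation rather than a single formula.

```python
# Steady-state estimate of the airflow needed to remove a given heat load.
# Assumption: all electrical power becomes heat, and moving air carries it away.

AIR_DENSITY = 1.2          # kg/m^3, approximate for air near room temperature
AIR_SPECIFIC_HEAT = 1005   # J/(kg*K), approximate for dry air
M3_PER_S_TO_CFM = 2118.88  # cubic metres per second to cubic feet per minute


def required_airflow_m3_per_s(heat_watts: float, air_temp_rise_k: float) -> float:
    """Volumetric airflow needed so the air warms by only air_temp_rise_k."""
    if heat_watts < 0:
        raise ValueError("heat_watts must be non-negative")
    if air_temp_rise_k <= 0:
        raise ValueError("air_temp_rise_k must be positive")
    # Energy balance: P = rho * c_p * flow * delta_T, solved for flow.
    return heat_watts / (AIR_DENSITY * AIR_SPECIFIC_HEAT * air_temp_rise_k)


def required_airflow_cfm(heat_watts: float, air_temp_rise_k: float) -> float:
    """Same estimate expressed in CFM, the unit fans are commonly rated in."""
    return required_airflow_m3_per_s(heat_watts, air_temp_rise_k) * M3_PER_S_TO_CFM


if __name__ == "__main__":
    # Hypothetical board powers, chosen only to show how the estimate scales.
    for watts in (200, 300, 400):
        cfm = required_airflow_cfm(watts, air_temp_rise_k=15)
        print(f"{watts} W at a 15 K air temperature rise: about {cfm:.1f} CFM")
```

Under these assumptions the required airflow grows in direct proportion to power draw, which is why a higher power budget pushes changes to the fans, heatsink, and board layout rather than to the chip alone.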

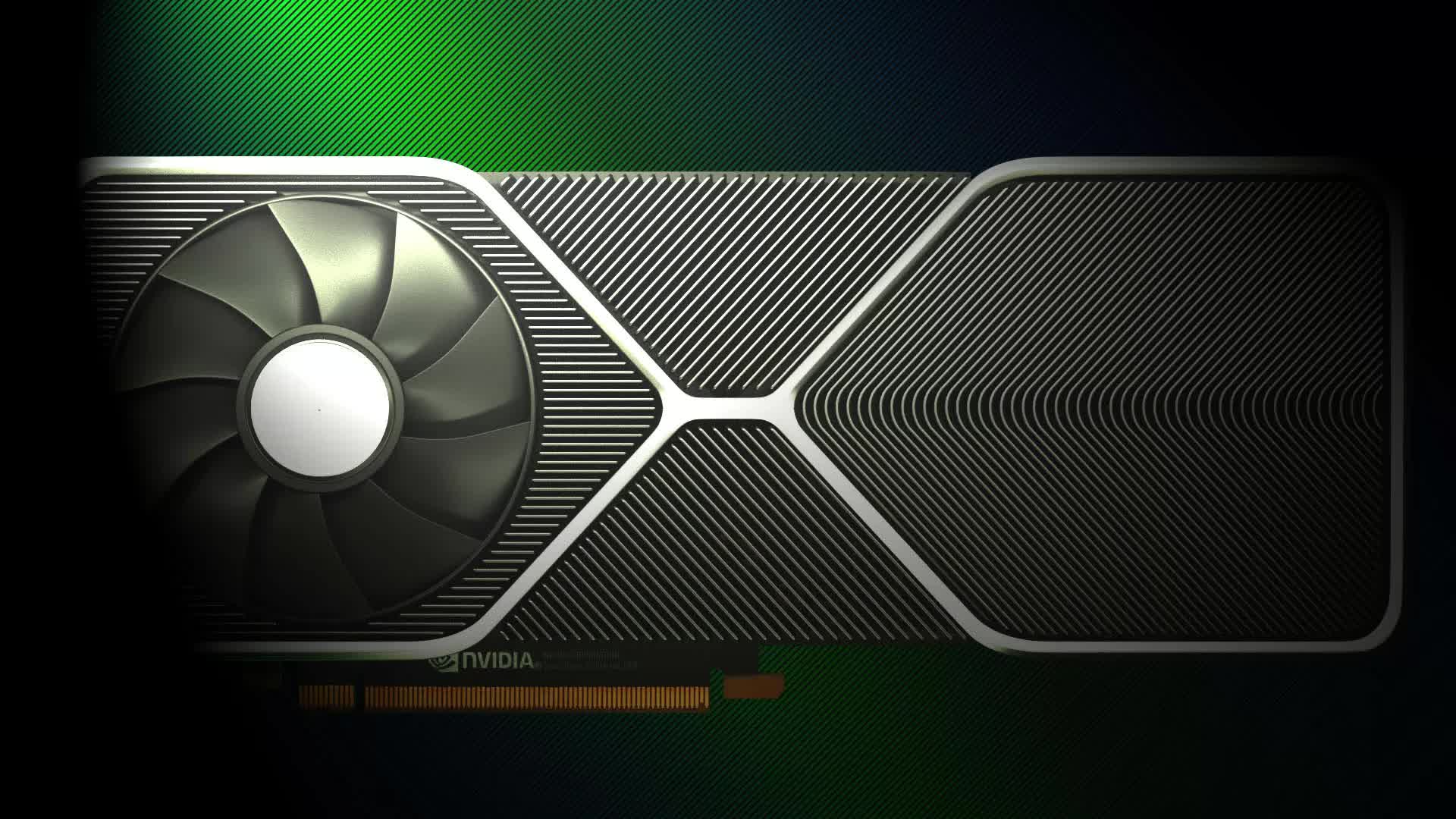

The heatsink and fan tweaks are in line with what we’ve seen from leaked images thus far, further bolstering their validity.

At the end of the video, we get a brief preview of what is believed to be the RTX 3080 cooler.

Nvidia is expected to share full details during an online presentation scheduled for August 31.

https://www.techspot.com/news/86517-nvidia-shares-rtx-3000-series-cooler-design-process.html