What just happened? More than six months after rumors of their existence began, Nvidia has revealed the RTX 3080 Ti and RTX 3070 Ti at its Computex keynote. Much of what we'd already heard about the former, more powerful card proved accurate, apart from the slightly higher $1,199 MSRP. The 3070 Ti, meanwhile, is priced from $599 and arrives on June 10.

Talk of an RTX 3080 Ti has been around since Ampere was unveiled. The card was once rumored to feature 20GB of GDDR6X, but Nvidia confirmed it comes with 12GB, half as much as the RTX 3090. Its GA102-225 GPU features 10,240 CUDA cores across 80 Streaming Multiprocessors (SMs), along with 320 third-generation Tensor cores and 80 second-generation RT cores.

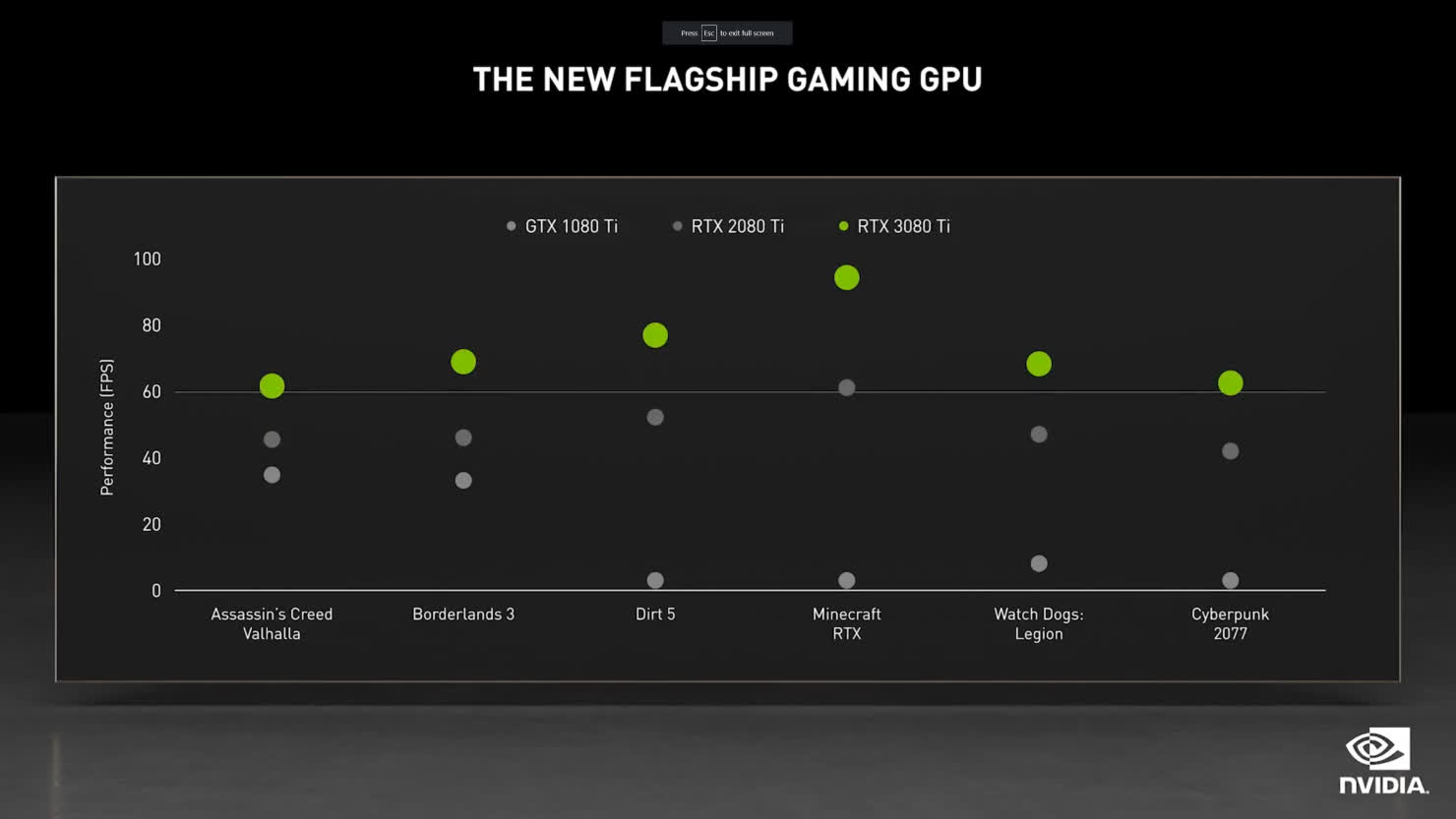

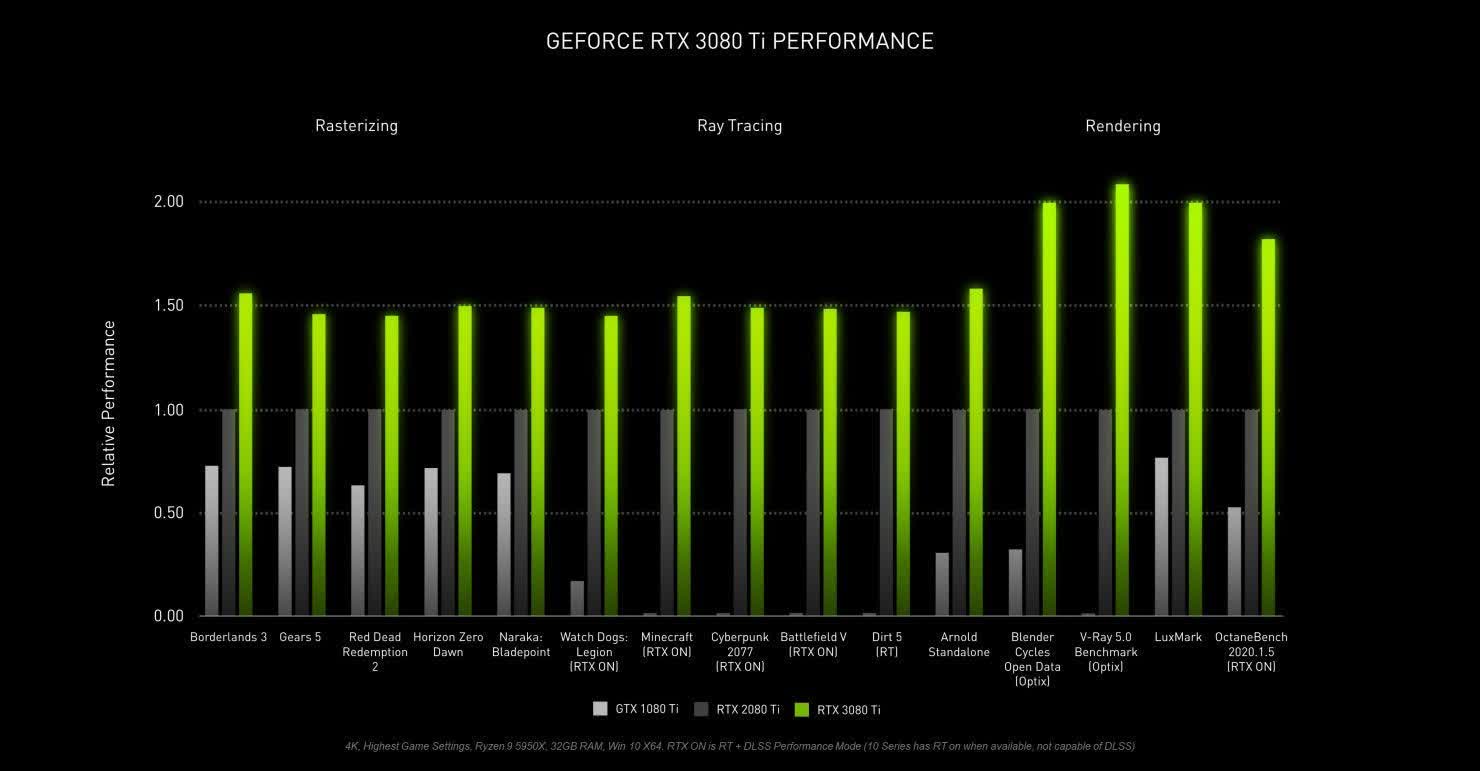

Nvidia threw out some comparison figures during its event: it claims the RTX 3080 Ti delivers 1.5 times the performance of the GeForce RTX 2080 Ti and double that of the GTX 1080 Ti. Otherwise, the card is very similar to the RTX 3090; the only significant differences are its smaller memory pool and its price.

The RTX 3080 Ti's 12GB of GDDR6X memory runs at 19 Gbps on a 384-bit bus, giving a theoretical peak bandwidth of 912 GB/s, only slightly behind the RTX 3090's 936.2 GB/s.
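The 912 GB/s figure follows directly from the bus width and the per-pin data rate: 384 pins × 19 Gbps gives 7,296 Gbps, or 912 GB/s after dividing by eight bits per byte. A minimal Python sketch of that arithmetic (the function name `peak_bandwidth_gbs` is our own, purely for illustration):

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    Each pin on the memory bus moves `data_rate_gbps` gigabits per second,
    so the whole bus moves width * rate gigabits per second; dividing by 8
    converts bits to bytes.
    """
    return bus_width_bits * data_rate_gbps / 8


# RTX 3080 Ti figures from the article: 384-bit bus, 19 Gbps GDDR6X.
print(peak_bandwidth_gbs(384, 19))  # 912.0
```

Assuming the RTX 3070 Ti also runs its GDDR6X at 19 Gbps (a data rate not stated in the announcement), the same formula gives 608 GB/s for its 256-bit bus. Small discrepancies with published figures such as 608.3 GB/s likely come from rounding of the exact memory clock.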

Elsewhere, the card has a 350W TDP, an expected 1440 MHz base clock and 1665 MHz boost clock, 320 TMUs, and 112 ROPs. Like the rest of the consumer Ampere line, it's built on Samsung's 8N process technology. Going by looks alone, you might struggle to tell the RTX 3080 and RTX 3080 Ti Founders Editions apart. The 3080 Ti Founders Edition has a single 12-pin Micro-Fit power connector (Nvidia includes an adapter for eight-pin cables), plus one HDMI 2.1 and three DisplayPort 1.4a outputs.

The RTX 3080 Ti launches on June 3 for $1,199. Expect custom models to arrive soon after. What we can also expect, sadly, is for it to suffer the same availability and scalping issues as every other graphics card right now.

Nvidia also unveiled the RTX 3070 Ti during the keynote, though it was a bit light on specs. We do know the card will feature 8GB of GDDR6X memory (the standard RTX 3070 has 8GB of GDDR6) and 6144 CUDA cores, and that it uses the full GA104 GPU with all 48 SMs enabled; the vanilla RTX 3070 uses a cut-down GA104 with 46 SMs. There's also a 256-bit bus interface (608.3 GB/s of bandwidth), and the base clock is expected to be 1575 MHz with a 1770 MHz boost.
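The 3070 Ti's core count follows from its SM count. The 3080 Ti figures imply 128 CUDA cores, four Tensor cores, and one RT core per SM (10,240, 320, and 80 units across 80 SMs). The sketch below assumes those per-SM ratios also hold for GA104; the helper name `ampere_unit_counts` is hypothetical, and the 3070 Ti Tensor and RT core counts it prints are derived this way rather than announced figures.

```python
from __future__ import annotations

# Assumed per-SM resources on consumer Ampere GPUs, derived from the
# RTX 3080 Ti's announced totals divided by its 80 SMs.
CUDA_CORES_PER_SM = 128
TENSOR_CORES_PER_SM = 4
RT_CORES_PER_SM = 1


def ampere_unit_counts(sm_count: int) -> dict[str, int]:
    """Scale the per-SM resources up to a whole GPU with `sm_count` SMs."""
    return {
        "cuda_cores": sm_count * CUDA_CORES_PER_SM,
        "tensor_cores": sm_count * TENSOR_CORES_PER_SM,
        "rt_cores": sm_count * RT_CORES_PER_SM,
    }


print(ampere_unit_counts(80))  # RTX 3080 Ti: 10240 CUDA, 320 Tensor, 80 RT
print(ampere_unit_counts(48))  # RTX 3070 Ti (full GA104): 6144 CUDA cores
```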

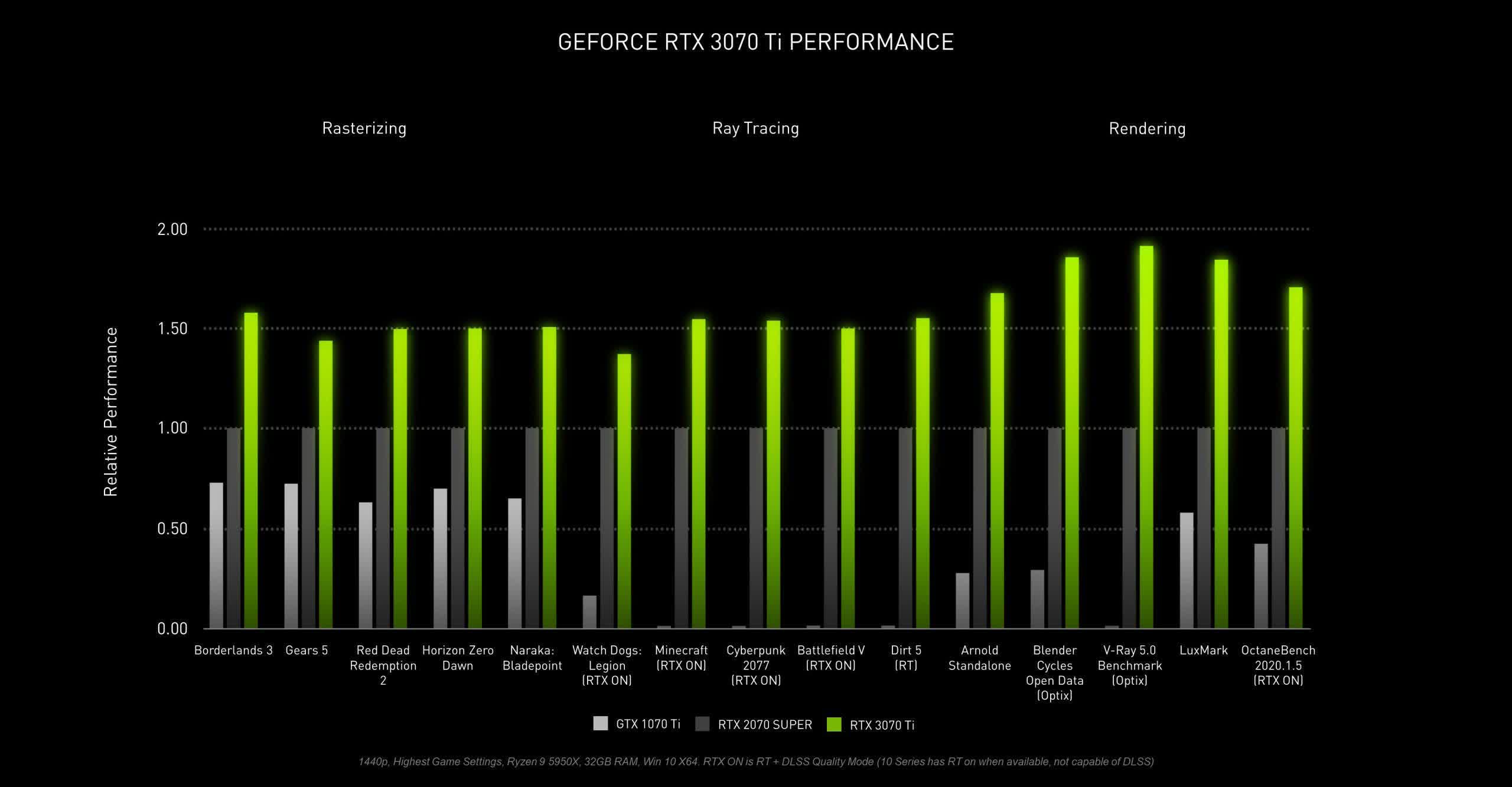

Nvidia says the RTX 3070 Ti will offer a 50% performance boost compared to the RTX 2070 Super and a 20% improvement over the RTX 3070.

The RTX 3070 Ti will be available on June 10, starting at $599. The same availability and pricing caveats as the RTX 3080 Ti apply. Nvidia's entire keynote is available to watch online.

https://www.techspot.com/news/89883-nvidia-unveils-geforce-rtx-3080-ti-boasting-rtx.html