Highly anticipated: The highlight of any Nvidia CES keynote is always the new hardware it shows off, and this year's event certainly didn't disappoint there. There was no big RTX 4000-series reveal (nor should there have been), but we did see the first true budget 3000-series card: the RTX 3060, coming in at just $329.

The card is expected to launch sometime in February -- at least, for the auto-purchase bots and scalpers that have been ravaging the hardware market over the past several months. Everyone else might have to wait longer for stock to come in.

At any rate, the 3060's biggest selling point (aside from its sub-$350 price tag) is its 12GB of GDDR6 VRAM. That figure surpasses last gen's RTX 2080 Ti, and even this gen's RTX 3080, which have 11GB and 10GB of VRAM, respectively.

The 3060 was built with a 192-bit memory bus, and it's set to feature 3,584 CUDA cores and a base clock speed of 1.32 GHz (boost clocks go up to 1.78 GHz). It's expected to draw around 170 W of power on average over a PCIe 8-pin connector, which should fit into most modern builds quite nicely.

Nvidia promises up to twice the raster performance of the GTX 1060, and 10x the RT performance. That's a rather useless and frankly puzzling comparison, given that the 1060 is two generations old and doesn't even support RTX in the first place.

Edit: To clarify, Pascal cards do technically support RT functions following a driver update from Nvidia some time ago, but their performance is so terrible that Nvidia's comparisons are still rather meaningless.

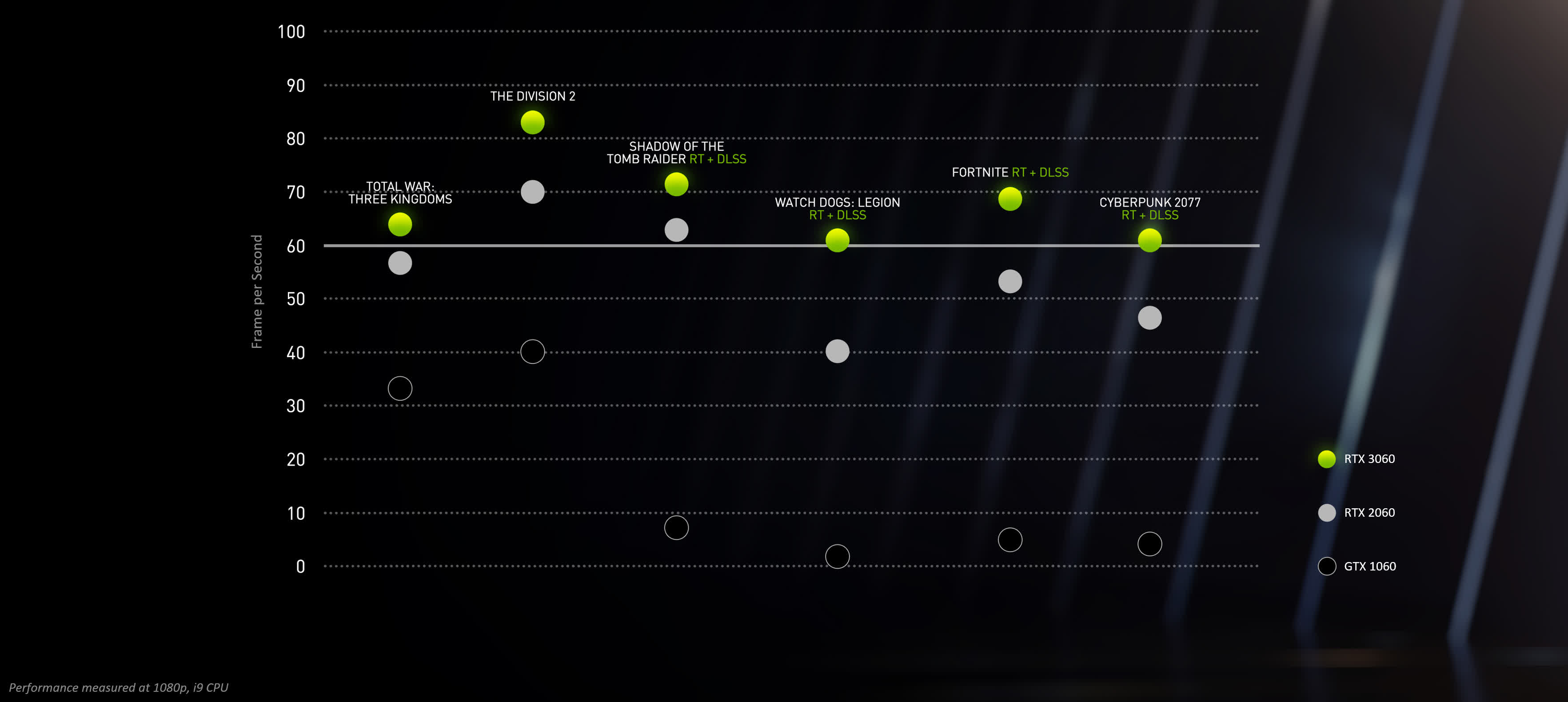

A much better comparison would be to stack up the 3060 against the 2060: both cards support RTX technology, and they're only a generation apart. Fortunately, Nvidia threw us a bone there, too, with the following chart:

According to Nvidia's calculations, the RTX 3060 can push somewhere in the neighborhood of 60-64 FPS in Watch Dogs: Legion at 1080p with RT and DLSS enabled (though it's unclear what RT and DLSS settings were used). The 2060, by contrast, sinks to the low 40s, which is no surprise given the generational difference. The 1060's performance is listed as well, but again, it's an irrelevant comparison.
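For a rough sense of what those figures imply, here is a quick back-of-the-envelope sketch of the generational uplift. The 3060's 60-64 FPS range comes from Nvidia's chart as quoted above; the 2060's range of 40-43 FPS is our own assumption for what "low 40s" means, and the `uplift_range` helper is purely illustrative.

```python
def uplift_range(new_fps, old_fps):
    """Return the (smallest, largest) fractional uplift of new over old.

    Each argument is a (low, high) FPS range. The smallest uplift pairs the
    new card's low end with the old card's high end, and vice versa.
    """
    new_lo, new_hi = new_fps
    old_lo, old_hi = old_fps
    return new_lo / old_hi - 1.0, new_hi / old_lo - 1.0


RTX_3060_FPS = (60, 64)  # Nvidia's claimed range, per the chart
RTX_2060_FPS = (40, 43)  # assumption: "low 40s" read as 40-43 FPS

low, high = uplift_range(RTX_3060_FPS, RTX_2060_FPS)
print(f"Estimated uplift: {low:.0%} to {high:.0%}")
```

Under that assumption, the 3060 would land roughly 40 to 60 percent ahead of the 2060 in this one title, with these specific (and unknown) settings.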

As usual, we'd advise you to take manufacturer benchmarks with a grain of salt. It's not that Nvidia is intentionally misleading anyone here, but rather that we simply don't know what methodology they use to arrive at their conclusions, and thus cannot verify them. It's always better to wait for independent benchmarks, such as our own.

Nonetheless, if you wish to throw caution to the wind, you can snag a 3060 of your own next month. We'll update you when we have a more concrete release date to share.

https://www.techspot.com/news/88265-nvidia-geforce-rtx-3060-boasts-12gb-vram-3584.html