Earlier this year Nvidia launched their GeForce GTX 750 Ti and GTX 750 graphics cards. The cards are based on the latest Maxwell microarchitecture, and the release garnered a surprising amount of attention. Normally cards in this price and performance bracket are unexciting workhorses; they’re the mainstream budget cards that people buy because they either can’t afford or simply aren’t interested in the more monstrous cards north of the $200 line.

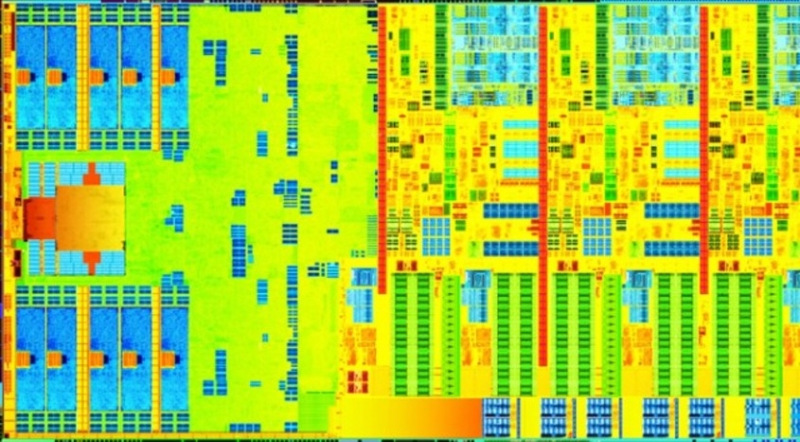

What caught everyone’s attention wasn’t how fast the card was so much as its performance per watt. Nvidia’s Maxwell effectively doubles performance per watt against Kepler, and it thoroughly trounces AMD’s GCN architecture. It does this without the benefit of a smaller process technology, an advantage Intel aggressively leverages over AMD. With the GM107 chip that powers the GTX 750 Ti and GTX 750, Nvidia has essentially produced the Pentium M of GPUs: architected to maximize performance per watt within a tight power envelope.

Guest author Dustin Sklavos is a Technical Marketing Specialist at Corsair and has been writing in the industry since 2005. This article was originally published on the Corsair blog.

Of course, in a way, Nvidia is playing catch-up. Intel has been aggressively pursuing increased efficiency and reduced power consumption in its processors for a long time, having ended up with plenty of egg on its face for almost the entire run of the Pentium 4 and Pentium D families.

Haswell is arguably a bust from a performance standpoint, requiring as much power or more for the same performance you could get from Ivy Bridge and trading some power consumption for its increased instructions per clock.

Yet the integration of voltage regulation circuitry onto the die, aggressive use of smaller manufacturing processes for the chipset, and even system-on-chip versions for mobile and all-in-ones tell a different story. Haswell’s load consumption is up, but its idle consumption can be as much as ten watts lower. Intel architects their chips to spend as much time idle as possible; the less time the chip spends actively working, the more time it spends idle and sipping power. It works out as a net gain.
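To see how higher load consumption can still work out as a net gain, here is a rough sketch of the arithmetic in Python. Every wattage and timing below is a hypothetical number chosen for illustration, not a measurement of Haswell or Ivy Bridge; the only thing borrowed from the text is the idea of an idle draw roughly ten watts lower. The function name `energy_wh` is likewise made up for this example.

```python
def energy_wh(load_w, idle_w, busy_s, window_s):
    """Energy in watt-hours over a fixed window: busy at load_w, idle at idle_w for the rest."""
    if busy_s > window_s:
        raise ValueError("busy time cannot exceed the window")
    idle_s = window_s - busy_s
    joules = load_w * busy_s + idle_w * idle_s
    return joules / 3600.0  # 1 Wh = 3600 J


WINDOW_S = 3600  # compare both chips over the same one-hour window

# Hypothetical older chip: lower load power, higher idle power.
old = energy_wh(load_w=70, idle_w=20, busy_s=600, window_s=WINDOW_S)

# Hypothetical newer chip: 10 W more under load, finishes the same work
# a little sooner, and idles 10 W lower.
new = energy_wh(load_w=80, idle_w=10, busy_s=540, window_s=WINDOW_S)

print(f"old chip: {old:.1f} Wh, new chip: {new:.1f} Wh")
```

With these made-up inputs the newer chip uses about 20.5 Wh against about 28.3 Wh for the older one, because the hour is dominated by idle time. Push `busy_s` close to the full window and the result flips, which is why the approach depends on the chip spending most of its time idle.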

AMD’s Kaveri architecture is another move in this direction. Their GCN graphics core architecture is arguably more efficient than their VLIW4 and VLIW5 architectures of yesteryear, and Kaveri itself was architected to reduce power consumption over Trinity and Richland while offering similar or better performance on both the CPU and GPU sides. A minor process transition from 32nm to 28nm completes the package.

Many of these changes are driven by the mobile sector. Originally the goal was better performance in notebooks, but now hardware is being architected with tablets and smartphones in mind as well; Nvidia’s Maxwell was designed essentially to be ported to a smartphone SoC.

Still, better performance per watt helps us all. The benefit of the desktop PC is physics: power consumption and your thermal ceiling are far less constricted than they are in notebooks or tablets. Increased efficiency allows us to make powerful, silent machines for the living room or go the opposite direction and maximize performance in our full towers.

The drive for efficiency doesn't stop there. Low-voltage DDR3L has supplanted conventional DDR3 in notebooks and ultrabooks, and power supplies are getting more and more efficient. The Corsair AX1500i is 80 Plus Titanium compliant, meaning that it stays at or above 90% efficiency across nearly its entire load range; even a lot of entry-level models are pushing 80 Plus Gold now. The AX1500i is specced to a mighty 1500W, and with hardware becoming this efficient, that 1500W can power a staggering amount of performance.
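As a rough illustration of what power supply efficiency means in practice, the sketch below converts a DC load into draw at the wall and the heat lost inside the supply. The 450W load and the two efficiency figures are assumptions picked for round numbers, not measurements of the AX1500i or any other unit, and the helper names are hypothetical.

```python
def wall_draw_w(dc_load_w, efficiency):
    """AC power pulled from the wall to deliver dc_load_w to the components."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in the range (0, 1]")
    return dc_load_w / efficiency


def waste_heat_w(dc_load_w, efficiency):
    """Power lost as heat inside the power supply."""
    return wall_draw_w(dc_load_w, efficiency) - dc_load_w


DC_LOAD_W = 450  # hypothetical system load in watts

for label, eff in (("80% efficient", 0.80), ("90% efficient", 0.90)):
    wall = wall_draw_w(DC_LOAD_W, eff)
    heat = waste_heat_w(DC_LOAD_W, eff)
    print(f"{label}: {wall:.1f} W at the wall, {heat:.1f} W lost as heat")
```

For the same assumed 450W load, moving from 80% to 90% efficiency cuts the loss inside the supply from about 112.5W to about 50W, which is less heat to exhaust and less noise to put up with.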

If you think about it, this is really the only direction we can go.

There was a period of time when brute force was a perfectly reasonable way to improve performance: increase power consumption, increase performance, call it a day. Or just throw more and more resources onto a chip, power consumption be damned. At some point, though, you’re just going to smash headfirst into a thermal and power wall, as Intel’s NetBurst architecture did, and that’s pretty much where we are now.

Designs need to be scalable in multiple directions; that’s what necessitates designs like Maxwell, Haswell, and Kaveri, and that’s what makes chips like Nvidia’s old GF100 absolutely horrendous for use anywhere outside of the desktop (see the GeForce GTX 480M). GK110 (GeForce GTX 780, 780 Ti, Titan, and Titan Black) at least has the high performance computing market to fall back on, but GK104 continues to do the heavy lifting for Nvidia in mobile.

Performance per watt is fast becoming the most important metric we’re judging hardware by, and it’s evident there are still big gains to be made in this department on the GPU side, at least if Nvidia’s Maxwell is any indication. The positive reception to the GeForce GTX 750 Ti is proof enough of that. Anyone who ignores it, be they as insignificant as a single builder or as massive as a semiconductor company, does so at their own peril.

https://www.techspot.com/news/57565-op-ed-the-future-will-be-measured-in-performance-per-watt.html