"Well you also have the issue of lazy devs using threads but not utilizing them fully. We more or less need to brute force our way around developer laziness."

The obvious response to such a statement (and this isn't directed at you; it's a remark that comes up repeatedly) is to ask 'what is the evidence that a group of developers is being lazy?' Perhaps it's better worded as 'what is the evidence to suggest that a game would perform better if the engine were using parallel processing?'

The simple answer is that there is no such evidence, unless the developers in question openly stated the full processing sequence in their game engine and/or broke down the respective performance bottlenecks and what they had done in response.

However, we can look at benchmark results from a game where the number of available cores is restricted. For example:

At first glance, the results for Assassin's Creed Odyssey would strongly suggest that multithreading offers significant performance benefits. But first note the system specs and game settings being used, specifically that the GPU is an overclocked RTX 2080 and the resolution is 1080p. These two factors alone put the performance bottleneck almost entirely on the CPU, so even minor increases in performance will be clearly highlighted.

Then note that the game is fundamentally created for the PS4 and XB1 platforms; the CPU in both systems is essentially a dual-CPU setup, with 4 cores in each:

Cross-CPU reads and writes are fraught with latency problems and need to be avoided at all costs, so games for these consoles that use multithreading need to control what is done in parallel in such a way as to keep performance-critical threads (e.g. animation, physics, networking, culling) on one CPU and the rest (general OS, I/O, audio) on the other.

But no matter which multithreading approach the engine uses (synchronous parallel compute, asynchronous parallel compute, synchronous parallel data objects), there is always a hitch somewhere, either in the form of a data dependency (i.e. the result of one thread depends on another thread having finished first) or a thread stall (i.e. the engine can't move on until all threads in flight have been processed).
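To make those two hitches a bit more concrete, here's a minimal Python sketch. It isn't taken from any real engine; the function names (`animate`, `physics`, `cull`, `run_frame`) and the toy arithmetic are made up purely for illustration. Two jobs run side by side, but the frame can't continue until both have finished (the stall), and the culling step can't start without both results (the data dependency).

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical per-frame jobs; the arithmetic is a stand-in for real work.
def animate(entities):
    return [e * 2 for e in entities]

def physics(entities):
    return [e + 1 for e in entities]

def cull(animated, simulated):
    # Data dependency: this step needs the output of BOTH jobs above.
    return [a + s for a, s in zip(animated, simulated) if a + s > 5]

def run_frame(entities):
    with ThreadPoolExecutor(max_workers=2) as pool:
        anim_future = pool.submit(animate, entities)
        phys_future = pool.submit(physics, entities)
        # Thread stall: .result() blocks until each job is done, so the
        # frame waits for the slower of the two before moving on.
        animated = anim_future.result()
        simulated = phys_future.result()
    return cull(animated, simulated)

print(run_frame([1, 2, 3]))  # prints [7, 10]
```

This only shows the structure of the problem, not a real speed-up: however many cores are available, `cull` still can't begin until the slowest job before it has finished.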

This may go some way to explaining why the performance increase in the video above, especially in the 0.1% low values, is so significant when going from 4 to 6 cores, and then again from 6 to 8 - the game engine is fundamentally designed to operate on a system with two 4 core CPUs. Does this make Ubisoft's developers lazy for not creating an entirely new engine, just for the PC platform?

Possibly, but it really comes down to cost. The Anvil engine, like Unreal or Unity, is an economical approach to creating a game system for 8 different platforms. For Odyssey, a relatively large team of programmers (roughly 100) works on the engine as a whole, but this is just a tiny part of the 10 divisions of development and publishing organisations used to create the title.

Every engine development team is faced with the same challenges - there's only so much time and so much money with which to work, and that always means certain design choices have to be made. In the case of cross-platform games, it's quicker and easier to push the performance bottleneck onto the GPU than it is to create one engine specifically for the PC and another for consoles.

For a PC-only game, such as Total War: Three Kingdoms, it's a different story, especially if the developers aim to have as broad a range as possible for the system requirements. For that particular Total War game, the minimum CPU is a dual core processor, with the clear caveat that the frame rate is going to be around 30 fps on average, with low settings used throughout. The recommended CPU for the best overall experience is a 6 core, 12 thread processor - not 8 cores or more, which may seem surprising given that it's a 2019 release. That's not a sign of laziness, just one of design and cost constraints.

Evernessince said: Features like ray tracing require a lot of CPU overhead and that's why Battlefield recommends an 8 core CPU when RTX is enabled.

This doesn't explain why Remedy recommends a 4 to 6 core processor for Control, nor why 4A Games recommends a 4 core one for Metro Exodus.

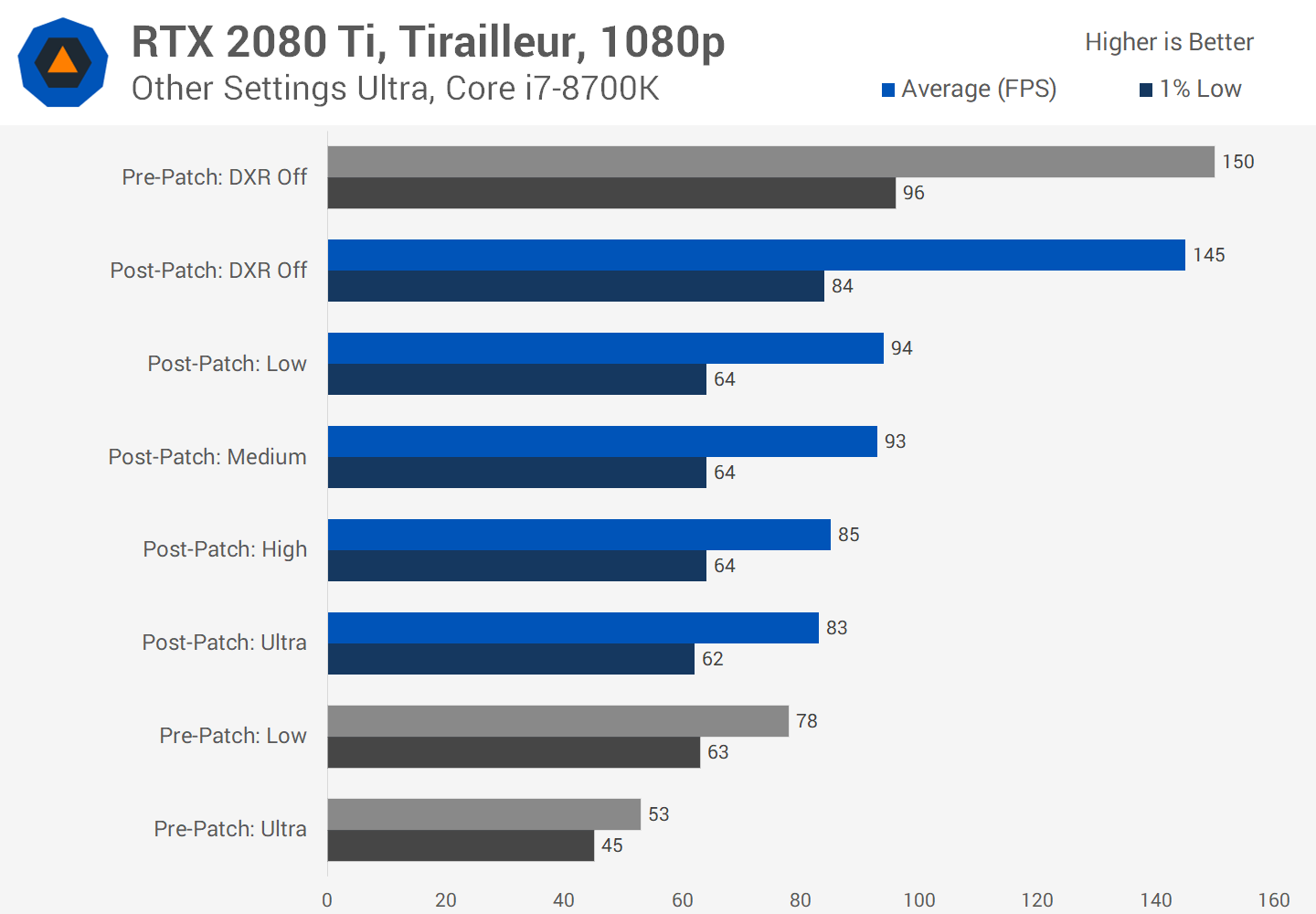

If you look at this analysis of Battlefield V's ray tracing performance, you can see that Battlefield V may just be a one-off case:

If you look at the post-patch Ultra results, comparing 1080p to 1440p (roughly a 78% increase in pixels to be processed), the 1% low drops from 62 to 59 fps and the average from 83 to 60 fps - drops of 5% and 28% respectively.
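For anyone who wants to check the arithmetic, here's a quick Python sketch. The fps figures are the ones quoted above; the pixel counts assume the standard 1920x1080 and 2560x1440 resolutions, and `pct_change` is just a helper name made up for this example.

```python
def pct_change(before, after):
    """Percentage change going from `before` to `after`."""
    return (after - before) / before * 100

# Assumed standard resolutions for 1080p and 1440p.
pixels_1080p = 1920 * 1080
pixels_1440p = 2560 * 1440

pixel_increase = pct_change(pixels_1080p, pixels_1440p)
low_drop = -pct_change(62, 59)   # 1% low: 62 fps -> 59 fps
avg_drop = -pct_change(83, 60)   # average: 83 fps -> 60 fps

print(f"pixels: +{pixel_increase:.1f}%")   # pixels: +77.8%
print(f"1% low: -{low_drop:.1f}%")         # 1% low: -4.8%
print(f"average: -{avg_drop:.1f}%")        # average: -27.7%
```

The 1% low barely moves while the pixel count rises by over three quarters, which suggests something other than the GPU is setting the floor in that test.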

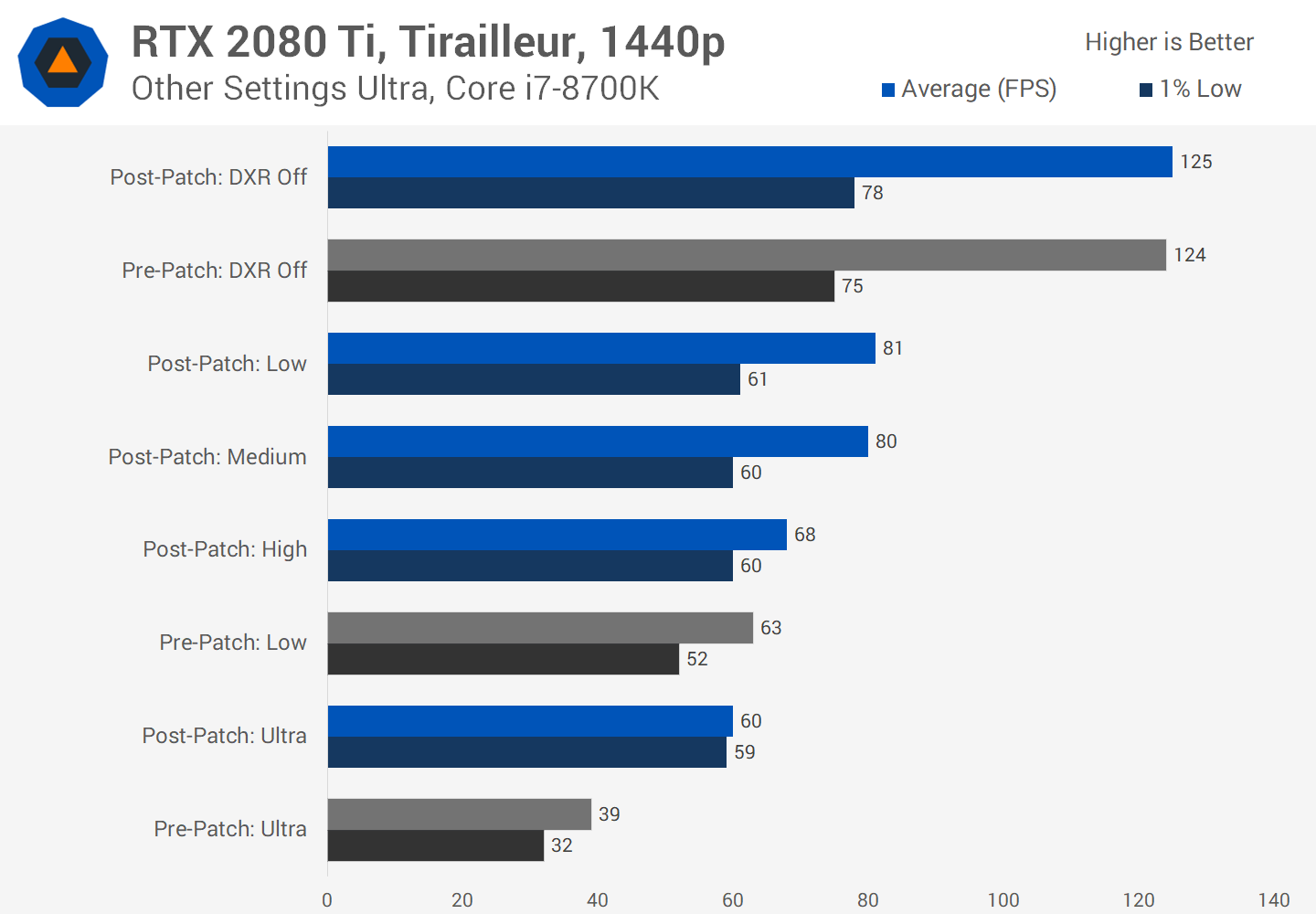

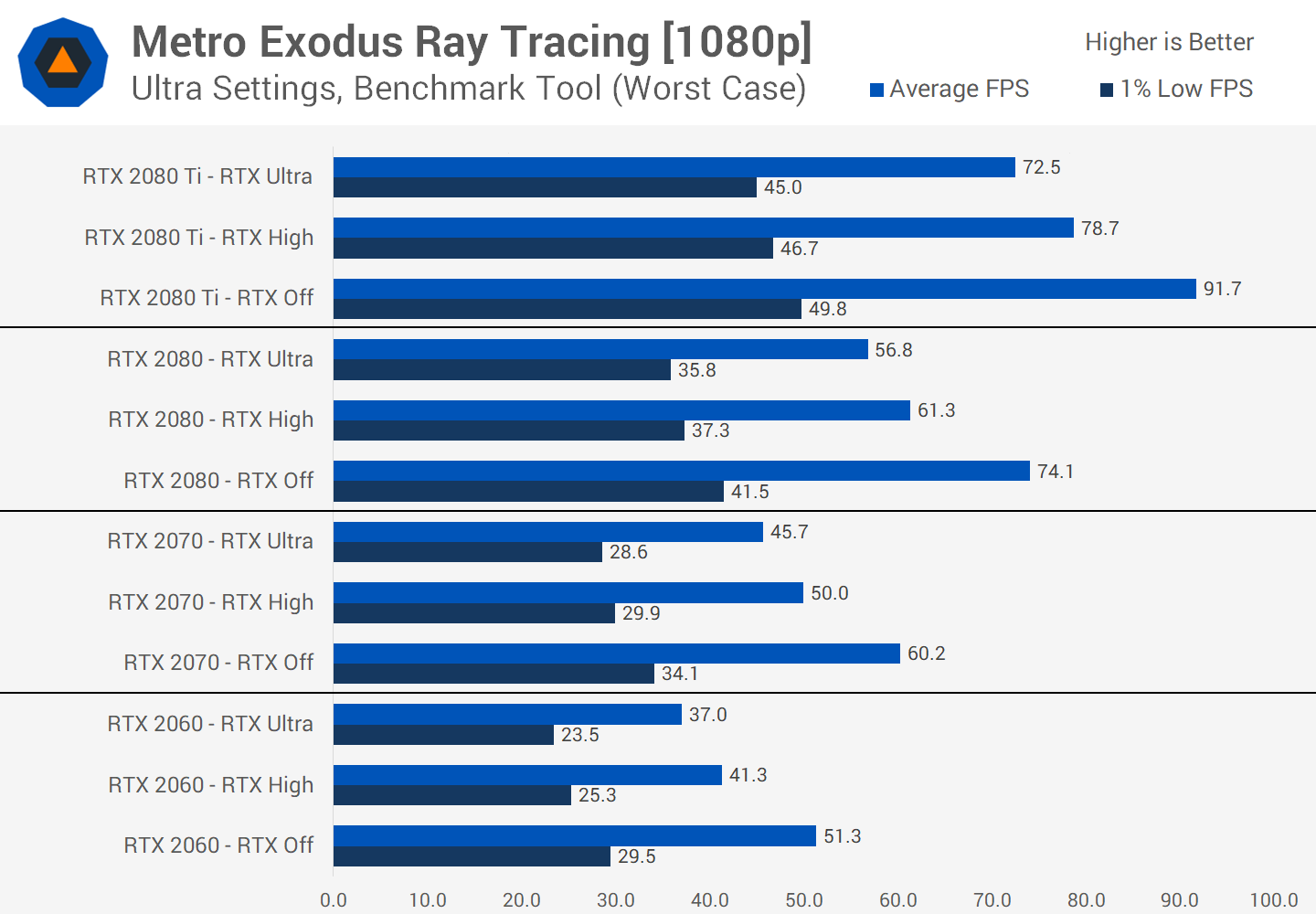

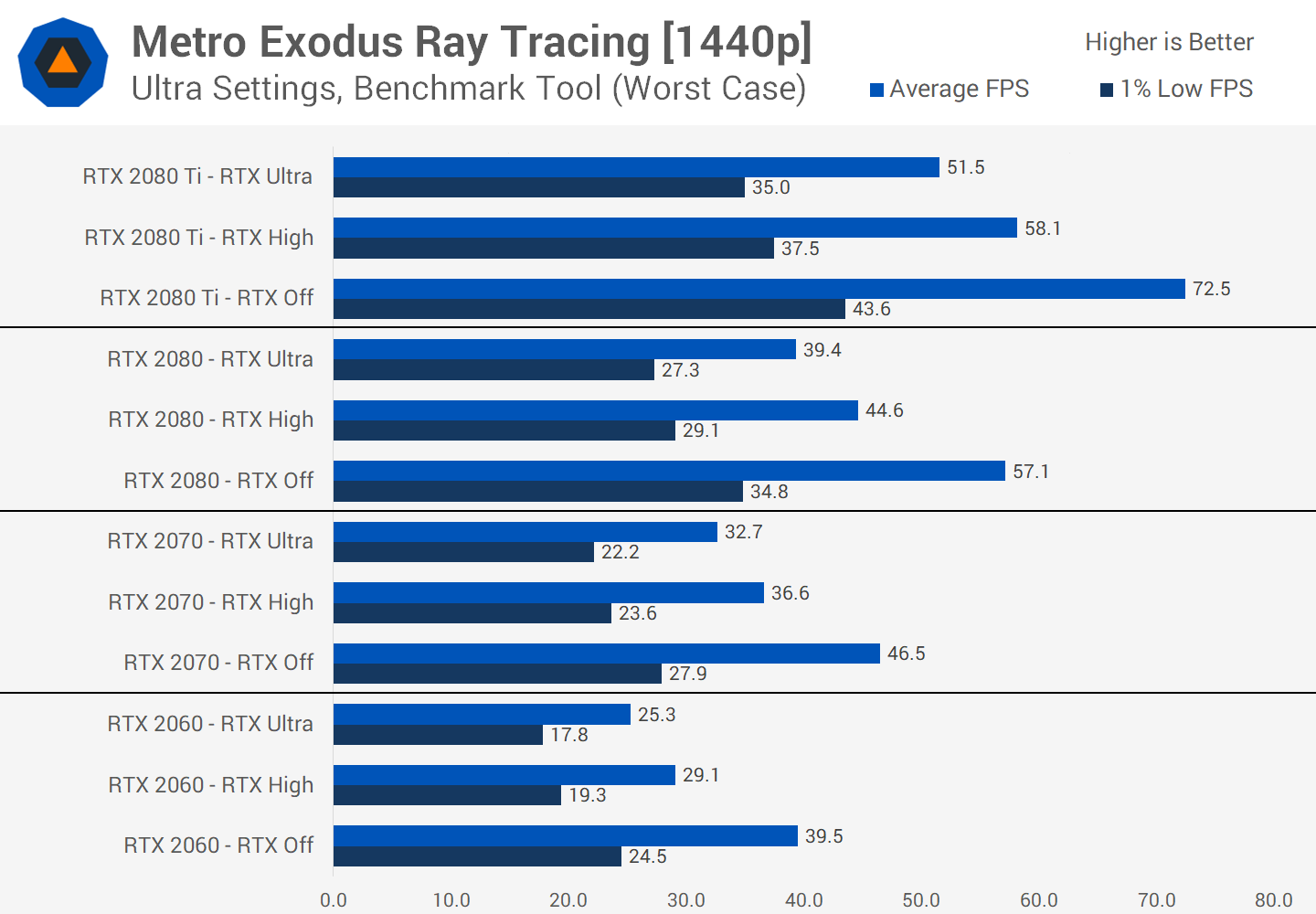

Compare that to Metro Exodus:

For the same GPU, the drops are 22% (1% low) and 29% (average); this means that the use of ray tracing in Metro is a lot more GPU dependent than it is in Battlefield V, and the Metro test was done with a CPU with 2 more cores. So the use of RT isn't necessarily heavily dependent on the CPU.