There are many third-gen Ryzen processors to pick from, but you can narrow the choices down easily, and having a budget in mind will help you do that quickly. It's also good to understand what kind of performance boost you'll get by going up a tier, or how much you'll be sacrificing by going down to save some money.


Ryzen 3 vs. Ryzen 5 vs. Ryzen 7 vs. Ryzen 9

- Thread starter Steve


QuantumPhysics


Adding more cores seems to be great for a workstation, but it doesn't seem to matter much for gaming as compared to better optimization of fewer cores.

It's mainly due to how games are currently coded; 8 threads seems pretty standard looking at the data, which is why the 3300X does a good job. Going forward, the next-gen consoles are basically getting a 3700X, but at lower clocks for power consumption, and with the low-level APIs of consoles that'll be very similar to a 3700X running at full speed anyway. So expect, after 2 years of the next gen, to see games coded for 12-14 threads, so a 3900 (12 cores/24 threads) might be better for the average PC gamer in 3 years' time, given the overhead of running Windows and other applications.

Theinsanegamer


I expect the exact opposite. 2 cores of the new consoles are reserved, so games will have access to 6 cores/12 threads at most. Given that the work to get game engines to use 6 cores is already done, I believe we will see games continue to use 6 cores, MAYBE 12 threads, and continue to not take advantage of 8+ cores, as there is no financial incentive to do so unless you are making a PC-exclusive game.

The Xbox 360 had 3 cores and 6 threads, but games didn't start using more than 2 cores in any significant manner until the PS4 came out in 2013.

There is also the issue of games not inherently being multithreaded applications. Throwing more cores at the problem will not always mean they will be used. Even today we still see 1-2 threads being loaded way more heavily than the other 4-6. The boost another 2 cores will provide under such a system is very minimal, and this is with engines optimized for 6 very slow Jaguar cores. Now that we'll have 6 fast Zen cores available, there's no incentive to push even more multithreading vs. taking advantage of the 6 cores they already have.
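The point about extra cores giving diminishing returns can be made concrete with Amdahl's law, a standard textbook model that is not mentioned in the thread itself. The sketch below assumes a fixed fraction `parallel_fraction` of the work can be spread across cores and ignores SMT, clock speed, cache and scheduling overhead; the fractions used are illustrative guesses, not measurements from any game.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup over a single core under Amdahl's law."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


def gain_from_extra_cores(parallel_fraction: float, base_cores: int, extra_cores: int) -> float:
    """Relative improvement (0.05 means +5%) from adding extra_cores to base_cores."""
    before = amdahl_speedup(parallel_fraction, base_cores)
    after = amdahl_speedup(parallel_fraction, base_cores + extra_cores)
    return after / before - 1.0


if __name__ == "__main__":
    # Illustrative parallel fractions only; real game engines are not this simple.
    for p in (0.5, 0.8, 0.95):
        gain = gain_from_extra_cores(p, base_cores=6, extra_cores=2)
        print(f"parallel fraction {p:.2f}: going from 6 to 8 cores gains {gain:.1%}")
```

Under this model a workload that is only half parallel gains under 4% from two extra cores, while a highly parallel one gains far more, so the answer hinges on how parallel future engines actually become.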

Homerlovesbeer


Guys, can you stop testing only at 1080p and add 1440p and even 4K, as I'm sure the frame rates will then differ more from processor to processor.

I really don't care a bit about 1080p resolution. Honestly it's only for the frame rate nutters. The rest of us appreciate a game looking great and being absorbing.

Love your work otherwise guys. Cheers.


amghwk


How are these new Ryzen processors when it comes to Linux?

I'm moving away completely from Windows 10, no thanks to recent forced updates which started breaking things (even though I liked Win10 for some time in the beginning), and my future builds will be based on Linux.

I think I'm done with the crazy graphics card race and have decided to stay put with my current 5700XT. (For modern gaming, I'm just going to get the PS5, since most new games are released multi-platform. Not that the latest games are anything to shout about anyway, but a new console gives me a backup system for playing the latest releases without worrying about today's obscene flagship video card prices.)

I'm really only interested in the Ryzen 7 or 9 series, probably not Threadrippers due to overkill pricing, for my future builds.

I have come to understand that Linux works much better with Intel processors; I'm just not sure what the latest scene is like with Ryzen.

I compile most apps from source.

Thanks for any input about this.


amghwk


I think both the authors and readers have explained before (many times) why 1080p is used when benchmarking CPUs. It's about bottlenecks, and I think others can explain why this is so better than I can, in easy-to-understand terms.


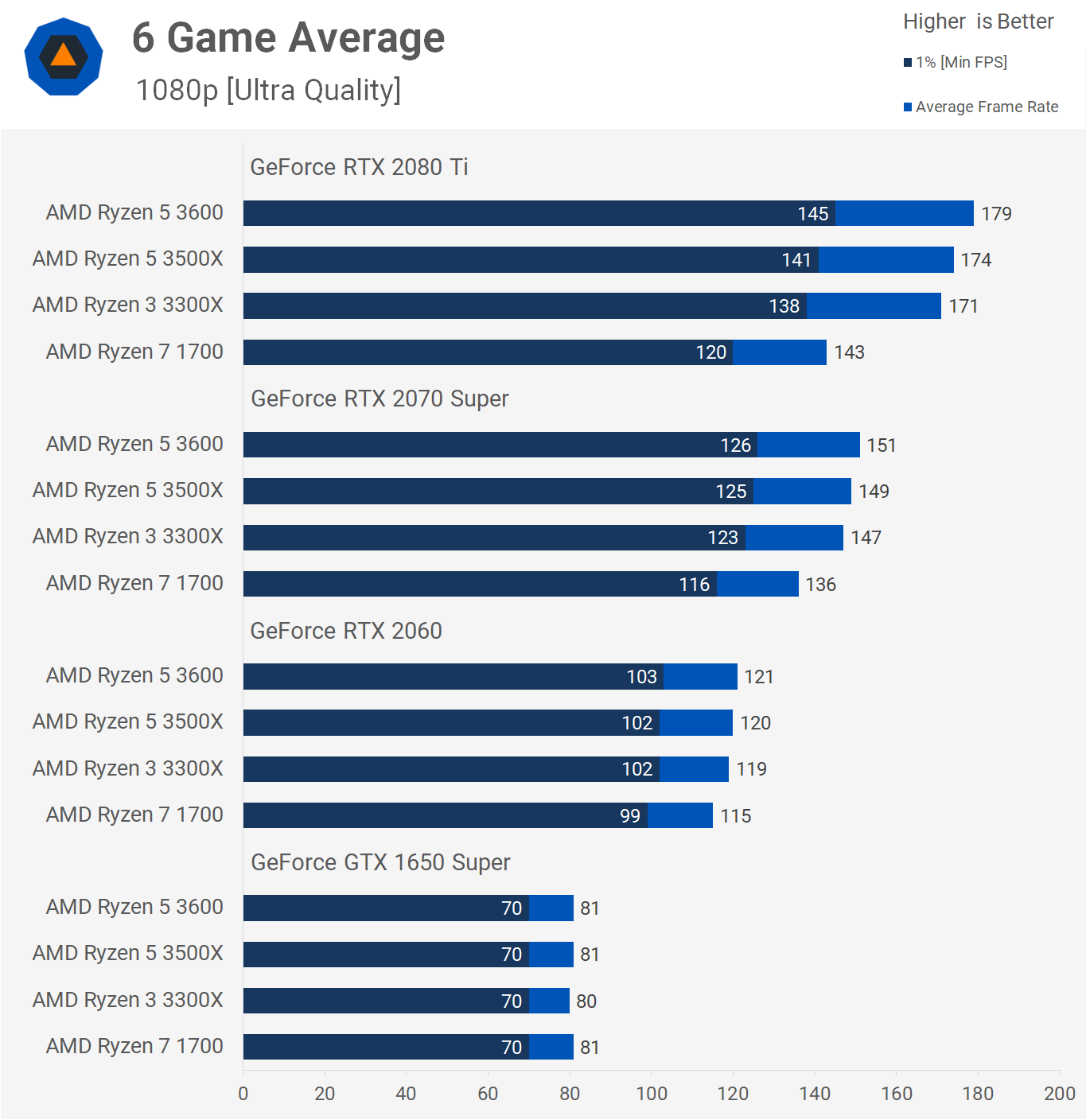

Differences between the CPUs decrease with resolution, because the frame rate in games is increasingly controlled by the performance of the graphics card. You only have to look at the following article to see this:

Ryzen 7 1700 vs. Ryzen 3 3300X: GPU Scaling

AMD's old-time favorite, the Ryzen 7 1700 seems to have aged rather well. Budget PC builders right now can choose between a Ryzen 3 3300X or a...

www.techspot.com


Notice how all 4 CPUs perform within 1 fps of each other at 1080p maximum quality when using a GeForce GTX 1650 Super? That's because the frame rate is being limited entirely by the budget GPU.

Replace it with a 2080 Ti, currently the most capable consumer GPU out there, and there's a clear separation between the CPUs.

One might ask why we don't then use the lowest possible graphics settings, to put the rendering performance limit entirely on the CPU. The reason for using high or the highest possible settings is that many rendering techniques still require the CPU to perform a raft of additional calculations before passing the data on to the GPU.

More importantly, CPU and GPU reviews are not system performance examinations. At no point are we ever suggesting that if you buy a Ryzen 5 3600 and pair it with a GeForce RTX 2060, you too will get 86 fps on average. All game benchmarks in reviews use realistic workloads where possible (i.e. we don't use built-in tests; instead, data is taken from playing the game itself), but that doesn't mean the results will transfer across to other systems.
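The bottleneck argument above can be reduced to a deliberately crude model: each CPU can prepare frames up to some rate, each GPU can render them up to some rate at a given resolution and quality, and the slower of the two sets the delivered frame rate. The CPU and GPU figures below are made-up placeholders, not results from the linked article, and the model ignores frame-time variance and the extra CPU-side rendering work described above.

```python
# Hypothetical frame-rate ceilings, for illustration only.
CPU_LIMITS_FPS = {"CPU A": 140.0, "CPU B": 110.0, "CPU C": 90.0}
GPU_LIMITS_FPS = {"budget GPU": 70.0, "high-end GPU": 160.0}


def delivered_fps(cpu_limit_fps: float, gpu_limit_fps: float) -> float:
    """Crude bottleneck model: whichever component is slower caps the frame rate."""
    return min(cpu_limit_fps, gpu_limit_fps)


def cpu_spread(gpu_limit_fps: float) -> float:
    """Gap between the fastest and slowest CPU when all are paired with the same GPU."""
    results = [delivered_fps(cpu, gpu_limit_fps) for cpu in CPU_LIMITS_FPS.values()]
    return max(results) - min(results)


if __name__ == "__main__":
    for gpu_name, gpu_limit in GPU_LIMITS_FPS.items():
        per_cpu = {name: delivered_fps(limit, gpu_limit) for name, limit in CPU_LIMITS_FPS.items()}
        print(gpu_name, per_cpu, "spread:", cpu_spread(gpu_limit))
```

Raising the resolution lowers the GPU ceiling, which pushes every CPU toward the budget-GPU case where the spread collapses to zero; that is why CPU reviews favor settings where the GPU is not the limit.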

You have it completely back to front. The higher the resolution, the more GPU bottlenecking takes place and the less difference is seen between high- and low-end CPUs. Upping the resolution makes sense for GPU benchmarks, but not for CPU benchmarks.

That's nice, but considering 90% of the market doesn't use more than 1440p, 1080p benchmarks are going nowhere:

https://store.steampowered.com/hwsurvey


Those 2 cores could also be considered to cover the extra overhead of running on a PC, so if the consoles are really using 6c/12t, then the PC will really want a couple of extra cores for Windows overhead already. You can see, even now before the new consoles, that some games run better on the 3700X than on the 3600.

Evernessince



You should have extra cores to handle background processes and other programs regardless. The vast majority of gamers do not run games in benchmark environments. It's nice to not have a system that lags every time Windows decides to do something in the background, or to have to worry about Discord taking up what little CPU resources you do have. GPUs are similar, in that if you run them at 100% you are increasing input lag. That's why it's recommended you cap FPS so that GPU usage stays about 3% below 100%.


Nobina


4K is for noobs that play tripleAAAY telltale games. Pros play on 5:4 with black bars.


Irata


Great work as always, but I have a suggestion: why not test a multitasking scenario?

Running single tasks only shows part of what each CPU is capable of, and I feel that the relatively small differences in many games may look quite different if you had several programs like TeamSpeak, Discord and maybe even streaming running at the same time.

I realize that not everyone is into heavy multitasking, but I'd bet more people run at least several programs/tasks simultaneously than only one at a time with close to no background tasks.

Heck, if I look at all the stuff in my system tray, that alone should keep a thread or two busy, never mind that networking, sound and I/O take up some system resources too.

Having owned slow systems in the past where you had to shut down as many tasks as possible (i.e. basically a benchmarking scenario) to get decent gaming performance, I find knowing how well a system can handle many tasks at the same time useful information.

Here is one example of a multitasking scenario:

www.gamersnexus.net


AMD R7 1700 vs. Intel i7-7700K Game Streaming Benchmarks

This new benchmark looks at the AMD R7 1700 vs. Intel i7-7700K performance while streaming, including stream output/framerate, drop frames, streamer-side FPS, power consumption, and some brief thermal data. - P2: AMD Ryzen Streaming Benchmarks vs. Intel



?? You want a CPU review to test at 1440p and 4K?


It's either noobs or pros? Some people just want to enjoy a variety of games with great graphics on a nice monitor and a smooth experience. Not everyone is looking for every last frame in shooty shooter competitive games. Lots of gamers aren't even into shooty shooter games.

dankbot420


The 3900X is $389 on Newegg right now, plus a free Assassin's Creed Valhalla code.

Nobina


Gamers that aren't into shooty shooter games are way below in the gamer hierarchy.


That's a load of malarkey.

Mr Majestyk


Good article, sure seems like the 3600 is the sweet spot. If these were all tested at stock speeds, I wonder how much a modest overclock would help, at least for gaming?

With the price of the 3700X dropping to $260 on Newegg, it seems to be a better choice unless you purely game. For a mixed use case it's very tempting, but I'm holding off for Vermeer.
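A quick price-per-core comparison using only the two Newegg prices quoted in this thread ($389 for the 3900X, $260 for the 3700X). Prices move constantly, the bundled game is ignored, and cost per core only matters for workloads that actually use the extra cores, so treat this as back-of-the-envelope arithmetic rather than a buying guide.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Listing:
    name: str
    price_usd: float
    cores: int
    threads: int

    @property
    def price_per_core(self) -> float:
        return self.price_usd / self.cores

    @property
    def price_per_thread(self) -> float:
        return self.price_usd / self.threads


# Prices as quoted in the thread at the time; they will have changed since.
LISTINGS = [
    Listing("Ryzen 7 3700X", 260.0, cores=8, threads=16),
    Listing("Ryzen 9 3900X", 389.0, cores=12, threads=24),
]

if __name__ == "__main__":
    for item in sorted(LISTINGS, key=lambda listing: listing.price_per_core):
        print(f"{item.name}: ${item.price_per_core:.2f}/core, ${item.price_per_thread:.2f}/thread")
```

At those prices both chips land at roughly $32-33 per core, with the 3900X marginally cheaper per core.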


mAdmAnDingo


I looked at your charts and compared CPUs by subtracting instead of dividing.

That helped explain some of the results.

The R3 uses half of a physical chip (a CCX in AMD-speak), the R5 and R7 use one whole CCX (I think the R5 is just an R7 with 1 or 2 defective cores), and the R9s use 2 CCXs (again guessing that the 9900 has a defective core somewhere). This matters because of the size of the L3 cache available to each group: the R3 can only use half of the CCX's L3, the R5/R7 the whole L3, and the R9s also get the second CCX's L3 cache.

This shows up perfectly in the compile time benchmark; the times depend almost entirely on how much L3 cache is on tap. The R3 is half as fast as both the R5 and the R7, which barely differ from each other, and the R9s are almost exactly twice as fast as the R5/R7 pair.

Some of the other benchmarks show a similar but less extreme cache effect. I think this helps explain why the R5 and the 9900 seem just a little sweeter than their cousins.

Welcome to TechSpot, bud, I am happy you have joined us. This is a great place to be a part of. You are mostly correct; you are just getting mixed up with CCX and CCD terminology. I can understand what you meant, and on the whole you do have the right idea; it is just your terminology that needs correcting.

A 3300X is actually 1 entire CCX with all 4 cores enabled, and a 3100 is 2 CCXs with 2 cores enabled per CCX. A CCX sits inside a CCD, with up to 2 CCXs per CCD, so the CCXs of a 3800X and lower CPUs are located in 1 CCD, which is 1 chiplet (regardless of core count), while the 3900X/3950X are made up of 2 CCDs containing 4 CCXs in total, at 2 CCXs per CCD, and each CCD is one chiplet. I think you are confusing CCDs with CCXs. The CCXs communicate with each other over the Infinity Fabric.

A 3700X is 2 CCXs with all 4 cores enabled in each, which together make up 1 CCD (a fully enabled CCD, as it were). Ryzen Master shows this clearly: it labels my 3700X as 1 CCD (CCD 0) split into two 4-core CCXs called CCX 0 and CCX 1. Those 2 CCXs (1 CCD) are located in 1 chiplet, whereas a 3900X/3950X has two 4-core CCXs per CCD, with 2 CCDs in total (2 chiplets).

So AMD's current layout is 4 cores per CCX when fully enabled, with 2 CCXs per chiplet, which is counted as 1 fully enabled CCD. My 3700X consists of CCX 0 and CCX 1, and those two combined make up 1 CCD, in this case CCD 0. A 3900X/3950X will have 2 CCDs (CCD 0 and CCD 1), each made up of 2 CCXs (with the CCX core count depending on the CPU model).

So a 3300X is half of one fully enabled CCD, but an entire CCX. It is still a CCD nonetheless, just not a fully enabled one, as only 1 CCX is actually enabled rather than two 4-core CCXs like on my 3700X.

And a 3950X is the fully enabled 2-CCD part (2 chiplets), with each CCD made up of two 4-core CCXs. All of these CPUs also have an I/O die chiplet, so a fully enabled 3950X comprises 2 CCD chiplets, each with two 4-core CCXs, plus 1 I/O chiplet.

Does that make sense, or should I try to be more specific? I tried to make it as uncomplicated as I could, so my apologies if it comes across as a bit disjointed and messy; I do tend to do that sometimes, and it was not my intention.

Here is an article explaining the difference, in case I am not making any sense (which wouldn't surprise me; I am not great at explaining sometimes).

AMD CCD and CCX in Ryzen Processors Explained

AMD’s Ryzen CPUs are made up of core complexes called CCDs and/or CCXs. But what is a CCX and how is it different from a CCD in an AMD processor? Let’s have a look. There are many factors responsible for AMD’s recent success in the consumer market. But, the chiplet or MCM design (Multi-chip...

www.hardwaretimes.com


Each Ryzen 3000 desktop CPU has 16MB of L3 cache per CCX, so 32MB when both CCXs are present (as on the 3600/3700X/3800X) and 64MB for the 3900X/3950X.

So you are correct that a 3300X (and 3100) only has access to 16MB of L3 cache. It is only CCD/CCX that you are getting mixed up with; everything else seems sound. The 3300X also avoids the cross-CCX penalties that CPUs with 2 (or 4) CCXs suffer when fetching data from another CCX's cache or passing threads from one CCX's cores to another's, and that actually helps it make up quite a bit of ground against CPUs with 2 or more CCXs.

But the added cores (and cache) of the R5/R7/R9 will usually win out at the end of the day in core-heavy (and some other) situations, despite any penalties. They just don't scale as well as Intel's monolithic die, but AMD is correcting this somewhat with Ryzen 4000 (Zen 3) CPUs and their 8-core CCXs.

But like I said, on the whole you are correct, and I understood exactly what you meant and where you are coming from; it is just terminology at the end of the day. You do have the right idea, and I can see you understand it on the whole. Add AMD's terminology and you are perfect.

Anyway, once again, welcome to TechSpot, bud. I am glad you have joined us.
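The CCD/CCX breakdown and the 16MB-per-CCX figure in the reply above can be captured in a small data model. This is a sketch under stated assumptions: it counts only active CCXs, assumes every active CCX in a given model has the same number of cores enabled (it says nothing about which physical cores are fused off), assumes two threads per core, and ignores the I/O die. The `fits_in_one_ccx` helper is a hypothetical convenience for reasoning about when a given thread count could stay on one shared L3.

```python
from dataclasses import dataclass

L3_PER_CCX_MB = 16    # Zen 2 figure quoted in the reply above
THREADS_PER_CORE = 2  # SMT enabled


@dataclass(frozen=True)
class Zen2Layout:
    model: str
    ccds: int                 # core chiplets (the I/O die is not counted)
    active_ccxs_per_ccd: int  # CCXs with cores enabled
    cores_per_ccx: int        # enabled cores in each active CCX (assumed symmetric)

    @property
    def ccxs(self) -> int:
        return self.ccds * self.active_ccxs_per_ccd

    @property
    def cores(self) -> int:
        return self.ccxs * self.cores_per_ccx

    @property
    def threads(self) -> int:
        return self.cores * THREADS_PER_CORE

    @property
    def l3_mb(self) -> int:
        return self.ccxs * L3_PER_CCX_MB

    def fits_in_one_ccx(self, threads: int) -> bool:
        """True if that many threads could all run on a single CCX's cores."""
        return threads <= self.cores_per_ccx * THREADS_PER_CORE


LAYOUTS = {
    layout.model: layout
    for layout in (
        Zen2Layout("Ryzen 3 3300X", ccds=1, active_ccxs_per_ccd=1, cores_per_ccx=4),
        Zen2Layout("Ryzen 5 3600", ccds=1, active_ccxs_per_ccd=2, cores_per_ccx=3),
        Zen2Layout("Ryzen 7 3700X", ccds=1, active_ccxs_per_ccd=2, cores_per_ccx=4),
        Zen2Layout("Ryzen 9 3900X", ccds=2, active_ccxs_per_ccd=2, cores_per_ccx=3),
        Zen2Layout("Ryzen 9 3950X", ccds=2, active_ccxs_per_ccd=2, cores_per_ccx=4),
    )
}

if __name__ == "__main__":
    for layout in LAYOUTS.values():
        print(f"{layout.model}: {layout.ccds} CCD, {layout.ccxs} CCX, "
              f"{layout.cores}C/{layout.threads}T, {layout.l3_mb}MB L3")
```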


Ryzen CPUs and APUs perform really well under Linux. I'm currently running a 2700X, a 1600AF (UNIX/FreeNAS), a 3900X and a 2400G under Ubuntu and Manjaro with absolutely no issues. A lot of tests can be found here:


https://www.phoronix.com/scan.php?page=news_topic&q=AMD
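For the Linux question, one way to see the CCX layout from userspace is to group logical CPUs by the L3 cache they share, using the standard sysfs cache files (`cpuN/cache/indexM/level` and `shared_cpu_list` under `/sys/devices/system/cpu`). On Zen 2 each L3 belongs to one CCX, so each group should correspond to one CCX; `lscpu --extended` should show comparable cache IDs. This is a minimal sketch assuming that sysfs layout is present; the `sysfs_cpu_root` parameter exists only so the function can be pointed at a copy of the tree.

```python
import re
from collections import defaultdict
from pathlib import Path
from typing import Dict, List

CPU_DIR_PATTERN = re.compile(r"cpu(\d+)")


def l3_groups(sysfs_cpu_root: str = "/sys/devices/system/cpu") -> Dict[str, List[int]]:
    """Group logical CPU ids by the L3 cache they share.

    Keys are the kernel's shared_cpu_list strings (e.g. "0-3,16-19"),
    values are the sorted logical CPU ids that report that L3.
    """
    groups: Dict[str, List[int]] = defaultdict(list)
    for cpu_dir in Path(sysfs_cpu_root).iterdir():
        match = CPU_DIR_PATTERN.fullmatch(cpu_dir.name)
        cache_dir = cpu_dir / "cache"
        if not match or not cache_dir.is_dir():
            continue  # skip cpufreq/, cpuidle/, plain files and CPUs without cache info
        for index_dir in cache_dir.glob("index*"):
            level_file = index_dir / "level"
            shared_file = index_dir / "shared_cpu_list"
            if not (level_file.is_file() and shared_file.is_file()):
                continue
            if level_file.read_text().strip() == "3":
                groups[shared_file.read_text().strip()].append(int(match.group(1)))
    return {key: sorted(cpus) for key, cpus in sorted(groups.items())}


if __name__ == "__main__":
    try:
        for shared, cpus in l3_groups().items():
            print(f"L3 shared by CPUs {shared}: {len(cpus)} logical CPUs")
    except OSError:
        print("No readable sysfs CPU tree found (not running on Linux?)")
```

On a 3700X, for example, you would expect two groups of eight logical CPUs (one per CCX); the exact numbering inside each group depends on how the kernel enumerates SMT siblings.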

I've cobbled together some images of the Ryzen chips examined in this article, so you can see how the various structures are laid out. The source for die shots is Fritzchens Fritz's incredible collection of images:

The cores that are blanked out indicate the number disabled, not the actual ones, as this will vary from chip to chip.

Note that for the 3900X it's entirely possible that one CCD contains all 4 of the disabled cores with the other having none disabled at all.



TechSpot is dedicated to computer enthusiasts and power users.
