The top M.2 slot is directly connected to the CPU and the bottom one is hooked up to the X570 chipset. So although they're both PCIe 4.0 x4 connections, the bottom has to route transactions through the PCH to get to the RAM, etc.

For actual tests, we used the upper slot for everything as we noticed the lower one was slower.

Samsung 980 Pro vs. Sabrent Rocket 4 Plus

- Thread starter William Gayde

Another reason is that the upper slot is closer to the CPU than the lower one. That basically applies to every slot on a motherboard: of two "same" slots, the one closest to the CPU is faster. Exceptions are very, very rare.

For those scratching their X570-shaped head at the term "PCH" as applied to PCIe 4.0, this is Intel's term for a "chipset." Thank you neeyik in any case -- I've seen some attribute the speed differences between M.2_1 and M.2_2 to one slot being PCIe 3.0 (which I doubt is even possible).

If anyone has deep knowledge of this speed-delta artifact, it'd be great to hear from you. My limited understanding is that the bottom (M.2_2) slot *shares* the PCIe 4.0 x4 lanes with the chipset.

[Updated: And yes, distance matters of course...per HardReset's comment.]

Seconding the vote for comparison to WD Black SN850 (1TB, especially): To the best of my understanding -- short of the 5x more-expensive Optane, of course -- WD's latest gem is the strongest-performing consumer/prosumer SSD as of today in early Jan 2021 (i.e., best random read/write, IOPS and access times...sequential read/write being more of a marketing canard than anything else).

The bottom slot communicates with the CPU through a PCIe 4.0 x4 interface (the chipset-CPU link). It's not a big difference compared to the CPU-connected upper slot, but still something. The chipset has more lanes, but the CPU-chipset link sets the limit for simultaneous use. HEDT platforms have many more PCIe lanes from the CPU.

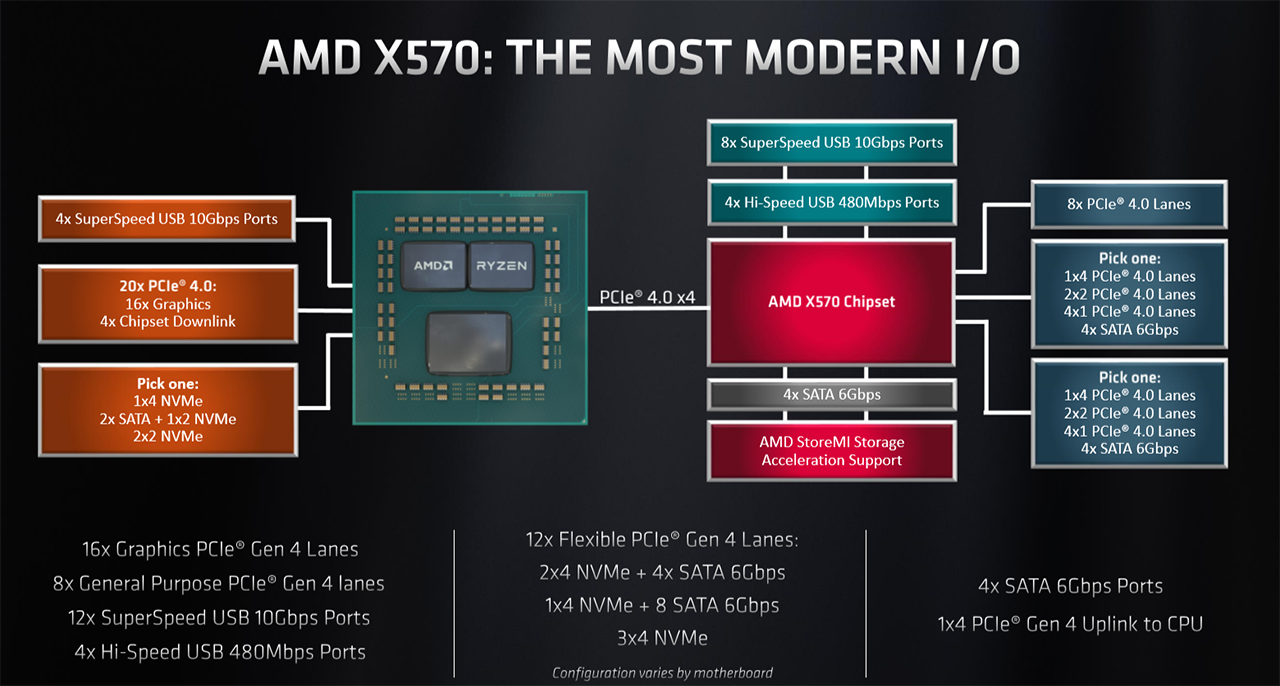

This is how it's all connected:

The important thing to note is that PCI Express is a point-to-point system: there needs to be a PCIe controller in both devices connected by the lanes. The CPU has such a controller and has 24 PCIe lanes, 4 of which are used exclusively for communicating with the PCIe controller in the X570 chipset.

That chip has 16 lanes, but 4 of those are for the CPU-chipset link. The rest can be used for NVMe or SATA. So with the motherboard in this article, when an SSD is placed in the top M.2 slot, it links directly to the CPU and its memory controllers - thus all read/write tests are going to operate as fast as they can.

However, the bottom M.2 slot links to the X570 chipset, which handles the command and data transactions from the CPU. So read/write tests here involve copying data to the PCH first, and then transferring them to the system RAM. No matter how quick that is, it's never going to be as fast as the top slot.
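To put rough numbers on the lane discussion above, here is a small back-of-the-envelope sketch. It assumes the standard PCIe 4.0 figures (16 GT/s per lane, 128b/130b encoding) and ignores protocol overhead and latency, so it gives a ceiling rather than a prediction. The even split between busy devices is purely an illustrative assumption, not how the chipset actually arbitrates traffic.

```python
# Theoretical PCIe 4.0 bandwidth, ignoring packet/protocol overhead.
GT_PER_S_PER_LANE = 16.0          # PCIe 4.0 signalling rate per lane
ENCODING_EFFICIENCY = 128 / 130   # 128b/130b line encoding


def link_bandwidth_gb_per_s(lanes: int) -> float:
    """One-direction ceiling in GB/s for a PCIe 4.0 link of the given width."""
    return GT_PER_S_PER_LANE * ENCODING_EFFICIENCY * lanes / 8


def share_of_uplink(uplink_gb_per_s: float, busy_devices: int) -> float:
    """Illustrative assumption: busy devices split the uplink evenly."""
    return uplink_gb_per_s / busy_devices


top_slot = link_bandwidth_gb_per_s(4)        # M.2 slot wired to the CPU
chipset_uplink = link_bandwidth_gb_per_s(4)  # CPU-chipset link

print(f"Top slot ceiling:        {top_slot:.2f} GB/s")
print(f"Chipset uplink ceiling:  {chipset_uplink:.2f} GB/s")
print(f"Bottom slot, 2 busy devices behind chipset: "
      f"{share_of_uplink(chipset_uplink, 2):.2f} GB/s")
```

On paper both slots have the same ceiling of roughly 7.88 GB/s. The difference is that the bottom slot's ceiling is shared with everything else hanging off the chipset, and every transaction takes an extra hop.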

hahahanoobs

Posts: 5,633 +3,400

I've read the reports and just read your Tom's article. Intel and Samsung were both guilty, and research is tough when you have to buy the drive and test it to see any changes. Some changes are good and some are bad, but I haven't seen anything that warrants enough fear to stick with just two drive makers like you've chosen. I've had Crucial, Kingston, Samsung, WD and TeamGroup - ZERO complaints.

I disagree. I'd say brand matters more. Certain brands have been caught out silently switching not only NAND but also entire controllers and/or firmware, leading to massive performance drops. The ADATA drive tested showed a 40% decrease in tests vs the original review model. Multiple review sites have covered this, including Tom's Hardware, Hexus and LTT.

I'll continue to trust Intel/Samsung for my system drives going forward.

Bottom line: do your research before going for that "too good to be true" deal.

Many brands were missing from that Tom's article, therefore I stand by my statement.

Brand doesn't really matter. DRAM cache does matter. Ignore the rest. Fast storage has already peaked for 90% of us, including gamers and that's why I went with the cheaper X470 over X570 last Spring.

550MB/s is perfect for 80%, PCIe 3 for 15% and PCIe 4 for the remaining 5% of consumers.

Mr Majestyk

Posts: 2,425 +2,259

Why would you buy the overpriced 980 EVO? Yes, let's call it what it is: it's no Pro, it's an EVO in all but name. Can we expect the actual 980 EVO to really be a 980 QVO with QLC, still at a ridiculous price per gigabyte?

Samsung has long ceased to be the benchmark in SSDs. There's lots of great competition now, and the small differences in performance will never be noticed in the real world.

Sabrent or Adata are much better buys just to name a few.

The heck, man... I see that in many tests the Sabrent is quite clearly better, and your conclusion is that Samsung is tied with Sabrent? Add to that the fact that the Samsung SSD is more expensive and the conclusion of this review is... funny, to say the least. Well, this is an example of why Hardware Unboxed deserved what it got from Nvidia.

Think about CAD/CAM work, where your load image might very well be in the tens or hundreds of GB. Or rendering, where 50GB load files are not uncommon. Load from an HDD? Really? And I pay you HOW much an hour to sit there and thumb-twiddle?

I know an architectural firm that did not blink at a $500K laser printer because it could output 100GB A8 plots in minutes instead of days. Productivity pays the bills, mate.

This is from the testing in this article:

"The test we ran copies randomly generated 100GB files ten times while deleting and regenerating them each iteration. This is a total of 1TB worth of reads and 1TB worth of writes.

For reference, the 970 Pro has a rated lifespan of 1200TB written, 1800TB for the Rocket 4, 600TB for the 980 Pro, and 1400TB for the Rocket 4 Plus. It's interesting to note that the lifespan of the newer drives is lower than the older drives. We don't know why this is for the Sabrent drive, but in Samsung's case, the 980 Pro in reality is more of a successor to the 970 Evo which also used 3-bit MLC (aka TLC).

If the drives were using a cache or had poor thermal management, we would see a dip in performance partway through. Thankfully none of the drives have that issue, but the 980 Pro did slow down slightly after the 200GB mark. The Rocket 4 Plus finished the test in just over 400 seconds with the 970 Pro shortly behind. The 980 Pro finished in 3rd at just over 550 seconds. The Rocket 4 was very disappointing here taking twice as long as the next drive."

Notice how it said that with 100GB there was no noticeable slowdown. These drives have a very large cache, and even while you're writing, which goes to cache first, the cache is also writing to main storage.

This is why I made the statement in the first place. If a drive can deal with 100GB without slowing down, then it can pretty much deal with almost any work case scenario and it doesn't matter what that work is, including working with 4K video. Even for rendering, if you have a 500GB file, the application can't even RENDER that fast. Pose this question if you dare to Steve from Hardware Unboxed.
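For anyone who wants to sanity-check the figures quoted from the article, here is a small arithmetic sketch. The TBW ratings and finishing times are the ones quoted above; the times are "just over" values, so the rates are approximate upper bounds. The function names are made up for illustration.

```python
# Figures quoted from the article (TB written ratings, test size, times).
RATED_TBW = {
    "970 Pro": 1200,
    "Rocket 4": 1800,
    "980 Pro": 600,
    "Rocket 4 Plus": 1400,
}
TEST_WRITES_TB = 1.0   # ten copies of a 100GB file
TEST_COPY_GB = 1000.0  # same amount, expressed in GB


def endurance_used_percent(written_tb: float, rated_tb: float) -> float:
    """Share of the rated write endurance consumed, in percent."""
    return 100 * written_tb / rated_tb


def average_copy_rate_gb_per_s(total_gb: float, seconds: float) -> float:
    """Average copy rate over the whole run."""
    return total_gb / seconds


for drive, tbw in RATED_TBW.items():
    used = endurance_used_percent(TEST_WRITES_TB, tbw)
    print(f"{drive}: one test run uses {used:.3f}% of rated endurance")

# Approximate finishing times quoted in the article.
print(f"Rocket 4 Plus (~400 s): {average_copy_rate_gb_per_s(TEST_COPY_GB, 400):.2f} GB/s")
print(f"980 Pro (~550 s):       {average_copy_rate_gb_per_s(TEST_COPY_GB, 550):.2f} GB/s")
```

So even on the lowest-rated drive here (the 980 Pro at 600TB), one full run of this test uses well under 1% of the rated endurance.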

DonquixoteIII

Posts: 188 +131

SixTymes said: "It would be nice to see sustained tests for SSDs to see how fast it is once the cache runs out and then how fast it is once it has to actually write to TLC for example. I have an older PCIe 3 Sabrent that goes from 1,000MB/s to 80MB/s after 50GB. Makes copying video footage unusably slow."

To which 131dbl said: "Most people aren't going to use an NVMe drive in that kind of way, so for most people that's going to be a useless stat that can mislead them into making a bad buying decision.

Common uses: loading and running an OS from, loading and playing games from, along with other applications. Using for scratch area for building files, such as rendering or other work with video or audio files. And I'm sure there are others. I don't think there exists an app that can write out a media file fast enough for that stat to matter, or the end file isn't that big anyway. Writing a 100GB file for instance tests the drive's ability to work as a scratch/work drive, and the reality is it mostly exceeds the ability of almost any app/PC hardware for the speed in which it can build the file.

I do understand there are fringe use cases where a person might want to write a couple hundred GB to an NVMe. I just can't think of a GOOD use case right now. Maybe if your system crashed and you have to restore your drive from a clone, but I'm not going to make a buying decision based on that rare use case. More than likely the clone is a magnetic drive or SATA SSD anyway, in which case these new drives are going to be waiting forever for the data.

OK, sorry there is a use case I can think of, and that's something like a ZFS RAID config. But even there you could use any of these drives in such a way where the NVMe drives aren't going to be the limiting factor, because more often than not these types of RAID configs are used for a large amount of storage, and most of it's going to be mechanical drives or SATA SSDs and the NVMe drives are operating more like a cache for the RAID. Once again though, you run into the issue of how often that RAID gets used for writing hundreds of GBs at a single time."

To which I said: "

Think about CAD/CAM work, where your load image might very well be in the tens or hundreds of GB. Or rendering, where 50GB load files are not uncommon. Load from an HDD? Really? And I pay you HOW much an hour to sit there and thumb-twiddle?

I know an architectural firm that did not blink at a $500K laser printer because it could output 100GB A8 plots in minutes instead of days. Productivity pays the bills, mate."

To which 131dbl made some kind of reply that completely missed the point.

If you are in a production environment that requires loading a very large file before you can even start to work, then sustained reads / writes ARE important.

People that manage the budget for these types of workplaces will spend very large sums on productivity enhancements. A RAID array of SSDs with read / write in the 20k range is not uncommon. The faster the better, when people cannot even START working until a very large file (or set of files) finishes loading.

Your possible lack of experience with these types of productivity environments may lead you to not understand just how crucially important load times really are in such an environment. CAD / CAM, rendering farms, GIS work, even processing MRI files. All usually require loading up very large file sets before work can even start. And, writing back these file sets before work can even continue, for probable checking by a supervisor before these file sets can be sent back for revision or committed to the final product.

These environments DO exist, and people DO work there. I can envision an SSD based array with Optane memory as a very large cache to decrease total read / write times, all funded by productivity increases.
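The sustained-write question raised by SixTymes is easy enough to test for yourself. Below is a minimal sketch, not the article's method: it writes incompressible data in fixed-size chunks, forces each chunk to the device, and records the rate per chunk so you can see where (or whether) the speed falls off. The function names, the chunk size and the 50% drop threshold are all assumptions picked for illustration.

```python
import os
import time


def write_throughput_profile(path, total_bytes, chunk_bytes):
    """Write total_bytes to path in chunks; return the MB/s of each chunk."""
    chunk = os.urandom(chunk_bytes)  # incompressible, so the drive can't cheat
    rates = []
    written = 0
    with open(path, "wb") as f:
        while written < total_bytes:
            start = time.perf_counter()
            f.write(chunk)
            f.flush()
            os.fsync(f.fileno())  # push the data out of the OS page cache
            elapsed = max(time.perf_counter() - start, 1e-9)
            rates.append(chunk_bytes / 1e6 / elapsed)
            written += chunk_bytes
    return rates


def first_slowdown(rates, drop_fraction=0.5):
    """Index of the first chunk slower than drop_fraction of the first chunk.

    Returns None if the rate never drops that far.
    """
    if not rates:
        return None
    baseline = rates[0]
    for index, rate in enumerate(rates):
        if rate < baseline * drop_fraction:
            return index
    return None
```

To reproduce something like the 50GB drop-off described above, you would call `write_throughput_profile` with a total well past the suspected cache size (for example 200GB in 1GB chunks) on the drive under test, then multiply the index returned by `first_slowdown` by the chunk size. Bear in mind this writes real data and costs drive endurance.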

You forget a lot of people may be using this drive for local machine learning or data science, and writes of several hundred GB wouldn't be out of the question either. Also, almost every DevOps or full stack developer is going to prototype their app locally before deploying it to the cloud, not to mention folks who are compiling Chromium or Linux, for example (some reviewers will use those as a benchmark too). That's why Storage Review still posts SQL Server tests for consumer drives; sustained random 4K mixed (hi, MapReduce!) and latency are going to be key.

daveteauk

Posts: 35 +6

I've recently discovered a very strange phenomenon with the R4+. On my Asus ROG Crosshair VIII Hero, when under load (Handbrake), if it's in the M.2 slot nearest the CPU it runs at c. 50°C, yet if I move it to the 2nd slot, nearest the chip fan, it runs at c. 60°C, using the same file doing the same thing in Handbrake! Go figure. Both positions have the Asus heatsinks attached. The move experiment was an attempt to figure out a slow speed issue in CrystalDiskMark64, where my R4+ is only getting 1100MB/s!! That's much slower than my outgoing R4 (both 1TB), which got c. 4200MB/s in the same place with all the same OS and software. Even the bare and empty R4+ was only getting c. 1200MB/s writes. Reads were generally OK at 6800MB/s. Sabrent 'support' has been atrocious. The R4+ is going back, and a Samsung 980 Pro is on order!

Thanks for the review. One thing that interests me with SSDs is operating temperature. Some SSDs operate fairly hot while others are much cooler under load. For example, my 970 Evo Plus gets quite warm under load (60 degrees) while other NVMe drives I've used stay in the 40s.

What are the operating temps of the 980 vs Sabrent?

What issue is that? CrystalDiskMark giving poor results is not an issue by itself. Try at least two other benchmark tools to see whether there is a real issue.

As for the temperature, 60°C is not any kind of problem, and moving the M.2 drive to another slot will surely affect temperatures.

With that information, there are no issues.

DonquixoteIII

Posts: 188 +131

Have you checked your Asus manual to see where the slots connect? If one goes direct to the CPU and the other goes to the shared link...
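Besides the manual, the negotiated link can be checked in software. The sketch below assumes a Linux system (the poster appears to be on Windows, so treat this as an illustration for anyone who can boot a live USB). It reads the standard PCI attributes that the kernel exposes under sysfs for each NVMe controller. The function name is made up for this example.

```python
from pathlib import Path


def nvme_link_status(sysfs_root="/sys/class/nvme"):
    """Return {controller: {attribute: value}} for each NVMe controller.

    Reads the PCI link attributes the Linux kernel exposes in sysfs.
    Returns an empty dict if the directory does not exist.
    """
    attributes = (
        "current_link_speed",
        "current_link_width",
        "max_link_speed",
        "max_link_width",
    )
    status = {}
    root = Path(sysfs_root)
    if not root.is_dir():
        return status
    for controller in sorted(root.iterdir()):
        pci_device = controller / "device"  # link to the underlying PCI device
        info = {}
        for attribute in attributes:
            attribute_file = pci_device / attribute
            if attribute_file.is_file():
                info[attribute] = attribute_file.read_text().strip()
        if info:
            status[controller.name] = info
    return status


if __name__ == "__main__":
    for name, info in nvme_link_status().items():
        print(name, info)
```

A drive that reports a current link speed or width below its maximum has negotiated a slower link than it is capable of, which would be worth ruling out before blaming the drive. This does not say whether a slot hangs off the CPU or the chipset; for that, `lspci -tv` prints the bus tree, and the motherboard manual remains the authoritative source.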

I'm going to stick to the point that most creators/developers or whatever are not going to notice one bit of difference with a drive that can, as stated in this article, write almost 200GB before there is a noticeable slowdown. So, maybe 0.01% of those using systems may fit into this category. If you feel that's incorrect, back it up with PC usage data, because a person here or there that may exceed what this drive can do is such a tiny minority that it's probably not worth it for Samsung or most any other company to put TOO much effort into trying to solve that problem on a single drive. After all, there are such things as RAID.

Once again, most apps that are producing data can't even produce it at a rate that a single NVMe can write at, which means that 99.99% or more of workloads are going to be met by this drive.

This drive is going to solve the BIGGER issue for most users: price. Put two of these 1TB drives into a RAID 0 if you want to talk about a work area and you are going to FAR exceed the requirements of pretty much anything that produces data, except again, that 0.01% or fewer of people that have extreme use cases. And it's SO much cheaper than what high quality drives cost in the past. And no, I don't think that compiling most programs results in much drive usage, and a tiny droplet of people are compiling Chromium, and they know how to create a ZFS RAID.

I used to program BTW, but I wasn't compiling large packages. Compiling is mostly a load on the CPU, since it's taking text and creating an executable. I'd like to see data that suggests that a CPU can compile faster than it can output data to an NVMe. AND this is why compiling tends to be a benchmark now for CPUs. I could see how in the olden days of mechanical drives or even SATA SSDs this was an issue, but writing a continual stream of data to a drive is basically the same thing as writing one large data file, which an NVMe can do incredibly fast.

Once again, I'd suggest you pose some of this to Steve from Hardware Unboxed, who also posts articles on Techspot, and see if he finds a single NVMe a bottleneck for any of the CPU testing he does.

You know, match your work to a proper system??

DonquixoteIII

Posts: 188 +131

One wonders WHY you would argue against valid use cases. What's the point? The use cases mentioned by many above are valid, and Storage Tech is a well known, trusted source. What is the point that you are trying to make here?

The only justification I can see is that in your opinion professionals should not be visiting Techspot

I guess you didn't read. People who have more demanding use cases understand their use case, I hope. So, why wouldn't they use a system that's designed for their use case?? I said NOTHING about people's rights to their use cases and that's a very odd interpretation of what I typed out.

What I suggested, and worded pretty clearly is these are such minority use cases and a drive maker doesn't have to try to include EVERY use case for a drive they design. People who have more demanding use cases are smart enough to use a RAID. Duh??

Next, I said that I doubt that even the use cases you mentioned, especially the compile, would be slowed down one BIT by this drive, as it's more of a demand on the CPU. Once again, directly from THIS review, they didn't notice a slowdown until after writing about 200GB. Are you telling me that compiling Chromium is going to make an .EXE that's over 200GB?? I think you're creating fantasy, dude.

Then I said to pose these questions to Steve from Hardware Unboxed, since he actually TESTS that scenario.

So how is any of this saying people shouldn't have their specific use cases? I suggested people should be smart enough to put together a system that works well for their use cases. If they can't, they probably should be doing another job. Or at least be smart enough to talk about their needs to an IT specialist who can create a proper system for their use case.

Really this conversation is turning into meaningless words with your last post. So, show REAL evidence about anything that pushes the output of a CPU faster than the capability of this drive, and then I'll tell you why they should have at least a RAID 0, or if they work professionally and can't afford to even lose seconds if a RAID 0 crashes, then a ZFS RAID for more reliability with the redundancy it offers. Why can't you figure this part out?

I already mentioned the use case for those who produce videos, and I've been told by more than one Youtuber that the output of their system, and they're using Threadrippers, doesn't exceed the capability of a SINGLE NVMe drive, and these were gen 3 drives when I would have asked, or first gen, PCIe gen4 drives which really weren't any better, so even slower than the 980 Pro. When I run compression, even with minor compression settings with 7-Zip, using an AMD 3700X, it doesn't even exceed the write capability of my SATA SSD, so FASTEST setting for compression using 7-zip. Decompression is much faster, but even that is still handled by a SATA SSD, with my 3700X.
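The compression claim above is the kind of thing anyone can measure rather than argue about. Here is a rough sketch using Python's built-in `lzma` module at its fastest preset. To be clear about the assumptions: this is single-threaded and is not 7-Zip itself, the sample data is synthetic, and the 550MB/s figure is the SATA number quoted earlier in the thread, so the result only indicates the order of magnitude on your own machine.

```python
import lzma
import os
import time

SATA_SSD_WRITE_MB_PER_S = 550  # SATA figure quoted earlier in the thread


def sample_data(size_mb: int = 8) -> bytes:
    """Build a synthetic buffer mixing repetitive text and random bytes."""
    block = b"frame 000123 vertex 1.000 2.000 3.000\n" * 256 + os.urandom(4096)
    size = size_mb * 1_000_000
    repeats = size // len(block) + 1
    return (block * repeats)[:size]


def compression_output_rate(data: bytes, preset: int = 0) -> float:
    """MB/s of compressed output produced by single-threaded LZMA."""
    start = time.perf_counter()
    compressed = lzma.compress(data, preset=preset)
    elapsed = max(time.perf_counter() - start, 1e-9)
    return len(compressed) / 1e6 / elapsed


if __name__ == "__main__":
    rate = compression_output_rate(sample_data())
    verdict = "below" if rate < SATA_SSD_WRITE_MB_PER_S else "above"
    print(f"Compressed output: {rate:.1f} MB/s, {verdict} the SATA figure")
```

If the output rate stays below what the drive can write, the CPU is the bottleneck for that workload, which is the point being argued here. A workload that produces data faster than that would be the counter-example being asked for.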

DonquixoteIII

Posts: 188 +131

I guess you didn't read. People who have more demanding use cases understand their use case, I hope. So, why wouldn't they use a system that's designed for their use case?? I said NOTHING about people's rights to their use cases and that's a very odd interpretation of what I typed out.

What I suggested, and worded pretty clearly is these are such minority use cases and a drive maker doesn't have to try to include EVERY use case for a drive they design. People who have more demanding use cases are smart enough to use a RAID. Duh??

Next, I said that I doubt that even the use cases you mentioned, especially the compile, would be slowed down one BIT by this drive, as it's more of a demand on the CPU. Once again, directly from THIS review, they didn't notice a slowdown until after writing about 200GB. Are you telling me that compiling Chromium is going to make an .EXE that's over 200GB?? I think you're creating fantasy, dude.

Then I said to pose these questions to Steve from Hardware Unboxed, since he actually TESTS that scenario.

So how is any of this saying people shouldn't have their specific use cases? I suggested people should be smart enough to put together a system that works well for their use cases. If they can't, they probably should be doing another job. Or at least be smart enough to talk about their needs to an IT specialist who can create a proper system for their use case.

Really this conversation is turning into meaningless words with your last post. So, show REAL evidence about anything that pushes the output of a CPU faster than the capability of this drive, and then I'll tell you why they should have at least a RAID 0, or if they work professionally and can't afford to even lose seconds if a RAID 0 crashes, then a ZFS RAID for more reliability with the redundancy it offers. Why can't you figure this part out?

I already mentioned the use case for people who produce videos. More than one YouTuber has told me that the output of their system, and they're using Threadrippers, doesn't exceed the capability of a SINGLE NVMe drive. These were gen 3 drives at the time I asked, or first-gen PCIe gen 4 drives, which really weren't any better, so even slower than the 980 Pro. When I run compression with 7-Zip on an AMD 3700X, even at the FASTEST (lightest) compression setting, it doesn't exceed the write capability of my SATA SSD. Decompression is much faster, but even that is still handled by a SATA SSD with my 3700X.

You do realize, I hope, that data centers buy up most of the tech, so by your standards individual users running a desktop IS the minority use case.

M.2 NVMe SSDs in a RAID configuration are the fastest RAID available. PCIe 4.0 with many Rocket 4 Plus SSDs should give around the fastest read/write speeds of ANY RAID configuration. However, there is the issue of long-term sustainable speeds. Hence the need for reviewers (like Storage Review) to focus on such 'minor' details.

ZFS is pretty much the slowest, crappiest implementation of any type of RAID, designed as a cheaper alternative by Sun Microsystems to be able to use any and all extra disks that an ENTERPRISE might have lying around.

It is too bad that Larry killed off Solaris... There was a Solaris x86 release with ZFS... but you can't get it anymore. Too bad. Looks like my copy just got more precious.

evolucion8

Posts: 83 +39

There is no such thing as 3-bit MLC; that's Samsung and their terrible marketing ideas. Some newer TLC drives have less durability than previous TLC drives. Why? Simple: previous TLC drives used 64-layer 3D NAND, and now they use 96-layer 3D NAND, packing more data at higher density into less space, hence less durability. That's still better than the fiasco of the QLC SSDs that are around now.

daveteauk

Posts: 35 +6

There IS/was a real issue...

What issue is that? CrystalDiskMark giving poor results is not an issue. Try at least two other benchmark tools as well to see if there is a real issue.

For the temperature issue, 60°C is not any kind of problem, and moving the M.2 drive to another slot will surely affect temperatures.

With that information there are no issues.

Prior to the R4+, I was using an R4 and was getting MUCH faster read/write times with the SLOWER drive = an issue. At one point they were both in the system together. I also swapped them over from M.2_1 > M.2_2 and back to make sure it wasn't the slots causing the issue. The R4+ was also tested bare and empty immediately after install, and was found to be very slow from the outset. The OS was then cloned from the R4 to the R4+ so both had exactly the same files on them, about 40% full, and the R4 performed flawlessly and as expected. The R4+ was slow under all five of the speed-testing apps I used, where the R4 was fine. Sabrent 'support' over the issue was atrocious. So bad that I would never buy another Sabrent product again, even though I was happy with the R4. The R4+ has gone back, and has now been replaced with a WD SN850, which is all I expected from such a drive and performs as expected and advertised in several benchmark suites, including CrystalDiskMark 6/7/8.

Why would changing M.2 slots 'surely' affect temps? I agree that 60°C is not a problem, and I didn't say it was. I was making a comment/observation in relation to the review, where temps are commented on. Why should a slot change cause a 10°C temp difference? My CPU has an AIO attached, so the M.2 slot next to it is not getting circulated airflow to cool that immediate area.
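For anyone wanting a quick cross-check that doesn't depend on a particular benchmark app, a timed sequential write is easy to sketch. This is a minimal illustration, not a replacement for CrystalDiskMark or similar tools: the function name `sequential_write_mb_s` and the default sizes are assumptions chosen for the example, and a small test like this mostly exercises the drive's cache rather than its sustained speed.

```python
import os
import tempfile
import time


def sequential_write_mb_s(directory: str, total_mb: int = 256, chunk_mb: int = 4) -> float:
    """Write roughly `total_mb` MB of random data to a temp file in `directory`
    and return the observed throughput in MB/s (hypothetical helper)."""
    chunk = os.urandom(chunk_mb * 1_000_000)  # random data avoids drive-side compression tricks
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        start = time.perf_counter()
        with os.fdopen(fd, "wb") as f:
            written = 0
            while written < total_mb:
                f.write(chunk)
                written += chunk_mb
            f.flush()
            os.fsync(f.fileno())  # ask the OS to push the data to the device before stopping the clock
        elapsed = max(time.perf_counter() - start, 1e-9)
    finally:
        os.remove(path)  # clean up the temp file even if the write fails
    return written / elapsed


if __name__ == "__main__":
    # Point `directory` at a folder on the drive under test.
    print(f"{sequential_write_mb_s(tempfile.gettempdir()):.0f} MB/s")
```

Running it against a folder on each drive (and in each slot) gives a like-for-like number; if one drive is consistently slower here as well as in the benchmark suites, the problem is unlikely to be the benchmark.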

daveteauk

Posts: 35 +6

I've just replaced my appallingly performing Sabrent Rocket 4 Plus with a 1TB WD SN850, and can report it IS blindingly fast and performs as advertised, getting c. 6,850 MB/s reads and c. 5,200 MB/s writes with the drive 40% full with the OS and other files. The 2TB version gives much faster writes, in the order of c. 6,500 MB/s, but is much more expensive, i.e. not just twice the price for twice the capacity.

Seconding the vote for comparison to WD Black SN850 (1TB, especially): To the best of my understanding -- short of the 5x more-expensive Optane, of course -- WD's latest gem is the strongest-performing consumer/prosumer SSD as of today in early Jan 2021 (i.e., best random read/write, IOPS and access times...sequential read/write being more of a marketing canard than anything else).
