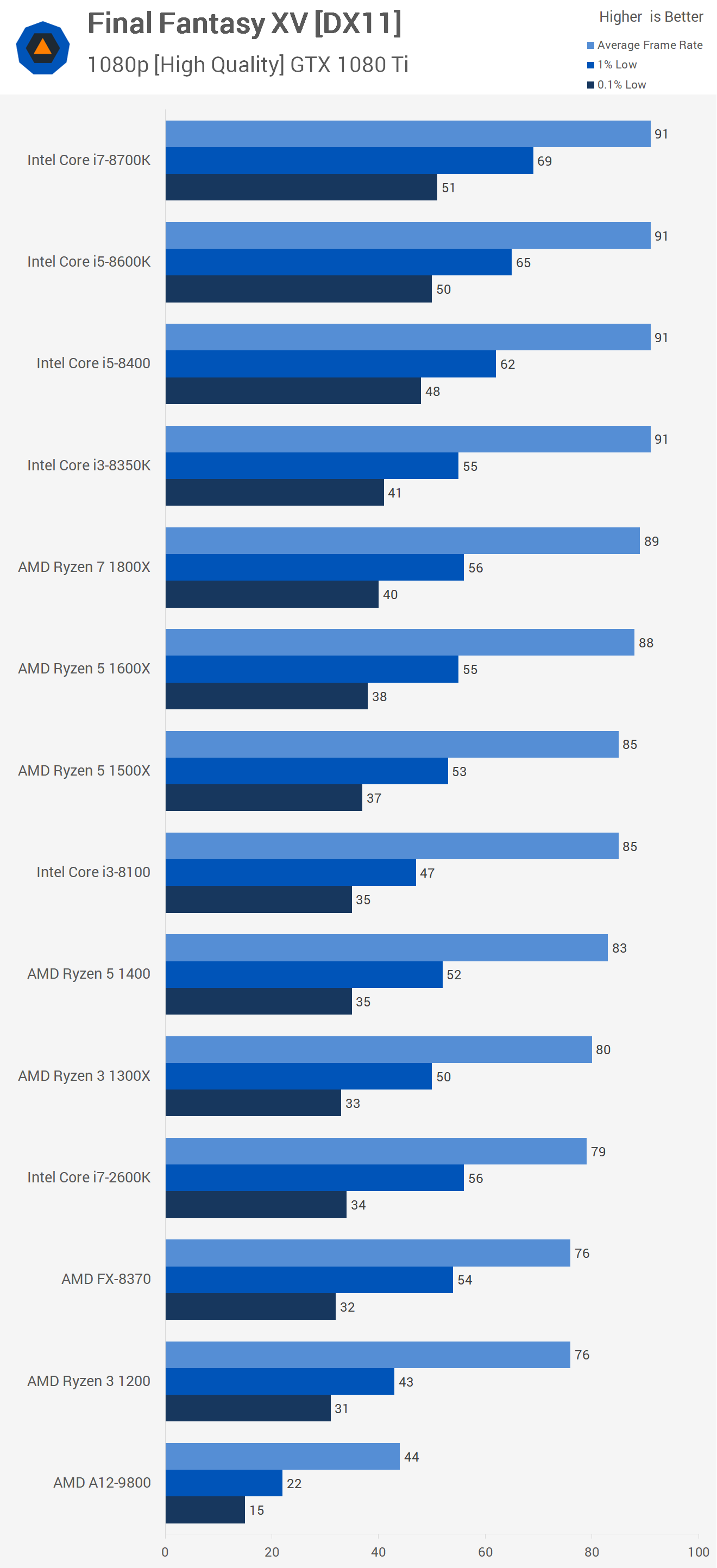

This isn't what's being experienced out in the wild, despite BIOS updates to deal with some early quirks. Most people's chips aren't hitting the advertised boost speeds, and there is little overclocking headroom. That isn't to say they're bad chips, though: they are unsurpassed in productivity and still perfectly good gaming CPUs, even if Intel wins in that department.

It won't stop me from buying the 3950X when it arrives in September. I'm upgrading from a 2700 (non-X) overclocked to 4.25 GHz at 1.425 V.

By "out in the wild" I assume you are referring to forum posts and Reddit. It would make sense for most of the people posting there to be asking how to get max boost clocks; after all, they came there for help. On the flip side, people who are getting max boost clocks aren't posting for help, because they don't need it in the first place. You cannot judge the current status of a product from support threads alone. If you did, nearly every product ever launched would be a faulty piece of junk, when in fact only a small fraction of owners of most products ever post a help thread. It should also be noted that as sales increase, the number of RMA cases will increase proportionately. A better-selling product is bound to have more people who need help.

I've seen a few posts about not reaching max boost clocks, but nothing I would call widespread. Given the sales numbers from the few retailers that actually release them, I'd say this is well within what you'd expect.

Gamers Nexus

TechSpot

TechPowerUp

Tom's Hardware

Der8auer, the most famous CPU overclocker right now, who always covers issues like X299 VRM cooling, made a video about it

AnandTech

etc., etc., etc.

"Not a wide spread issue"

The denial is strong with this one.

No, the latest AGESA updates haven't fixed a thing.

Not to mention that the advertised boost is fraudulent: with Ryzen 3xxx and its poor 7 nm node, AMD bins the chiplets so that each CPU has only one "strong" core able to reach the boost clock, unlike Intel, where any core can reach the boost.

But yeah, 4.6 and 4.7 GHz look better on paper than those piss-poor 4.1-4.2 GHz boosts.

Let's imagine the public outrage if Intel had done this.

Video from Der8auer about the issue