Why it matters: Hypertext Transfer Protocol (HTTP) is the system that web browsers use to talk to servers, and it’s built on top of Transmission Control Protocol (TCP). TCP has many features that make it attractive for HTTP, but it also carries a lot of machinery that HTTP doesn’t need. Swapping it for the simpler User Datagram Protocol (UDP) and then adding back what HTTP needs could make transmission smoother and faster.

HTTP/1.0, HTTP/1.1 and HTTP/2 have all used TCP because it’s been the most efficient way to add reliability, ordering and error-checking on top of Internet Protocol (IP). Here, reliability means the receiver can tell whether any data was lost in transit, ordering means the data is delivered in the order it was sent, and error-checking means the receiver can detect corruption that occurred during transmission.
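To make those three properties concrete, here is a minimal Python sketch of what a receiver has to do when packets arrive over a channel that guarantees none of them. It is an illustration only, not how TCP or QUIC is actually implemented: the `Packet` class, the `make_packet` and `receive` helpers, and the use of CRC32 as the checksum are all assumptions made for this example.

```python
import zlib
from dataclasses import dataclass


@dataclass
class Packet:
    seq: int        # sequence number: lets the receiver reorder and spot gaps
    payload: bytes
    checksum: int   # CRC32 of the payload: lets the receiver spot corruption


def make_packet(seq: int, payload: bytes) -> Packet:
    """Hypothetical sender-side helper: stamp a payload with seq and checksum."""
    return Packet(seq, payload, zlib.crc32(payload))


def receive(packets, expected_count):
    """Return (payloads in sent order, missing seqs, corrupt seqs)."""
    good = {}
    corrupt = []
    for p in packets:
        if zlib.crc32(p.payload) != p.checksum:
            corrupt.append(p.seq)          # error-checking
        else:
            good[p.seq] = p.payload
    # Reliability: any sequence number we never saw was lost in transit.
    missing = [s for s in range(expected_count)
               if s not in good and s not in corrupt]
    # Ordering: hand data to the application in the order it was sent.
    ordered = [good[s] for s in sorted(good)]
    return ordered, missing, sorted(corrupt)


# Four packets are sent; they arrive out of order, one damaged, one lost.
sent = [make_packet(i, chunk)
        for i, chunk in enumerate([b"GET ", b"/index", b".html", b" HTTP"])]
damaged = Packet(1, b"/indxx", sent[1].checksum)
arrived = [sent[2], sent[0], damaged]

ordered, missing, corrupt = receive(arrived, expected_count=4)
print(ordered, missing, corrupt)
```

A real protocol would go on to request retransmission of the missing and corrupt packets. The point here is only that sequence numbers and checksums are what a transport has to layer on top of plain IP or UDP delivery.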

As Ars Technica notes, UDP is substantially simpler than TCP but provides neither reliability nor ordering. TCP isn’t perfect either: as a one-size-fits-all solution for data transfer, it includes things HTTP doesn’t need. Google set out to remedy this by developing QUIC (originally short for Quick UDP Internet Connections), a transport for HTTP that keeps the simplicity of UDP but adds the few things HTTP needs, such as reliability and ordering.

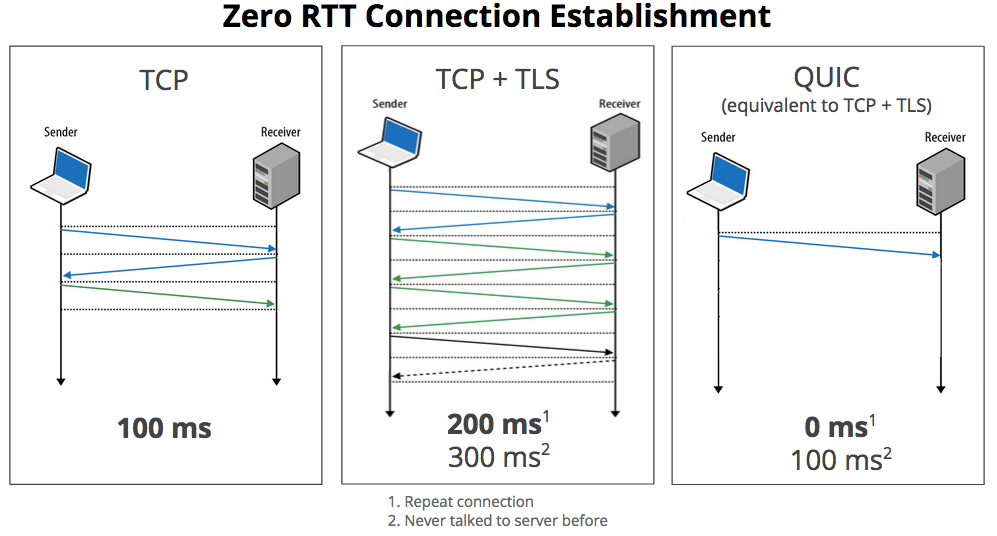

This should, in theory, improve both stability and speed. For example, when setting up a secure connection between client and server, TCP must first complete its own handshake, and only then can the Transport Layer Security (TLS) protocol make its round trips to establish an encrypted connection. QUIC combines the two handshakes, reducing the total number of round trips before data can flow.
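The saving is easy to estimate with back-of-the-envelope arithmetic. The Python sketch below assumes commonly cited handshake costs for a brand-new connection (one round trip for TCP, two for TLS 1.2, one for TLS 1.3, and one for QUIC’s combined handshake) and an illustrative 50 ms round-trip time. The table and the `setup_delay_ms` helper are hypothetical names for this example, and real-world figures vary with session resumption and network conditions.

```python
# Assumed round trips needed before application data can be sent
# on a brand-new connection (no session resumption).
HANDSHAKE_ROUND_TRIPS = {
    "tcp": 1,      # TCP three-way handshake
    "tls1.2": 2,   # full TLS 1.2 handshake
    "tls1.3": 1,   # full TLS 1.3 handshake
    "quic": 1,     # QUIC transport and crypto handshake combined
}


def setup_delay_ms(layers, rtt_ms):
    """Total connection setup delay for a stack of handshakes run in sequence."""
    return sum(HANDSHAKE_ROUND_TRIPS[layer] for layer in layers) * rtt_ms


RTT_MS = 50  # illustrative round-trip time, not a measurement

for name, layers in [
    ("TCP + TLS 1.2", ["tcp", "tls1.2"]),
    ("TCP + TLS 1.3", ["tcp", "tls1.3"]),
    ("QUIC", ["quic"]),
]:
    print(f"{name}: {setup_delay_ms(layers, RTT_MS)} ms")
```

Under these assumptions, the longer the round-trip time between client and server, the more QUIC’s combined handshake saves on every new connection.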

The Internet Engineering Task Force (IETF), the body that develops internet standards, has recently agreed to rename HTTP-over-QUIC to HTTP/3. It is still working on a standardized version of the protocol, which is already supported by Google and Facebook servers.

https://www.techspot.com/news/77482-internet-evolving-http-no-longer-use-tcp.html