Rumor mill: Apple might have been slow to jump onto the generative AI bandwagon, but the company is starting to go all-in on artificial intelligence. According to a new rumor, nowhere will that be more apparent than in the iPhone 16, which is said to come with a massively upgraded Neural Engine for on-device AI tasks.

Apple's next generation of iPhone, iPad, and MacBook chips, the A18 and M4, will have more cores in their improved Neural Engines, according to Taiwanese publication Economic Daily News.

Apple first introduced its dual-core Neural Engine in the A11 Bionic SoC found in the iPhone 8/8 Plus and iPhone X, which were released in 2017. The company bumped the Neural Engine's core count to 8 in the A12 Bionic that launched in the iPhone XS series in 2018 and kept it there for the A13 in the 2019 iPhone 11 series. It then doubled the count to 16 in the A14, which debuted in the iPhone 12 series in 2020 and later powered the 10th-gen iPad.

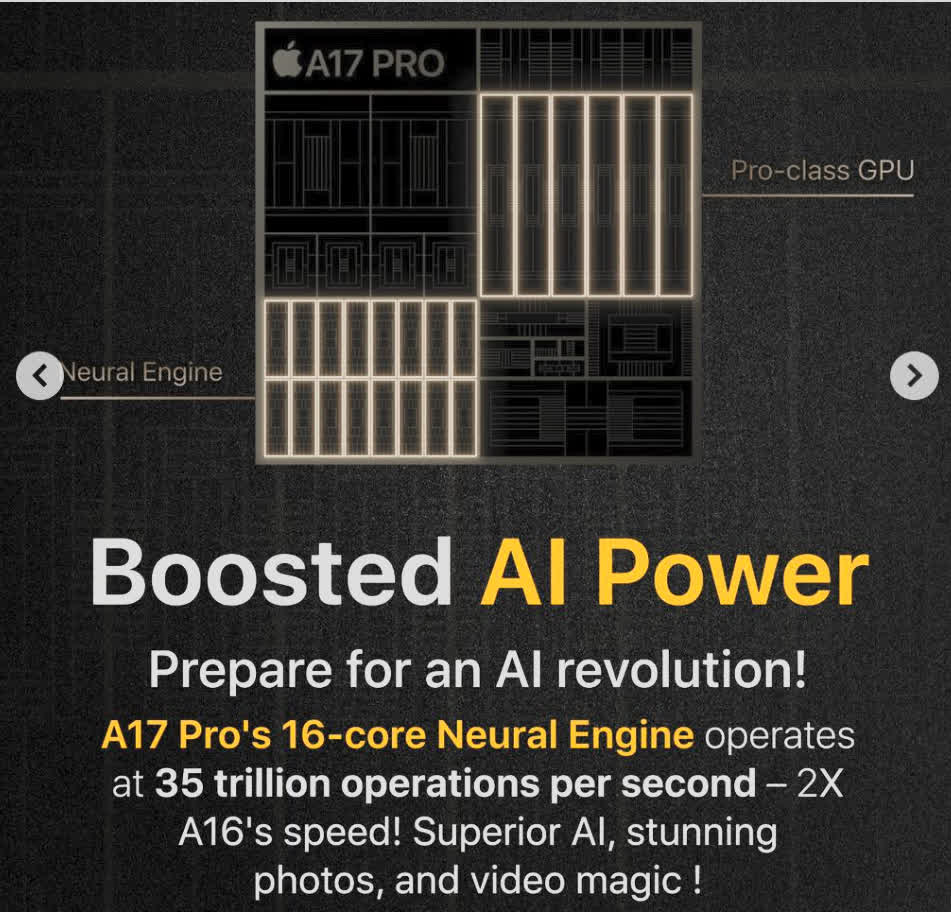

Apple has stuck with 16 cores in its iPhones' Neural Engines since 2020, though the component's performance has still improved with each generation – Cupertino says the Neural Engine in the iPhone 15 Pro's A17 Pro chip is up to twice as fast as the one in the iPhone 14 Pro. It sounds as if the A18 could double the core count to 32, which would match Mac Studio and Mac Pro models configured with an M1 Ultra or M2 Ultra SoC.

The latest rumor follows reports that Apple's future products will likely run generative AI models using built-in hardware instead of cloud services.

There are plenty of advantages to using on-device silicon for generative AI tasks rather than relying on remote servers and cloud platforms. Google made a big deal about the AI processing abilities of its Tensor G3 chip, which it claims pushes the boundaries of on-device machine learning, bringing the latest in Google AI research directly to the phone. That claim came under scrutiny when YouTube channel Mrwhosetheboss found that most of the Pixel 8 Pro's new generative AI features are processed in the cloud, meaning they require an internet connection.

Earlier this month, Apple CEO Tim Cook confirmed that the company would announce new generative AI features for its products later this year.

https://www.techspot.com/news/101910-iphone-16-could-feature-major-neural-engine-upgrade.html