I don't really get the hate for SLI or why it's being killed off.

I had an Asus Strix Nvidia 970 SLI setup from 2014-2018 and I had nothing but a great experience with it.

I was able to play stuff like The Witcher 3 at max settings at 4K (albeit at a locked 30fps), Project Cars at 4K 60fps, the new Tomb Raider at the same, and many others.

Can't say I ever really noticed the problems with it that so many others complained about. I suspect many of them had never actually tried it and were just jumping on the bandwagon of internet hate for the sake of it, TBH.

I agree with you. The main downfalls of multi-GPU are several points which nobody mentions.

The lack of support by game developers, and often even in the pro segment of software.

But the absolute worst is the electricity prices, which are pumped up to extreme levels in my country, even though the actual energy price is as low as in any other country.

But the taxes on using it are insane: the real price is probably 0,2 euro cent, but after taxes and so-called transport costs it is over 34 euro cents per kWh.

So it's pretty costly. Before anyone says it's more expensive somewhere else: that probably is so.
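To put a rough number on it, here is a minimal sketch of the arithmetic. Only the 0.34 euro per kWh comes from my bill; the function name `yearly_cost_eur`, the 150 W of extra draw for a second card, and the 3 hours of gaming per day are made-up assumptions, so plug in your own numbers.

```python
def yearly_cost_eur(extra_watts, hours_per_day, price_eur_per_kwh=0.34):
    """Yearly cost in euros of drawing extra_watts for hours_per_day, every day."""
    # Watts -> kilowatts, times hours per day, times days per year = kWh per year.
    kwh_per_year = extra_watts / 1000 * hours_per_day * 365
    return kwh_per_year * price_eur_per_kwh


# Hypothetical example: a second card adding 150 W, used 3 hours a day.
print(f"{yearly_cost_eur(150, 3):.2f} euro per year")
```

The cost scales linearly with wattage and hours, so a rig with several cards running around the clock gets expensive quickly at these prices.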

But there is no need for these insanely high prices here: most power comes from a large nuclear power plant, and half our country is filled with solar panels and windmills, plus loads of organic power production (mostly farmers). There are even plans to use the power of the North Sea to produce even more.

So power was the major concern with multi-GPU setups. The people blabbing here that they are used less at companies are partially correct, because yes, companies will lower costs if they can, especially with the insanely high prices here (even though large companies pay a lot less).

Anyway, I did like the multi-GPU setups, including the ones I installed at work, which are monster number crunchers with up to 8 graphics cards per system. These do complex calculations and are really useless for home users. Nevertheless there are plenty of them, and they can't be replaced by a single card, nor will that ever happen, unless the new CPU technology is really as powerful as it was on paper. But currently those are only toys for the super global companies like IBM and the like.

On the other hand, current GPUs are performing at insane levels for gamers, and there is no real need anymore for newer and faster ones.

That's also the funny thing: people bought very expensive video cards with new toys which are hardly used at all. They pay a fortune for a card which does not show anything at all in my games. And to be honest, not one new game made me think "ooh, I want that game".

That raytracing is so overhyped and does little to nothing for me, so whatever people say about it, I do not care. For my games it's all useless, and now, more than 2 years later, hardly any new games support or use it, and worse, it's hardly noticeable.

People care so much about shadows .... I turn them off or put them on the lowest setting I can.

I really do not like super dark games at all, so Doom 3 was pretty quickly moved to the bin.

When I visited a friend, he was bragging about his water-cooled 2080 Ti that cost 2100 euro, which he is now crying about since the 3080 came out.

Anyway, when I asked him to show me why he was so excited, he could only show some minor reflections on a small puddle of water in a game, and I thought: is that what you make such a huge fuss about and paid so much money for.....

I will not say what else I thought, but it ain't nice for him.

Now people are hyped again about these new cards, and as I speak they have even placed orders already for the newest hyped 30x0 series.

Nvidia will get its profit margins in full again this year, because I am pretty sure newer models will follow these pretty soon as well. I bet the next lineup is already in the pipeline to get more money out of your pockets, and for what ... there are no games at all which really make use of this new stuff, and really, for me it does nothing.

So I keep playing my old favorites and the like and let it pass. There is no need for me to buy a new PC, nor will I buy anything that's hyped and does not actually add anything useful or show real improvements; for now I have seen none.

Maybe in 5 to 10 years from now we can buy games which actually make use of them; time will tell. But by then Nvidia will probably have cooked up another 5 to 10 new toys so you open up your wallet again for something new.

True, some new developments are fantastic, but besides a minor example or two, nothing has really been developed in the gaming world with this new tech, and I am pretty sure it will take a long time before we actually see any games having this.

For example, I see a lot of people talking about games and see YouTube videos of them playing their game in 4K res, but on my 1440p monitor I see the exact same as they do. There is absolutely no difference in the game, nor does it look better.

If I buy a 4K monitor and play a game, I want to see some improvements and do not want to see the same graphics as when I run it on a 1080p monitor.

I was bored and saw the release of Horizon Zero Dawn for PC and I bought the darn game. The bad thing is that it looks a lot better on the freaking PS4; hell, even the whole story was cut on the PC, and the graphics are really only 1080p. Yes, you can run it at a higher res, but you still get 1080p material, nothing more. I really was hoping for better graphics, but in most games there is really no difference in the graphics at all if you turn up the graphics settings.

It still looks the same in whatever graphics setting I set it to: no clearer visuals or improvements.

Grass still looks like a green blur. Yes, it moves, but it is still just a green blur.

So he was stunned to see that my screen showed the exact same as he had on his high-res PC.

There is totally no difference, so we tried many more games and found only a few that actually show a major improvement in graphics. That made me decide that I do not have to change anything at all for years to come. I won't miss much with my current hardware at all.

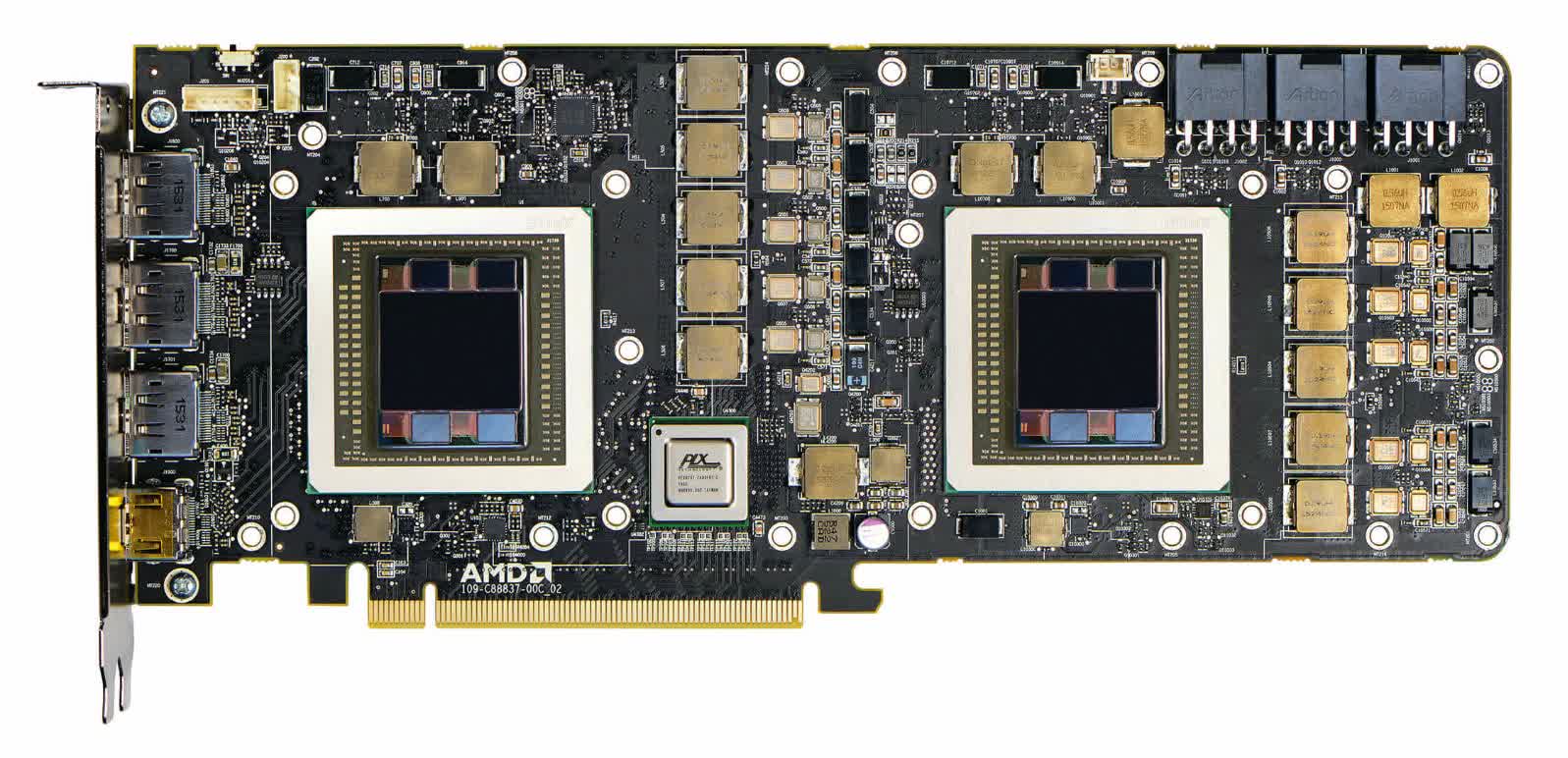

And, end of story: when I really need more graphics power, I'll just scoop up a cheap 5700 XT from the second-hand market and run 2 of them.