Following our series of CPU & GPU scaling benchmarks, it's time for a comparison between Intel's new Core i5-13400 and the tried and true AMD Ryzen 7 5700X. As of writing the Ryzen processor is a tad cheaper at around $190, whereas the i5-13400 is currently fetching $240, which is the MSRP for that part.

As the most affordable 13th-gen Core i5 part, the 13400 has raised the attention of many, and while it looks quite good on paper, it might also look very familiar to some of you. With 6 P-cores, 4 E-cores and a 20 MB L3 cache you might have mistaken it for the Core i5-12600K, because that's basically what it is.

When compared to the 12600K, the 13400's P-cores are clocked just 300 MHz lower at 4.6 GHz, a mere 6% reduction, while the E-cores have been clocked down from 3.6 GHz to 3.3 GHz, a slightly larger 8% reduction. Other than that they're basically the same CPU with a notable change to the integrated graphics.
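For anyone who wants to check those percentages, here is a minimal Python sketch of the arithmetic. It assumes the 12600K's P-core clock is 4.9 GHz, which is implied by the 300 MHz gap rather than stated outright, and the function name is purely illustrative.

```python
def percent_reduction(old_ghz: float, new_ghz: float) -> float:
    """Return how much lower new_ghz is than old_ghz, as a percentage."""
    return (old_ghz - new_ghz) / old_ghz * 100


# P-cores: 12600K at 4.9 GHz (assumed: 4.6 GHz + 300 MHz) vs 13400 at 4.6 GHz
p_core_cut = percent_reduction(4.9, 4.6)

# E-cores: 3.6 GHz on the 12600K vs 3.3 GHz on the 13400
e_core_cut = percent_reduction(3.6, 3.3)

print(f"P-cores: {p_core_cut:.1f}% lower")
print(f"E-cores: {e_core_cut:.1f}% lower")
```

Rounded to whole numbers, these line up with the 6% and 8% figures quoted above.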

The i5-12600K used the UHD 770, while the 13400 uses the UHD 730. This sounds trivial but the 770 packs 33% more execution units. At the end of the day you won't be gaming with either solution, so at least in that sense it is a trivial difference.

When compared to the 12400, this newer 13th-gen version clocks 5% higher for the P-cores while the 12400 didn't offer any E-cores at all. The 12400 was also limited to an 18 MB L3 cache and used the UHD 730 integrated graphics. So when compared to the 12400, the i5-13400 is a big upgrade for productivity performance, but at today's prices it also costs 30% more.

As usual, for this review we'll be focusing on gaming performance, so we thought it would be interesting to not just compare the i5-13400 against the Ryzen 5700X, but also the previous generation value champ, the Core i5-12400, along with the Ryzen 5 5600. So we have four CPUs in total, all of which can be purchased today for between $130 and $240.

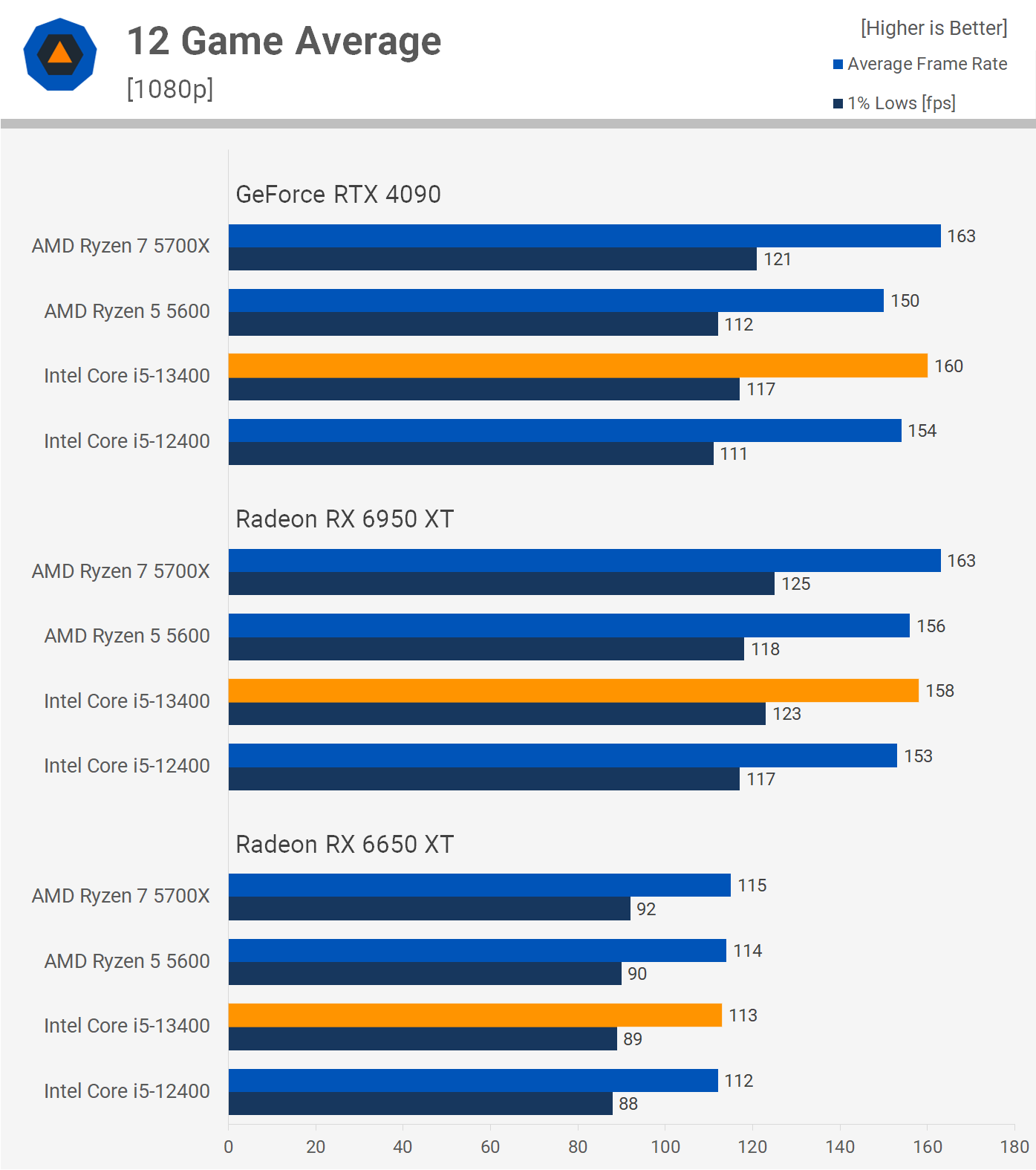

Now, given this is a GPU scaling benchmark we're obviously focusing on gaming performance, and in total we have a dozen games, all of which have been tested at 1080p using the Radeon RX 6650 XT, 6950 XT and RTX 4090.

The reason for testing at 1080p is simple: we want to minimize the GPU bottleneck as we're looking at CPU performance, but by including a number of different GPUs we still get to view the effects GPU bottlenecks have on CPU performance.

As for test systems, we're using DDR4 memory exclusively, as the older but much cheaper memory standard makes sense when talking about sub-$250 processors. There are certainly scenarios where you would opt for DDR5, but for simplicity's sake we've gone for an apples-to-apples comparison with DDR4.

We used G.Skill Ripjaws V 32GB DDR4-3600 CL16 memory for this test because at $115 it's a good quality kit that offers a nice balance of price to performance. The AM4 processors can run this kit at the 3600 spec using the standard CL16-19-19-36 timings. The locked Intel processors can't run above 3466 if you want to use the Gear 1 mode, and ideally you'd want to use Gear 1 as the memory has to run well above 4000 before Gear 2 can match Gear 1.

The reason for this limitation is that the System Agent or SA voltage is locked on non-K SKU processors, and this limits the memory controller's ability to support high frequency memory using the Gear 1 mode. That being the case, we've tested the 12400 and 13400 using the Ripjaws memory adjusted to 3466, as this is the optimal configuration for these processors short of manually tuning the sub-timings, which we're not doing for this review.

Benchmarks

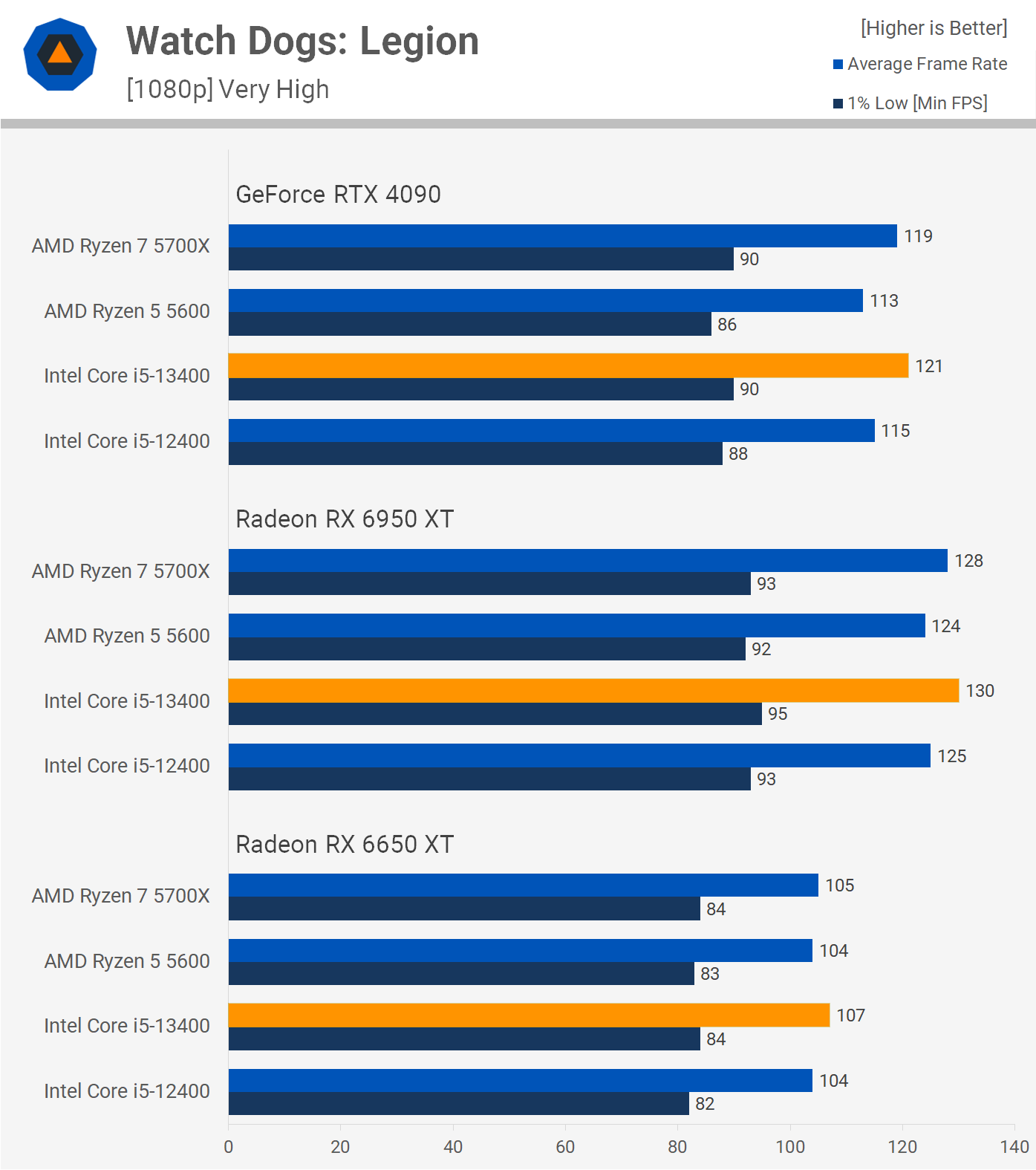

Starting with Watch Dogs: Legion, we find very competitive results across the board, regardless of the GPU used. It's crazy to see that we're actually not that GPU limited when using the 6650 XT. Sure, the 13400 was 21% faster using the 6950 XT than it was with the 6650 XT, but that's quite a small margin given the gap between those two GPUs. The GeForce driver overhead is also visible, with the RTX 4090 coming in slightly slower than the 6950 XT.

We saw the highest frame rates using the 6950 XT, but even so the 13400 and 5700X delivered basically identical performance, the Intel CPU was just 1.5% faster.

Even when compared to the much cheaper Ryzen 5 5600, the 13400 doesn't look that impressive, winning that match up by a mere 5% margin.

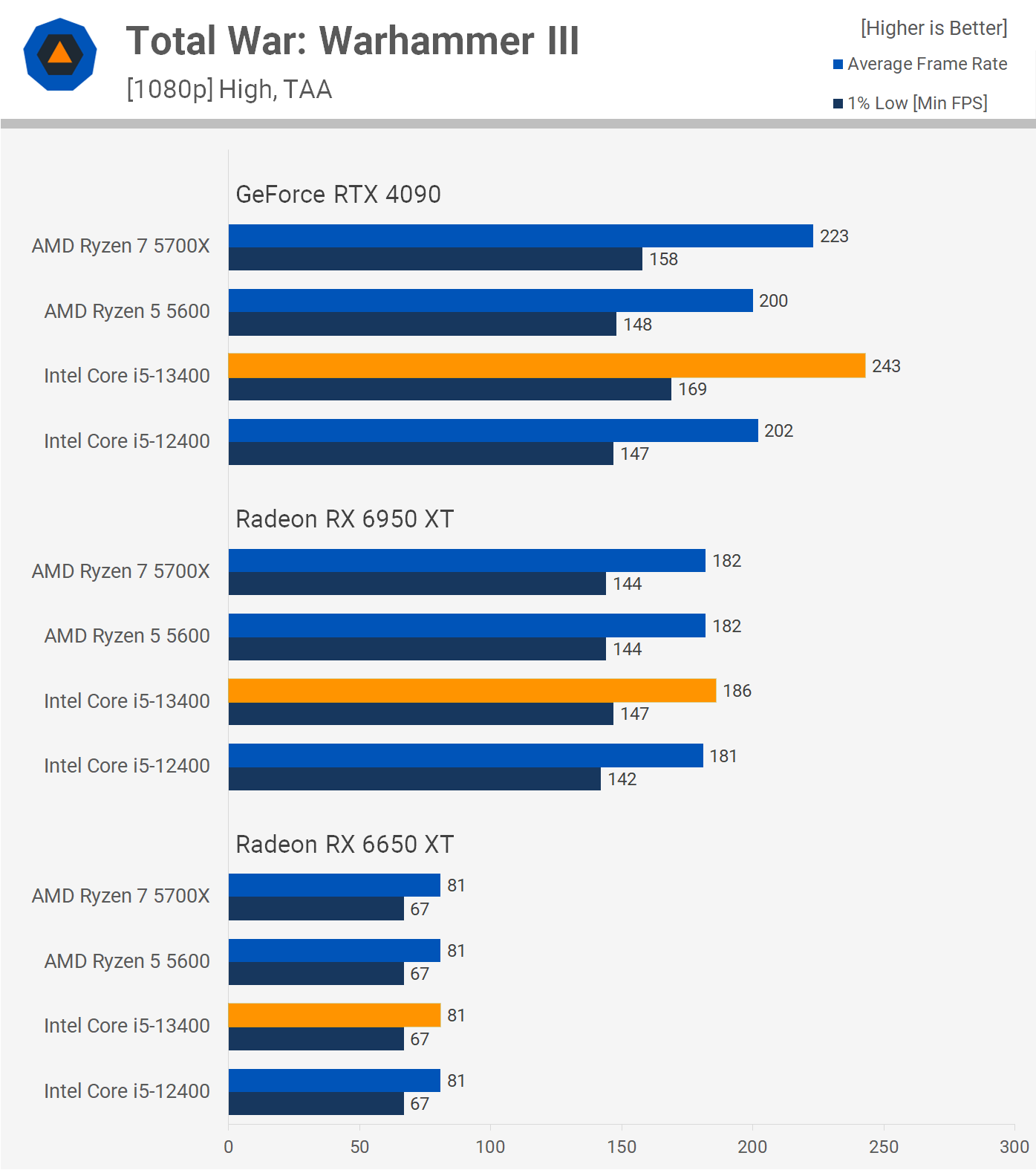

Moving on to Warhammer III, we see that all CPUs are limited by the GPU at exactly 81 fps when using the 6650 XT, and even with the much faster 6950 XT we're still heavily GPU limited, this time at around 180 fps. It's not until we use the RTX 4090 that we find the performance limits of these CPUs.

The Ryzen 5 5600 and Core i5-12400, for example, both capped out at around 200 fps, which is very respectable performance. The 5700X was 12% faster than the 5600, while the 13400 was 9% faster than the 5700X, so ultimately Intel does win this test.
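To make the bottleneck behavior a little more concrete, here is a rough Python sketch that treats the observed frame rate as the lower of a CPU cap and a GPU cap. This is a simplified mental model, not how the results were measured. The 81 fps and ~180 fps GPU caps and the ~200 fps figure for the 5600 come from the text above; the other CPU caps are derived from the quoted percentages, and treating the RTX 4090 as having no cap at 1080p is an assumption for illustration.

```python
import math


def observed_fps(cpu_cap: float, gpu_cap: float) -> float:
    """Simplified model: the slower of the two components sets the frame rate."""
    return min(cpu_cap, gpu_cap)


# Approximate CPU caps, chained from the percentages quoted above.
cpu_caps = {"5600": 200.0}
cpu_caps["5700X"] = cpu_caps["5600"] * 1.12   # 12% faster than the 5600
cpu_caps["13400"] = cpu_caps["5700X"] * 1.09  # 9% faster than the 5700X

# GPU caps: 81 and ~180 fps are from the text; the RTX 4090 is assumed
# to be effectively uncapped at 1080p in this title.
gpu_caps = {"6650 XT": 81.0, "6950 XT": 180.0, "RTX 4090": math.inf}

for gpu, gpu_cap in gpu_caps.items():
    row = {cpu: round(observed_fps(cap, gpu_cap)) for cpu, cap in cpu_caps.items()}
    print(gpu, row)
```

Under this model every CPU lands on the GPU cap with the two Radeon cards, and only the RTX 4090 row separates them. Chaining the two margins also shows the 13400 ending up roughly 22% ahead of the 5600 (1.12 × 1.09 ≈ 1.22).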

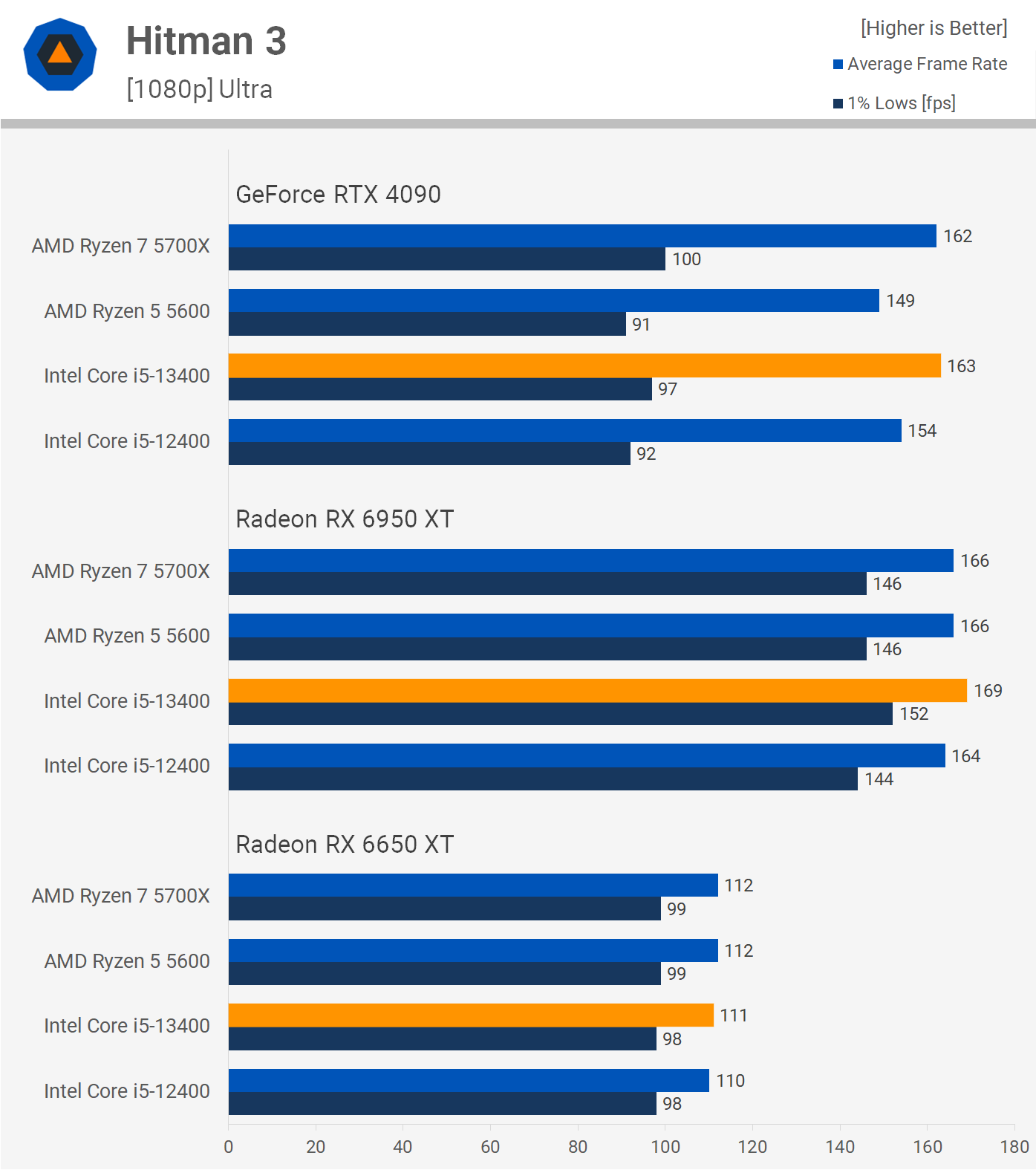

Next up we have Hitman 3, and no, the 6950 XT and 4090 results aren't mixed up. For some reason the RTX 40 series currently suffers from weak 1% lows in this title, presumably a driver related issue. In any case, the average frame rates of the 6950 XT and RTX 4090 are similar at 1080p due to performance being CPU limited.

The more limited and heavily utilized 12400 and 5600 performed quite a bit better with the Radeon GPU, again due to Nvidia's driver overhead, which sends performance backwards in high CPU utilization scenarios. For the most part though, there was little to no performance difference between the four CPUs.

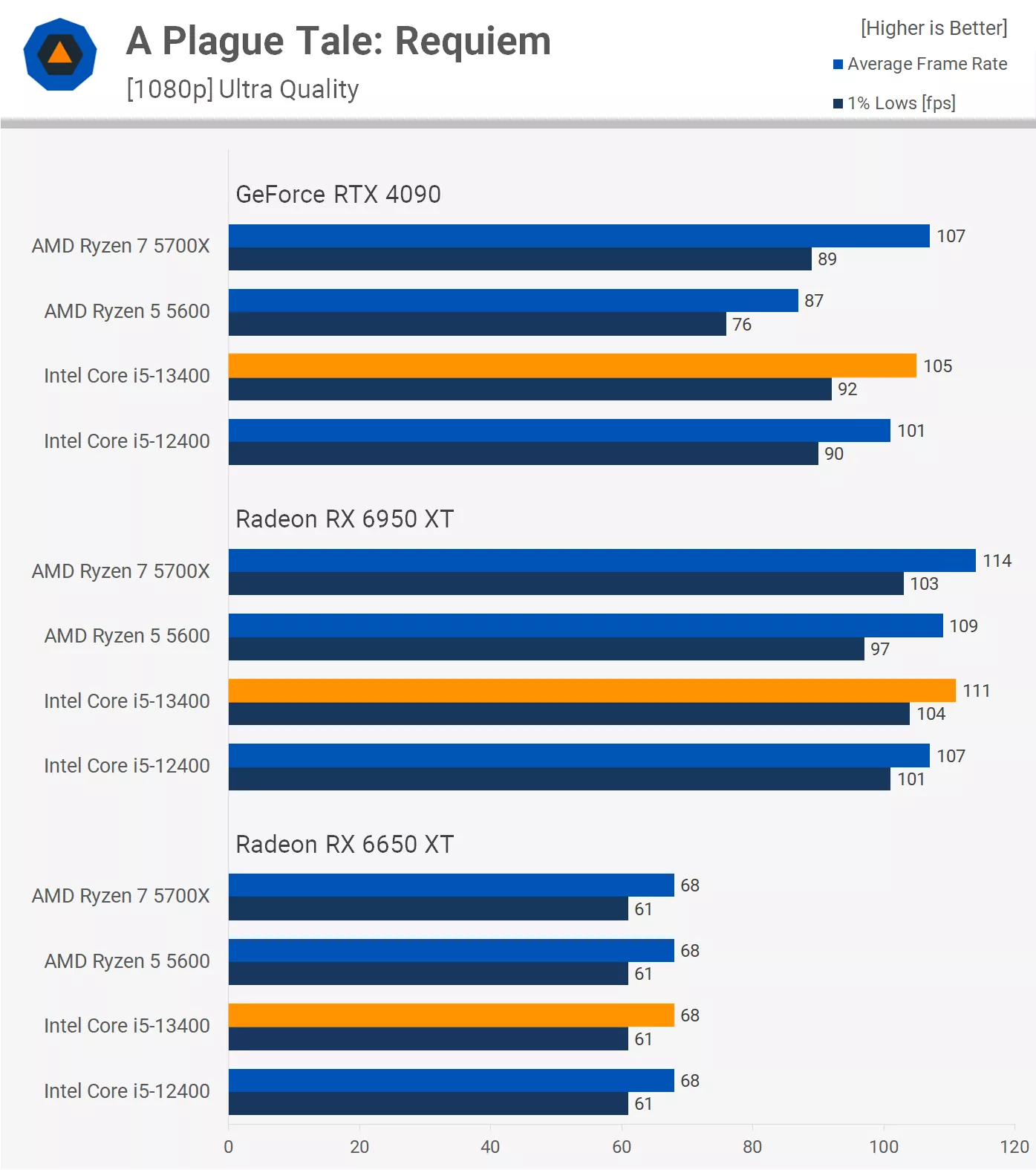

A Plague Tale: Requiem results are CPU limited when using the RTX 4090 and 6950 XT. The 6650 XT, on the other hand, heavily limited performance to 68 fps on the GPU side as all four CPUs could easily meet that performance target.

Now with the Radeon 6950 XT we see that the 5700X and 13400 delivered the same level of performance, while the 5600 and 12400 were also comparable.

However, things look a bit different with the RTX 4090, namely for the Ryzen 5600 which appears overwhelmed by the additional overhead, dropping well below the 5700X with just 87 fps on average. The 5700X and 13400 though did deliver comparable performance.

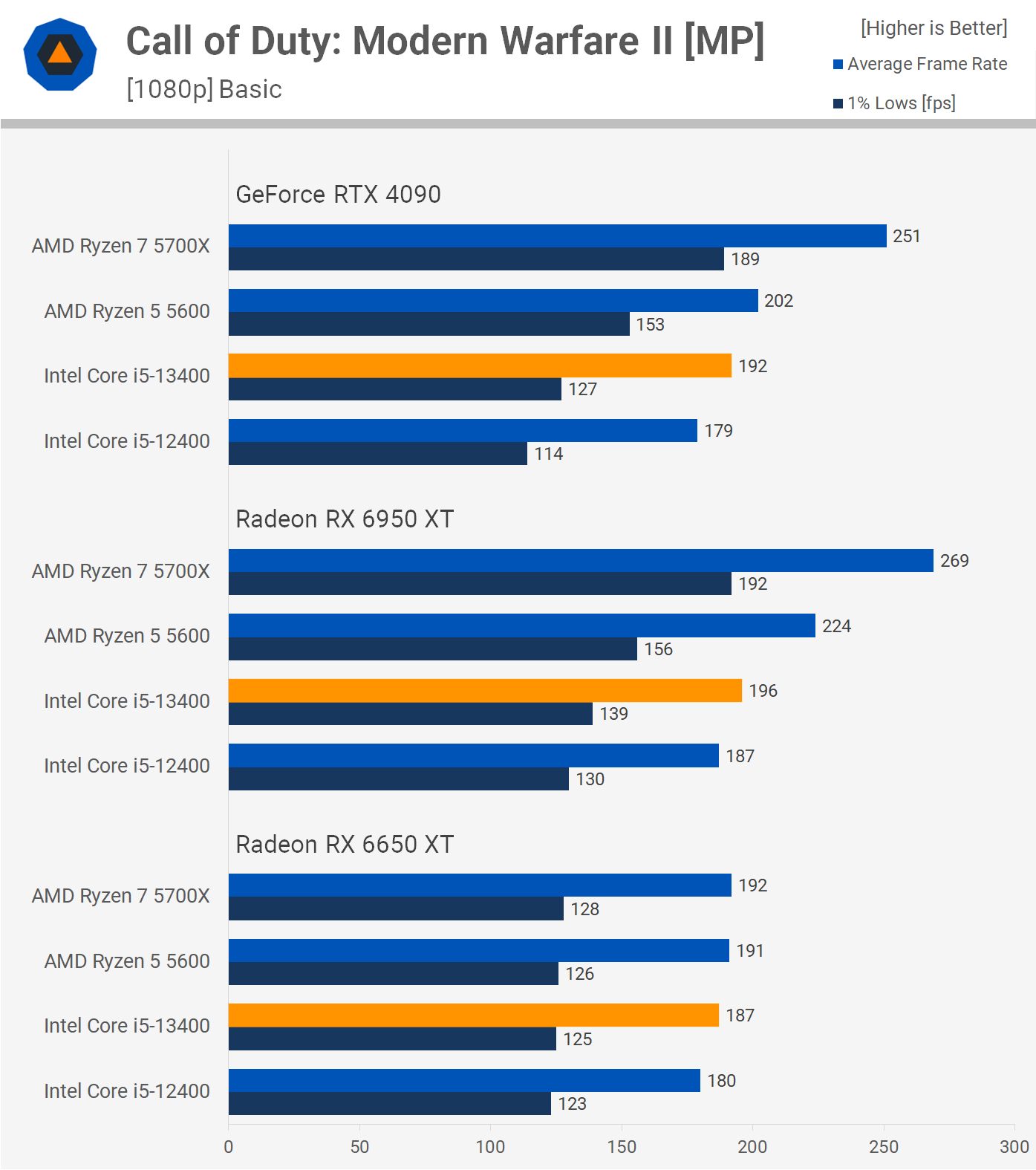

Next up we have Call of Duty: Modern Warfare II, using the basic quality preset as this is a competitive multiplayer shooter, so lower quality settings are typically used to not just boost frame rates, but also make it easier to spot enemy players.

With the 6650 XT we see that performance is largely GPU limited, with just a 7% performance difference between the fastest and slowest CPUs tested. The 5700X for example was just 3% faster than the 13400, so in other words performance was basically identical.

Moving to the 6950 XT largely removes the GPU bottleneck and now the 5700X is a massive 37% faster than the 13400, which is a surprisingly large margin. The 13400 was just 5% faster than the 12400, so a very mild improvement there, while the 5700X was 20% faster than the 5600. We're also seeing similar margins with the RTX 4090, though due to the overhead the CPU limited results are slightly slower with the GeForce GPU.

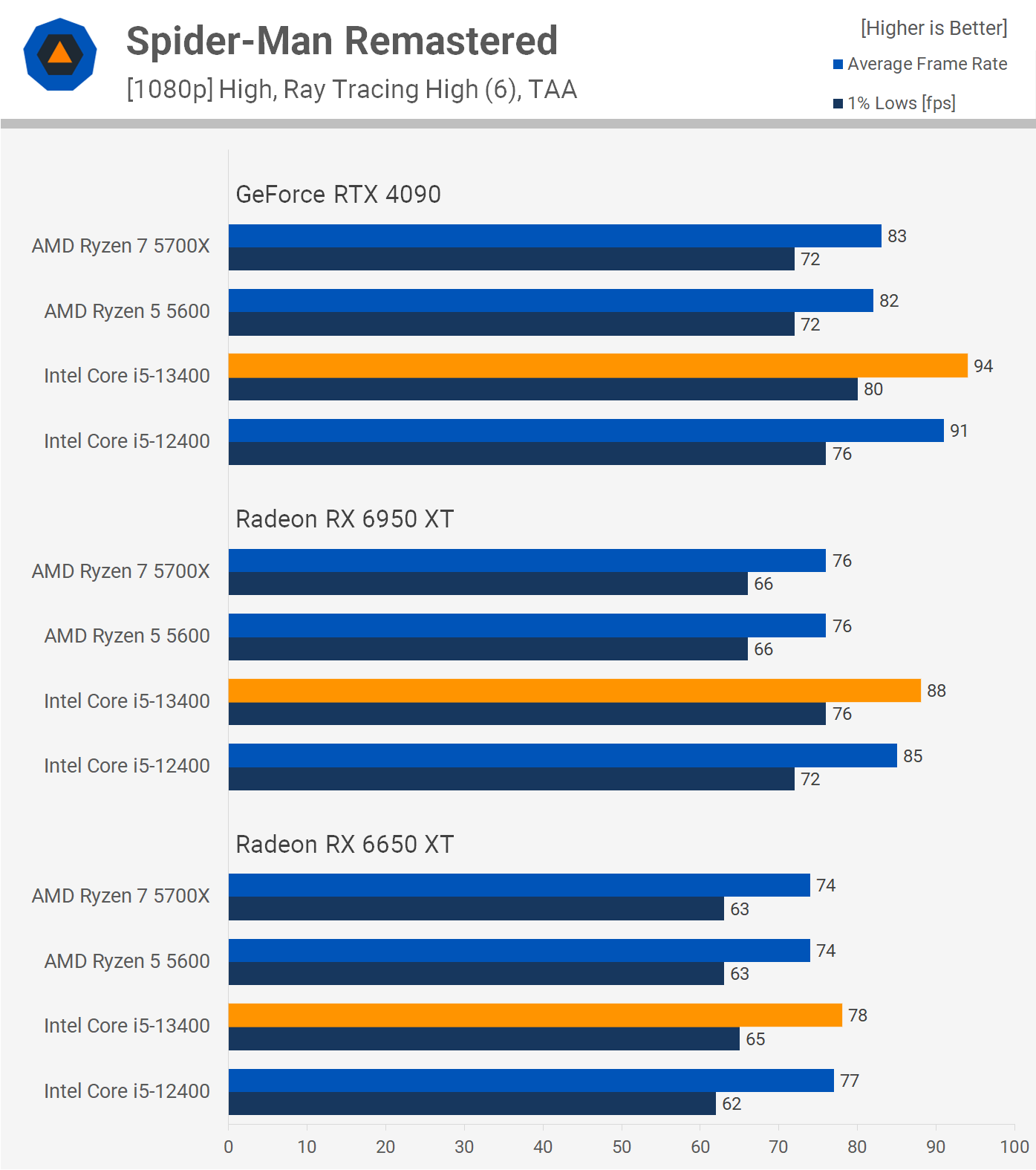

Spider-Man Remastered is a very CPU-limited game, especially with ray tracing enabled. That said, we do see some performance improvement from the 6650 XT to the 6950 XT, particularly when looking at the Core i5 processors. Interestingly the Ryzen processors did see a performance uplift when moving from the 6950 XT to the RTX 4090, around a 9% gain while the 13400 saw a 7% improvement.

We suspect memory performance is the key limiting factor for the Ryzen processors, with DRAM latency the likely reason the Core i5s perform better in this test. Using the RTX 4090 the 13400 was 13% faster than the 5700X.

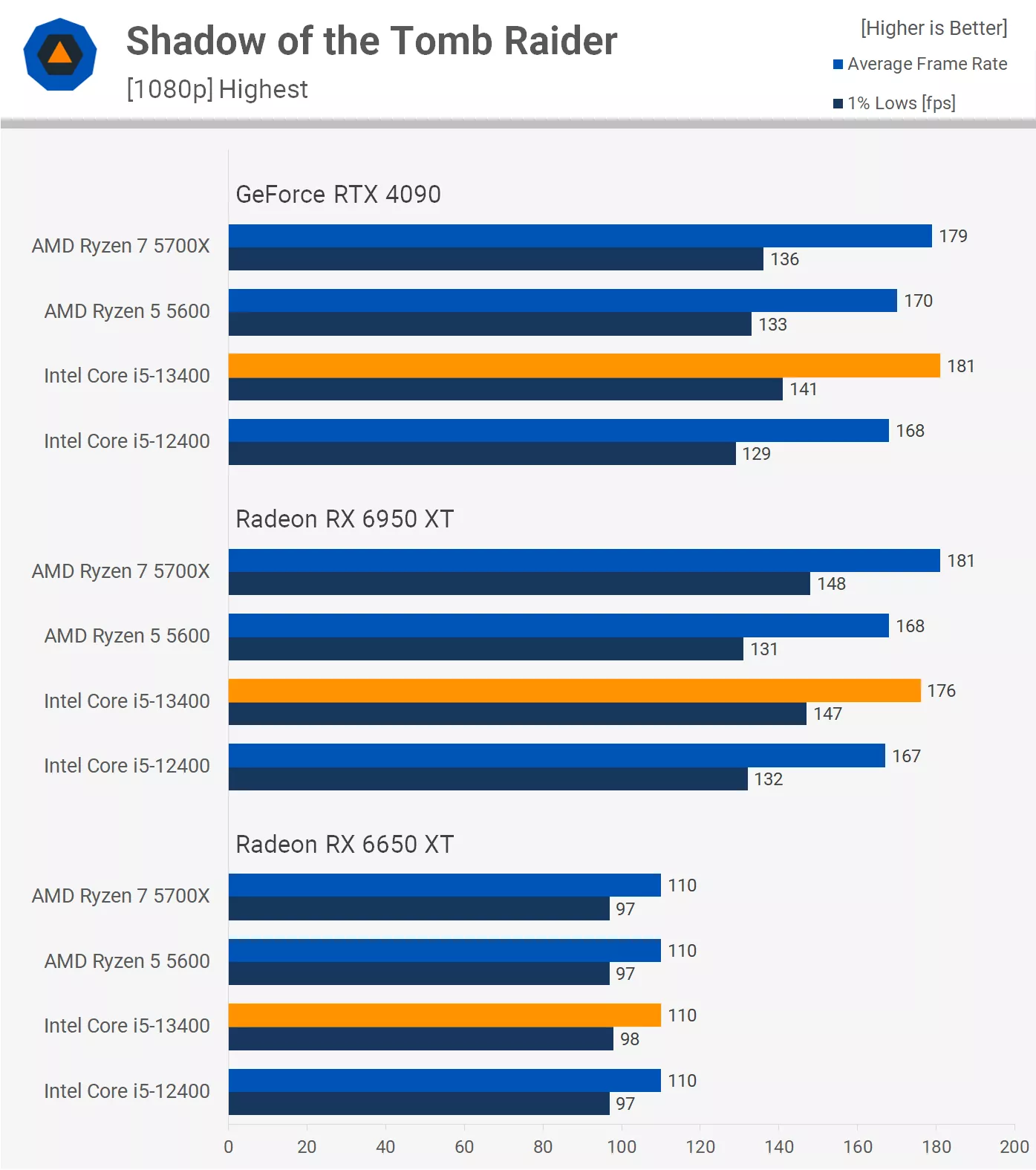

In Shadow of the Tomb Raider, the Radeon 6650 XT results are highly predictable as we're entirely GPU limited, so all CPUs are capped at 110 fps. This limit was removed with the 6950 XT and RTX 4090, and now the results are CPU limited.

Basically the 12400 and 5600 were evenly matched with the 13400 and 5700X also evenly matched, delivering around 8% more performance than the cheaper models.

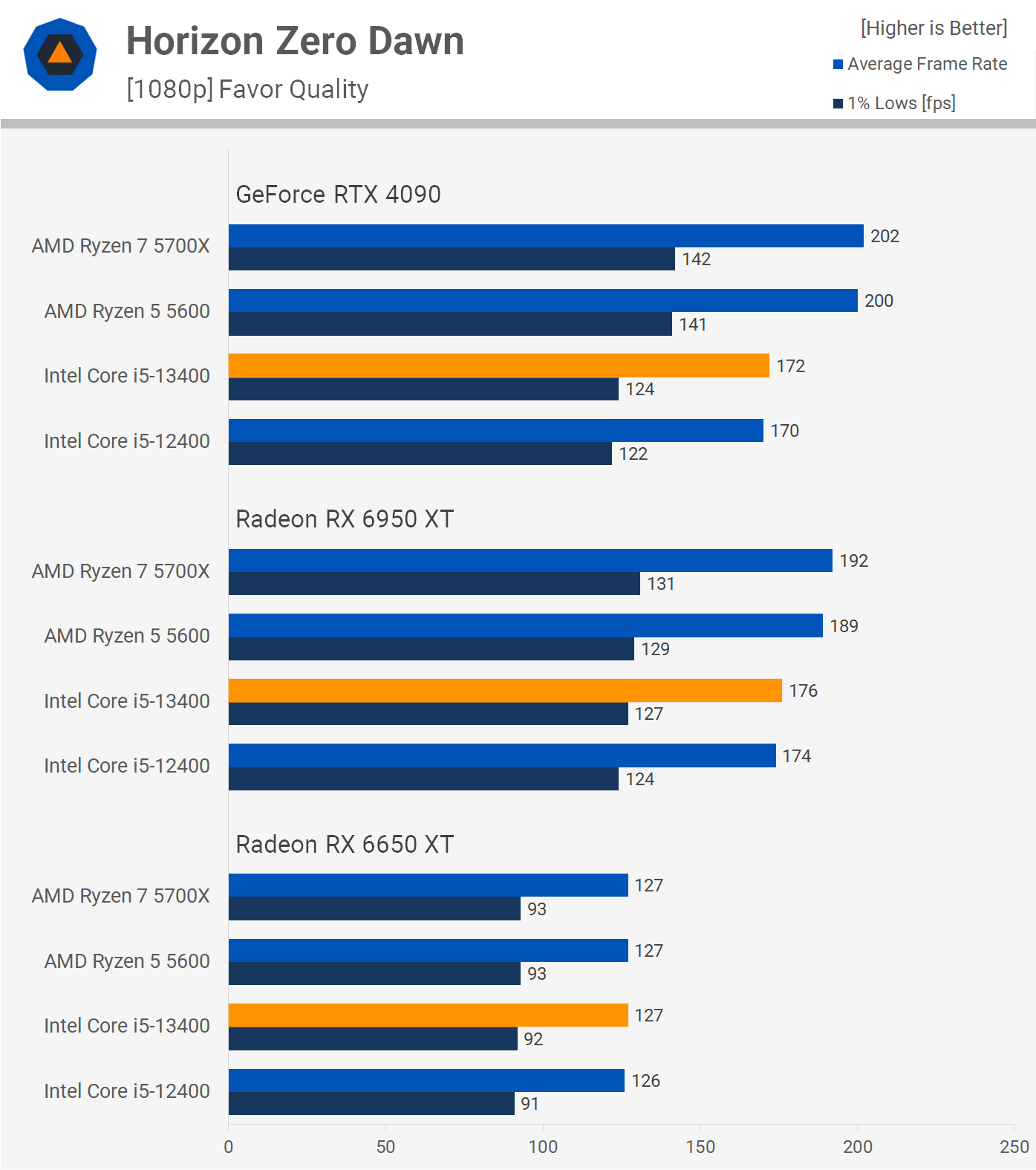

Next up we have Horizon Zero Dawn and this title always seems to heavily favor AMD processors as shown here. The Ryzen 5700X was 17% faster than the 13400 when using the RTX 4090 and 9% faster using the 6950 XT. Interestingly, the 5700X was slower using the 6950 XT, while the 13400 delivered its best results with the 6950 XT.

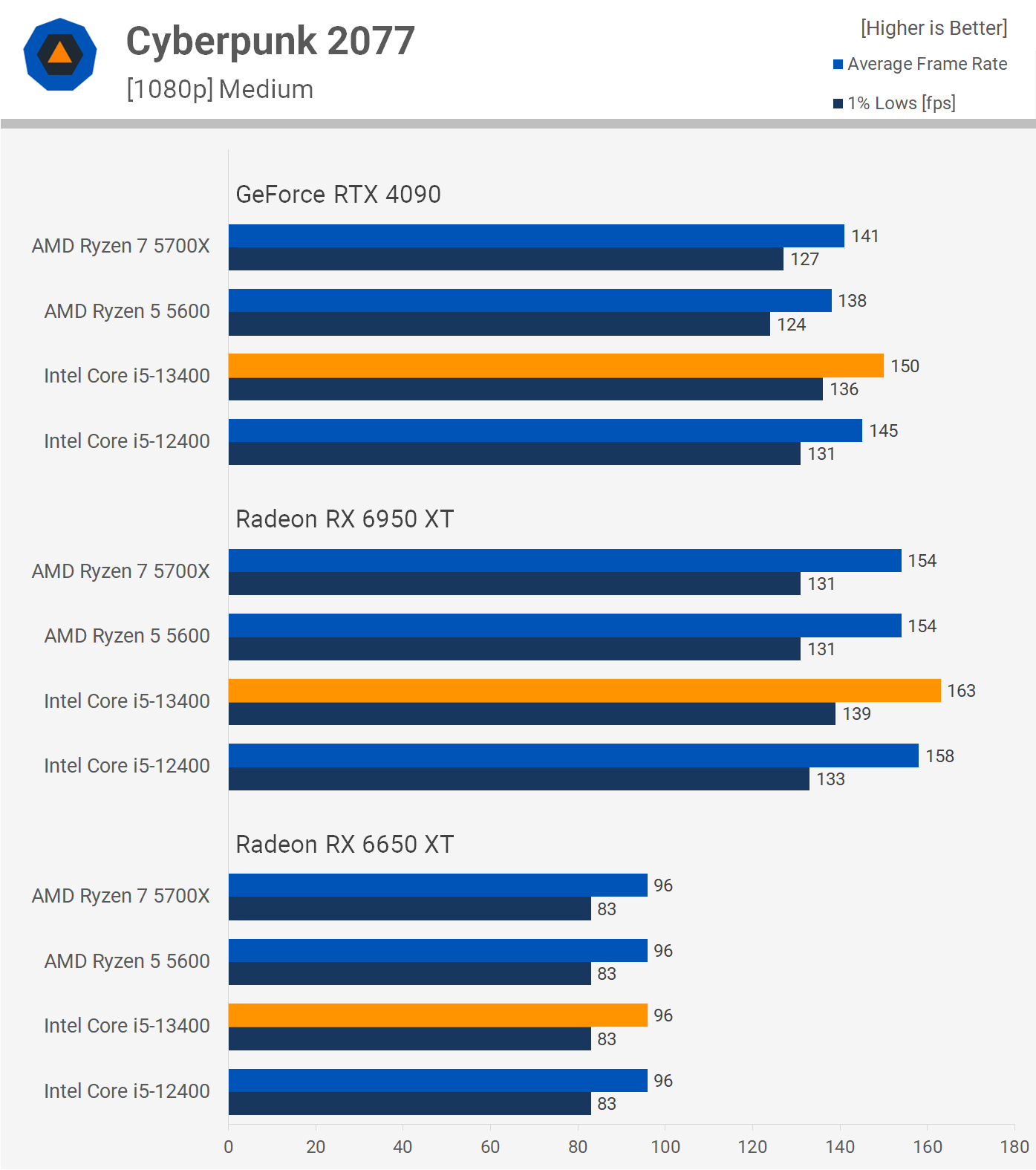

Moving on to the Cyberpunk 2077 results, we find that when CPU limited the game plays best with a Radeon GPU, evidenced by the fact that the 6950 XT was up to 12% faster than the RTX 4090, seen when using the Ryzen 5 5600.

If we look at the 6950 XT results we see that the 13400 is 3% faster than the 12400, while the 5600 and 5700X both hit a wall at 154 fps. Performance overall was very similar though with the 13400 beating the 5700X by a 6% margin.

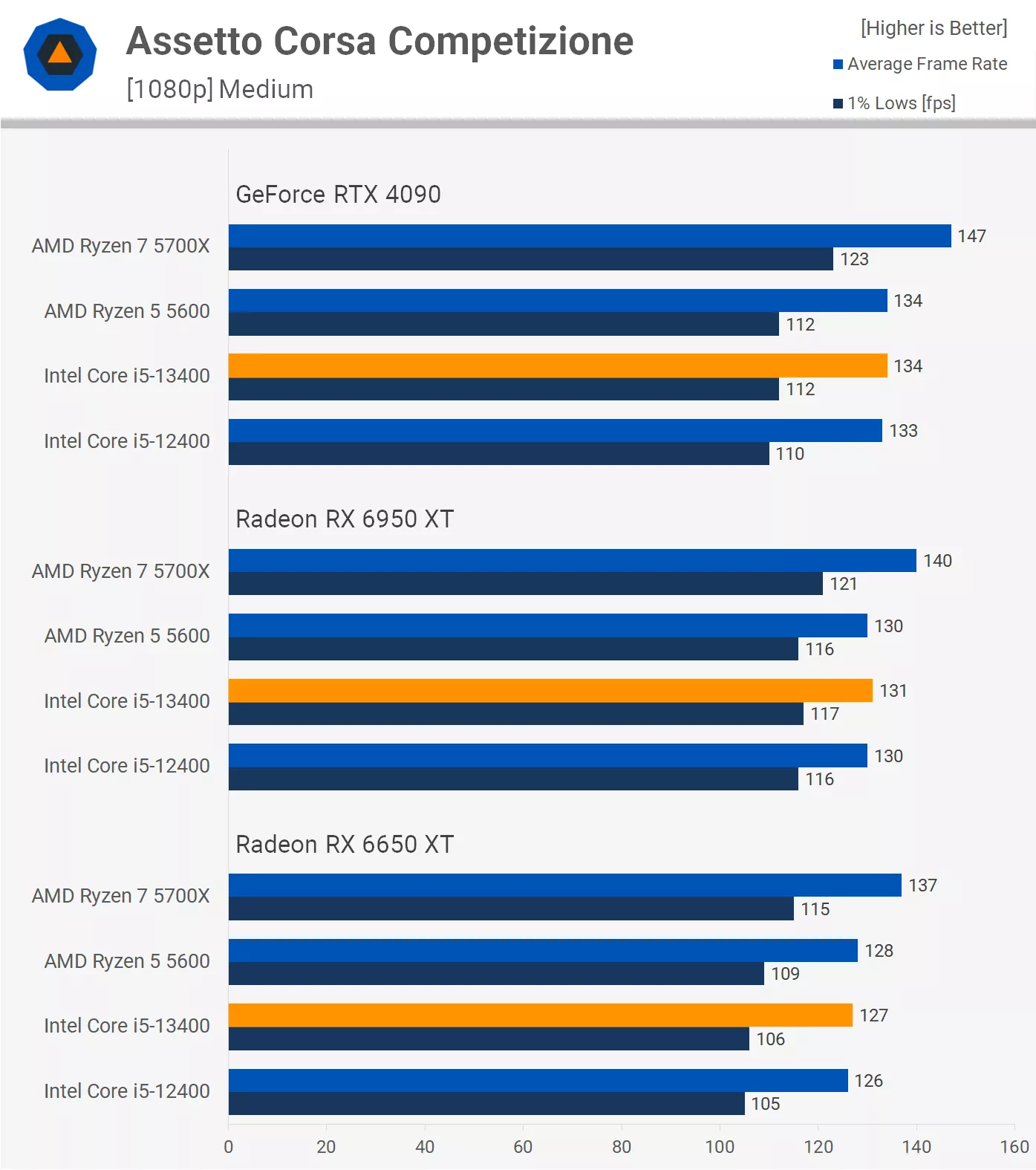

ACC has been tested using the medium quality preset and as a result the game is heavily CPU limited, and we're seeing this even with the 6650 XT.

Still, the Ryzen 5700X was around 7-10% faster than the 5600, which also made it 7-10% faster than the 13400. The extra cores and small frequency advantage the Ryzen processor has over the 5600 are clearly helping, while the small frequency bump the 13400 enjoys over the 12400 is virtually worthless in this example.

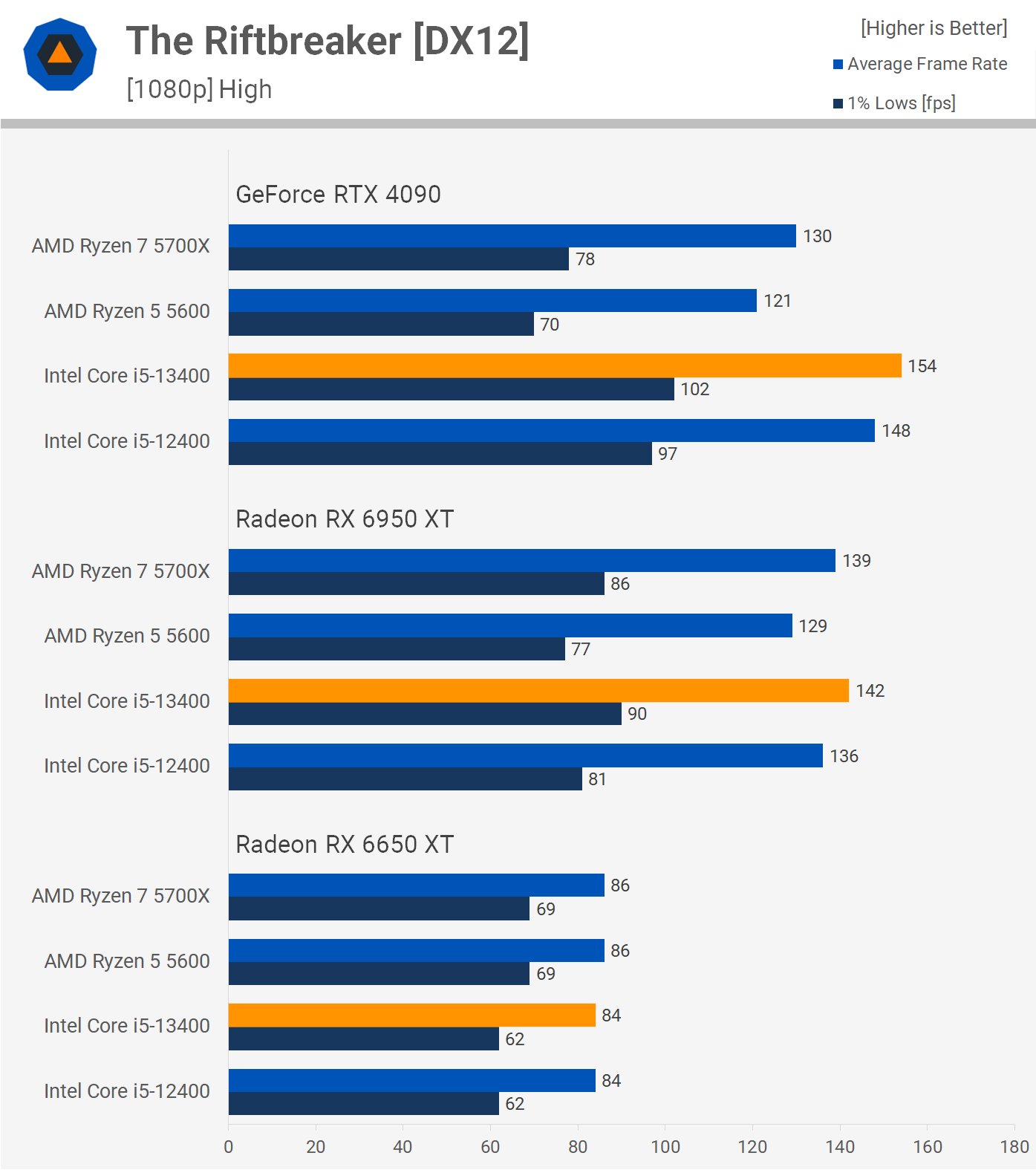

The Riftbreaker results are interesting and perhaps in part explain why the AMD processors typically do poorly in this title for our CPU testing, which normally only features the RTX 4090. Using the Radeon 6950 XT, the 5700X and 13400 delivered basically identical performance at around 140 fps on average.

However, when switching to the RTX 4090 we see some interesting changes. First, the 5700X is slower, dropping 6% of the performance seen with the Radeon GPU. Meanwhile the 13400 gains performance, becoming 8% faster, and now with the GeForce GPU, the Intel processor is a massive 18% faster. So it's possible that, had we used the 7900 XTX, the 13400 and 5700X would have delivered comparable performance.

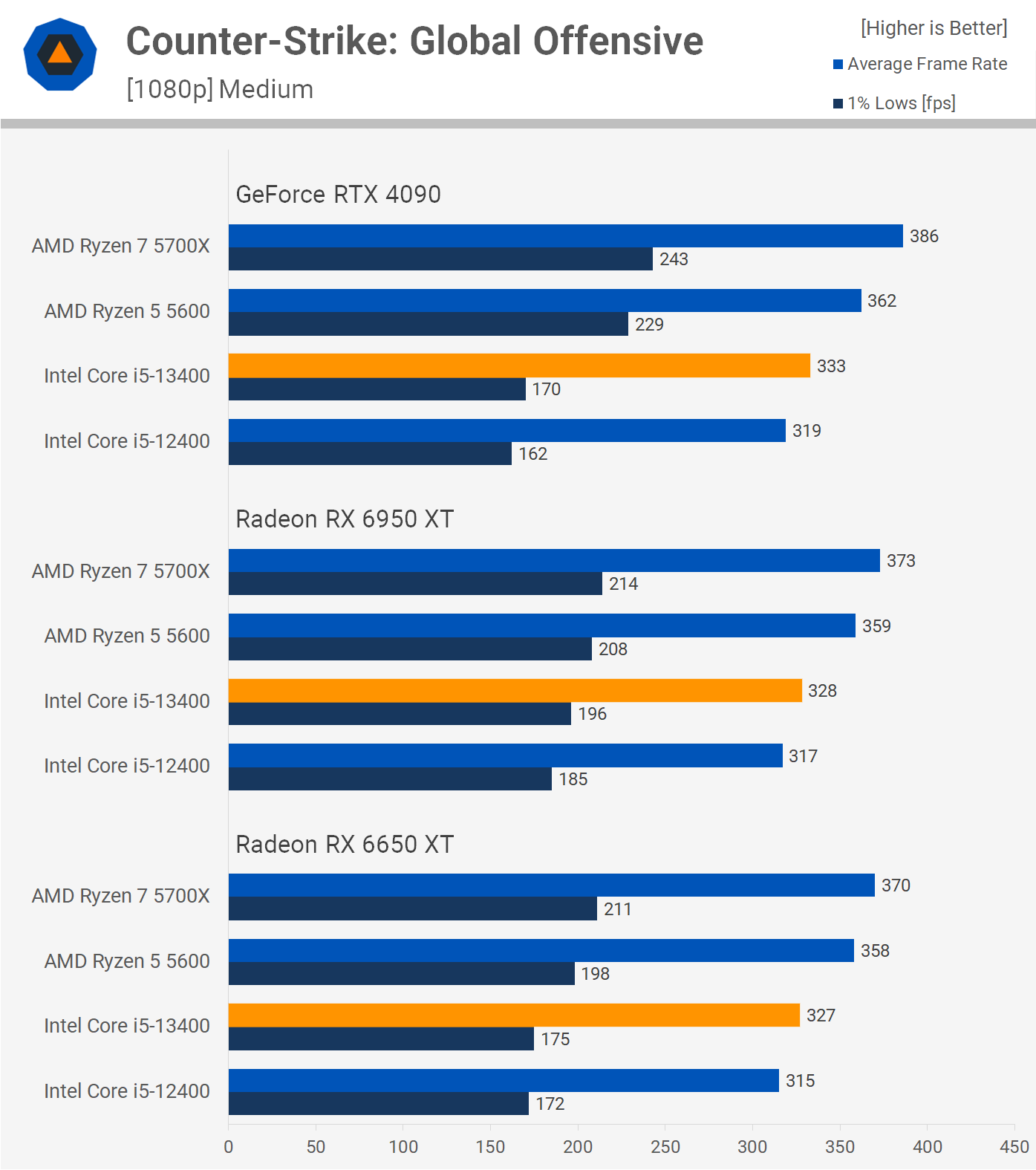

Last up we have Counter-Strike: Global Offensive and as you can probably tell, this title is very CPU limited and that's the case regardless of the visual quality settings used.

Here the 5700X is around 13-16% faster than the 13400 and interestingly the Core i5 processors struggled the most with the RTX 4090, seen when looking at the 1% lows. Still, if you happen to be using a Radeon GPU, it's going to be very difficult to tell the difference between these two CPUs.

12 Game Average

As you can see, the Ryzen 5700X and Core i5-13400 are very evenly matched. Using the RTX 4090, the Ryzen CPU was just 2% faster, or 3% faster using the 6950 XT, and 2% with the 6650 XT. Given those margins are well within 5%, it's fair to say that overall the Ryzen 5700X and i5-13400 deliver the same level of gaming performance.

It's worth noting that for gaming the Core i5-13400 was just 4% faster than the i5-12400, which makes sense given it's only clocked 5% higher. Therefore, when it comes to gaming the i5-13400 isn't an upgrade over the 12400. That also means it's not a great deal faster than the Ryzen 5 5600 for the most part, despite costing significantly more. So from a value perspective the 13400 doesn't offer much.

Cost Per Frame

For our cost per frame analysis we're just going to consider the CPUs given the similarity in pricing for memory and motherboards in this segment. The Ryzen 5 5600 is by far the best value part here, offering a little over 20% more value than the i5-12400 and over 40% more than the i5-13400.

Meanwhile, the Ryzen 5700X is better value than the i5-12400 and significantly better value than the i5-13400, reducing the cost per frame by 27%. Of course, these margins are reduced once you factor in motherboard and memory prices, but the point is the 5700X presents as a far better investment for gamers at $180 than the 13400 does at $240.
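As a rough illustration of how a cost per frame comparison like this works, here is a small Python sketch. It is not the exact calculation behind the figures above: the measured average frame rates aren't reproduced in the text, so it uses a hypothetical normalized baseline of 100 fps for the 13400 and the 2% RTX 4090 margin from the 12 game average.

```python
def cost_per_frame(price_usd: float, avg_fps: float) -> float:
    """Dollars paid per frame per second of average performance."""
    return price_usd / avg_fps


# Hypothetical normalized baseline; only the ratio between the CPUs matters.
baseline_fps = 100.0

cpf_13400 = cost_per_frame(240, baseline_fps)         # i5-13400 at $240
cpf_5700x = cost_per_frame(180, baseline_fps * 1.02)  # 5700X at $180, ~2% faster

reduction = (1 - cpf_5700x / cpf_13400) * 100
print(f"5700X cost per frame is {reduction:.1f}% lower than the 13400's")
```

With these inputs the reduction lands between 26% and 27%, in the same ballpark as the 27% quoted above. The small difference comes down to which GPU's averages are used.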

For productivity the Core i5-13400 will fare better, but even there we've seen in the past that the 5700X and 12600K trade blows depending on the workload. Best case, the 12600K is faster in an application like Cinebench, but again it costs 33% more, so it's not exactly a clear win there.

What We Learned

Not unlike the Core i3-13100, in our opinion the Core i5-13400 is dead on arrival at the current asking price. For Intel to be competitive, these "new" locked 13th-gen parts – which are just 12th-gen processors with a new name – need to launch at the same price points as the original 12th-gen models they are replacing.

So in the case of the i3-13100, instead of setting you back $150, it should have launched at around $130 – though today that's at most a $100 part like the 12100F. The i5-13400 suffers the exact same issue, $240 for this processor makes no sense, hell it costs more than the Ryzen 5 7600.

But worse than that, the 12400 can be had for $180, and while it doesn't have the same four E-cores, gamers aren't going to care about that. Heaven forbid a new chip generation offers something extra at the same price point. Sadly, this no longer seems to be a thing in the PC space, or at least it's becoming increasingly rare. Then, of course, there's the Ryzen 7 5700X which can be had for as little as $180 and the AM4 platform has almost endless affordable quality motherboard options as well as cheap CPU upgrades.

You would only buy the Core i5-13400 over the Ryzen 5700X (or 12400) because you are interested in productivity performance, but in that situation the i5-13500 makes more sense as it costs just $10 more, comes with twice as many E-cores, 20% more L3 cache and clocks 4-6% higher.

Not only that, but the Core i5-13400 will be ~5% slower than the 12600K, and with the 12600K now available for $250, what's the point of the 13400?

At $200 to $250 for a new CPU, your best options right now include the Ryzen 5 7600, Ryzen 7 5700X or Core i5-13500. We could easily make an argument for each of those, they're all valid depending on your preferences, but in short, the 5700X is the value king. This Ryzen chip can take advantage of affordable B550 motherboards and DDR4 memory.

The Core i5-13500 is the budget productivity king, it can be paired with either DDR4 or DDR5 memory, and is supported by a range of Intel 600 and 700 series motherboards. The Ryzen 7600 is the least affordable of the three, but it offers the best gaming performance by far, not to mention it's backed by a superior platform that will support future CPU generations. Unless the Core i5-13400 drops to $180 or less, you can simply ignore it in favor of one of the three processors we just mentioned.