We've been waiting to reexamine Nvidia's Deep Learning Super Sampling for a long time, partly because we wanted new games to come out featuring Nvidia's updated algorithm. We also wanted to ask Nvidia as many questions as we could to really dig into the current state of DLSS.

Today's article is going to cover everything. We'll be looking at the latest titles to use DLSS, focusing primarily on Control and Wolfenstein: Youngblood, to see how Nvidia's DLSS 2.0 (as we're calling it) stacks up. This will include our usual suite of visual comparisons looking at DLSS compared to native image quality, resolution scaling, and various other post-processing techniques. Then, of course, there will be a look at performance across all of Nvidia's RTX GPUs.

We'll also be briefly reviewing the original launch games that used DLSS to see what's changed here, and there will be plenty of discussion on the RTX ecosystem, Nvidia's marketing, expectations, disappointments, and so on. Strap yourselves in because this is going to be a comprehensive look at where DLSS stands today.

First, having covered the topic in detail before, here's a recap of where we're up to with DLSS...

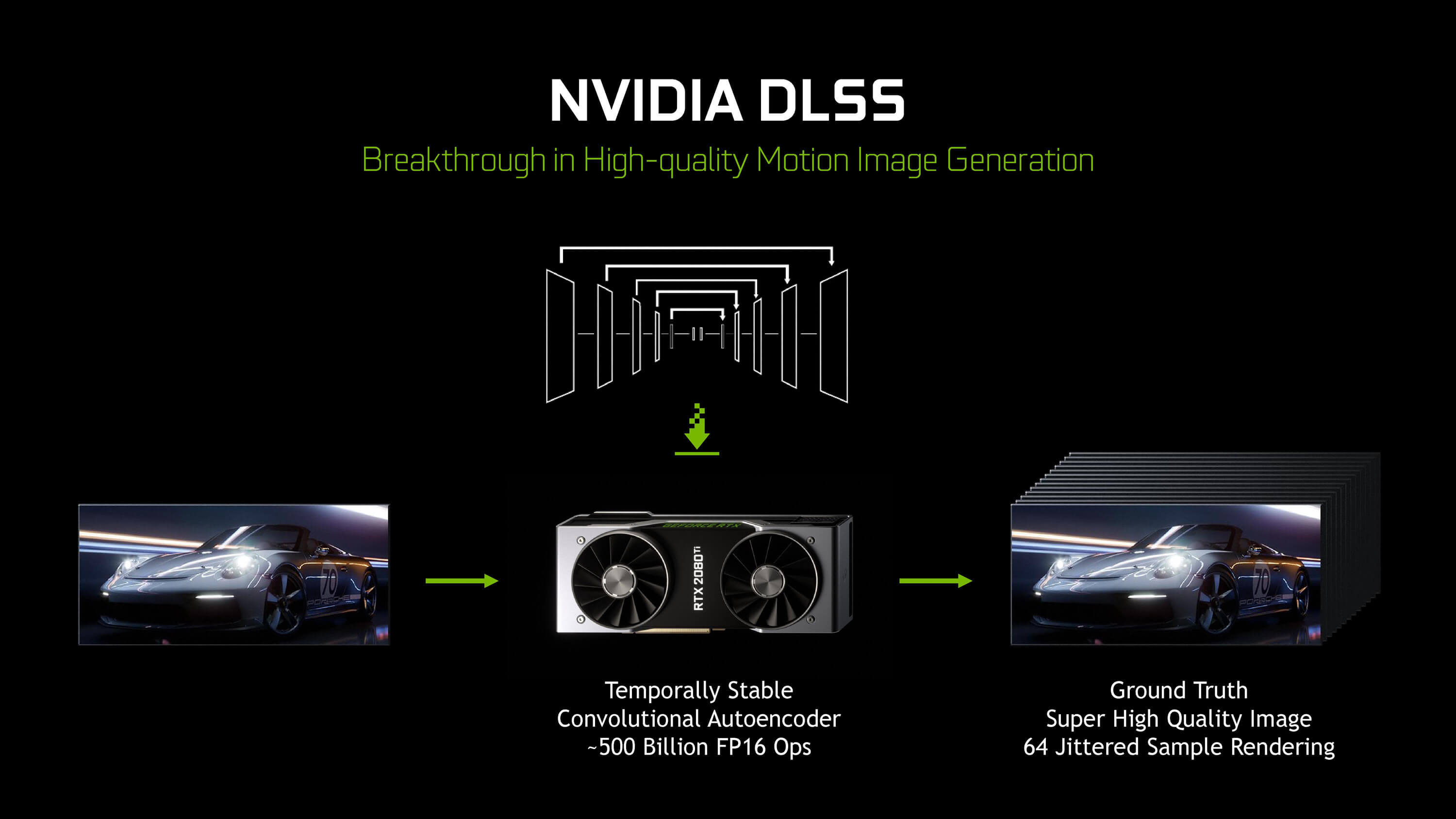

Nvidia advertised DLSS as a key feature of GeForce RTX 20 series GPUs when they launched in September 2018. The idea was to improve gaming performance for those wanting to play at high resolutions with high quality settings, such as ray tracing. It did this by rendering the game at a lower than native resolution, for example, 1440p if your target resolution was 4K, and then upscaling it back to the native res using the power of AI and deep learning. The goal was for this upscaling algorithm to provide native-level image quality with higher performance, giving RTX GPUs more value than they otherwise had at the time.
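The performance headroom comes from shading far fewer pixels. As a rough sketch, assuming standard 16:9 resolutions (with 1800p taken as 3200x1800), here's the arithmetic. Keep in mind that real frame cost doesn't scale perfectly with pixel count, since some work, such as geometry processing and CPU overhead, doesn't depend on resolution.

```python
# Pixel-count arithmetic behind "render low, upscale to native".
# Resolutions are standard 16:9 modes; "1800p" is assumed to be 3200x1800.

RESOLUTIONS = {
    "4K": (3840, 2160),
    "1800p": (3200, 1800),
    "1440p": (2560, 1440),
    "1080p": (1920, 1080),
}

def pixels(name: str) -> int:
    """Total pixel count for a named resolution."""
    w, h = RESOLUTIONS[name]
    return w * h

def shaded_fraction(render: str, target: str) -> float:
    """Fraction of the target resolution's pixels that are actually rendered."""
    return pixels(render) / pixels(target)

for name in ("1800p", "1440p", "1080p"):
    print(f"{name}: {shaded_fraction(name, '4K'):.0%} of 4K's pixels")
```

Rendering at 1440p for a 4K target shades 4/9 of the pixels, about 44 percent. That's a lot of headroom, but only if the upscaling step itself is cheap.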

This AI algorithm also leveraged a new feature on RTX Turing GPUs: the tensor cores. While these cores are most likely included on the GPU to make it also suitable for data center and workstation use cases, Nvidia found a way to use this hardware feature for gaming. Later, Nvidia decided to ditch the tensor cores for their cheaper Turing GPUs in their GTX 16 series products, so DLSS ended up only being supported on 20 series RTX products.

While all this sounded promising, the execution within the first 9 months was far from perfect. Early DLSS implementations looked bad, producing a blurry image with artifacts. Battlefield V was a particularly egregious case, but even Metro Exodus failed to impress.

One of the major issues with the initial version of DLSS was that it did not provide an experience better than existing resolution scaling techniques. The implementation in Battlefield V, for example, looked worse and performed worse than a simple resolution upscale. In Metro Exodus it was more on par with these techniques, but it wasn't impressive either. As DLSS was locked down to certain quality settings and resolutions on certain GPUs, and was only supported in a very limited selection of games, it didn't make sense to use DLSS instead of resolution scaling.

Following the disappointing result, Nvidia decided to throw the original version of DLSS in the bin, at least that's what it sounded like based on our discussions with the company. Instead, for the short term they released a better sharpening filter for their FreeStyle tools that would enhance the resolution scaling experience, while they worked on a new version of DLSS in-house.

The first step towards DLSS 2.0 was the release of Control. This game doesn't use the "final" version of the new DLSS, but what Nvidia calls an "approximation" of the work-in-progress AI network. This approximation was worked into an image processing algorithm that ran on the standard shader cores, rather than Nvidia's special tensor cores, but attempted to provide a DLSS-like experience. For the sake of simplicity, we're going to call this DLSS 1.9, and we'll talk about this more when we look at DLSS in Control.

Late in 2019 though, Nvidia got around to finalizing the new DLSS – we feel the upgrade is significant enough to warrant it being called DLSS 2.0. There are fundamental changes to the way DLSS works with this version, including the removal of all restrictions, so DLSS now works at any resolution and quality settings on all RTX GPUs. It also no longer requires per-game training, instead using a generalized training system, and it runs at higher performance. These changes required a significant update to the DLSS SDK, so it's not backwards compatible with original DLSS titles.

So far, we've seen two titles that use DLSS 2.0: Wolfenstein: Youngblood and the indie title Deliver Us the Moon. We'll be focusing primarily on Youngblood as it's a major release.

Nvidia tells us that DLSS 2.0 is the version that will be used in all DLSS-enabled games going forward; the shader core version, DLSS 1.9, was a one-off and will only be used for Control. But we think it's still important to talk about what Nvidia has done in Control, both to see how DLSS has evolved and also to see what is possible with a shader core image processing algorithm, so let's dive into it.

Control + DLSS

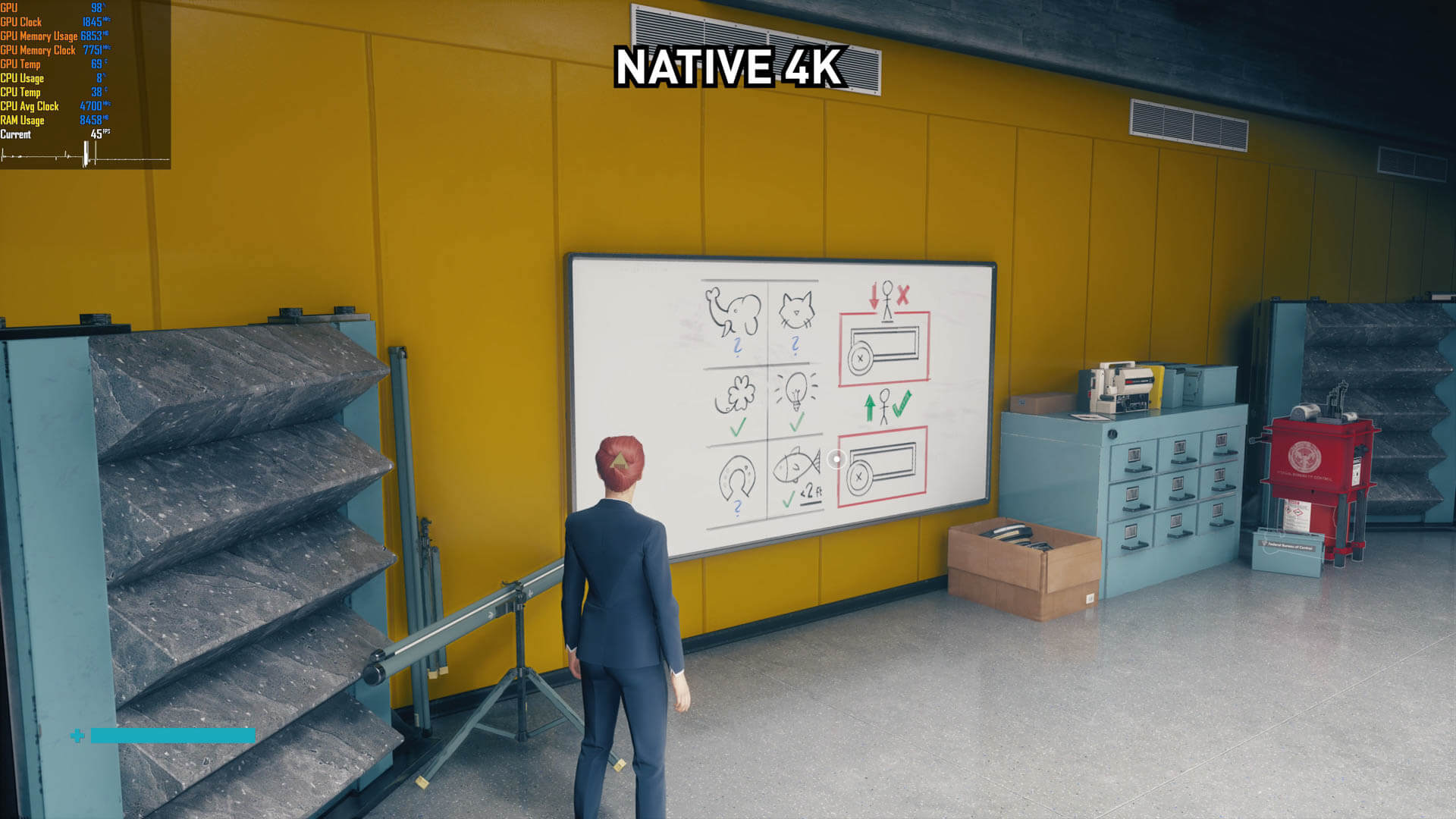

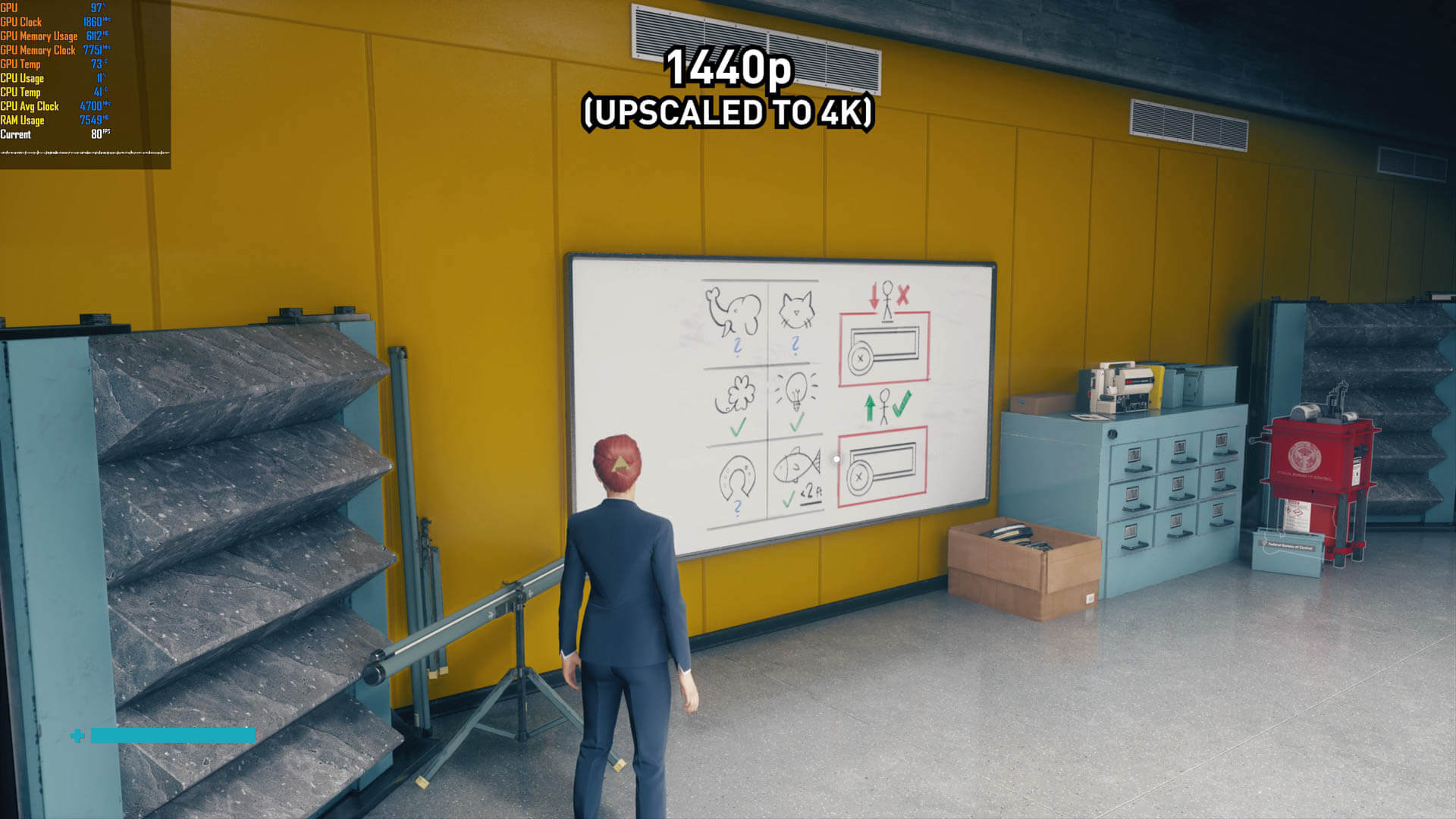

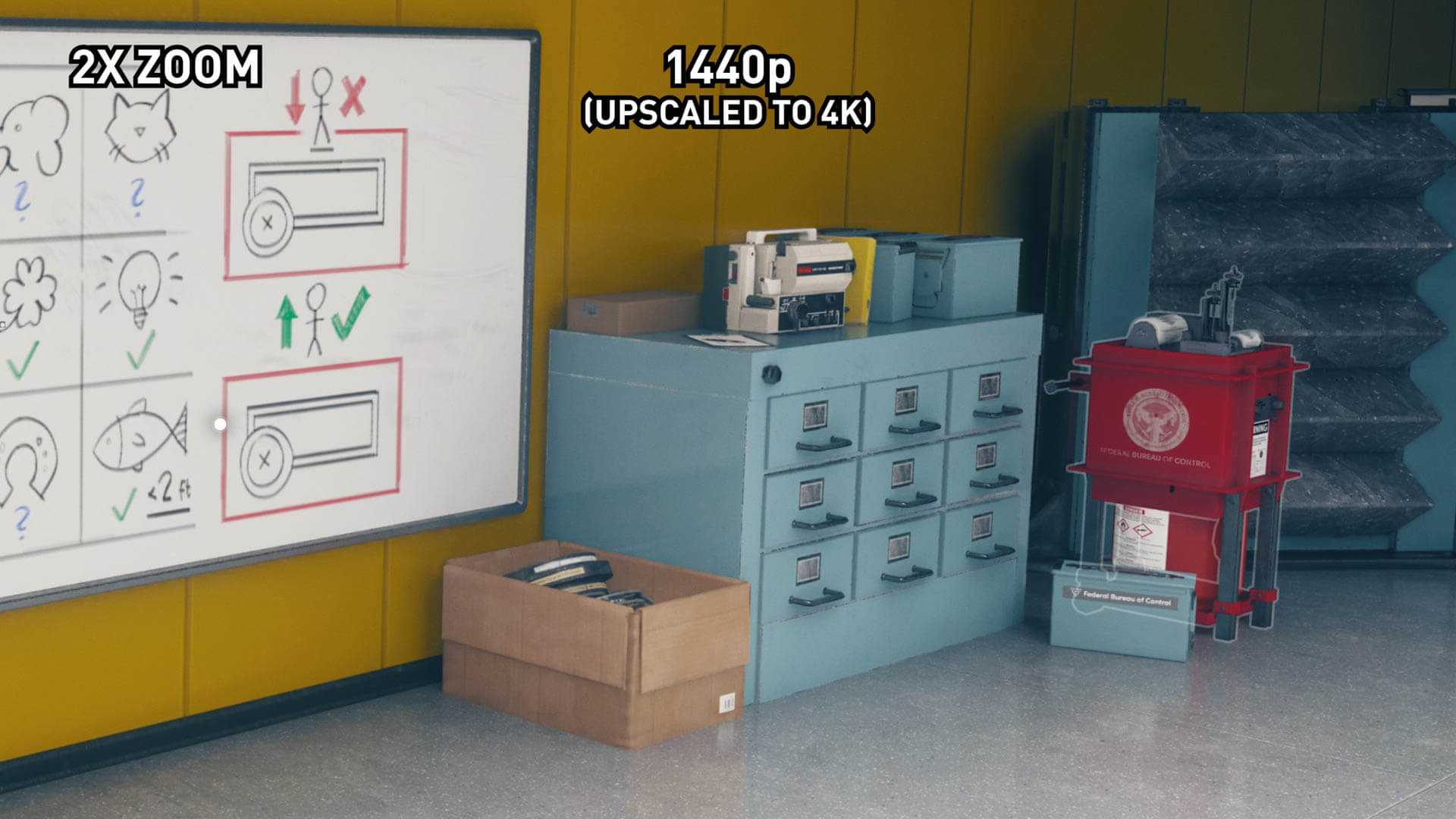

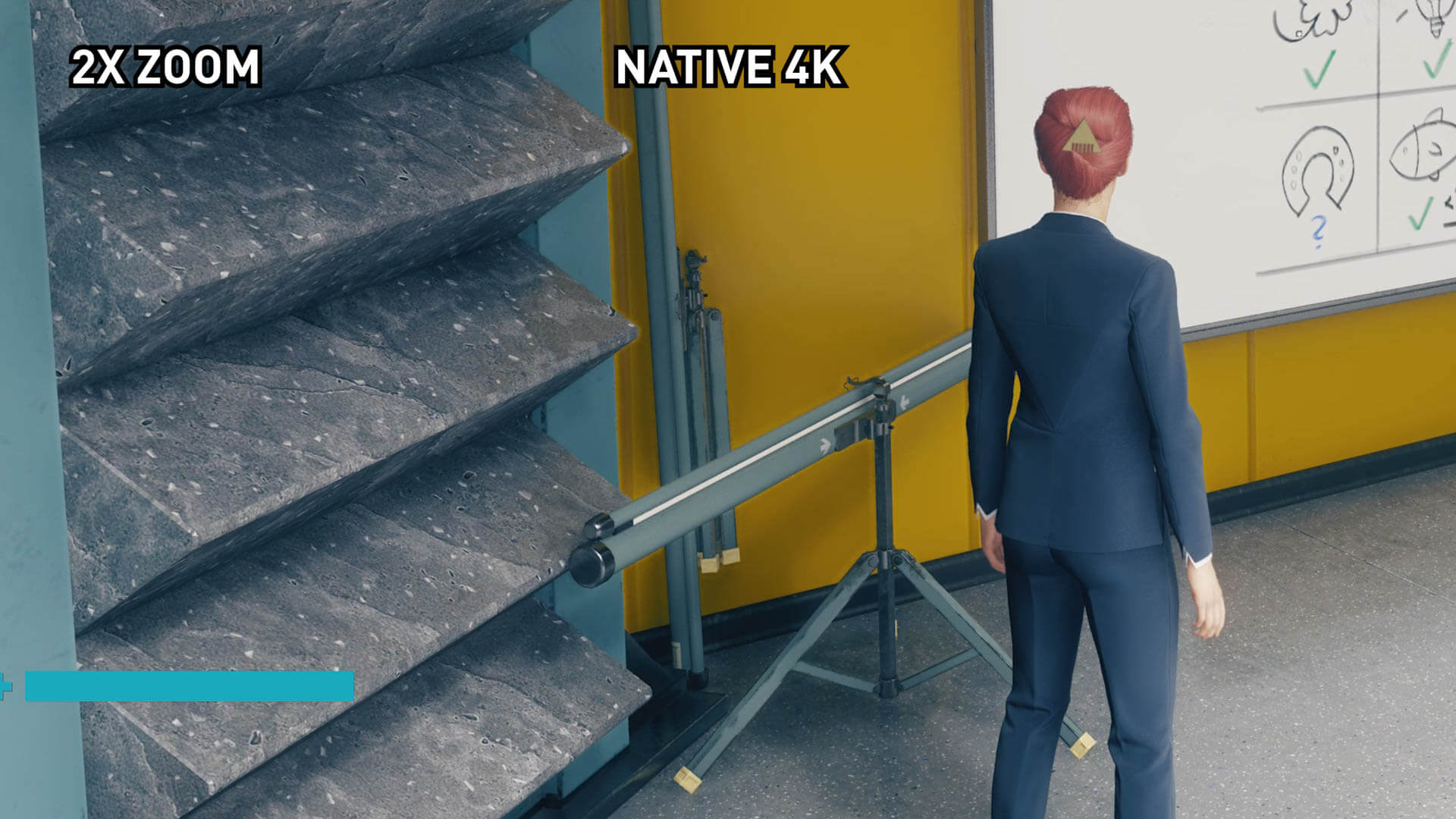

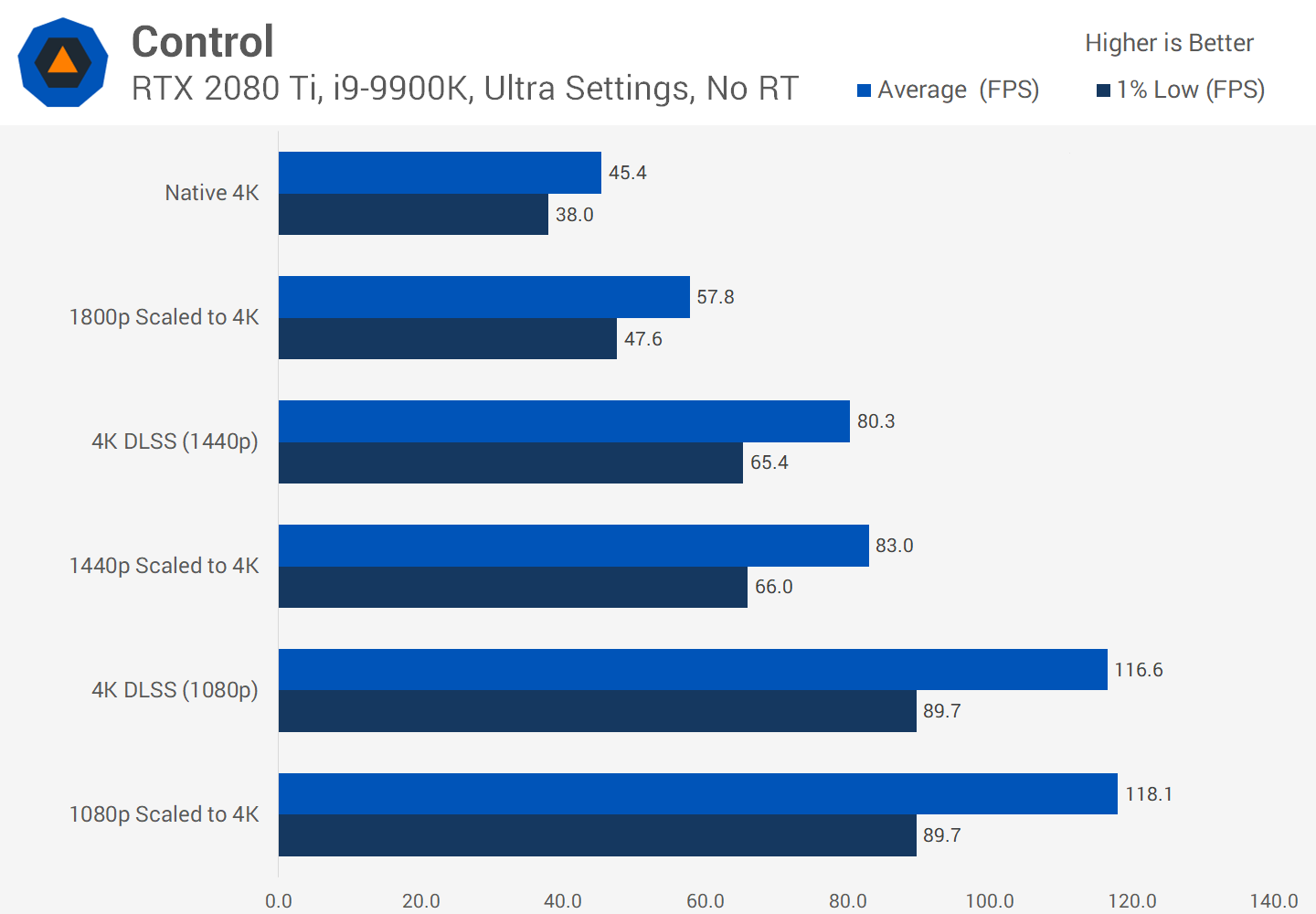

With a target resolution of 4K, DLSS 1.9 in Control is impressive. More so when you consider this is an approximation of the full technology running on the shader cores. The game allows you to select two render resolutions, which at 4K gives you the choice of 1080p or 1440p, depending on the level of performance and image quality you desire.

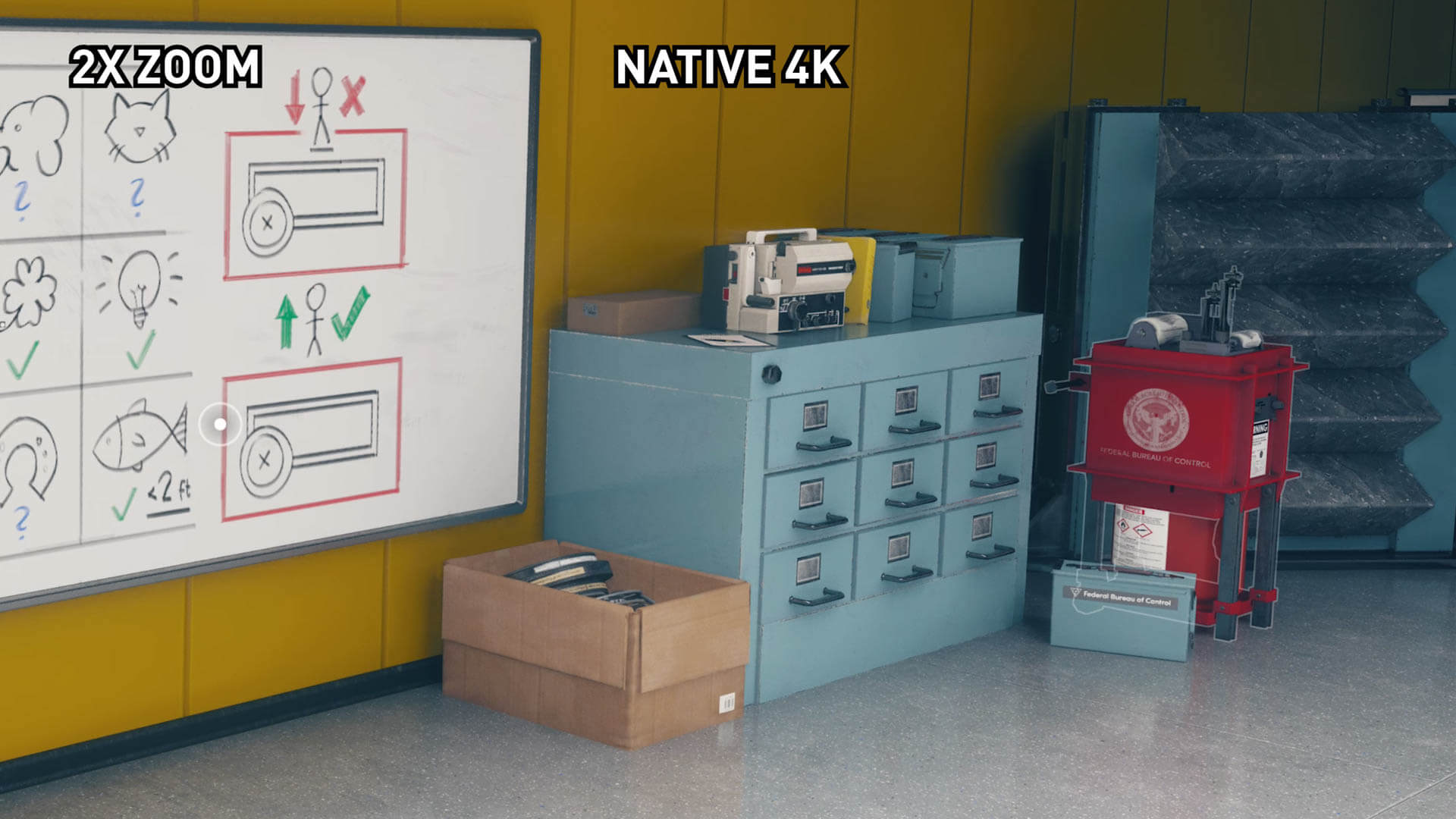

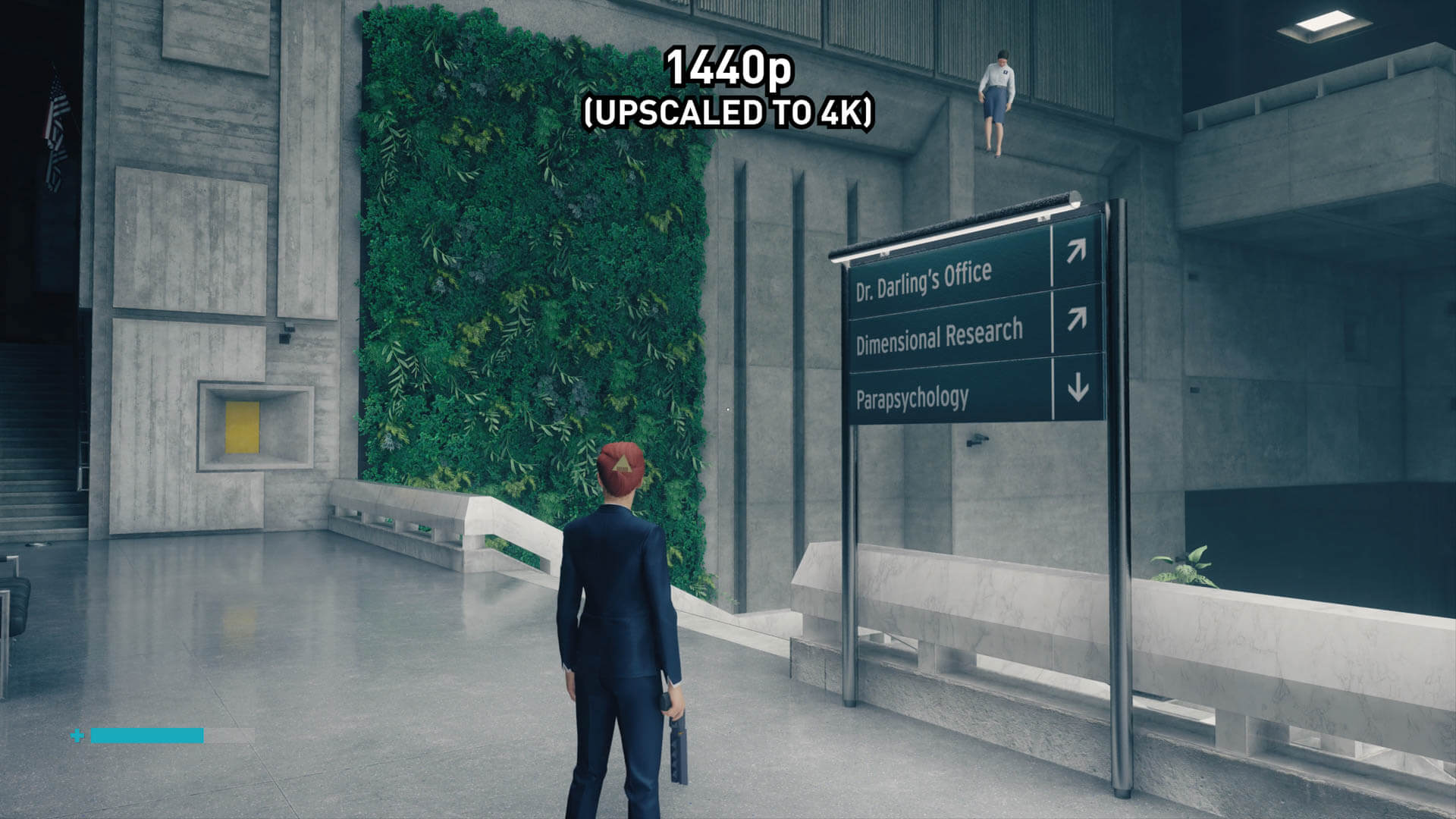

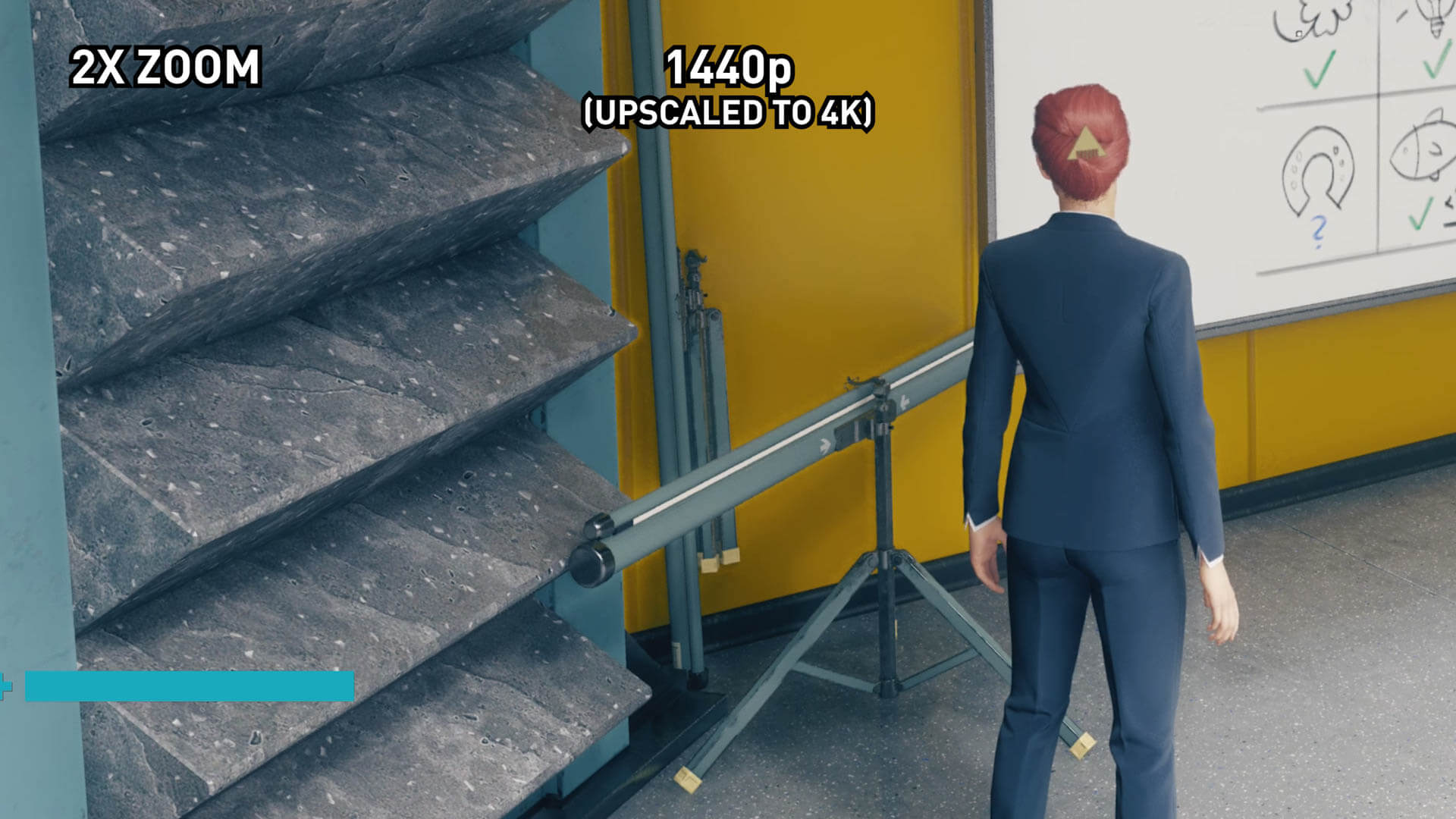

DLSS with a 1440p render resolution is the better of the two options. It doesn't provide the same level of sharpness or clarity as native 4K, but it gets pretty close. It's also close overall to a scaled 1800p image. In some areas DLSS is better, in others it's worse, but the slightly softer image DLSS provides is quite similar to a small resolution scale. Unlike previous versions of DLSS, though, it doesn't suffer from any oil painting artifacts or weird reconstructions when the upscale from 1440p to 4K is applied. The output quality is very good.

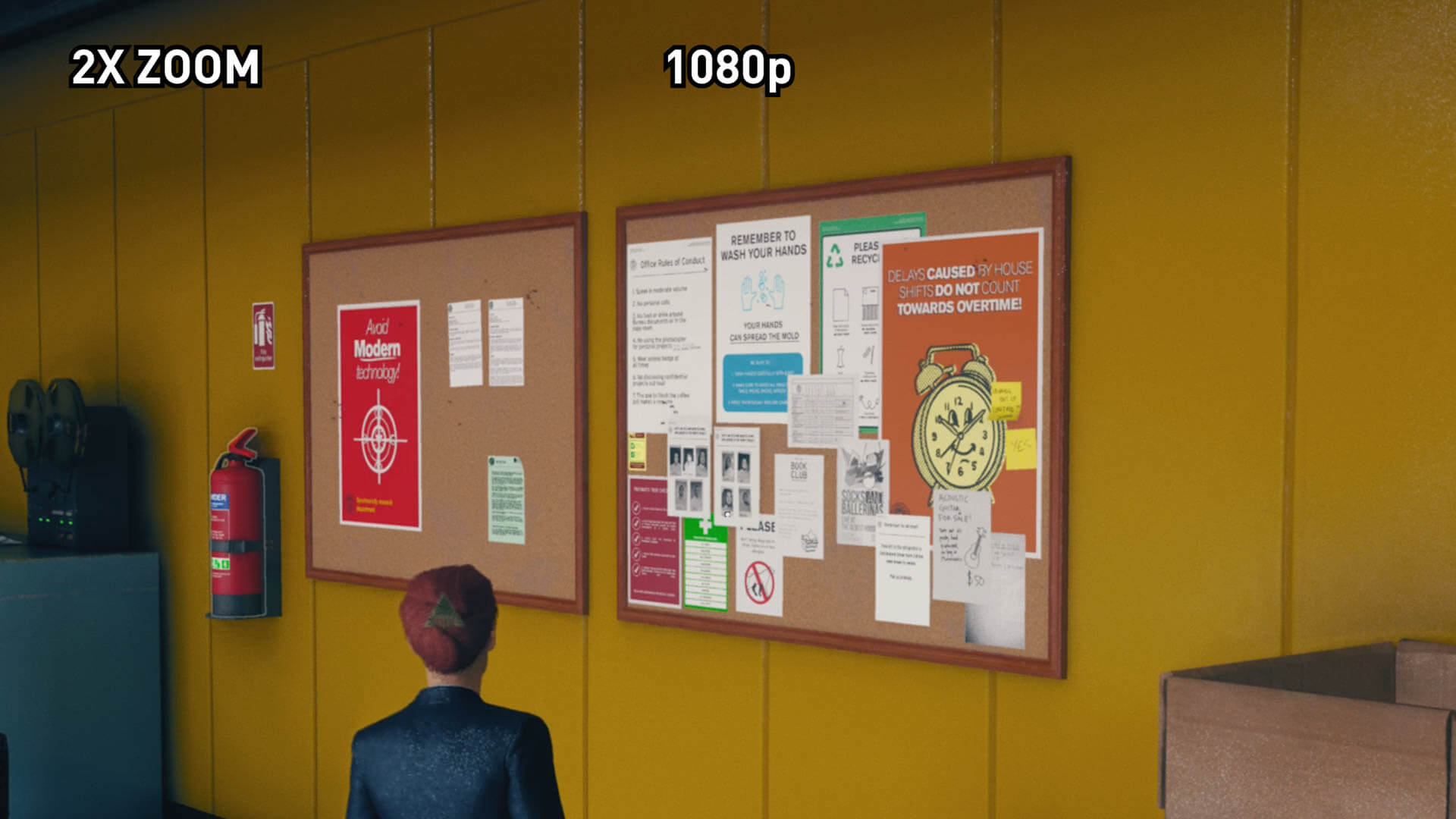

We can also see that DLSS rendering at 1440p is better than simply playing the game at 1440p. Some of the differences are subtle and require zooming in to see the better edge handling and cleaner lines, but the differences are there: we'd much rather play at 1440p DLSS than native 1440p.

That's not to say DLSS 1.9 is perfect. It does seem to use a temporal reconstruction technique, combining information from multiple frames into one for a more detailed image, and this shows up as a weakness with some of the fine details around the Control game world. Grated air vents in particular trouble the image processing algorithm and produce flickering that isn't present in either the native or the 1800p scaled image. These super fine wires and lines throughout the environment seem to consistently give DLSS the most trouble, although image quality for larger objects is decent.
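To illustrate why thin geometry is a weak spot for temporal techniques, here's a minimal, generic sketch of temporal accumulation. It is not Nvidia's algorithm; the one-dimensional "scene", the jitter sequence and the blend factor `alpha` are all assumptions chosen for illustration. Each frame samples every pixel once at a different sub-pixel offset and blends the result into a running history buffer.

```python
# Generic temporal accumulation sketch (NOT Nvidia's algorithm).
# A 1D row of pixels; each frame takes one jittered sample per pixel and
# blends it into a running history with an exponential moving average.

WIRE_START, WIRE_END = 2.35, 2.65  # hypothetical thin wire covering 30% of pixel 2

def scene(x: float) -> float:
    """Continuous 'ground truth': 1.0 on the thin wire, 0.0 elsewhere."""
    return 1.0 if WIRE_START <= x < WIRE_END else 0.0

def render_frame(width: int, jitter: float) -> list[float]:
    """One aliased frame: a single sample per pixel at sub-pixel offset `jitter` in [0, 1)."""
    return [scene(px + jitter) for px in range(width)]

def accumulate(width: int, frames: int, alpha: float = 0.1) -> list[float]:
    """Blend `frames` jittered frames into a history buffer (alpha = weight of the newest frame)."""
    history = render_frame(width, 0.5)
    for i in range(1, frames):
        jitter = (i * 0.6180339887) % 1.0  # golden-ratio sequence spreads offsets evenly
        current = render_frame(width, jitter)
        history = [(1 - alpha) * h + alpha * c for h, c in zip(history, current)]
    return history

print("single frames:", [render_frame(5, j)[2] for j in (0.1, 0.5, 0.9)])
print("accumulated:  ", round(accumulate(5, 200)[2], 2))
```

A wire narrower than a pixel is either hit or missed by any single sample, so individual frames flicker between 0 and 1, while the blended history hovers around the wire's actual 30 percent coverage. When the history can't be reused, for example when it's rejected due to motion, the output falls back toward those aliased single-frame samples, which is one plausible source of the vent flicker.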

Previously we found that DLSS targeting 4K was able to produce image quality similar to an 1800p resolution scale, and that hasn't changed much with Control's implementation, although, as we've just discussed, we do think the quality is better overall and basically equivalent to (or occasionally better than) the scaled version. But the key difference between older versions of DLSS and this new version is the performance.

Previously, running DLSS came with a performance hit relative to whatever resolution it was rendering at. So 4K DLSS, which used a 1440p render resolution, was slower than running the game at native 1440p, as the upscaling algorithm used a substantial amount of processing time. This ended up delivering around 1800p-like performance with 1800p-like image quality, hence our original lack of enthusiasm.

However, this shader-processed version is significantly less performance intensive. 4K DLSS with a 1440p render target performed on par with native 1440p, a significant improvement over the 1800p-like performance we got previously. This also clearly makes DLSS 1.9 the best upscaling technique we have, because it offers superior image quality to 1440p with performance on par with 1440p; essentially, there's next to no performance hit here.
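A toy frame-time model makes the difference between the two versions clearer. The millisecond figures below are hypothetical, picked only to show the shape of the trade-off: an upscaled frame costs the render at the lower resolution plus a separate upscaling pass, so a heavy enough pass can eat the entire saving.

```python
# Toy frame-time model; every millisecond figure here is hypothetical.

def fps(frame_time_ms: float) -> float:
    """Convert a per-frame time in milliseconds to frames per second."""
    return 1000.0 / frame_time_ms

def upscaled_fps(render_ms: float, upscale_ms: float) -> float:
    """FPS when each frame is rendered at low resolution, then upscaled in a separate pass."""
    return fps(render_ms + upscale_ms)

RENDER_1440P_MS = 12.0  # hypothetical time to render one 1440p frame
RENDER_1800P_MS = 17.0  # hypothetical time to render one 1800p frame

heavy = upscaled_fps(RENDER_1440P_MS, upscale_ms=5.0)  # costly upscaling pass (older DLSS-like)
light = upscaled_fps(RENDER_1440P_MS, upscale_ms=0.5)  # cheap upscaling pass (DLSS 1.9-like)

print(f"native 1800p:          {fps(RENDER_1800P_MS):.1f} FPS")
print(f"1440p + heavy upscale: {heavy:.1f} FPS")
print(f"native 1440p:          {fps(RENDER_1440P_MS):.1f} FPS")
print(f"1440p + light upscale: {light:.1f} FPS")
```

With a 5 ms pass, rendering at 1440p and upscaling lands exactly at the hypothetical 1800p frame rate; with a 0.5 ms pass it stays within a few percent of native 1440p.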

Another way to look at it is we get an 1800p-like image, with the performance of 1440p, which is simply better than we can achieve with any resolution scaling option.

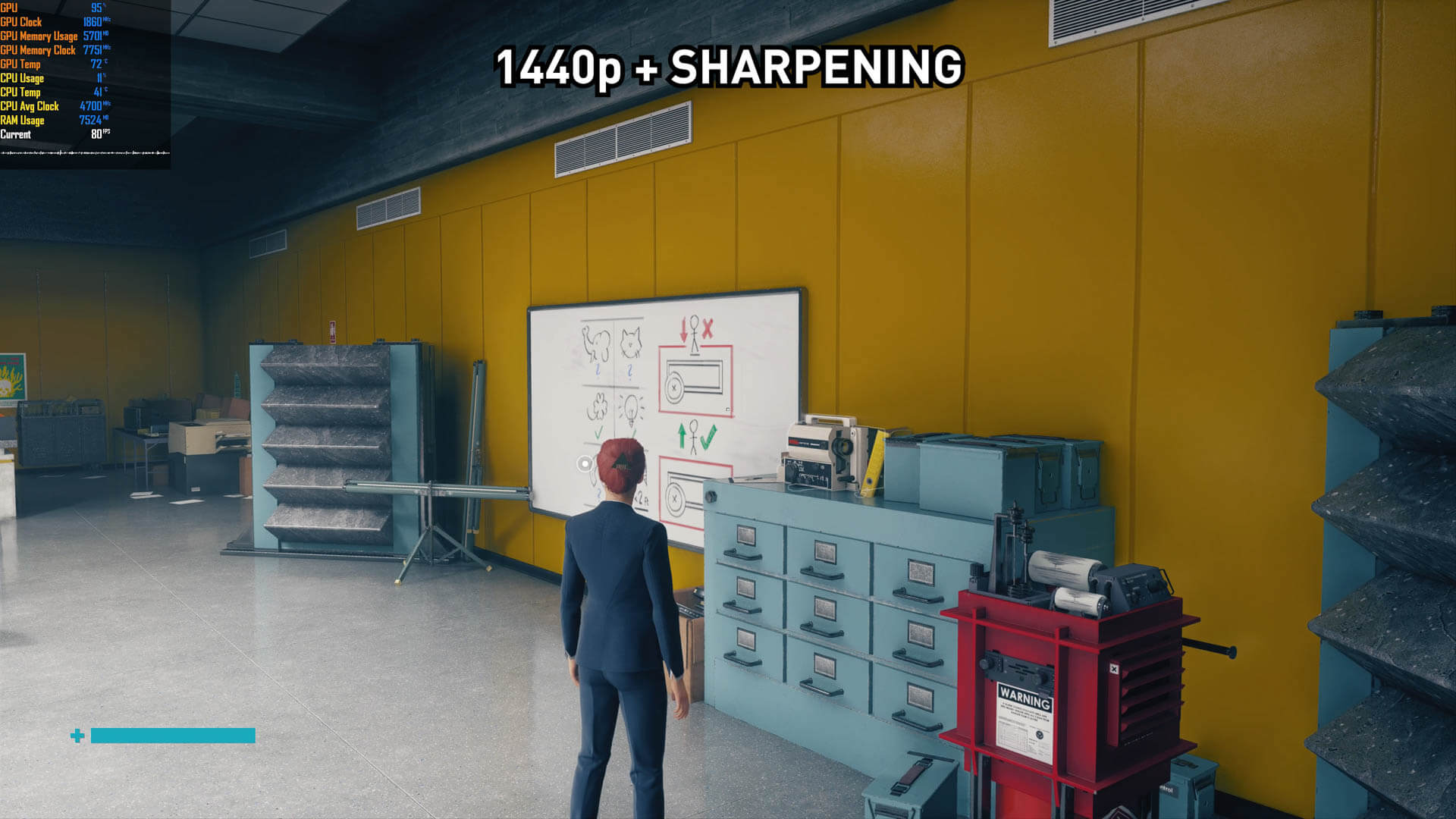

You'll notice that we haven't mentioned image sharpening and how that factors in. With previous DLSS iterations, scaled 1800p provided better non-sharpened image quality with fewer artifacts at the same performance level, which made 1800p a better base rendering option to then sharpen from. With sharpening, you want to use the best native image you can and go from there, which is why we preferred using 1800p + sharpening to match 4K rather than using either native DLSS or sharpened DLSS. The results were better.

But with "DLSS 1.9/2.0," the tables have turned and now it makes more sense to sharpen the DLSS image if you want to try and further match native image quality. That's because unlike with previous versions, at equivalent performance levels we do get better image quality with DLSS this time. Sharpening DLSS rendering at 1440p to try and emulate 4K gives you much better results than trying to sharpen a simple 1440p upscaling job.
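The base image matters because sharpening only amplifies detail (and artifacts) that are already there. Here's a generic unsharp mask applied to a single row of pixel values. It's a textbook technique, not necessarily what FreeStyle or any in-game sharpening filter actually uses, and the 3-tap blur and `amount` value are assumptions.

```python
# Generic unsharp mask on a 1D row of pixel values in [0.0, 1.0].
# Illustration only: not the filter used by FreeStyle or any specific game.

def blur3(row: list[float]) -> list[float]:
    """3-tap box blur, clamping at the edges of the row."""
    n = len(row)
    return [(row[max(i - 1, 0)] + row[i] + row[min(i + 1, n - 1)]) / 3 for i in range(n)]

def unsharp_mask(row: list[float], amount: float = 0.5) -> list[float]:
    """Add back `amount` of the high-frequency detail (original minus blur), then clamp."""
    blurred = blur3(row)
    return [min(1.0, max(0.0, p + amount * (p - b))) for p, b in zip(row, blurred)]

soft_edge = [0.2, 0.2, 0.4, 0.6, 0.8, 0.8]
print([round(v, 3) for v in unsharp_mask(soft_edge)])
```

The pixels on either side of the soft edge are pushed apart (0.2 drops to about 0.17, 0.8 rises to about 0.83), which increases perceived contrast. Any artifact in the input gets the same boost, which is why sharpening a cleaner DLSS image beats sharpening a plain 1440p upscale.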

While DLSS in Control is nice for anyone targeting 4K and using a 1440p render resolution, the results outside of this specific combination are lackluster. When targeting 4K and using the lower 1080p render resolution, temporal artifacts become more obvious and at times jarring. The image is also softer than with a 1440p resolution, which is to be expected, although performance is solid.

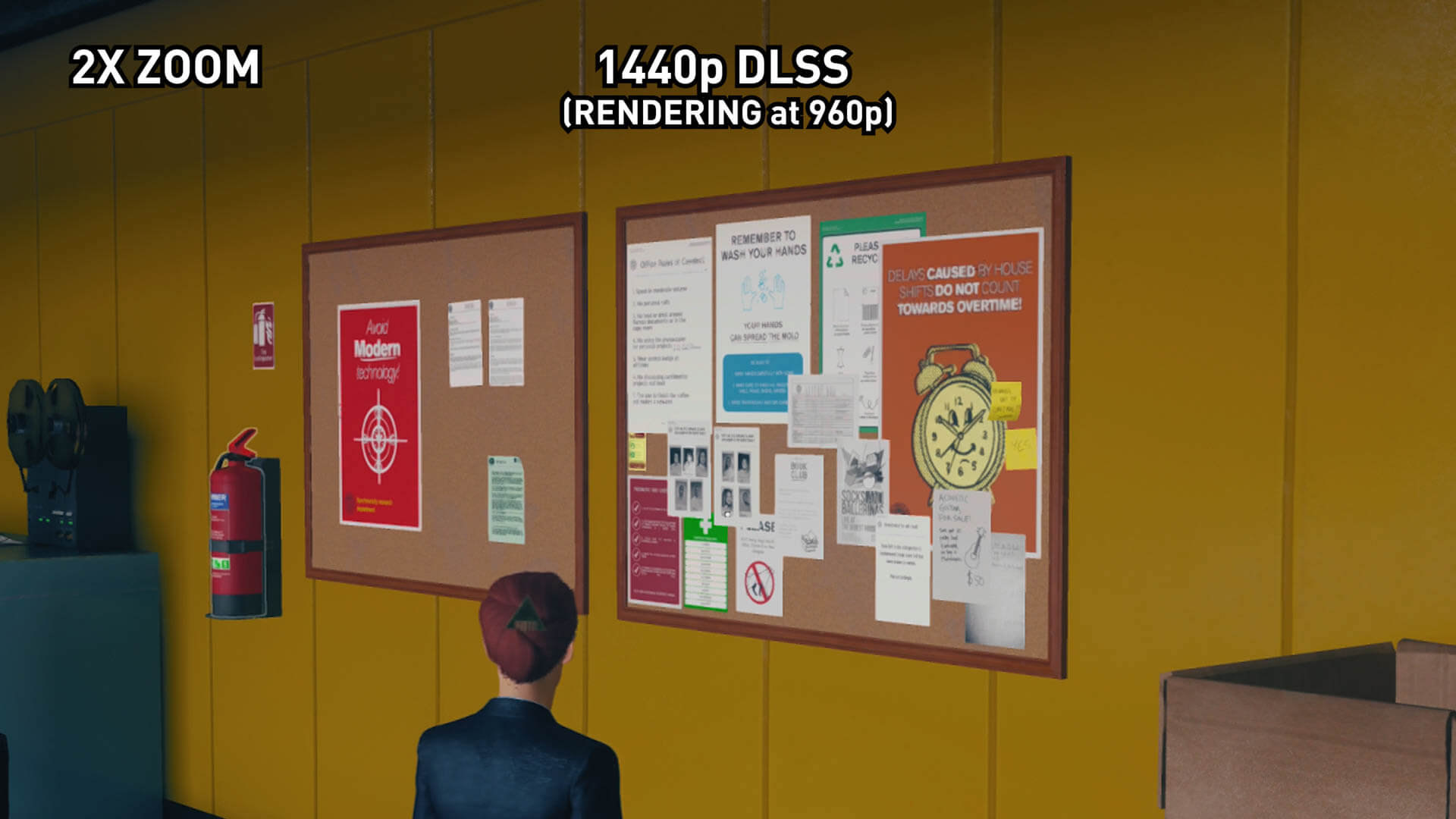

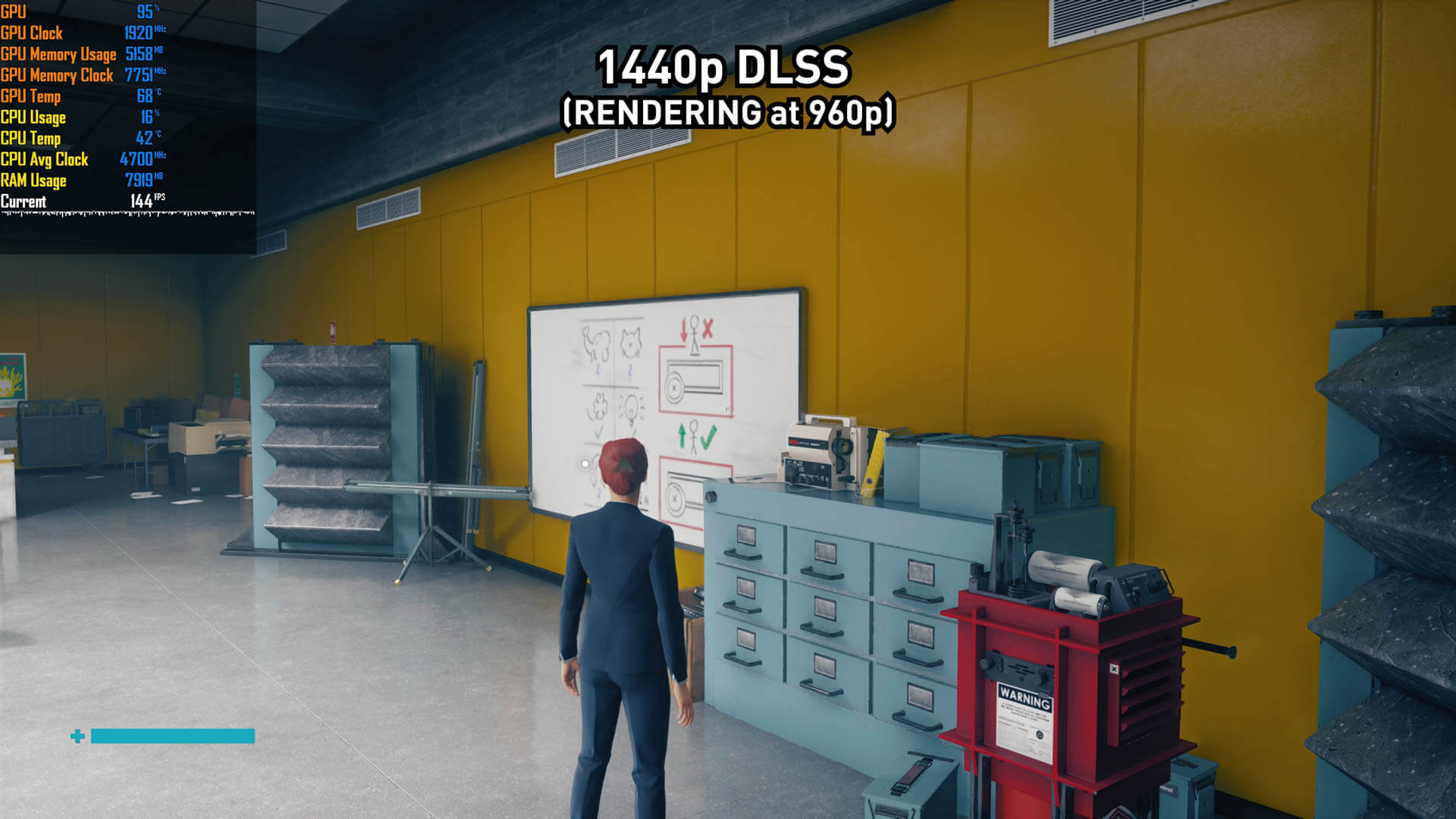

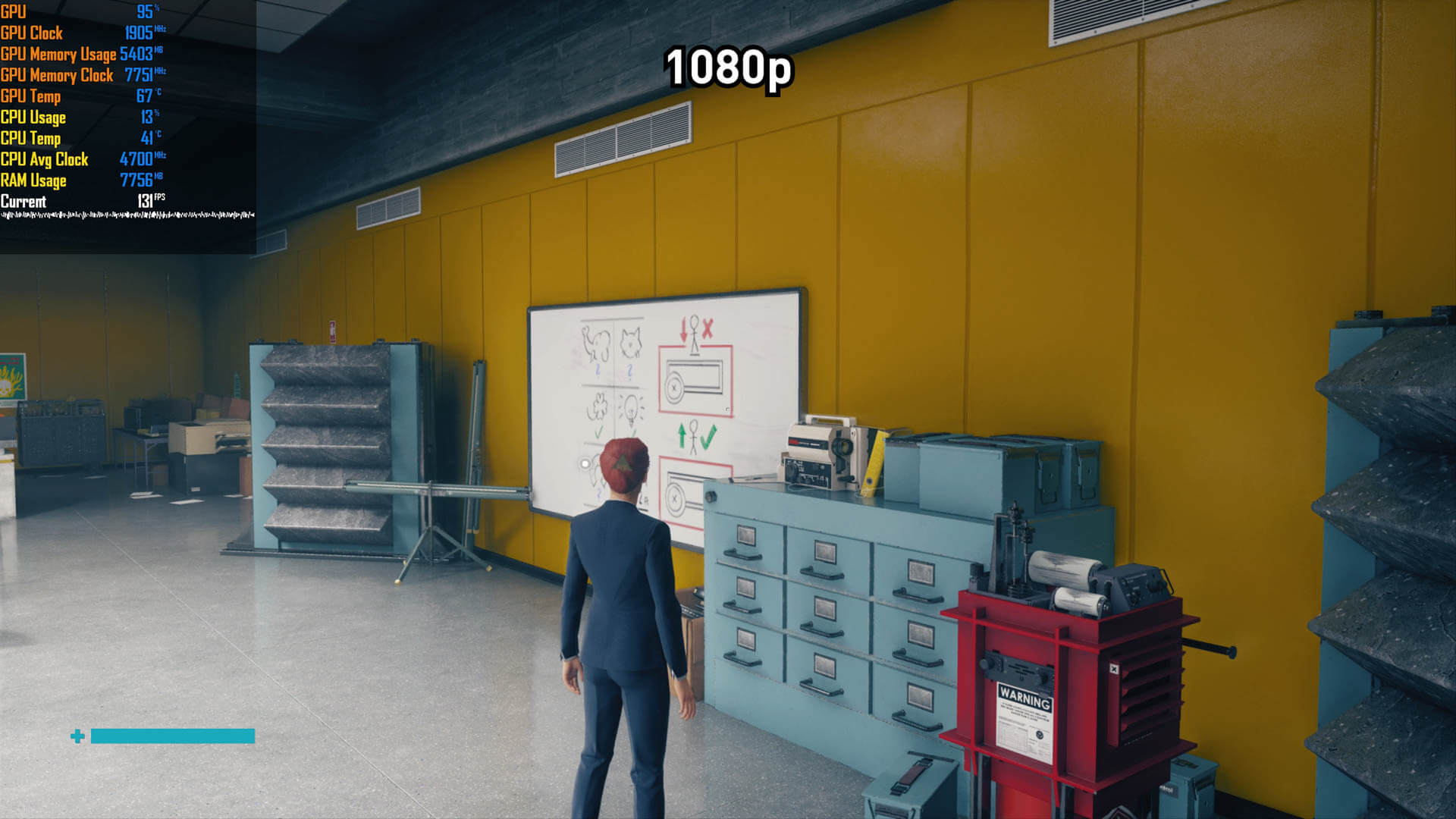

Using DLSS with a target resolution below 4K is also not a good idea. When targeting 1440p we're presented with either 960p or 720p as the render resolutions, and when rendering at either of these resolutions there's simply not enough detail to reconstruct a great looking image. Even the higher quality option, 960p, delivered far from a native 1440p image. This algorithmic approximation of DLSS is just not suited to these lower resolutions.

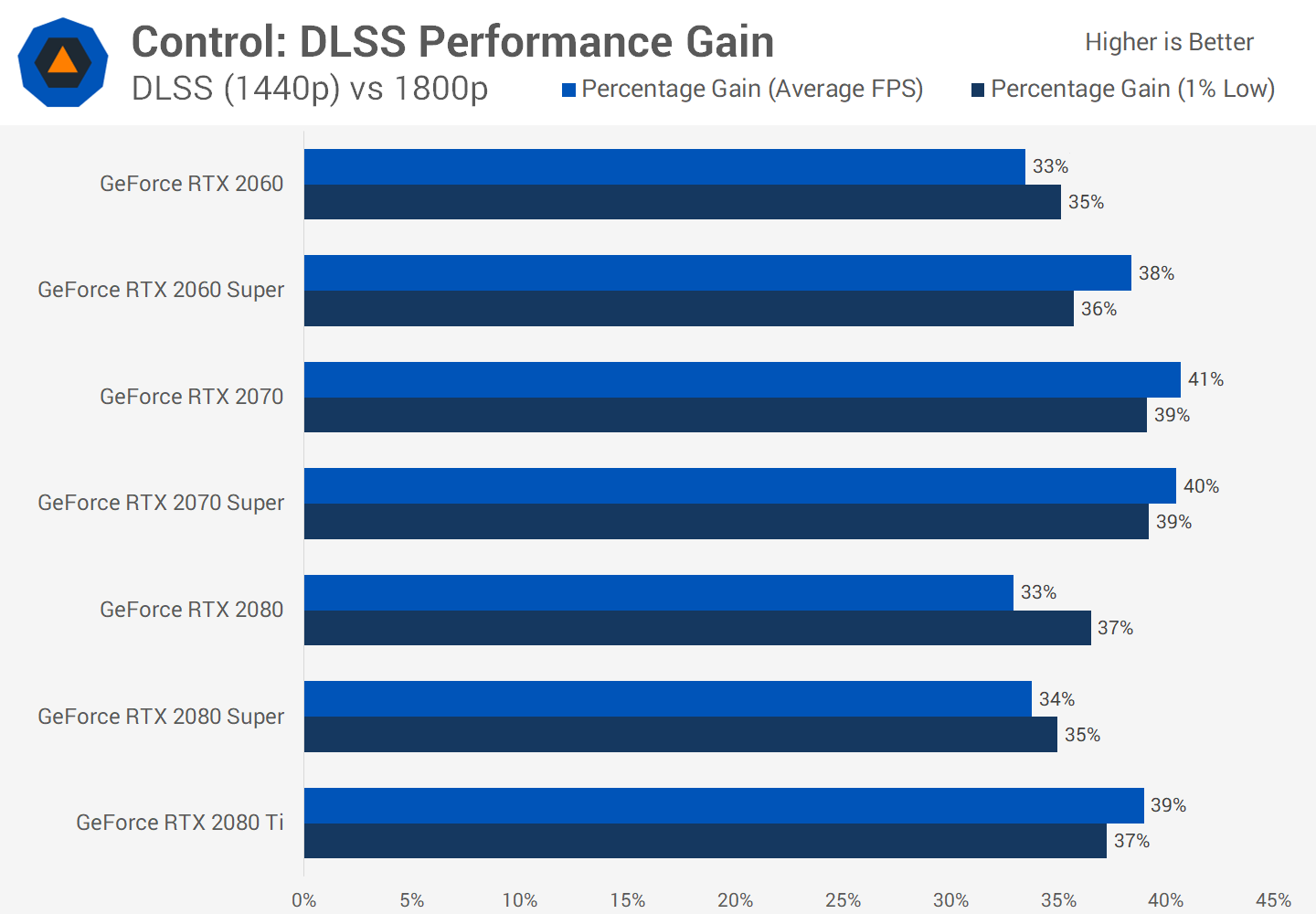

With this in mind, let's explore the performance benefit we get from Control's optimal configuration: a target resolution of 4K while rendering at 1440p. We're comparing it to Control running at 1800p, the option with equivalent visual quality, to see the pure performance benefit. The benchmarks were run on our Core i9-9900K test rig with 16GB of RAM, using Ultra settings with 2x MSAA when DLSS was disabled.

The performance gains we see with each RTX GPU are fairly consistent across the board. When visual quality is made roughly equal, DLSS is providing between 33 and 41 percent more performance, which is a very respectable uplift.
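Percentage FPS gains are easy to misread as percentage time savings. Here's a quick sketch of both conversions (the function names are our own, for illustration):

```python
def percent_uplift(fps_on: float, fps_off: float) -> float:
    """Percent more frames per second with a feature on versus off."""
    return (fps_on / fps_off - 1) * 100

def frame_time_saving(uplift_pct: float) -> float:
    """Percent less time per frame implied by a given percent FPS uplift."""
    return (1 - 1 / (1 + uplift_pct / 100)) * 100

for gain in (33, 41):
    print(f"+{gain}% FPS -> {frame_time_saving(gain):.1f}% less time per frame")
```

So a 33 to 41 percent frame rate uplift corresponds to each frame taking roughly 25 to 29 percent less time.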

This first batch of results from Control with the shader version of DLSS is impressive, which raises the question: why did Nvidia feel the need to go back to an AI model running on tensor cores for the latest version of DLSS? Couldn't they just keep working on the shader version and open it up to everyone, such as GTX 16 series owners? We put the question to Nvidia, and the answer was pretty straightforward: Nvidia's engineers felt that they had reached the limits of the shader version.

Concretely, switching back to tensor cores and using an AI model allows Nvidia to achieve better image quality, better handling of some pain points like motion, better low resolution support and a more flexible approach. Apparently this implementation for Control required a lot of hand tuning and was found to not work well with other types of games, whereas DLSS 2.0 back on the tensor cores is more generalized and more easily applicable to a wide range of games without per-game training.

Not needing per-game training for DLSS 2.0 is huge. This allows the new model to take all of the knowledge and data it's learned across a wide variety of games and apply it all at once, rather than relying on a specific set of training data from a single game. This has provided better image quality, but it also gives Nvidia another advantage: generalized DLSS updates.

While the first version of DLSS required individual updates to DLSS on a per-game basis to improve quality - and this rarely happened - DLSS 2.0 should improve over time as Nvidia trains the AI model as a whole. Nvidia told us that starting with this new version, they'll be able to update DLSS via Game Ready drivers without the need for game patches. We'll see whether that materializes, but it's an improvement over what was previously possible.

Another benefit of not needing per-game training is that it makes DLSS faster and easier to integrate. This should mean more DLSS games, but we'll reserve judgment until that actually happens.

Wolfenstein: Youngblood + DLSS

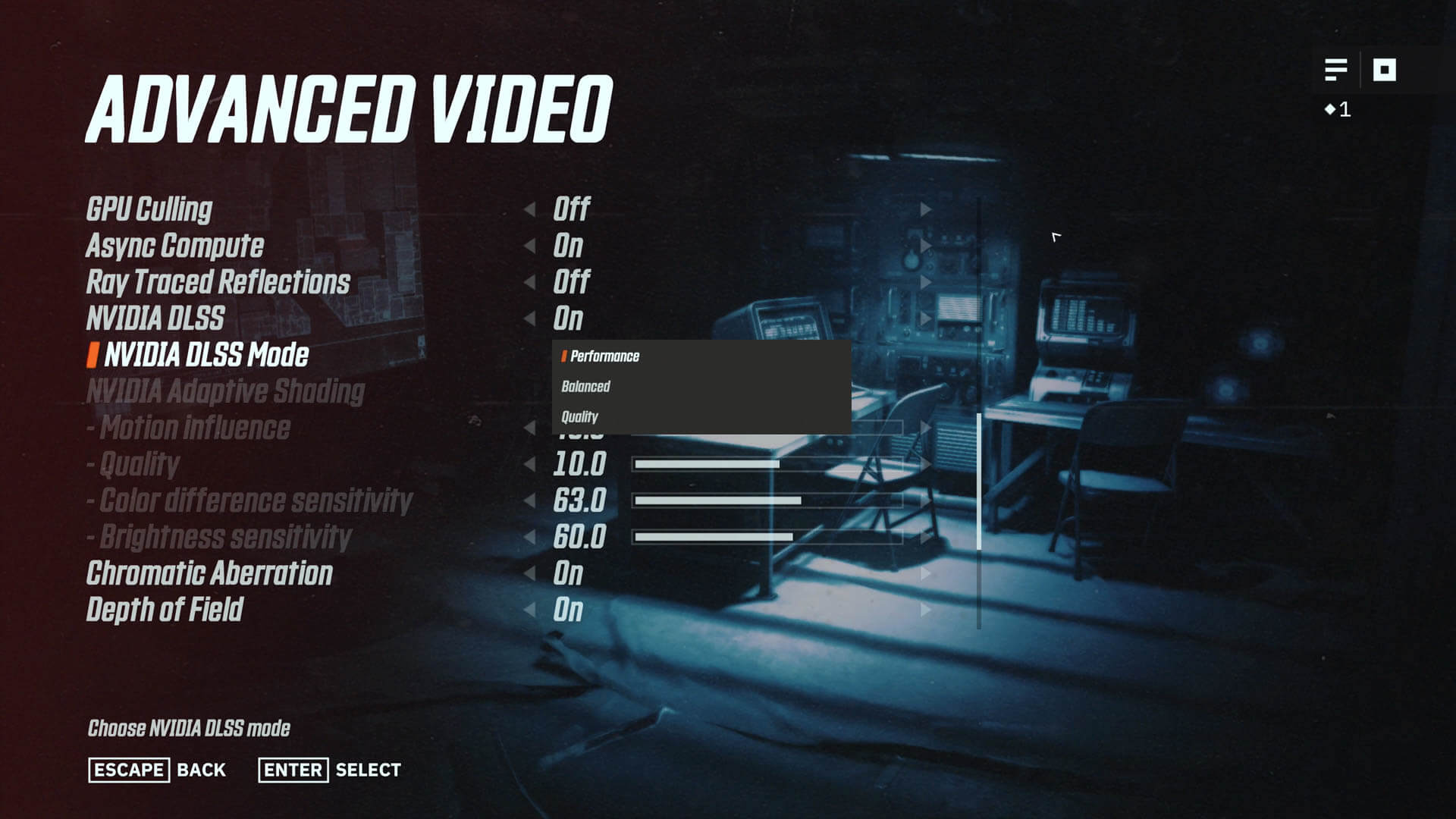

Time to take an in-depth look at DLSS 2.0 in Wolfenstein: Youngblood. Unlike previous DLSS implementations, there are now three quality options to choose from: Quality, Balanced and Performance. All three upscale the game from a lower render resolution, so the 'Quality' mode isn't a substitute for the still absent DLSS 2X mode, announced at launch, which was meant to render at native resolution.
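The article doesn't list the internal resolution each mode uses. The sketch below assumes the per-axis scale factors commonly reported for DLSS 2.0 (about 67% for Quality, 58% for Balanced and 50% for Performance); treat those values as an assumption, not something we measured in Youngblood.

```python
# Per-axis scale factors commonly reported for DLSS 2.0 modes.
# ASSUMPTION: these values are not stated in or measured for this article.
MODE_SCALE = {
    "Quality": 2 / 3,
    "Balanced": 0.58,
    "Performance": 0.5,
}

def render_resolution(target: tuple[int, int], mode: str) -> tuple[int, int]:
    """Internal render resolution for a target output resolution and DLSS mode."""
    scale = MODE_SCALE[mode]
    width, height = target
    return round(width * scale), round(height * scale)

for target in [(3840, 2160), (2560, 1440), (1920, 1080)]:
    for mode in MODE_SCALE:
        w, h = render_resolution(target, mode)
        print(f"{target[0]}x{target[1]} {mode:<11} -> {w}x{h}")
```

Under those assumptions, Quality at 4K renders at 2560x1440, and at a 1440p target the Quality and Performance modes land at 960p and 720p, the same render resolutions Control offers. At lower targets the absolute number of input pixels shrinks quickly, which would fit with the lower-quality modes holding up less well at 1440p and 1080p.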

The big question is whether DLSS 2.0 is any good, and we're pleased to say that it is.

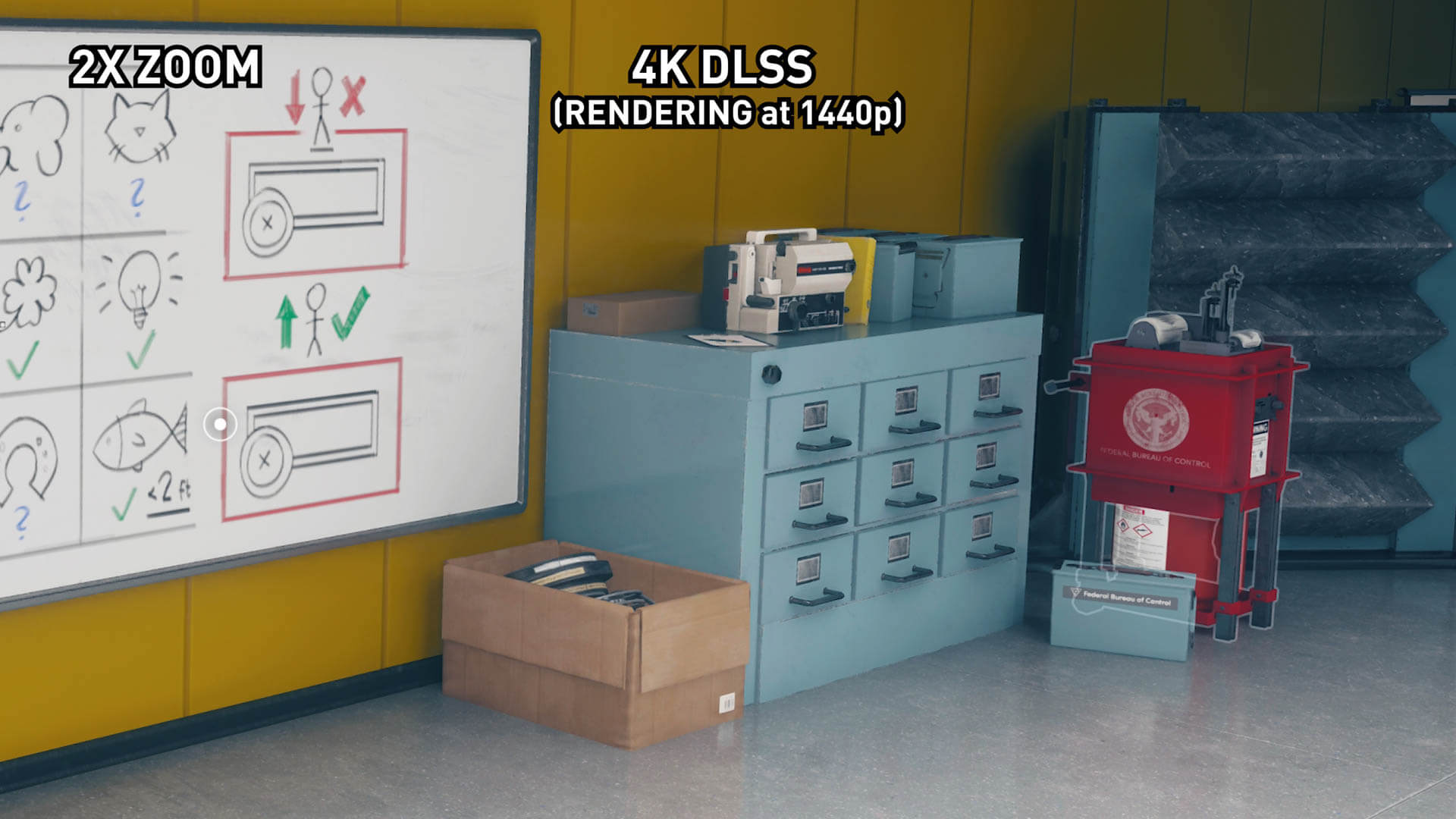

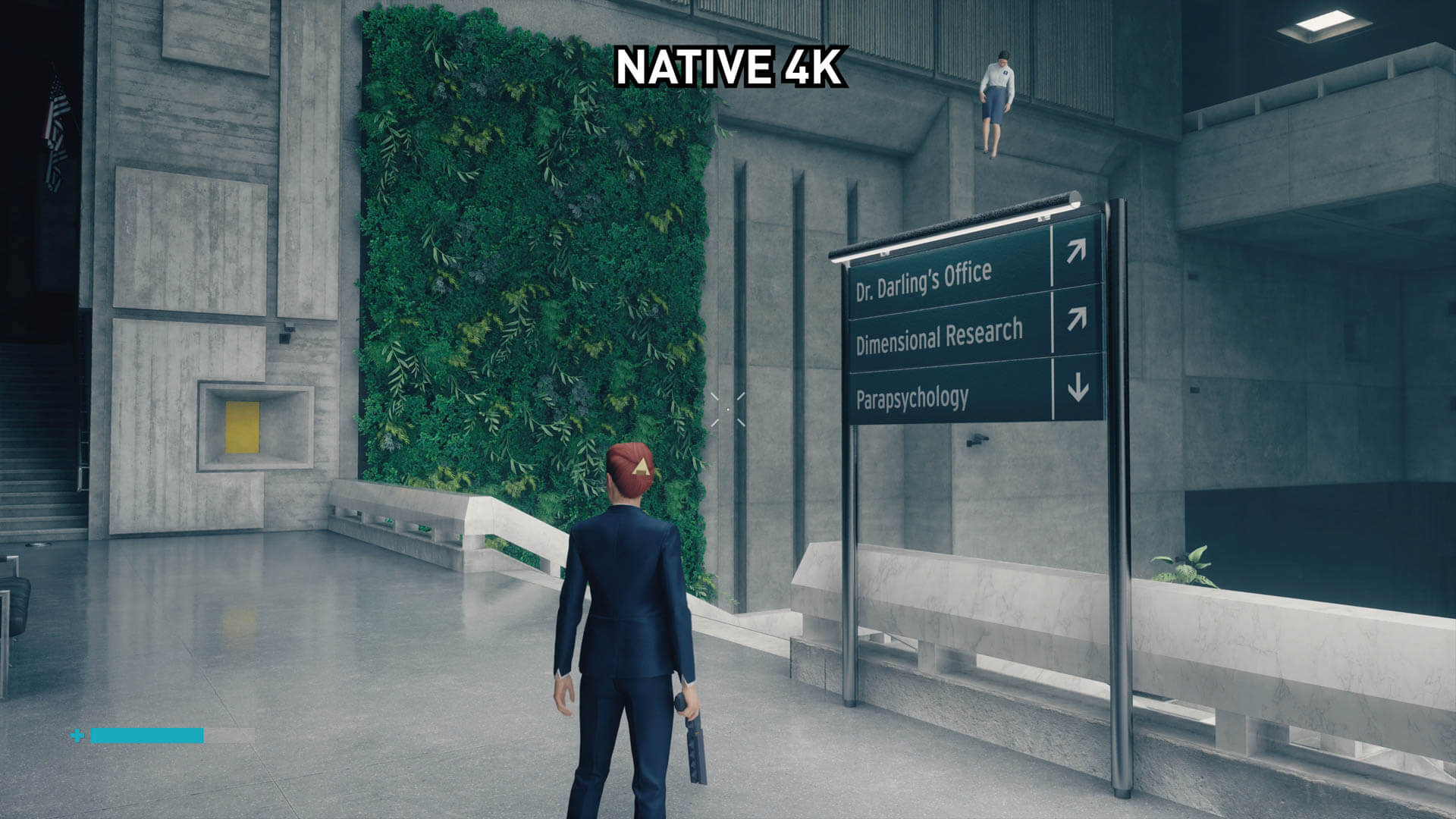

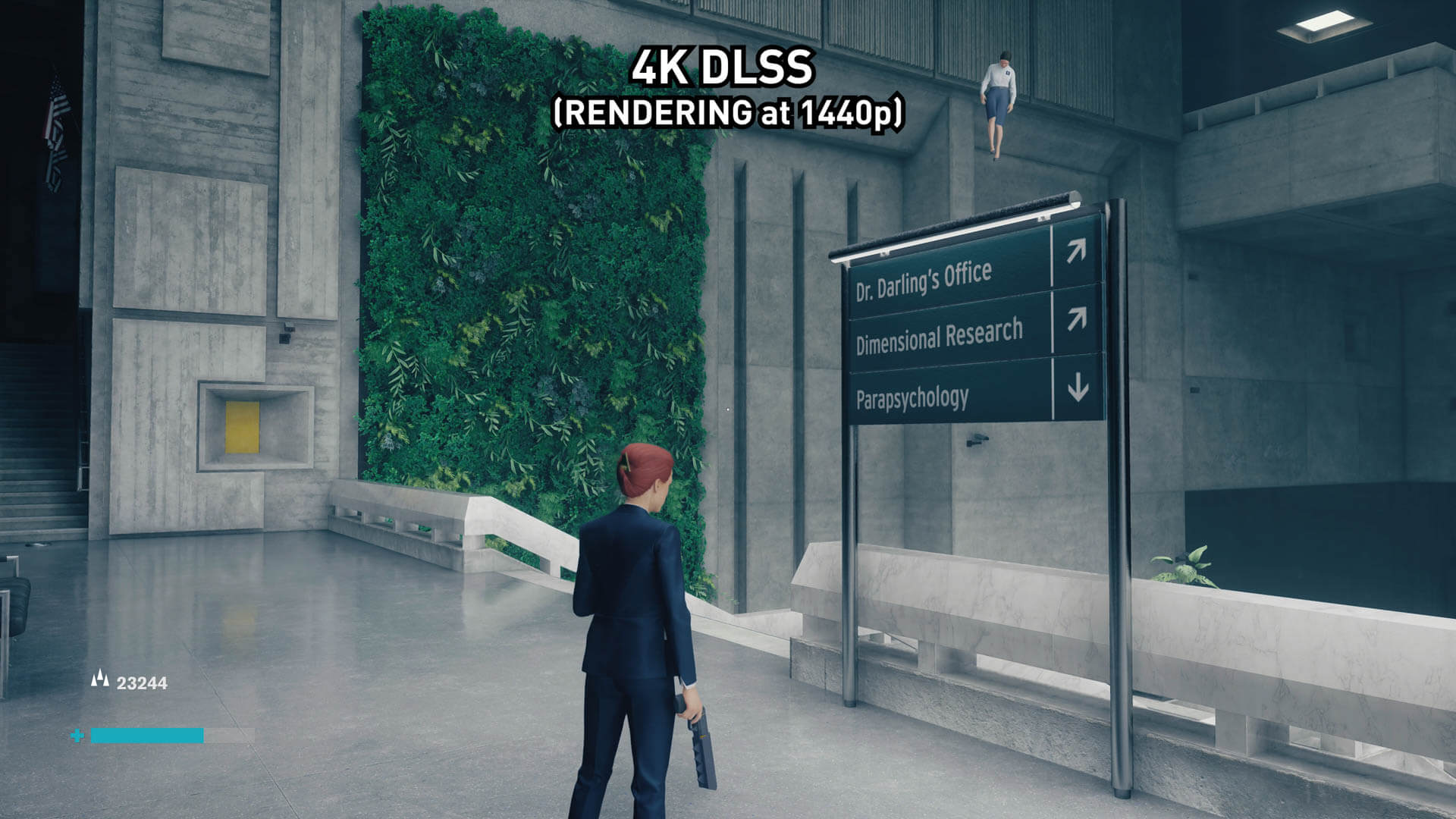

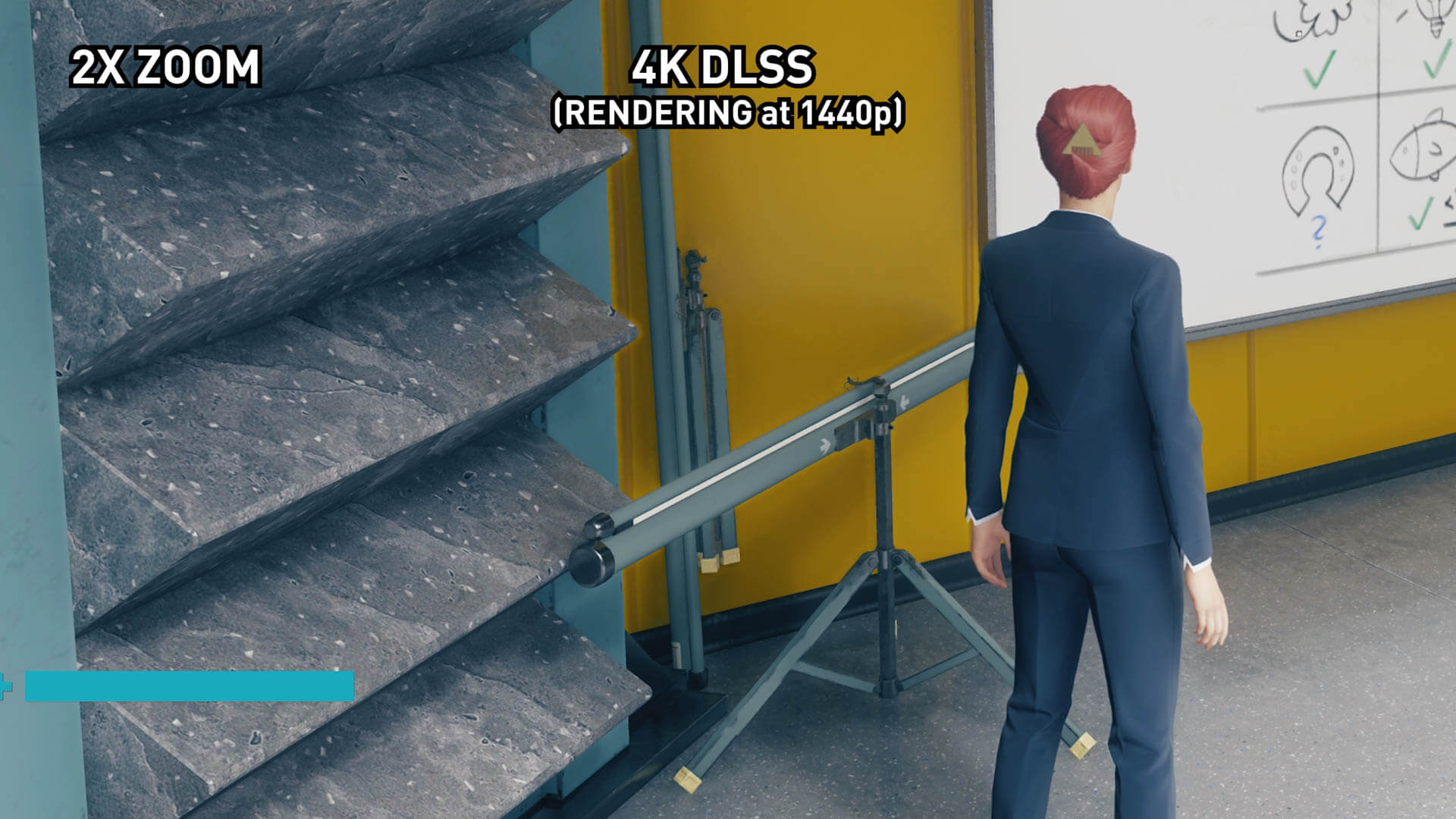

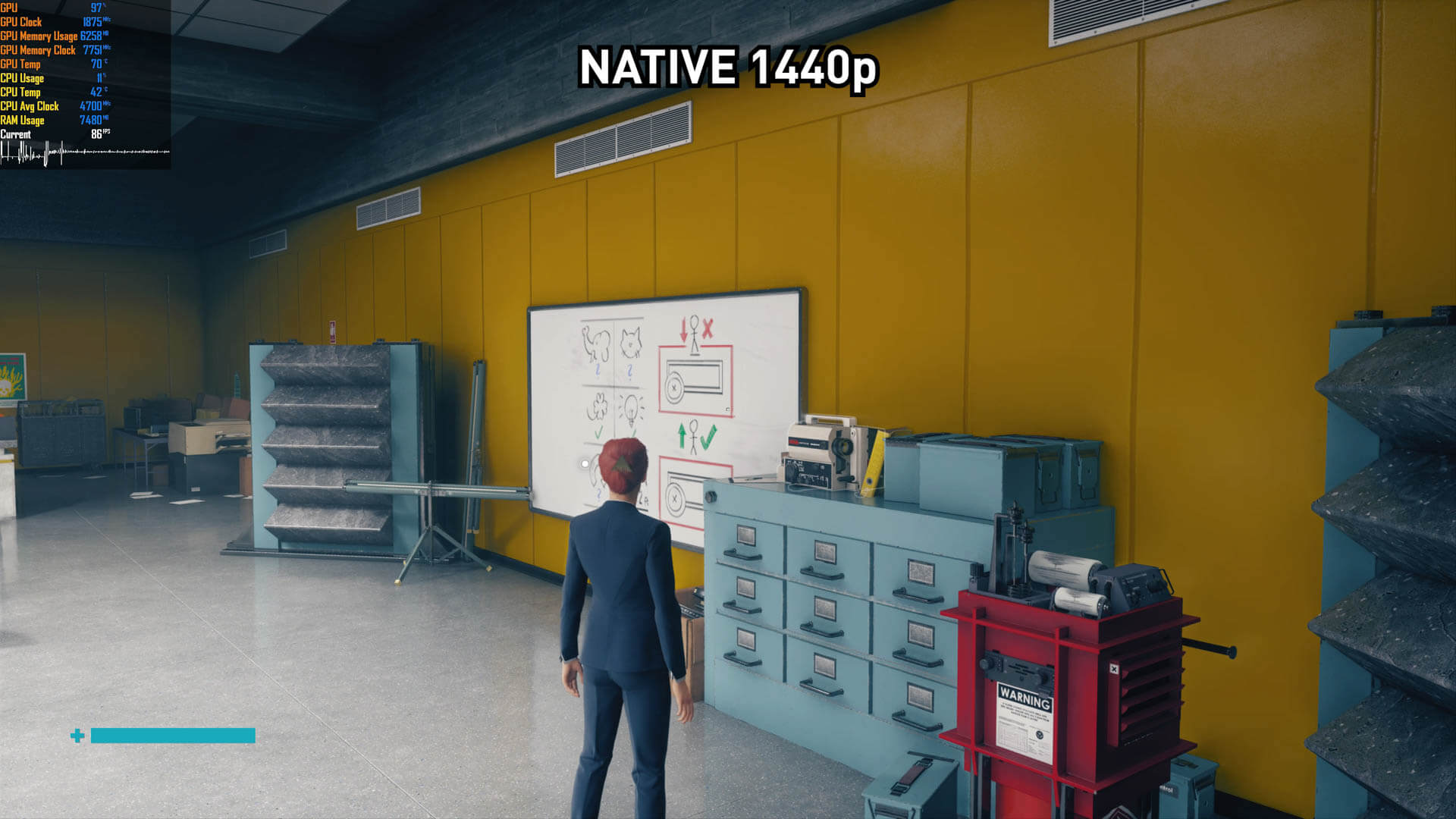

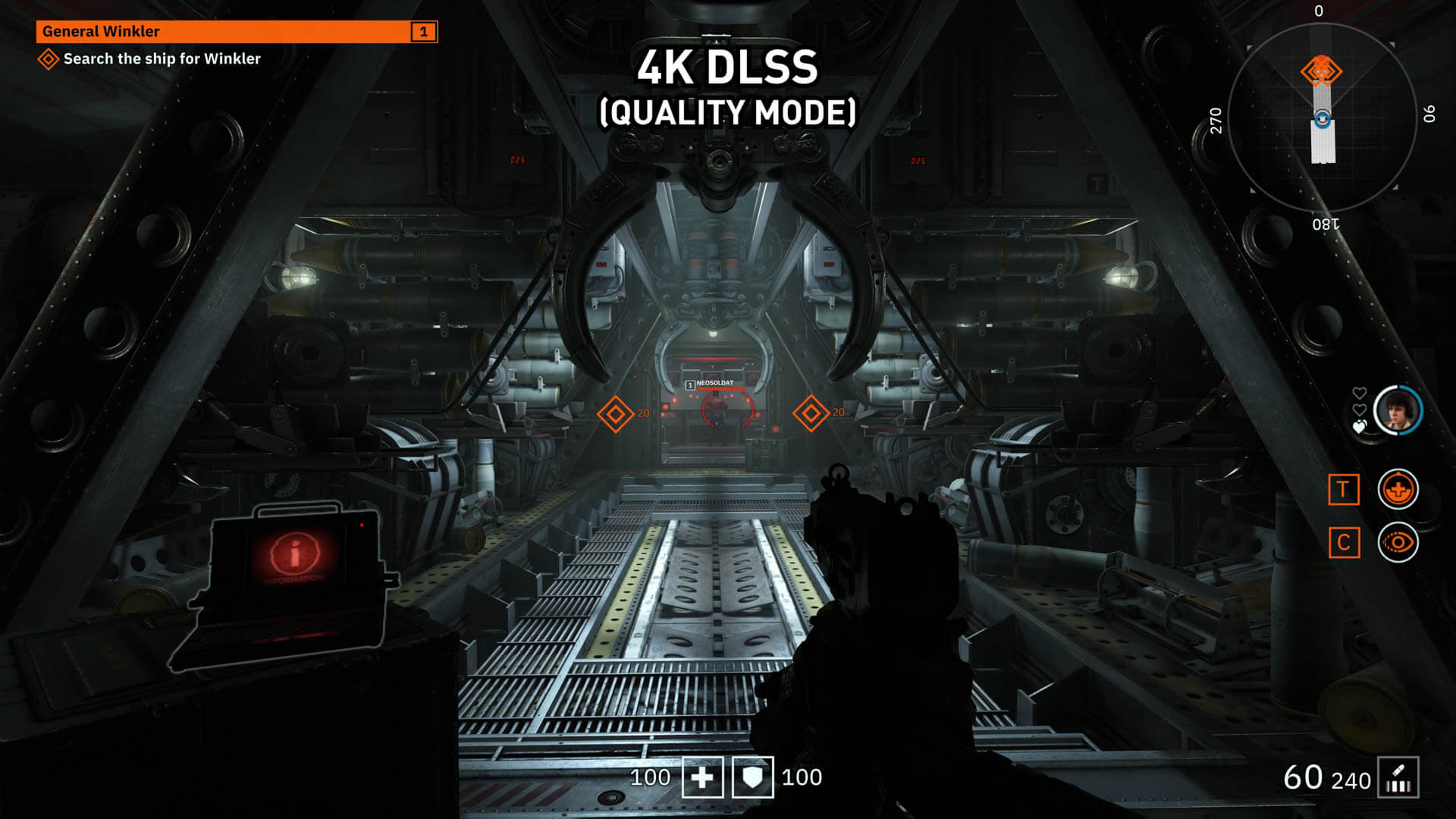

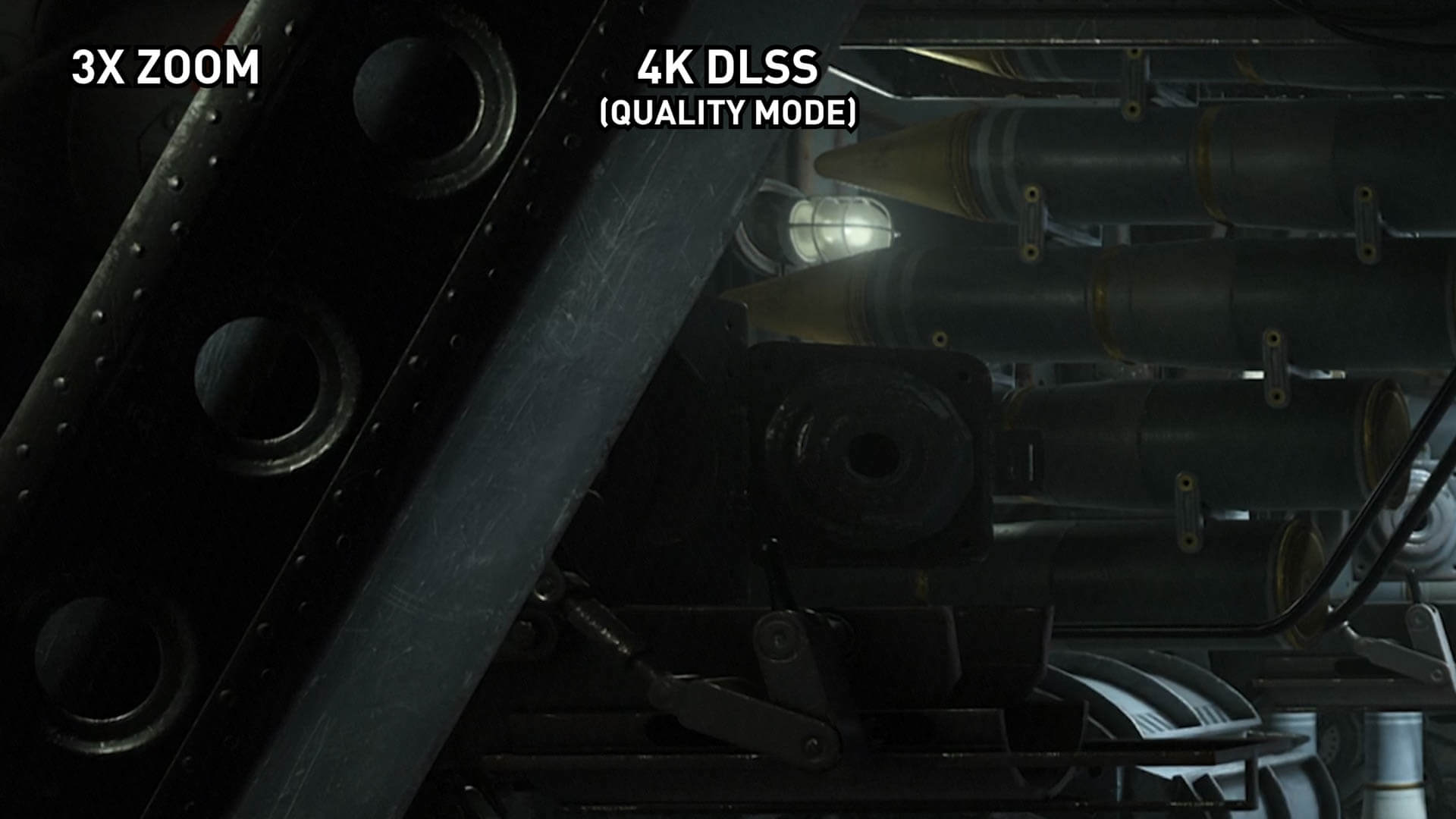

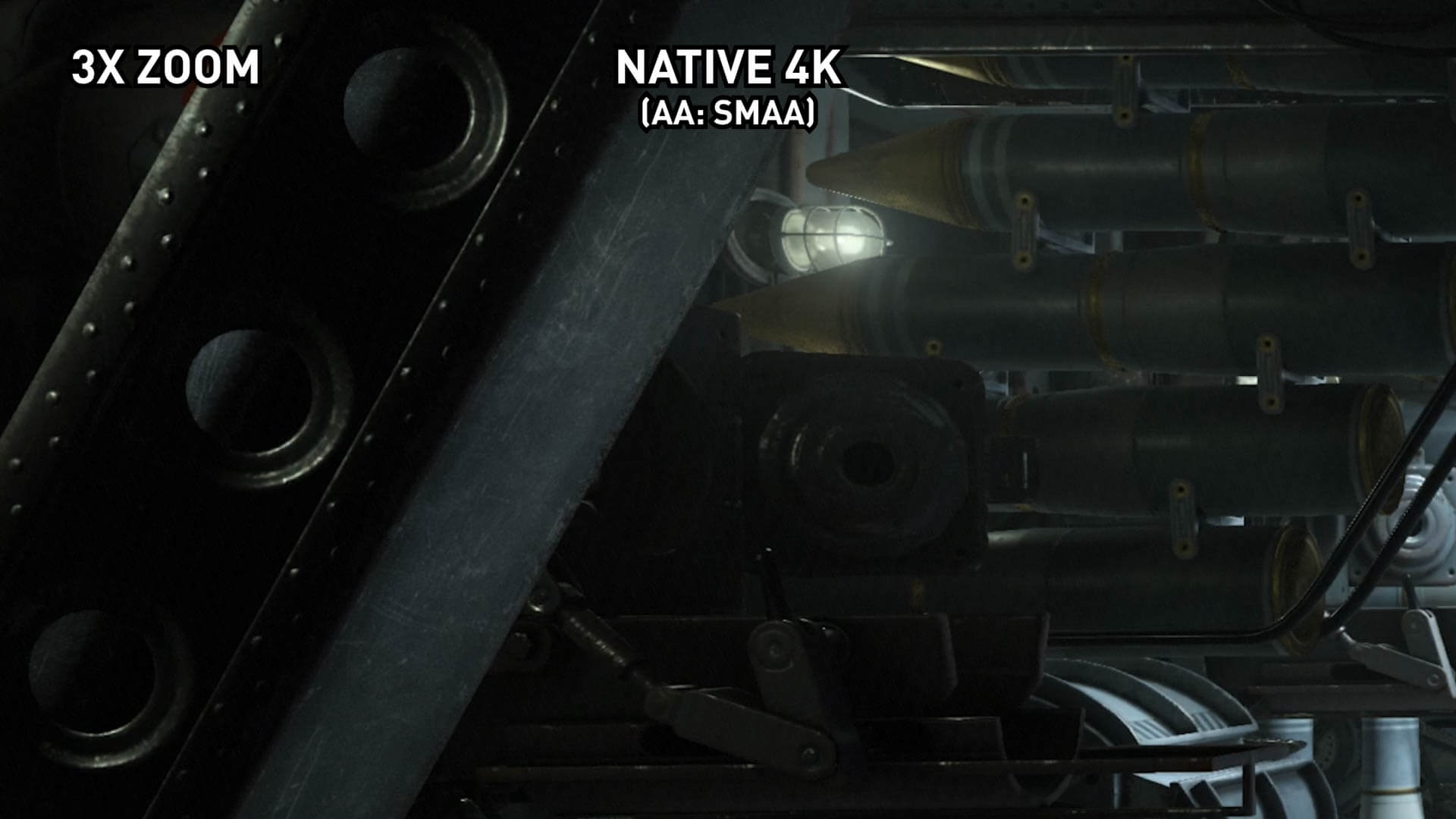

In fact, DLSS 2.0 is extremely impressive, far exceeding our expectations for this sort of upscaling technology. When targeting a native 4K resolution, DLSS 2.0 delivers image quality equivalent to the native presentation. Despite DLSS rendering at an actual resolution below 4K, the final results are as good as or in some circumstances better than the native 4K image.

We're hesitant to say that the image quality DLSS provides is better than native, because Youngblood's existing anti-aliasing techniques like SMAA T1X and TSSAA T8X aren't great and produce a bit of blur across what should be a very sharp native 4K image. When comparing DLSS directly to, say, TSSAA T8X, the DLSS image is sharper. We should note that the results we're showing here are with the game's built-in sharpening setting turned off.

However, when putting DLSS up against SMAA without a temporal component, so just regular SMAA, the level of clarity and sharpness DLSS provides is quite similar to the SMAA image. Again, there are advantages here - SMAA does have a fair few remaining jagged edges and some shimmering, which is generally cleaned up with DLSS - but when comparing detail levels we'd say both native 4K and DLSS are similar. We suspect with a really good post-process anti-aliasing like we've seen in some other games (e.g. Shadow of the Tomb Raider), we'd see DLSS and native 4K looking almost identical.

And while it may not always be superior to native 4K, being at worst equivalent to native 4K is a huge step forward for DLSS. As we discussed extensively in previous features, older DLSS implementations were only good enough to produce an 1800p-like image, often with weird artifacts like thin wires and tree branches getting 'thickened', along with a general oil painting effect that we didn't like. None of those issues are present here; this just straight up looks like a native image.

We should stress that native 4K and DLSS 4K don't look identical. This isn't a black box algorithm that can magically pull true native 4K out of a hat. 4K DLSS does look slightly different to native 4K: some areas may have a small increase in detail, others a small decrease. But it's no longer a situation where the DLSS image is noticeably worse. To our eyes the two images are equivalent, with neither being clearly better than the other in all situations.

There are some areas where you may notice DLSS actually improves the image quality, such as with some fine patterned areas and other elements with thin lines. This is because Nvidia trains the AI using super sampled images with the clearest possible forms of these details. On the other hand, there are still some areas where the algorithm struggles, one being with how DLSS handles the fire elements towards the end of the game's built-in benchmark tool. But these are minor issues and a far cry from the problems with DLSS 1.0.

As mentioned earlier, there are three quality modes on this latest revision of DLSS, and at 4K the differences are very subtle between them. Quality is slightly sharper than Balanced, which is slightly sharper than Performance. We think Balanced is a great place to be with the 4K image, and realistically all are a negligible amount away from the native image.

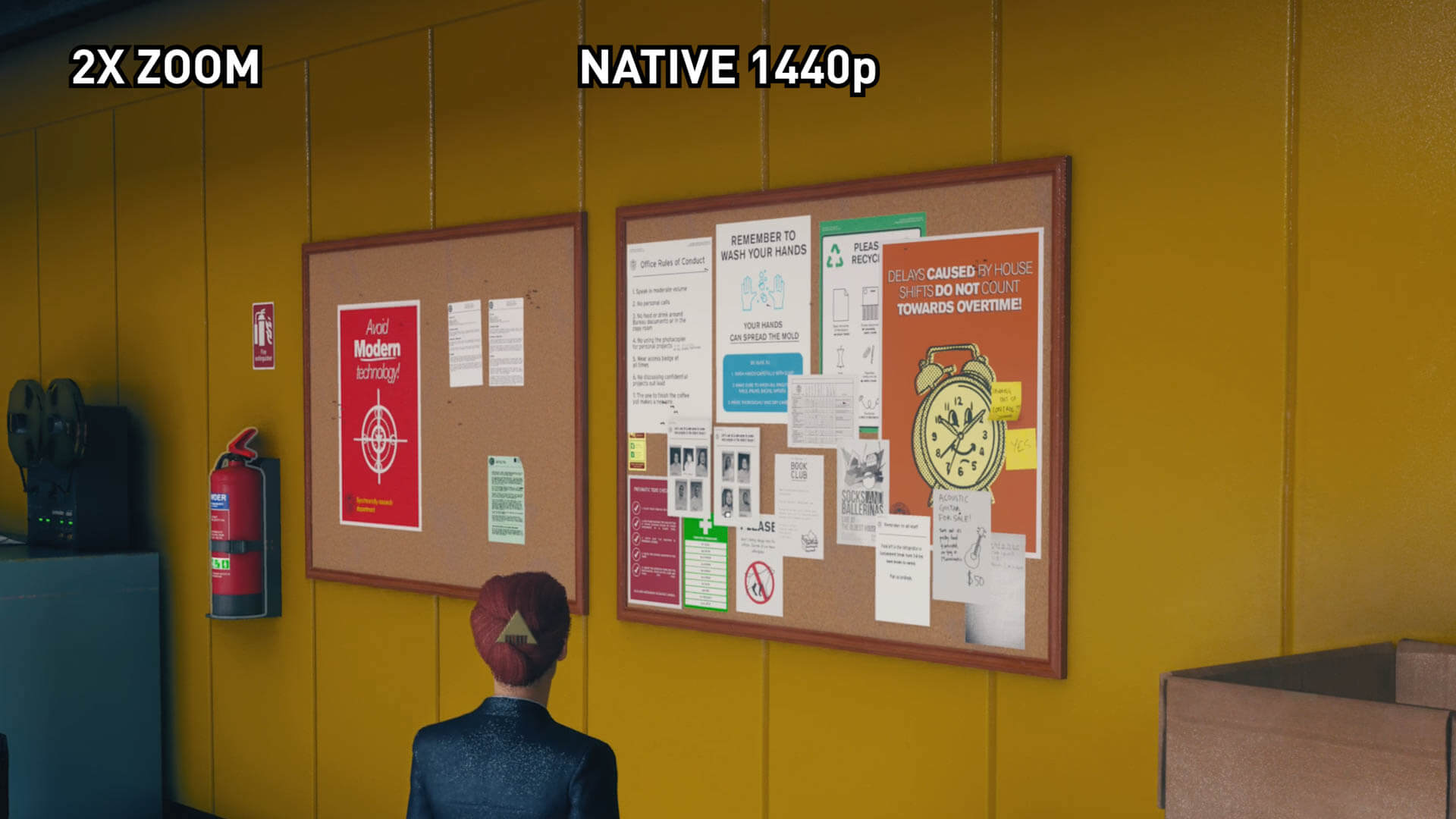

The other really impressive aspect to DLSS 2.0 is that it's also fully functional at lower resolutions.

Take 1440p for example. The Quality DLSS mode, like at 4K, provides essentially a native 1440p image while rendering at a lower resolution. Everything we've just been talking about with 4K also applies here, which is unlike any previous version of DLSS, where quality quickly fell away at lower resolutions. Even with Control's shader implementation this was a significant issue, but it's not with DLSS 2.0.

At 1440p the limitations of the lower quality DLSS modes do become a bit more apparent. While the Performance mode is fine at 4K, we think quality suffers more here and we wouldn't recommend it over either Balanced or Quality, which are both fine. Quality is the mode we would opt for at 1440p, given it delivers the closest presentation to native.

DLSS 2.0 is also effective at 1080p in the same way it is at 1440p and 4K, providing essentially native image quality, especially in the Quality mode. As at 1440p, we don't think the Performance mode is particularly effective, so we'd stick to Balanced or Quality, the latter of which is the most impressive and delivers image quality equivalent to native.

DLSS 2.0 performance

Let's take a look at performance once more using our Core i9-9900K test rig, Uber settings, ray tracing disabled (because it doesn't make much sense in a fast-paced game like this) and TSSAA T8X anti-aliasing when DLSS is disabled.

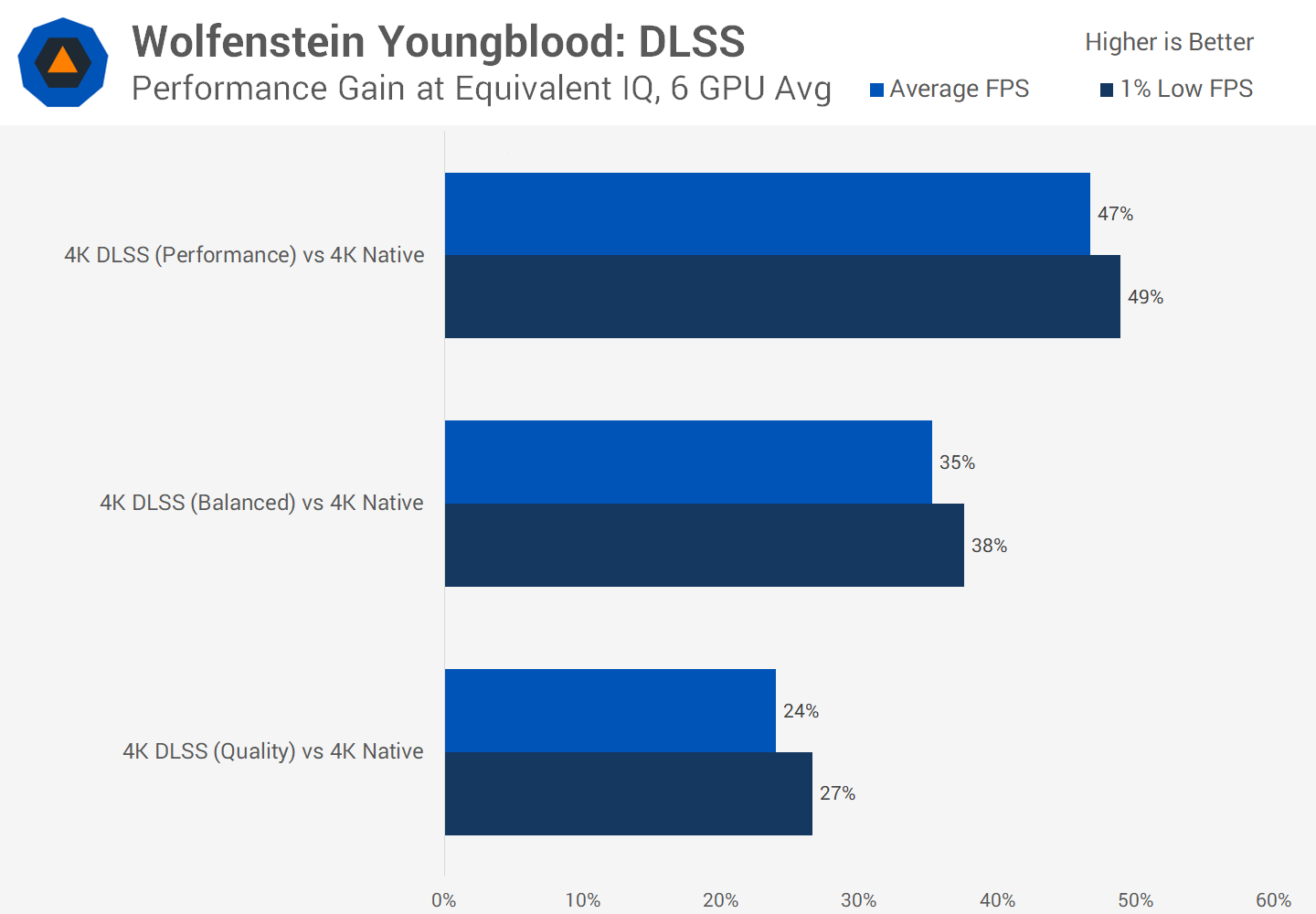

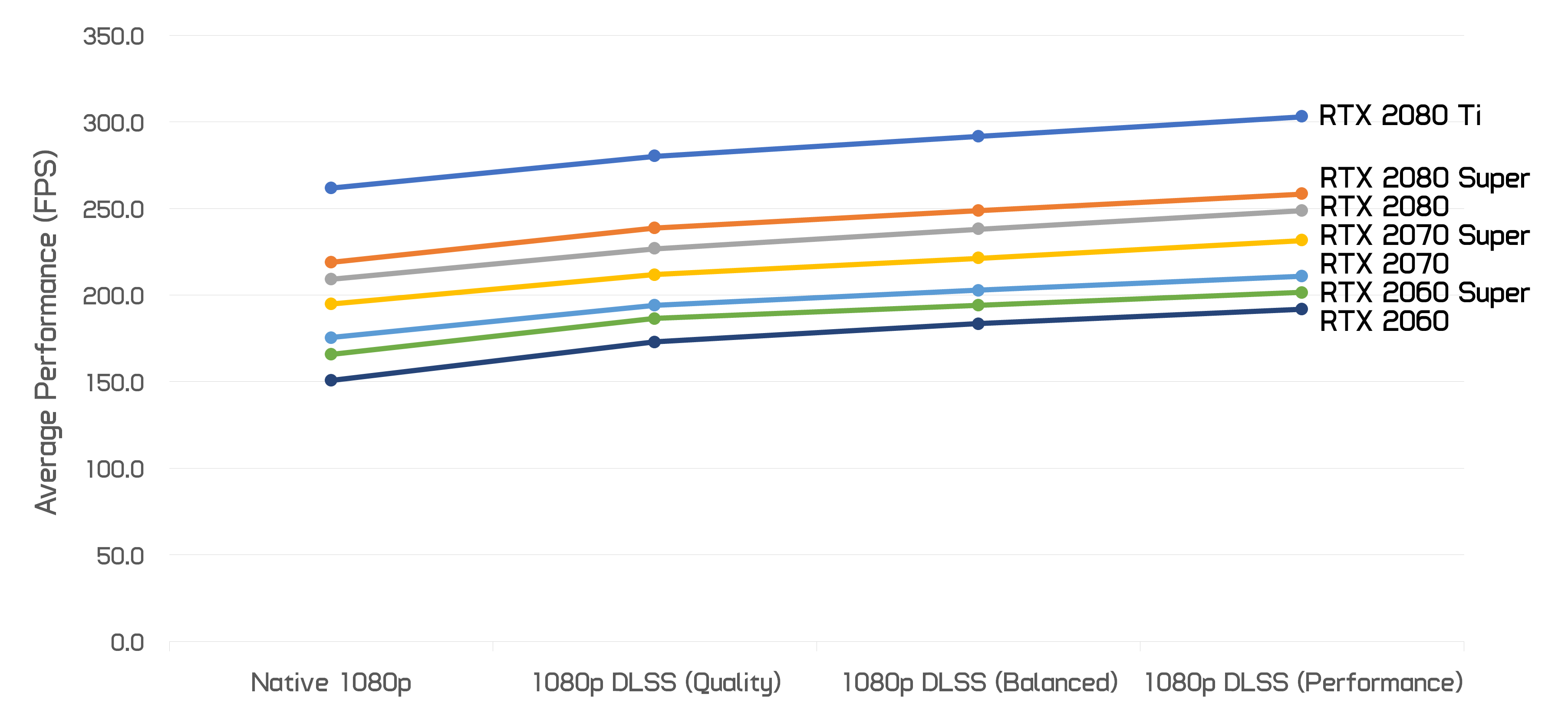

Here we're looking at the average performance gain we saw when playing with a 4K target resolution across the six RTX GPUs that support 4K gaming, from the RTX 2060 Super to the RTX 2080 Ti. Using the Quality mode, on average we saw a 24% improvement to average FPS over native 4K, and a 27% improvement in 1% lows, with equivalent to native 4K image quality. Using Balanced, the numbers increased to around a 35% improvement, and then with the Performance mode, a 47% improvement. All of these modes we'd say deliver image quality essentially identical to native 4K, if not better.

DLSS Modes vs. RTX GPU @ 4K

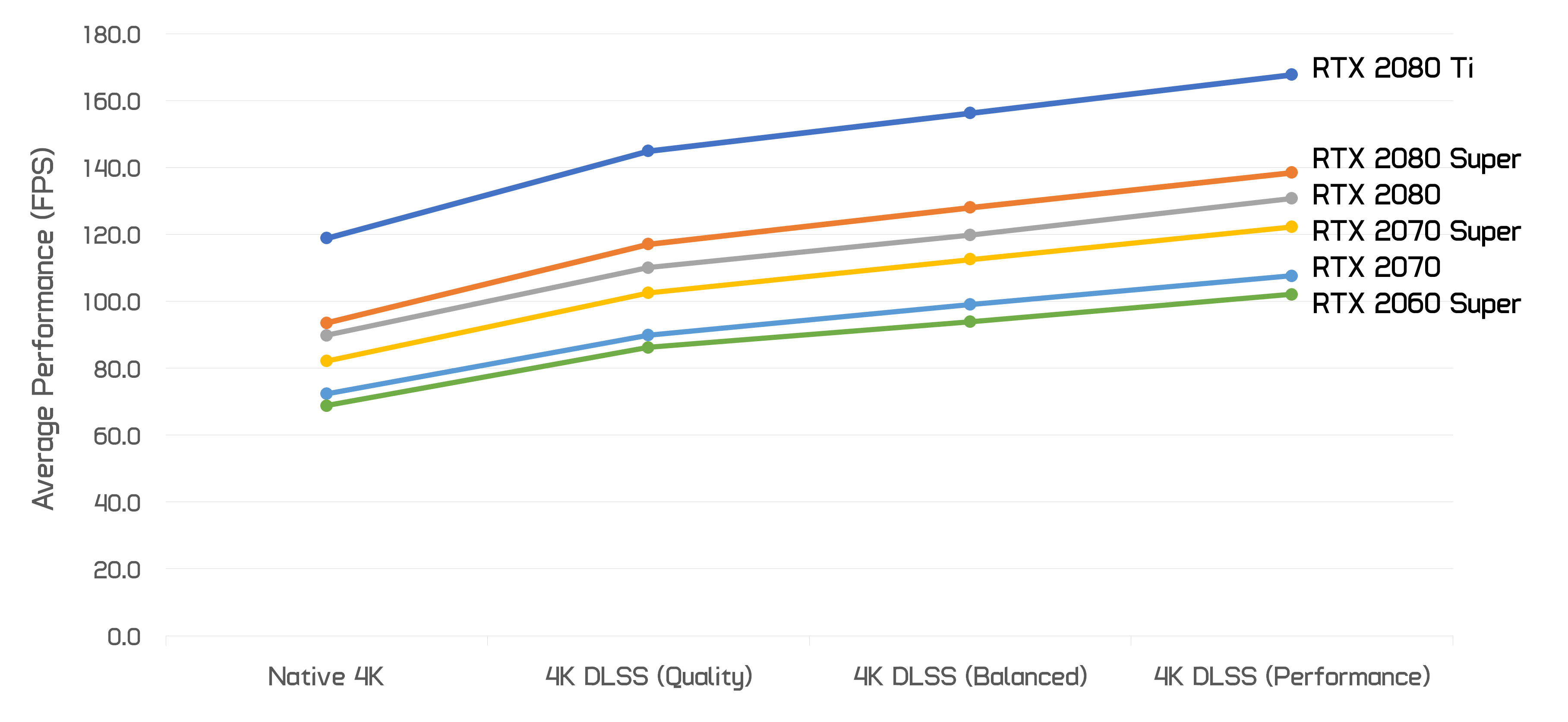

We're using an average across the six GPUs because the performance gains are very consistent regardless of which RTX GPU you have. This chart shows the actual results from all six GPUs, and you'll see the lines match up. There is a slight tendency for lower performance GPUs to gain more from DLSS (we saw up to a 25% gain for the RTX 2060 Super compared to 22% for the RTX 2080 Ti using the Quality mode), but for the most part the gains are equivalent.

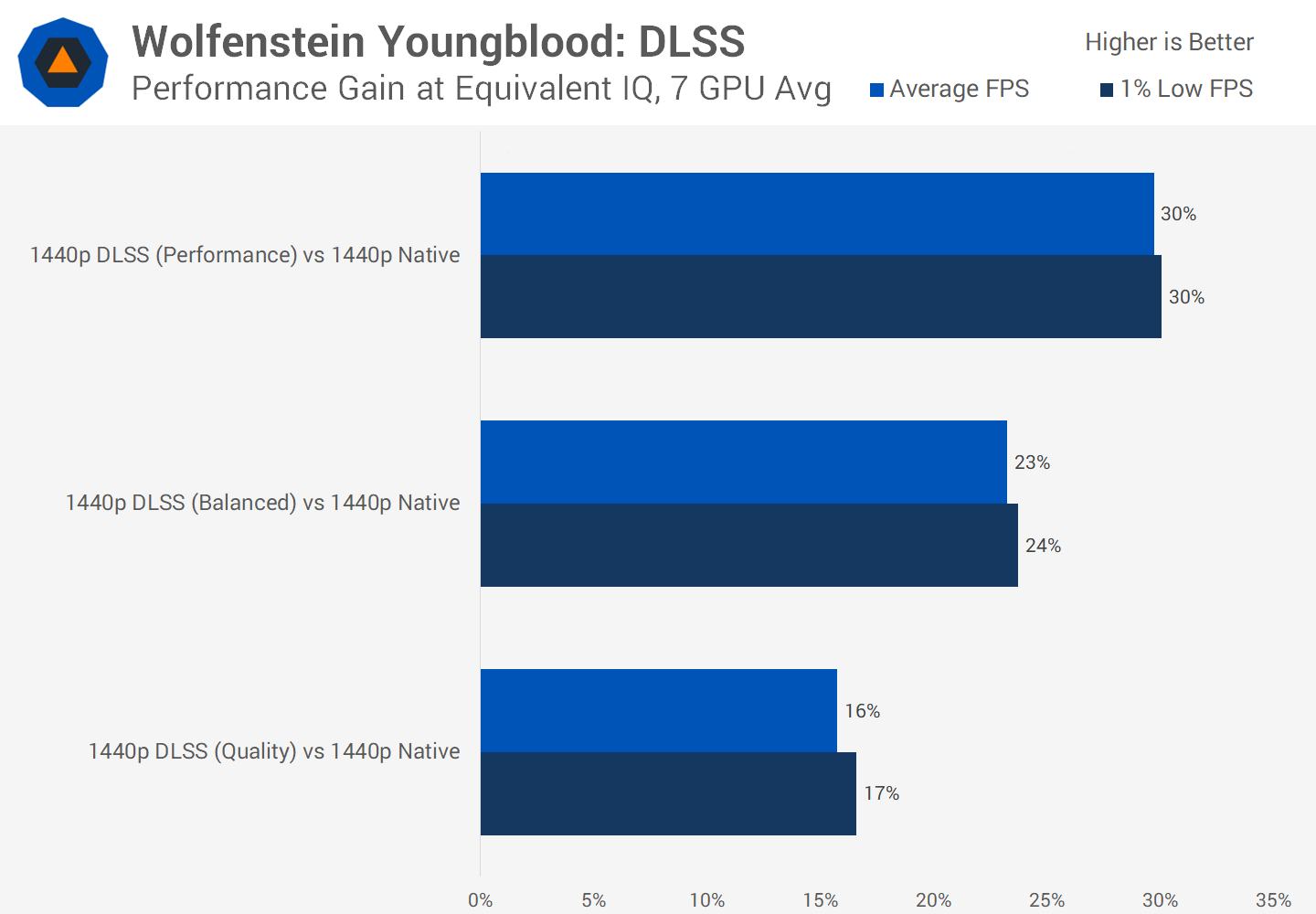

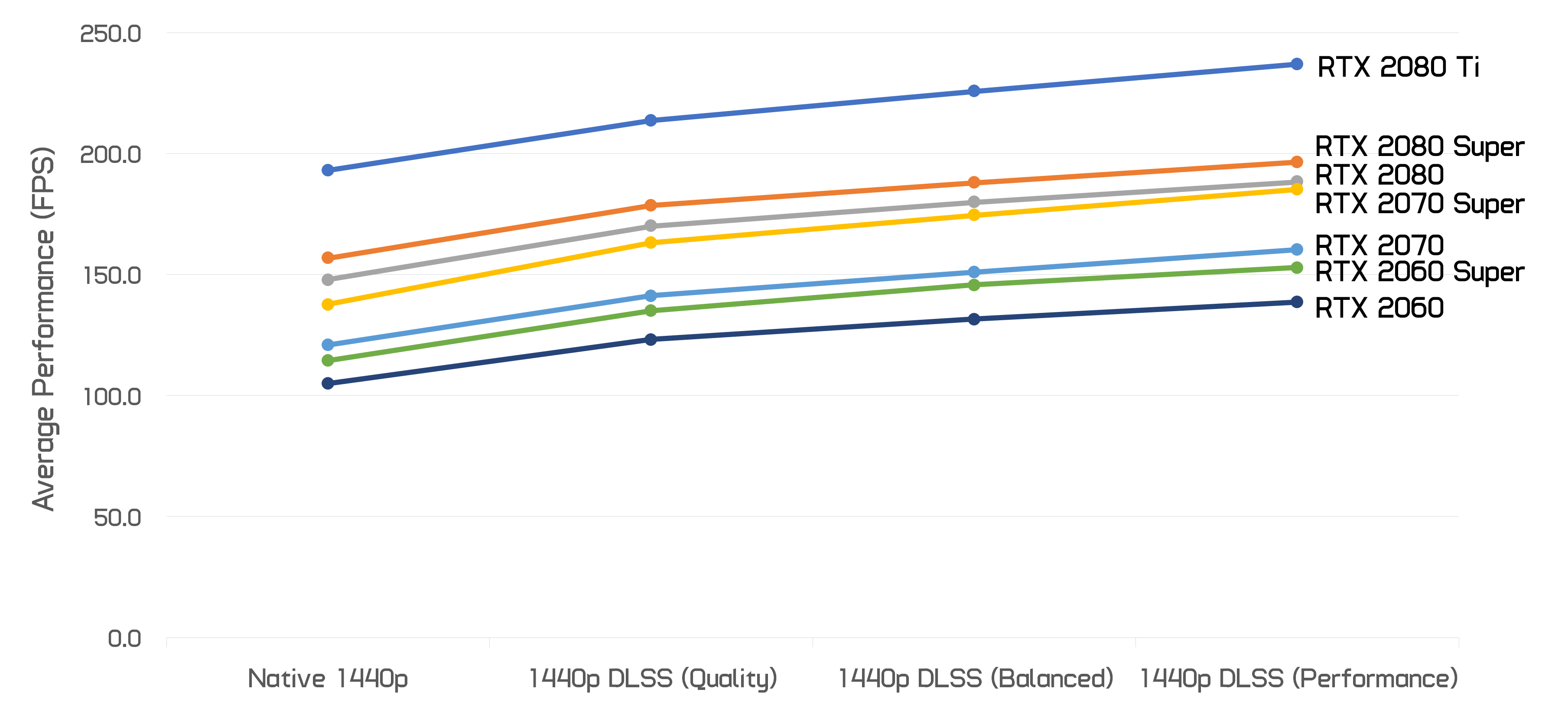

What about at 1440p? Clearly we aren't getting as good performance gains here. The Quality mode provided a 16% gain on average, and the Balanced mode a 23% gain. The Performance mode did deliver a 30% gain, below what Balanced achieves at 4K, however we don't feel the Performance mode delivers image quality equivalent to native, so we're not getting a pure 30% performance gain as image quality does decrease somewhat.

DLSS Modes vs. RTX GPU @ 1440p

There is a slight tendency for lower performance GPUs to gain more from DLSS at 1440p. We saw up to a 26% gain using the Balanced mode with an RTX 2060, compared to just 17% with the RTX 2080 Ti.

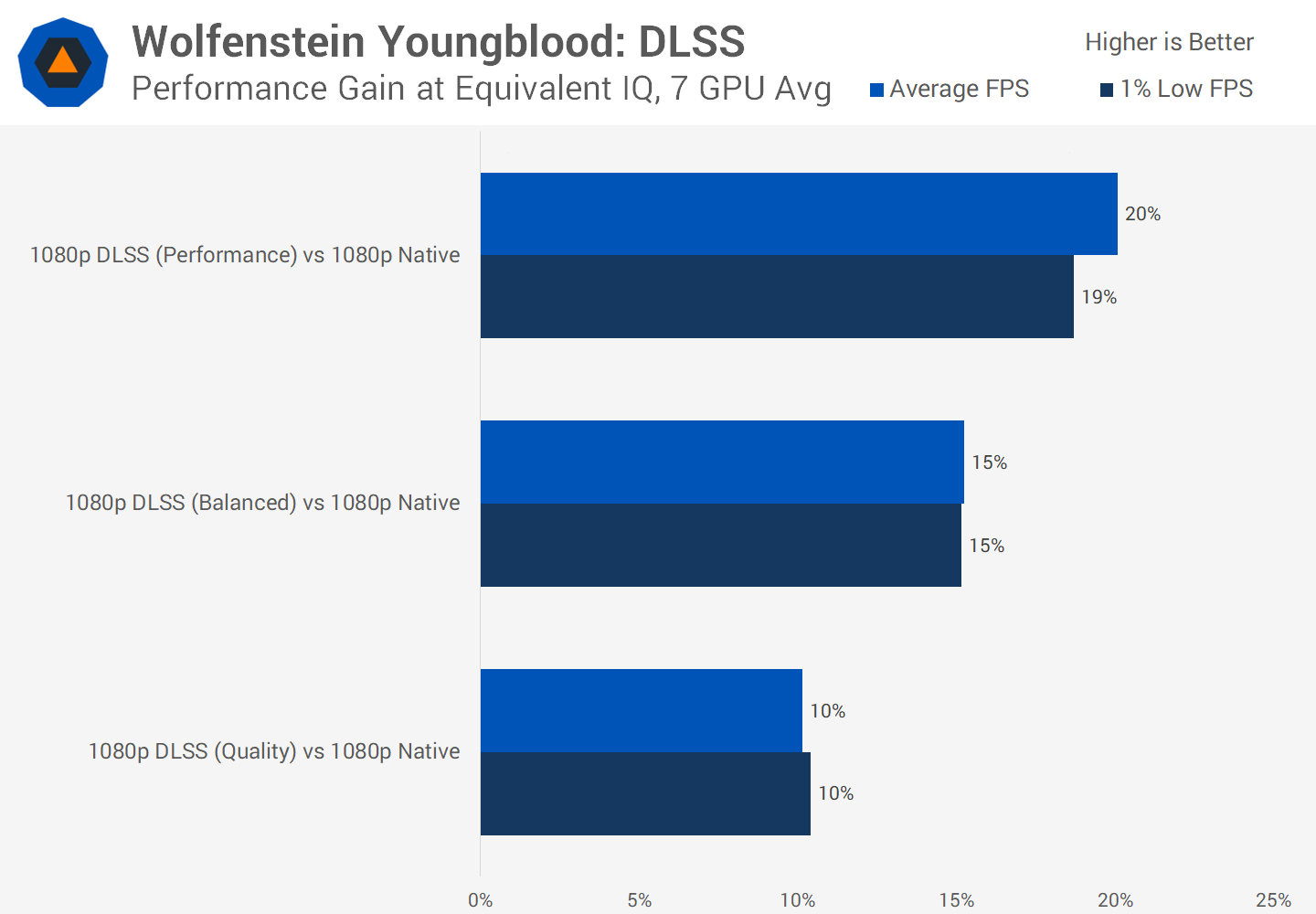

At 1080p, gains drop further again, to just 10% on average across seven GPUs using the Quality mode, with the same tendency for lower power GPUs to see higher gains. One possible explanation is that frame rates become so high that DLSS struggles to scale well.

DLSS Modes vs. RTX GPU @ 1080p

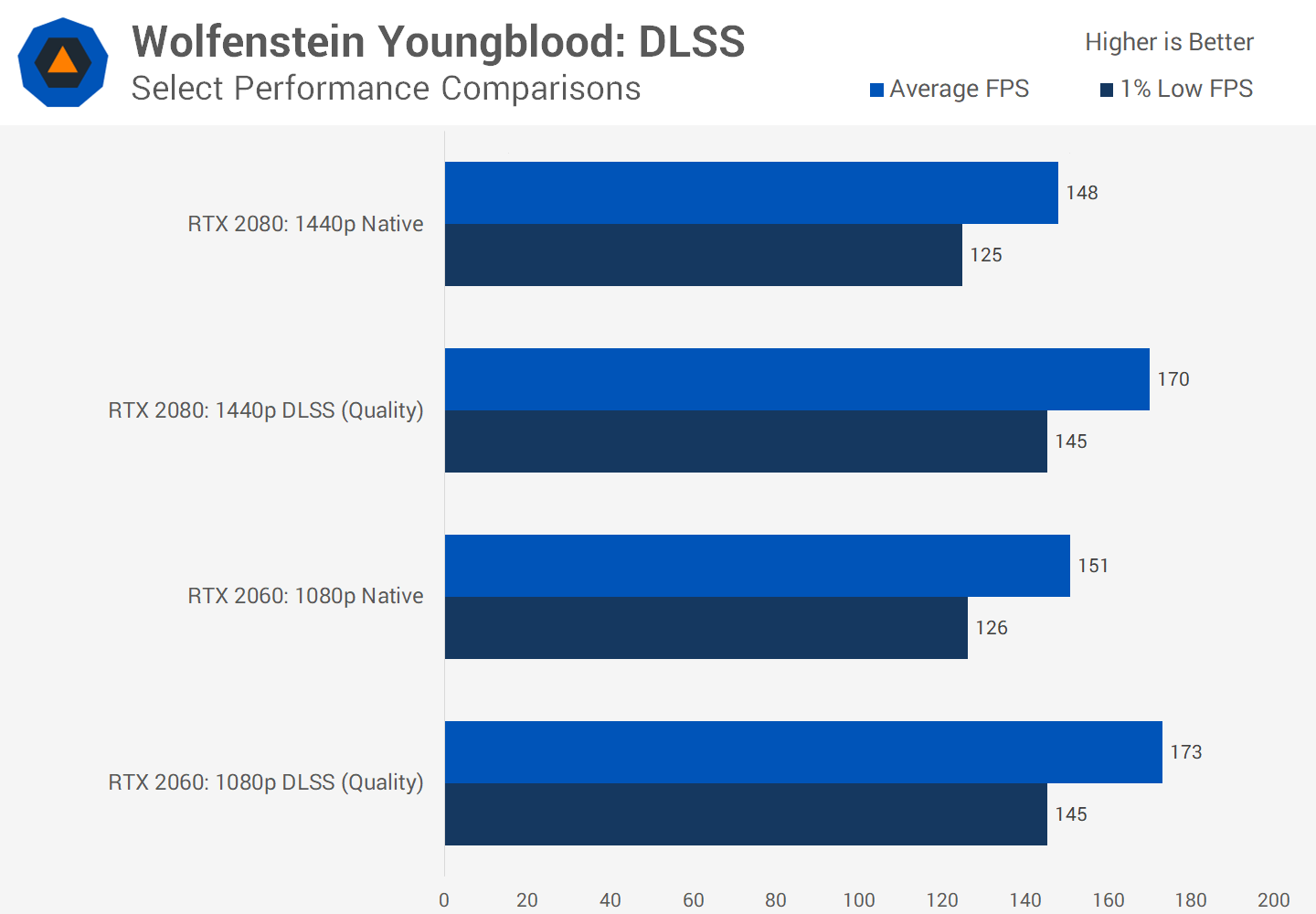

For example, the RTX 2060 at native 1080p hit 150 FPS and saw around a 15% improvement using the DLSS Quality mode. This is similar to what we saw with the RTX 2080 at native 1440p: again around 150 FPS, and again around a 15% improvement using the Quality mode. This does make us think that the lower your baseline performance is, the more you can benefit from DLSS, but we'll have to test more games in the future to confirm that finding.
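That hypothesis can be illustrated with a toy model, which is only a sketch: assume DLSS cuts the native frame time to some fraction (reflecting the lower render resolution) but adds a fixed per-frame cost. Both constants below are hypothetical. Because the fixed cost doesn't shrink as frames get faster, it takes a bigger share of the frame budget at high frame rates.

```python
def dlss_gain_pct(native_fps: float, render_fraction: float = 0.6, fixed_ms: float = 1.0) -> float:
    """Percent FPS gain under a toy model: DLSS frame time = native_ms * render_fraction + fixed_ms.

    render_fraction and fixed_ms are hypothetical constants, not measured values.
    """
    native_ms = 1000.0 / native_fps
    dlss_ms = native_ms * render_fraction + fixed_ms
    return (native_ms / dlss_ms - 1) * 100

for baseline in (40, 80, 150):
    print(f"{baseline:>3} FPS native -> +{dlss_gain_pct(baseline):.0f}% with DLSS")
```

The model's absolute numbers mean nothing; the point is the trend. Gains shrink as baseline FPS climbs, which is consistent with, though not proof of, what we measured.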

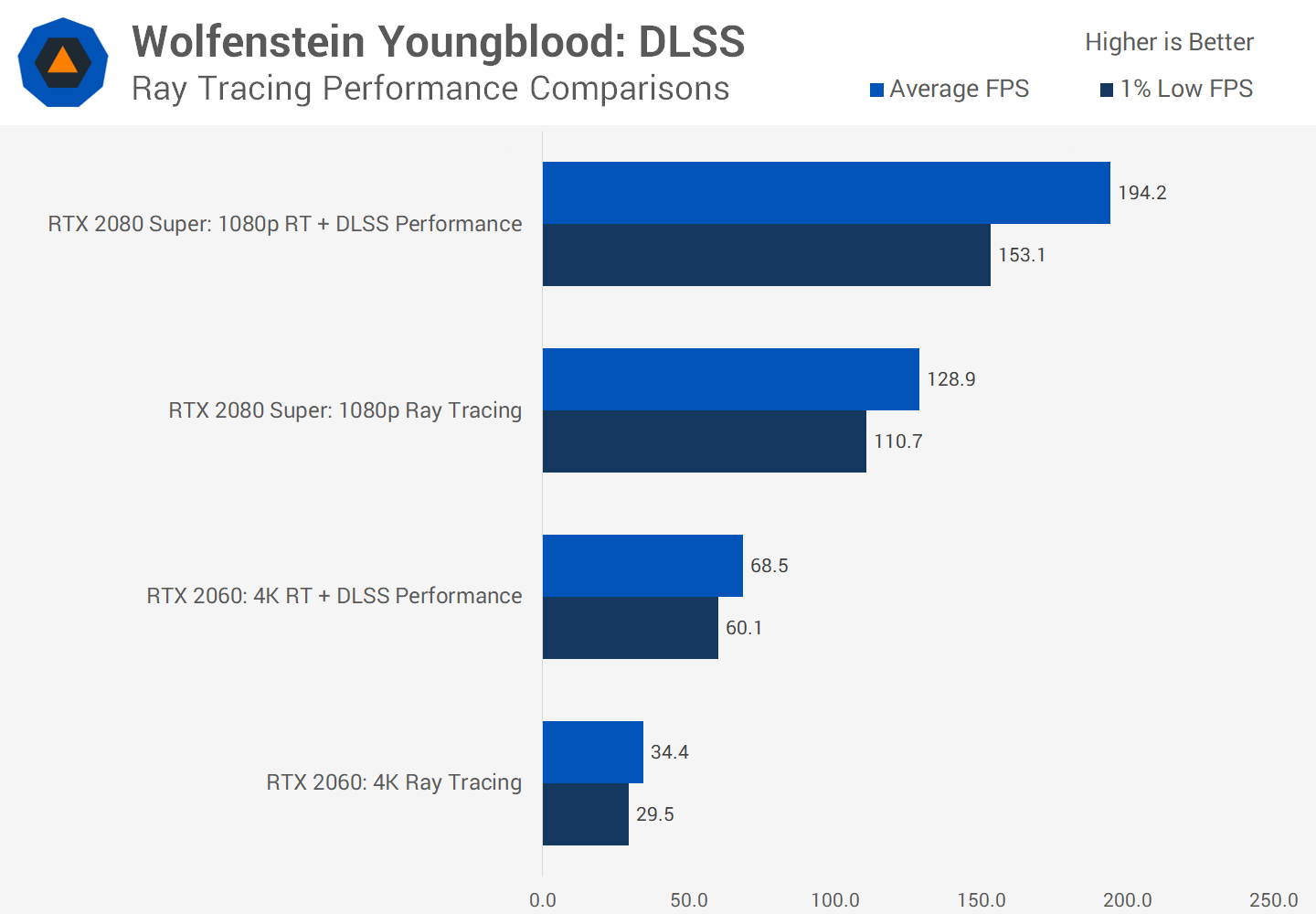

On the other hand, we saw larger gains when ray tracing was enabled. We didn't do extensive testing with this feature, but with the RTX 2060 we saw up to a 2X improvement at 4K using the Performance mode.

Regardless of the configuration, though, we were able to achieve performance gains, with DLSS effectively giving you a free performance boost at the same level of image quality. That's very impressive and will be especially useful in situations where you want more performance at high resolutions, or when you want to really crank up the visual effects, like ray tracing.

DLSS ecosystem, closing thoughts

There are lots of genuine positives to take away from how DLSS performs in its latest iteration. After analyzing DLSS in Youngblood, there's no doubt that the technology works. The first version of DLSS was unimpressive, but it's almost the opposite with DLSS 2.0: the upscaling power of this new AI-driven algorithm is remarkable and gives Nvidia a real weapon for improving performance with virtually no impact to visuals.

DLSS now works with all RTX GPUs, at all resolutions and quality settings, and delivers effectively native image quality when upscaling, while actually rendering at a lower resolution. It's mind blowing. It's also exactly what Nvidia promised at launch. We're just glad we're finally getting to see that now.

Provided we get the same excellent image quality in future DLSS titles, the situation could be that Nvidia is able to provide an additional 30 to 40 percent extra performance when leveraging those tensor cores. We'd have no problem recommending gamers to use DLSS 2.0 in all enabled titles because with this version it's basically a free performance button.

The visual quality is impressive enough that we'd have to start benchmarking games with DLSS enabled, provided the image quality we're seeing today holds up in other DLSS games, similar to how we have benchmarked some games with different DirectX modes based on which API performs better on AMD or Nvidia GPUs. It's also apparent from the Youngblood results that the tensor core AI network version is superior to the shader core version in Control. In a perfect world, we would get the shader version enabled for non-RTX GeForce GPUs, but Nvidia told us that's not in their plans and the shader version hasn't worked well in other games.

Clearly, a year and a half after DLSS launched, even Nvidia would admit this hasn't gone to plan. This is almost an identical situation to Nvidia's RTX ray tracing. The feature has been heavily advertised as a 'must have' for PC gamers, but the first few games to support the tech didn't impress, and it's taken nearly a year to get half-decent game implementations that as of today can be counted on one hand.

Just like with ray tracing, it's nice to eventually get DLSS support in games, but adding it weeks or months after a game's launch is almost worthless. We can't imagine too many people going back to play Youngblood months after release specifically for DLSS, especially given its mediocre reviews.

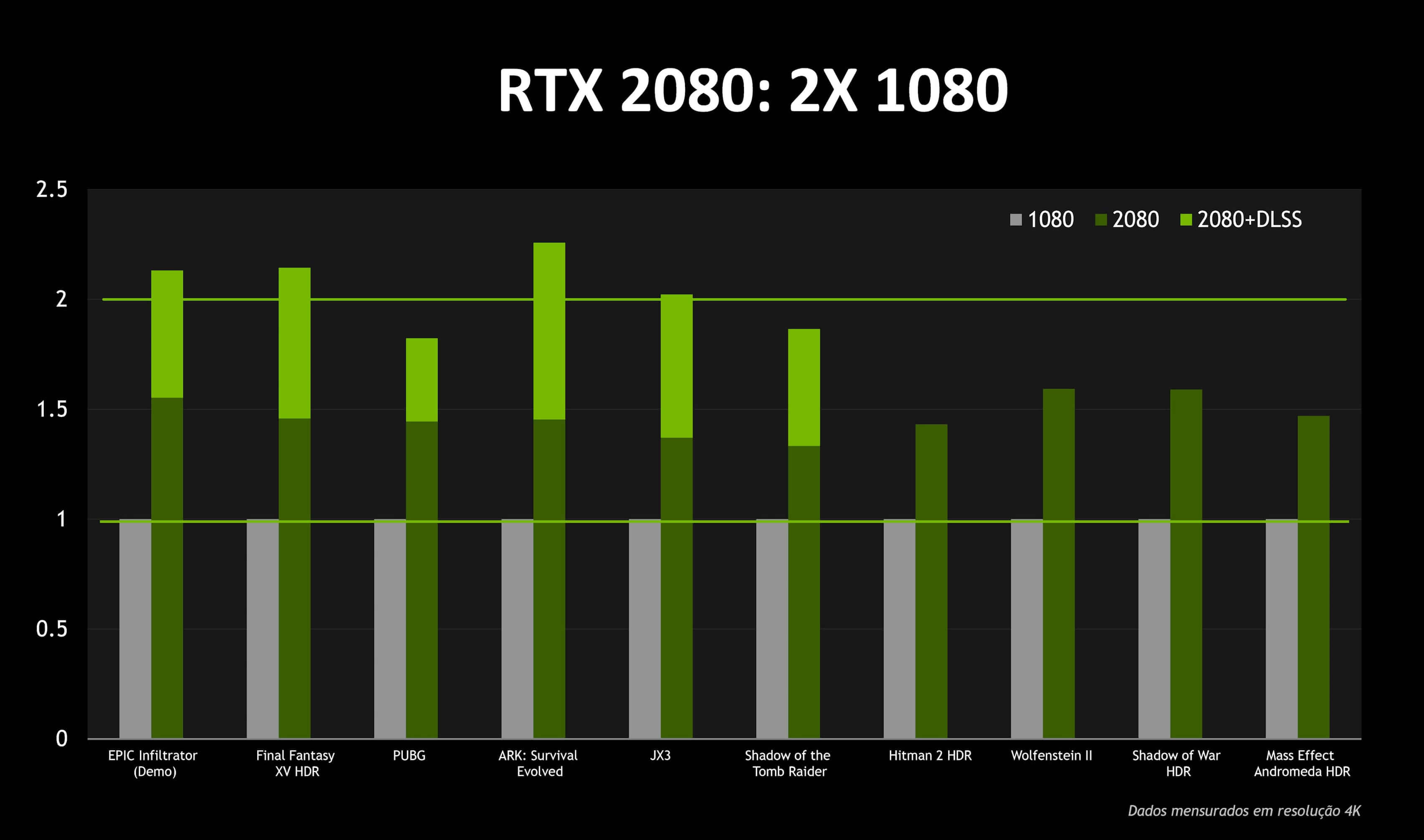

We have no doubt that DLSS will become a fantastic inclusion in games going forward, but we've got to say that, looking back, Nvidia went too far in promising what it was unable to deliver. It was common to see promotional slides like the one above, showing off the magical free performance DLSS would provide.

That huge slate of DLSS games looks almost laughable in 2020, with most of these titles never getting DLSS. Some were major releases that Nvidia advertised would support DLSS, but that support never came to fruition. We asked Nvidia about this and their response was that initial DLSS implementations were "more difficult than we expected, and the quality was not where we wanted it to be", so they decided to focus on improving DLSS instead of adding it to more games.

Nvidia also told us that older titles supporting DLSS will require game-side updates to get 2.0-level benefits due to the new SDK, and that's in the hands of developers, but we doubt those updates will happen. Battlefield V and Metro Exodus appear to have the same DLSS implementations as when we first tested them, flaws and all.

Some readers criticized our original features, claiming that we didn't understand DLSS because the magic of AI would see these titles improve with more deep learning and training. Well, a year later and it hasn't improved in these games at all.

On the positive side, Nvidia claims DLSS is much easier to integrate now, and getting DLSS in games on launch day with quality equivalent to Youngblood's implementation should be very achievable.

DLSS is at a tipping point. The recently released DLSS 2.0 is clearly an excellent technology and a superb revision that fixes many of its initial issues. It will be a genuine selling point for RTX GPUs moving forward, especially if they can get DLSS in a significant number of games. By the time Nvidia's next generation of GPUs comes around, DLSS should be ready for prime time and AMD might need to respond in a big way.