Spider-Man Remastered is an older console game that was remastered for PlayStation 5, and now that it has arrived on PC we're taking a look at GPU performance, because why not. As the name suggests, this is a remastered port of Spider-Man that includes the original game plus the downloadable content, updated textures, and graphical and performance improvements, among other things. This version was initially released for the PlayStation 5 back in late 2020.

It's now been ported to Microsoft Windows as a standalone title for Steam and the Epic Games Store. With that, PC gamers get the best version of the game yet, with unlocked frame rates, FSR 2.0 and DLSS upscaling, ray-traced reflections, improved shadows, and ultra-wide monitor support.

The developers recommend at least a GeForce GTX 1060 6GB or Radeon RX 580 8GB for a 1080p 60 fps gaming experience. For "very high" quality visuals they call for an RTX 3070 or 6800 XT, and then for "amazing ray tracing" the RTX 3070 or 6900 XT. Of course, we'll put those claims to the test and much more, as we've dug out 43 current and previous generation GPUs for this testing.

We've benchmarked Spider-Man Remastered at 1080p, 1440p and 4K using the medium and very high quality presets, as well as a ray tracing configuration using the high quality preset. Because it was impossible to repeat the same pass over and over while swinging through the city, we've instead benchmarked a pass at street level. This was just as demanding as swinging from buildings, so it worked well for testing.

Our GPU test system is powered by the Ryzen 7 5800X3D with 32GB of DDR4-3200 CL14 memory. We used the GeForce Game Ready 516.94 and Adrenalin Edition 22.8.1 drivers, both of which include optimization for this game. So with that, let's get into the results!

Benchmarks

Medium Quality Setting

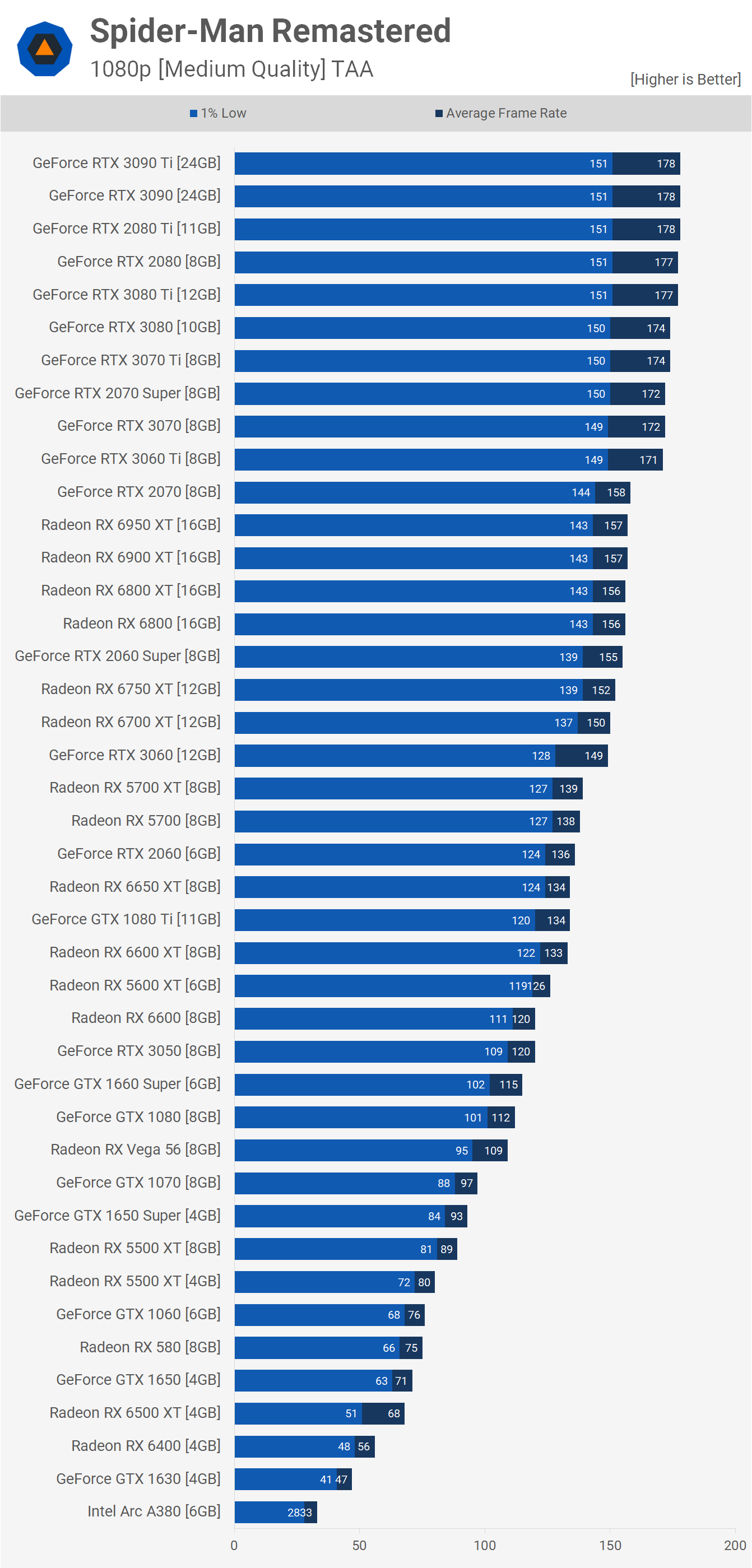

Starting with the medium quality data at 1080p, we'll go from the bottom and work our way up. Unsurprisingly, Intel's not looking to optimize for newly released titles just yet, in fact they'd probably appreciate it if game developers stopped making games for the next 5 years.

Anyway, the Arc A380 can be found at the very bottom with a barely playable 33 fps on average and remember we're using the medium quality preset here at 1080p. The GTX 1630 is also awful with just 47 fps on average as it somehow made the RX 6400 with 56 fps look good. The 6500 XT cracked the 60 fps barrier but 1% lows weren't great, so the true entry point is the GTX 1650 or the good old RX 580 which managed 75 fps.
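For readers unfamiliar with the "1% lows" quoted alongside the averages, here is a minimal sketch of one common way to derive both figures from a frame-time log. The article doesn't describe how its capture tool computes 1% lows, so the method used here (averaging the slowest 1% of frames) and the function name `summarize_frame_times` are assumptions for illustration only.

```python
def summarize_frame_times(frame_times_ms):
    """Return (average_fps, one_percent_low_fps) for frame times given in ms."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")

    # Average fps is the number of frames divided by the total elapsed time.
    total_seconds = sum(frame_times_ms) / 1000.0
    average_fps = len(frame_times_ms) / total_seconds

    # Assumed definition of the 1% low: take the slowest 1% of frames
    # (at least one frame) and convert their mean frame time back to fps.
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)
    worst = slowest_first[:count]
    one_percent_low_fps = 1000.0 / (sum(worst) / len(worst))

    return average_fps, one_percent_low_fps


# Hypothetical capture: 990 smooth frames at 16 ms plus 10 hitches at 40 ms.
sample = [16.0] * 990 + [40.0] * 10
avg, low = summarize_frame_times(sample)
print(f"average: {avg:.1f} fps, 1% low: {low:.1f} fps")
```

With this made-up data the average lands just above 60 fps while the 1% low is only 25 fps, which is the kind of gap that makes a card that "cracks 60 fps" still feel rough in practice.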

Somehow the Radeon 5500 XT is almost 20% faster than the 6500 XT, but we're probably not meant to talk about that. As usual the GTX 1650 Super looks like one of the best budget graphics cards, embarrassing not only the much newer 6500 XT, but also its predecessor, the vanilla 1650.

Jumping up a bit, the GTX 1080 and Vega 56 are an interesting matchup, we're looking at very comparable performance here with the GeForce GPU ahead by just a few frames, and both were good for over 100 fps.

The RTX 3050 was able to match the RX 6600, and that's a great result for the GeForce given it's typically slower despite currently costing more. It was also interesting seeing the 5700 XT beating the GTX 1080 Ti, despite being 10% slower than the RTX 2060 Super, so overall not a great result for the RDNA GPU.

RDNA and RDNA2 don't look particularly impressive in general, hitting a system bottleneck at just shy of 160 fps for parts like the RX 6800, 6800 XT, 6900 XT and 6950 XT.

Meanwhile, the RTX 3060 Ti was good for 171 fps with numerous Turing and Ampere GPUs delivering between 171 and 178 fps.

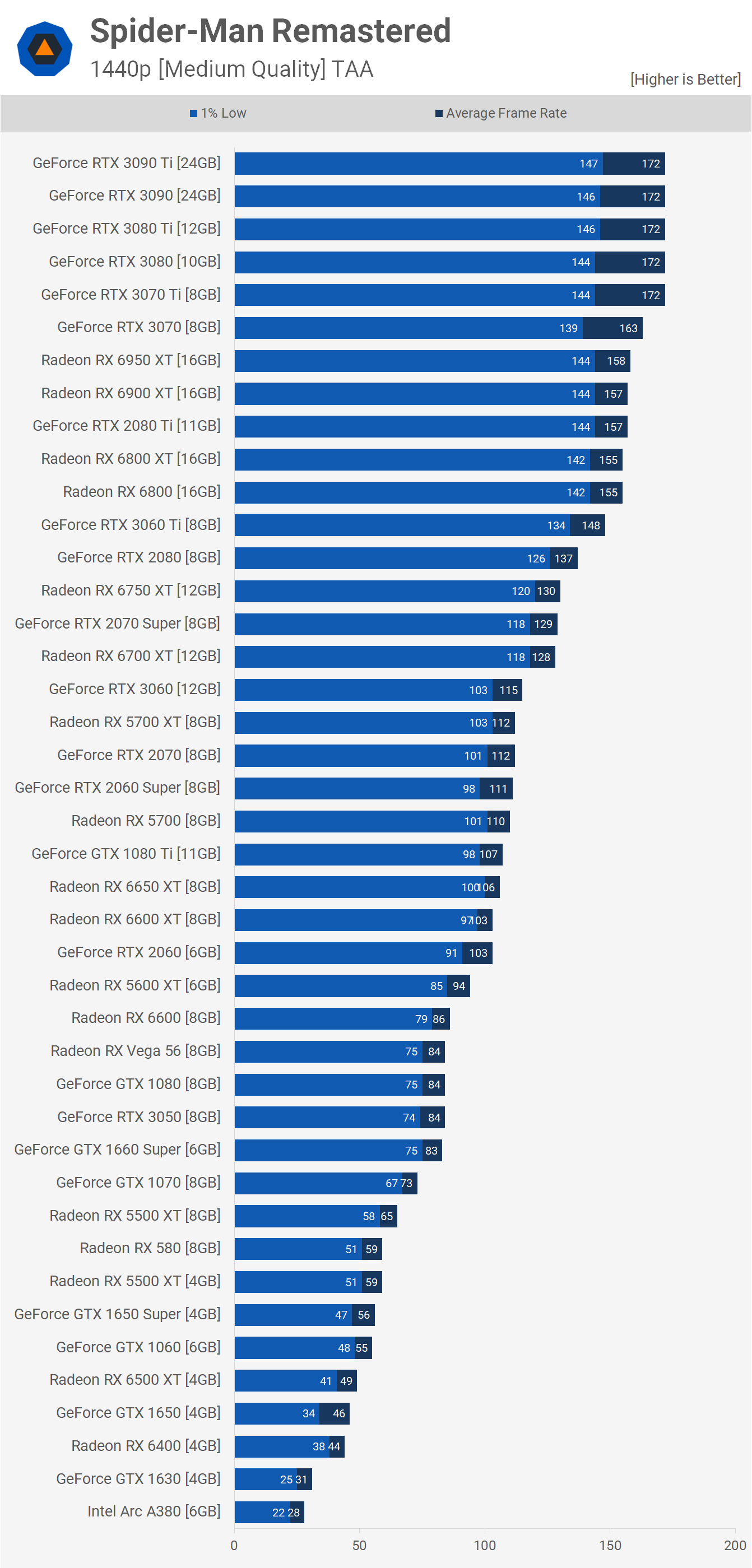

Jumping up to the 1440p resolution saw parts such as the A380 and GTX 1630 drop to around 30 fps on average, or unplayable territory in our opinion. For those of you targeting 60 fps, the RX 580 and 4GB 5500 XT are the bare minimum, which is not so bad given we are using the medium quality preset.

AMD's Vega 56 matched the GTX 1080 and quite embarrassingly for Nvidia, kept pace with the RTX 3050. This time the 5700 XT was able to match the 2060 Super and 3060, which is a better result for the popular RDNA GPU.

Unfortunately for AMD though, RDNA2 didn't hang in there all that well with Ampere. For example, the 6800 XT was 10% slower than the 10GB RTX 3080 and that's despite the GeForce GPU running into a CPU bottleneck at 172 fps. That also means the majority of the mid-range to high-end Ampere GPUs were frame capped by the CPU.

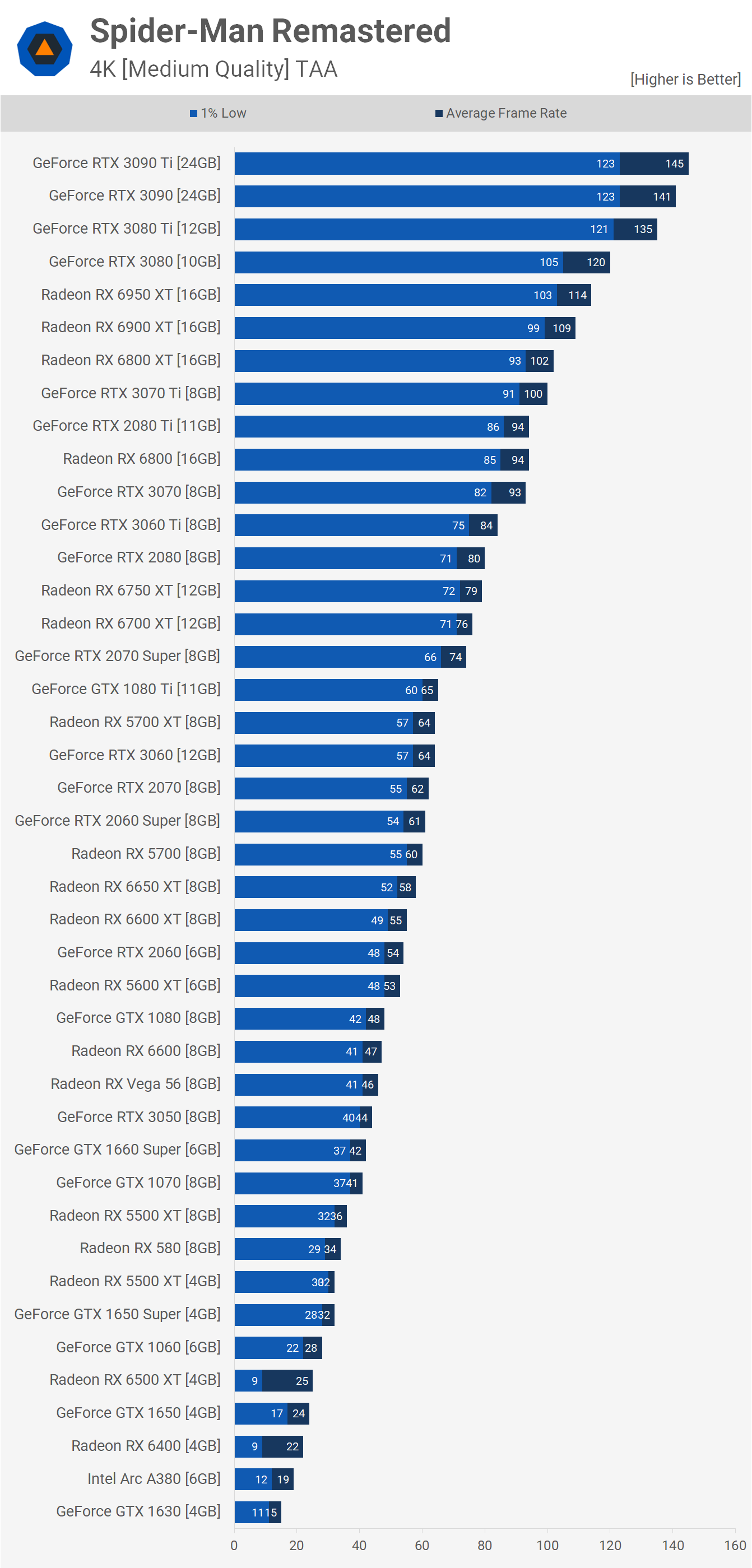

Those hoping to play at 4K with medium quality settings and still hit 60 fps will require a decent GPU. The Radeon RX 5700 XT managed 64 fps, matching the RTX 3060 exactly.

Then for 100 fps or more, you'll need the RTX 3070 Ti or 6800 XT. RDNA2 stacks up a bit better here, but the RTX 3090 Ti was still a whopping 27% faster than the 6950 XT.

Very High Quality Setting

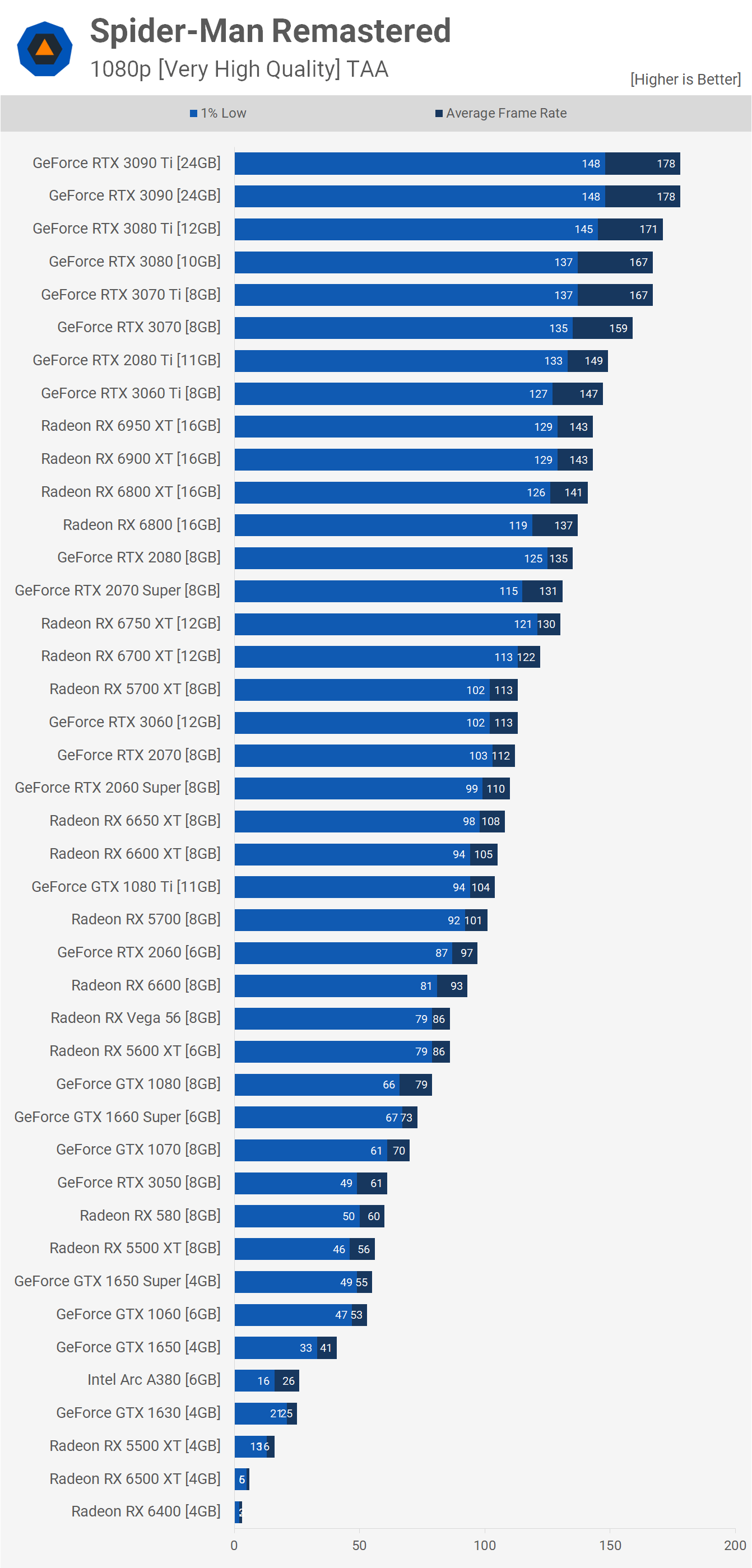

Moving to the very high quality preset, we'll go back and start with the 1080p data. If you're unfortunate enough to own an RX 6400 or RX 6500 XT, you won't be able to even get a glimpse of how Spider-Man Remastered looks when maxed out. The game was a blurry mess and we were unable to run our test, but after 30 seconds these are the numbers we received... 3 and 6 fps on average. The 4GB 5500 XT was a lot better but also a long way off delivering playable frame rates, as were the GTX 1630 and Intel Arc A380.

The game became quite playable with the GTX 1060 6GB, GTX 1650 Super and RX 5500 XT. The RX 580 was very impressive, too, spitting out 60 fps on average which somehow meant it was able to match the RTX 3050, which is a bit confusing.

Vega 56 pulls away from the GTX 1080 by a 9% margin using the very high quality preset. Then we have the vanilla 5700 matching the RTX 2060 and GTX 1080 Ti, while the 5700 XT is up there with the RTX 3060, 2070 and 2060 Super.

The RDNA2 GPUs are less impressive. The 6800 XT, 6900 XT and 6950 XT all hit a brick wall around 140 fps, making the old RTX 2080 Ti faster, while they're only roughly on par with Ampere's RTX 3060 Ti.

For Nvidia the system limit is reached at 178 fps with the RTX 3090 Ti and RTX 3090 making them 24% faster than AMD's best offerings.

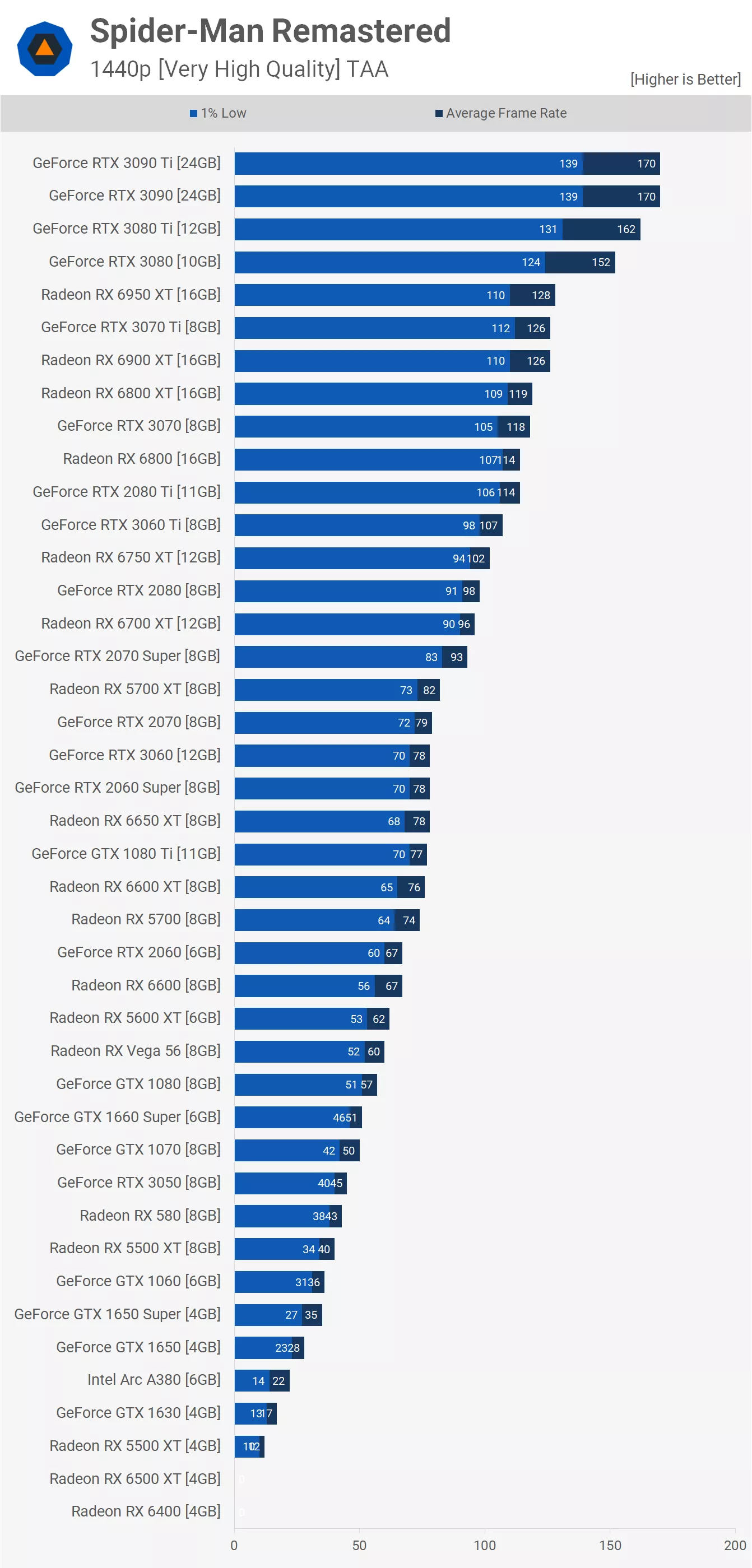

Increasing the resolution to 1440p on this quality preset breaks the RX 6400 and 6500 XT as both crashed to desktop. The 4GB 5500 XT, GTX 1630 and A380 were no better and the game didn't start to resemble playable performance until we got to the GTX 1650 Super, but of course, even here with 35 fps on average the result was less than ideal.

The minimum here for playable performance is the GTX 1070, 1660 Ti or 1660 Super. The GTX 1080 was noticeably smoother with 57 fps on average and then Vega 56 with 60 fps on average. Then between 74 fps and 79 fps we have seven GPUs ranging from the RX 5700 to the RTX 2070 with several current-gen offerings such as the Radeon 6600 XT, 6650 XT and GeForce RTX 3060.

The next performance tier was taken with the RTX 2070 Super, 6700 XT and RTX 2080, while the 6750 XT and RTX 3060 Ti were competitive.

At the high-end RDNA2 is nowhere once again as the RTX 3080 10GB delivered 28% more frames than the 6800 XT. For some reason Radeon GPUs appear CPU bound at just shy of 130 fps, whereas Nvidia GPUs were able to push up to 170 fps.

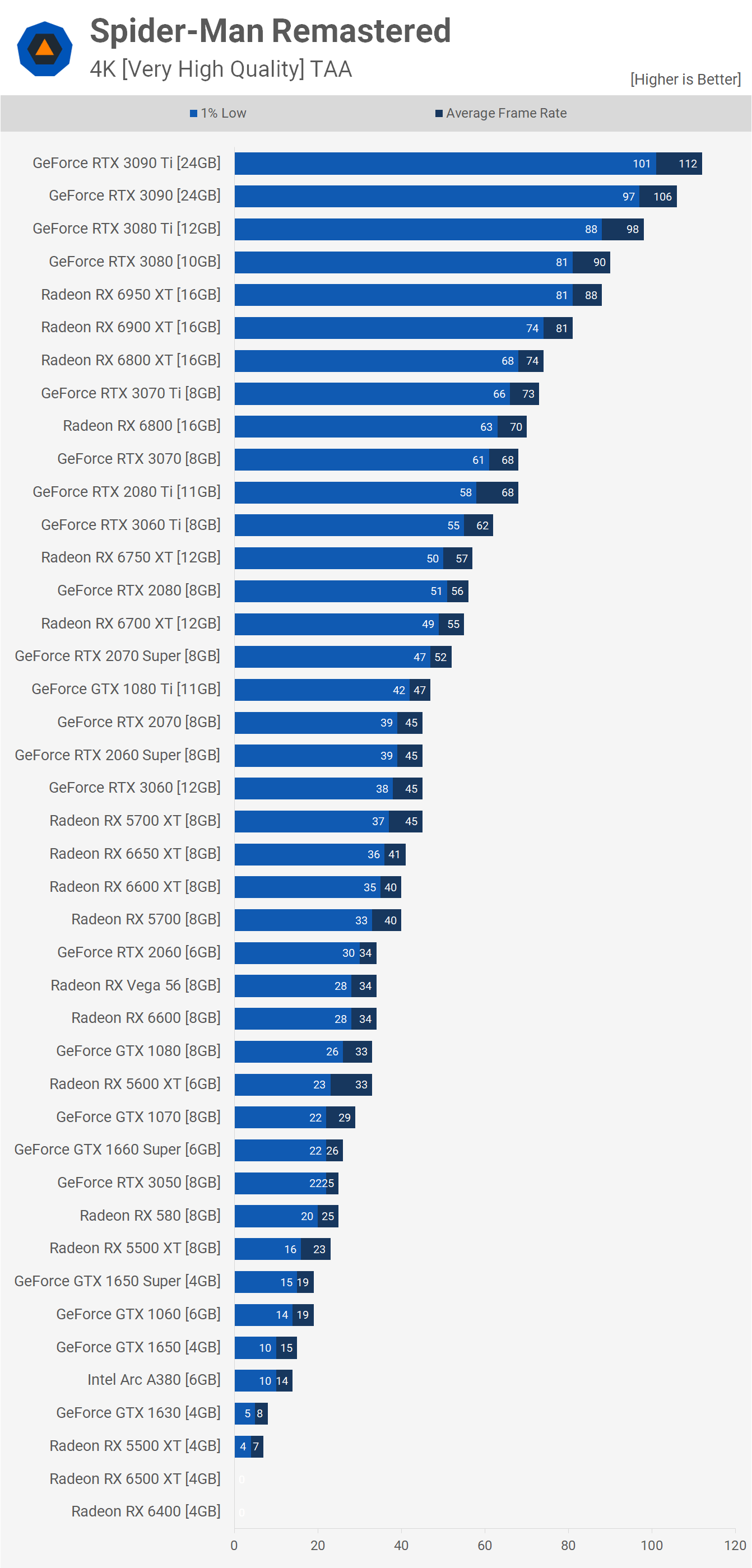

The 4K very high results aren't terribly surprising. The Pascal flagship GTX 1080 Ti was good for just 47 fps on average and it took the RTX 2080 Ti to comfortably crack the 60 fps barrier.

Meanwhile, the most affordable Radeon GPU to achieve this was the Radeon RX 6800, matching the RTX 3070 and 3070 Ti.

The GeForce RTX 3080 was 22% faster than the 6800 XT and it took AMD's 6950 XT to match the original 10GB 3080. Again, where Radeon GPUs maxed out at around 90 fps, the flagship GeForce GPU pressed on to deliver over 100 fps, hitting 112 fps to make it 24% faster.
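The "X% faster" margins quoted throughout are simple ratios of average frame rates. As a quick sketch of that arithmetic, the snippet below uses the 112 fps figure and the approximate 90 fps Radeon ceiling from the paragraph above; the helper names are ours, and 90 is a rounded value, so the result is only approximate.

```python
def percent_faster(fps_a, fps_b):
    """How much faster A is than B, as a percentage of B's frame rate."""
    return (fps_a / fps_b - 1.0) * 100.0


def percent_slower(fps_a, fps_b):
    """How much slower A is than B, as a percentage of B's frame rate."""
    return (1.0 - fps_a / fps_b) * 100.0


geforce_fps = 112  # flagship GeForce at 4K very high, from the text
radeon_fps = 90    # approximate Radeon ceiling ("around 90 fps")

print(f"GeForce is {percent_faster(geforce_fps, radeon_fps):.1f}% faster")
print(f"Radeon is {percent_slower(radeon_fps, geforce_fps):.1f}% slower")
```

Note that the two figures are not symmetric: a card that is about 24% faster leaves its rival about 20% slower, because each percentage is measured against a different baseline.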

Ray Tracing Benchmarks

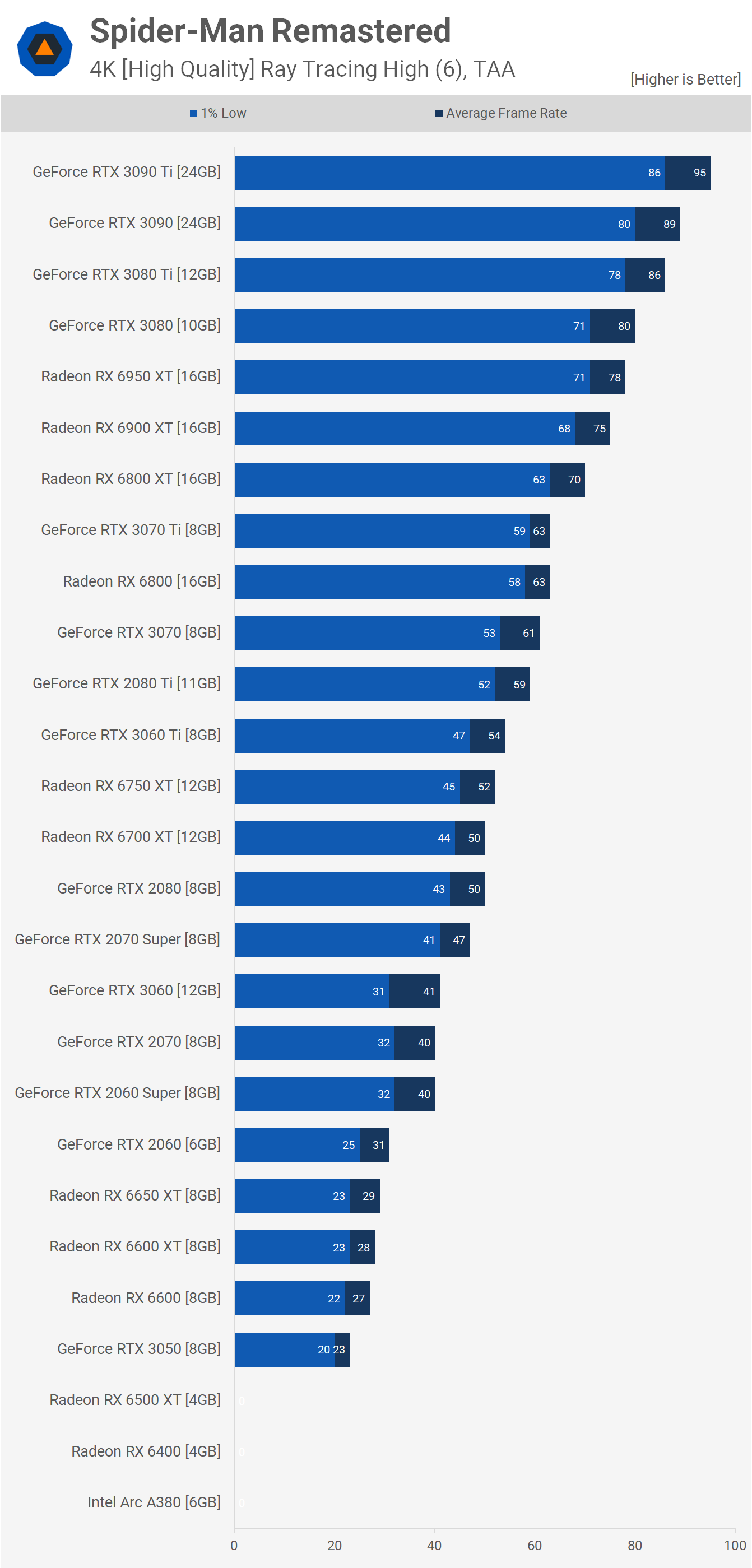

We also took a look at ray tracing performance using the high quality preset. RT effects were all set to high (not very high) and the default "6 objects" was selected.

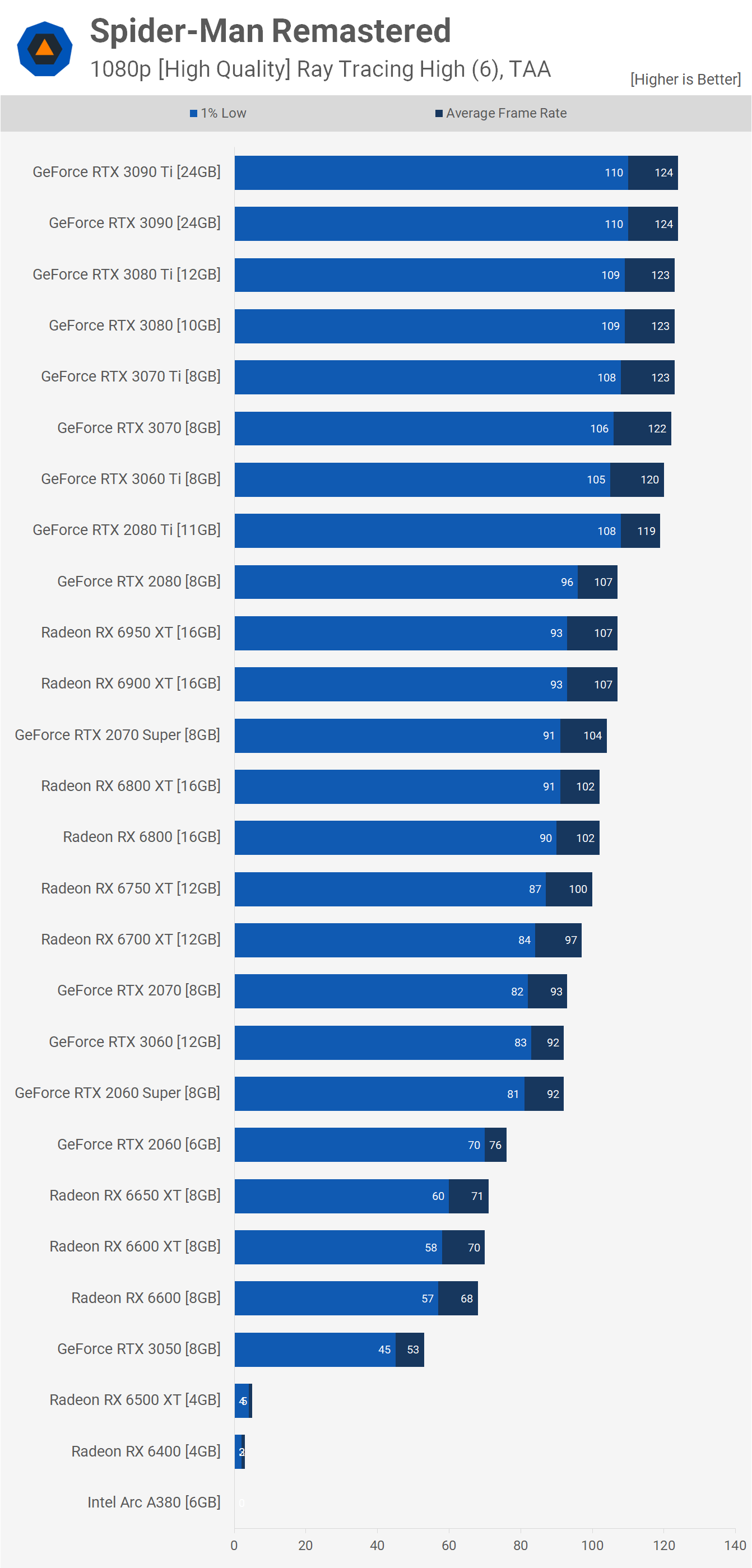

When compared to the "Very High" results we just looked at, performance at 1080p drops by 30% for the high-end GeForce GPUs and 25% for the high-end Radeon GPUs.
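Percentages can hide how much extra time each frame actually takes, so it can help to convert frame rates to frame times. The sketch below does that for the two GeForce ceilings quoted in this article (178 fps at very high, 124 fps with ray tracing). Both figures are described as system-limited, so treat the difference as whole-pipeline frame time rather than a pure GPU cost; the function name is our own.

```python
def frame_time_ms(fps):
    """Convert a frame rate into the time spent on each frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


very_high_ms = frame_time_ms(178)  # high-end GeForce, very high preset
ray_traced_ms = frame_time_ms(124)  # high-end GeForce, ray tracing enabled
added_ms = ray_traced_ms - very_high_ms

print(f"very high:  {very_high_ms:.2f} ms per frame")
print(f"ray traced: {ray_traced_ms:.2f} ms per frame")
print(f"difference: {added_ms:.2f} ms per frame")
```

The same 30% drop costs far more milliseconds at low frame rates than at high ones, which is why ray tracing hurts entry-level cards so much more visibly.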

But ignoring the high-end for a moment, we see that although entry-level GPUs such as the Arc A380, RX 6400 and 6500 XT claim to support ray tracing (and technically do), they also don't in any kind of usable capacity.

The RTX 3050 was good for 53 fps, that's certainly playable and the game looked great. But for a 60 fps experience the Radeon 6600, 6600 XT or 6650 XT will be required and it's interesting to see all three GPUs delivering basically identical performance, suggesting that the ray tracing portion of the pipeline is bottlenecking RDNA2 here.

As we already knew, even Turing (RTX 20) handles ray tracing better than RDNA2 and this has allowed the RTX 2060 to beat the 6650 XT, whereas the Radeon GPU was 11% faster using the very high quality preset with RT effects disabled.

Beyond the RTX 2060, the game was very playable with ray tracing enabled as the 2060 Super and RTX 3060 pumped out 92 fps and the 6700 XT an impressive 97 fps. The RDNA2 architecture hit a wall at just over 100 fps. Meanwhile, the high-end GeForce GPUs went as high as 124 fps with most models running into a system bottleneck at 1080p as the RTX 3070 was just 2 fps behind the RTX 3090 and 3090 Ti.

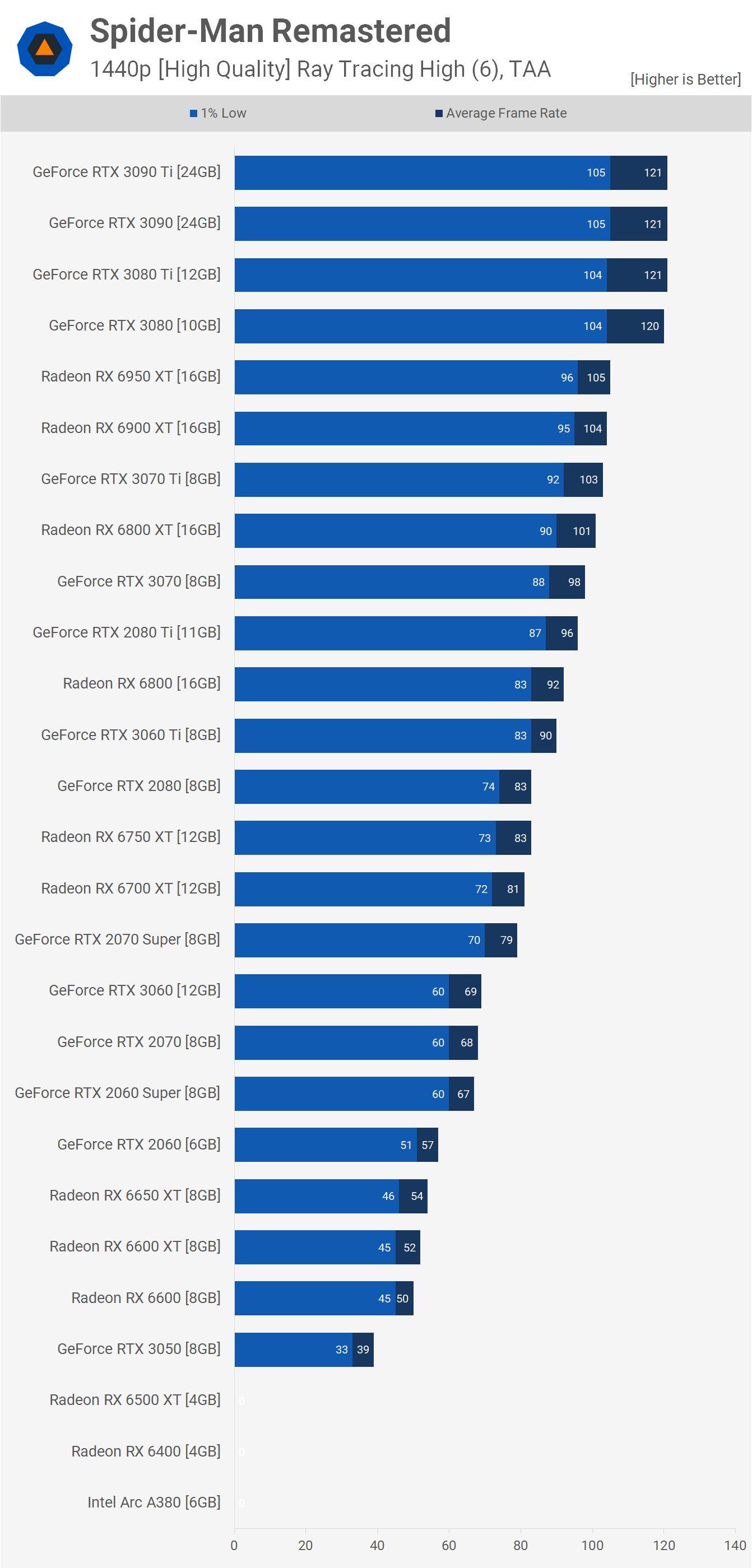

For those of you who want to enjoy ray traced effects at 1440p you'll be pleasantly surprised to learn that you can comfortably do so with an RTX 2060 Super, RTX 2070 or RTX 3060, while AMD users will require the 6700 XT or 6750 XT, though both models were quite a bit faster than the GeForce GPUs just mentioned.

For a high refresh rate experience, the RTX 3060 Ti and RX 6800 work well. The Radeon GPUs maxed out at just over 100 fps, the 6950 XT, for example, was just a few frames faster than the RTX 3070 Ti, while the RTX 3080 and faster GPUs all hit a system limit at 120 fps.

For 4K gaming with ray tracing enabled you'll want at least an RTX 2080 Ti, RTX 3070 or RX 6800 for around 60 fps. The 6950 XT struggled to match the original RTX 3080, but at least here it was within a few frames. For maximum performance you'll want an RTX 3080 Ti, RTX 3090 or 3090 Ti which is not at all surprising.

With Great Power Comes Great Responsibility

After spending most of the past week benchmarking Spider-Man Remastered with over 1,000 passes, we've now got a pretty good idea of what it takes to run this title. The good news is that for gamers targeting only 60 fps, the game can be run on pretty modest hardware, especially if you're willing to dial down the quality settings a little.

For example, 1080p medium only requires a Radeon 6500 XT, and that means any relatively modern GPU that's not a GTX 1630 or A380 will work just fine. The 6500 XT 1% lows were still a bit sketchy, so let's say the GTX 1650 is the minimum requirement here. Then at 1440p, the Radeon RX 580, 5500 XT, GTX 1080 or RTX 3050 will work well.

In our opinion, Spider-Man Remastered is one of the best showcases of ray tracing effects so far. The game clearly looks better with RT enabled which is great news, and although the performance hit remains massive, for those targeting 60 fps at 1080p you can still enjoy the game with ray tracing using fairly modest hardware such as the RX 6600 or RTX 2060. Even at 1440p, the requirements aren't extreme: the 2060 Super, RTX 3060 or 6700 XT will provide over 60 fps, and well over in the case of the 6700 XT.

It does seem that at the high end you're almost always going to be limited by something other than your graphics card in this title, unless you game at 4K with quality settings maxed out. That's probably how you'd want to play anyway, as the RTX 3080 Ti was still good for over 80 fps with 1% lows well over 60 fps.

But it does appear that the bigger challenge here might be CPU performance. We used the Ryzen 7 5800X3D for all our testing and with the higher-end GPUs we often saw CPU utilization peaking at around 70%, which is exceptionally high for a game. However, the game was entirely stutter free and ran incredibly smoothly, much more so than a lot of the games we test, especially those that are ported to PC.

For those of you using a slower CPU with a high-end GPU, there's a good chance you'll see a performance limitation from your CPU at resolutions below 4K, and without conducting the appropriate level of testing we can't comment on how smooth the game runs with lesser CPUs.

It's also worth keeping in mind that if you're lowering visual quality settings in the hope of raising frame rates and it's not working, there's a good chance your CPU is to blame, and if that is the case you might as well crank up the quality settings and max out your GPU, or at least get closer to maxing it out.
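That rule of thumb is easy to check for yourself: benchmark the same scene at two quality presets and see whether the frame rate moves. The sketch below is one rough way to express it. The function name, the 5% tolerance and the sample numbers are all assumptions for illustration, not values from our testing.

```python
def looks_cpu_bound(fps_high_quality, fps_low_quality, tolerance=0.05):
    """Return True if lowering quality raised fps by no more than `tolerance`.

    `tolerance` is a fraction (0.05 means 5%) and is an arbitrary threshold
    chosen to absorb normal run-to-run variance.
    """
    if fps_high_quality <= 0 or fps_low_quality <= 0:
        raise ValueError("frame rates must be positive")
    gain = fps_low_quality / fps_high_quality - 1.0
    return gain <= tolerance


# Hypothetical results from the same benchmark pass on two systems.
print(looks_cpu_bound(fps_high_quality=100, fps_low_quality=103))  # barely moved
print(looks_cpu_bound(fps_high_quality=60, fps_low_quality=95))    # scaled well
```

If the first case describes your system, dropping presets is costing you image quality for nothing, and you're better off turning the settings back up.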

If time allows we may want to run a CPU benchmark for this title. We're interested to see how budget CPUs like the Core i3-12100 handle this game. As for RAM usage, it appears that you'll get away with 16GB just fine, but as always 32GB is a nice luxury, especially for those that like to multi-task while gaming.