In a nutshell: Steam’s hardware survey for November is out, showing that AMD is still chipping away at Intel’s once-insurmountable lead in the CPU space. Elsewhere, the GTX 1060 keeps its long-held spot as the most popular video card, though its lead is shrinking, and the RTX 2060 is cementing its place as the most common Turing GPU among Steam users.

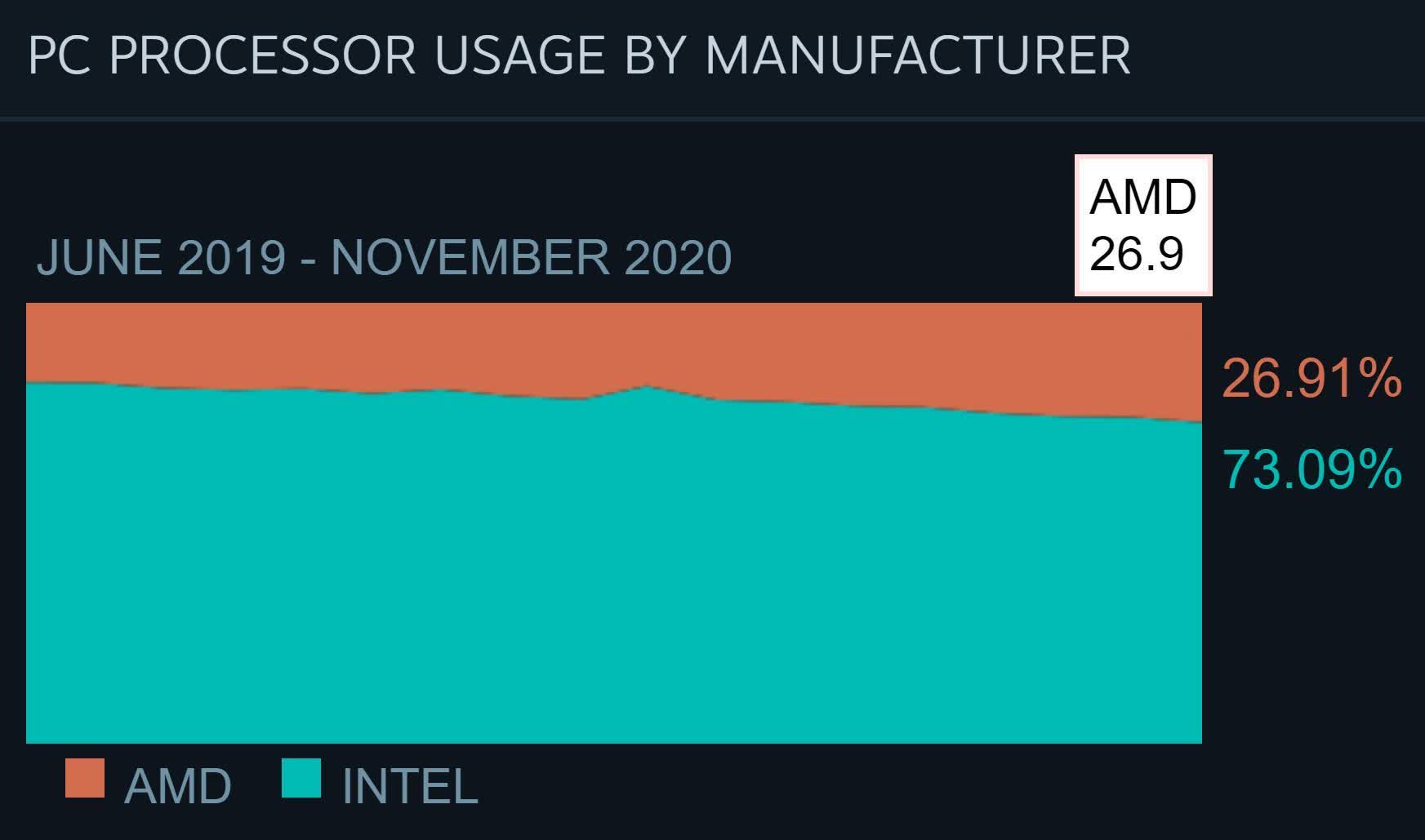

It’s no secret that AMD has been closing the gap on Intel in the Steam Survey for some time now. Team Red took more than 25 percent of the CPU share in September; two months later, that figure stands at 26.91 percent. As recently as June 2019, Intel was sitting pretty with an 82 percent share.

AMD has Ryzen to thank for taking the fight to its long-time rival. The processors have been pushing out Intel’s offerings since their arrival in 2017 and have improved with each generation. With the 7nm Ryzen 5000 series proving to be a fantastic choice for work and play (provided you can find one) and the 5nm Zen 4 set for release next year, expect AMD’s share to start increasing at a faster pace. Meanwhile, Intel has its Rocket Lake processors arriving in the first half of 2021, though they use a variant of Sunny Cove backported to the 14nm process.

AMD’s video card share among Steam users remains pretty flat, having increased from 14.8 percent in June last year to just 16.5 percent in November. And while the Radeon RX 6000 series will doubtlessly sell well—we loved the RX 6800 and RX 6800 XT—AMD is coming up against some monstrous competition in the form of Nvidia’s Ampere, particularly the new RTX 3060 Ti, a $400 card that outperforms the RTX 2080 Super.

AMD’s highest-ranked video card in the Steam Survey is the RX 580 in tenth place, holding a 2.14 percent share. The GTX 1060 stays on top with 10.6 percent, though its lead keeps declining, while the RTX 2060 (3.51 percent) and RTX 2070 Super (2.29 percent) are the only 20-series cards in the top ten.

Elsewhere, more people are opting for 16GB as their preferred amount of system RAM, and despite QHD (and higher) resolution monitors getting cheaper, the number of users with 1920 x 1080 displays went up 0.57 percent last month.

https://www.techspot.com/news/87829-amd-erodes-more-intel-cpu-share-steam-survey.html