Why it matters: Chiplets are becoming increasingly prominent in processor design. AMD has embraced them for its CPUs, and Intel will soon follow suit. Although GPUs have been slow to migrate to chiplets, AMD kickstarted the shift with its RDNA 3 lineup, and a new patent suggests the company plans to push onward with future Radeon generations.

AMD recently submitted a patent describing a complex chiplet-based design that may (or may not) drive future graphics card lineups. It could signify the beginning of a radical change in GPU design similar to what's currently occurring with CPUs.

A chiplet-based design splits a processor into multiple smaller dies, each potentially specializing in a function such as logic, graphics, or memory. This has multiple advantages compared to traditional monolithic products that pack everything into one large chip.

Chiplets can lower manufacturing costs by allowing companies to mix different process nodes into the same product, using older and cheaper components in places where cutting-edge transistors aren't necessary. Also, smaller dies result in higher yields and fewer defective chips. Moreover, splitting up the chips enables greater flexibility in processor design, possibly creating room for additional dies.
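To put rough numbers on the yield argument, here is a minimal sketch using the textbook Poisson yield model, in which the chance that a die has zero defects falls off exponentially with its area. The defect density and die areas are illustrative assumptions, not figures from AMD, a foundry, or the patent.

```python
import math


def poisson_yield(area_mm2: float, defects_per_mm2: float) -> float:
    """Expected fraction of defect-free dies under a simple Poisson model."""
    return math.exp(-area_mm2 * defects_per_mm2)


# Hypothetical values chosen only for illustration.
DEFECT_DENSITY = 0.001   # defects per mm^2 (assumed)
MONOLITHIC_AREA = 600.0  # one large die, in mm^2 (assumed)
CHIPLET_AREA = 150.0     # one of four smaller dies, in mm^2 (assumed)

monolithic_yield = poisson_yield(MONOLITHIC_AREA, DEFECT_DENSITY)
chiplet_yield = poisson_yield(CHIPLET_AREA, DEFECT_DENSITY)

print(f"Monolithic die yield: {monolithic_yield:.1%}")
print(f"Single chiplet yield: {chiplet_yield:.1%}")
```

Under these assumptions, roughly 55% of the large dies come out defect-free versus about 86% of the small ones. A defect on a chiplet also scraps only 150 mm² of silicon rather than the full 600 mm². Real yield models are more involved, and this sketch ignores packaging and die-to-die interconnect costs.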

AMD fully committed to chiplets beginning with Zen 2 CPUs, and the reorganization enabled the rise of Ryzen 9-class products. Intel will debut its take on chiplets (dubbed "tiles") when the Meteor Lake CPU series launches later this month.

While graphics cards might not benefit as much from chiplets at first, AMD took some initial steps with the Radeon RX 7000 series. The lineup's higher-end GPUs each pair a large graphics die with several smaller memory dies.

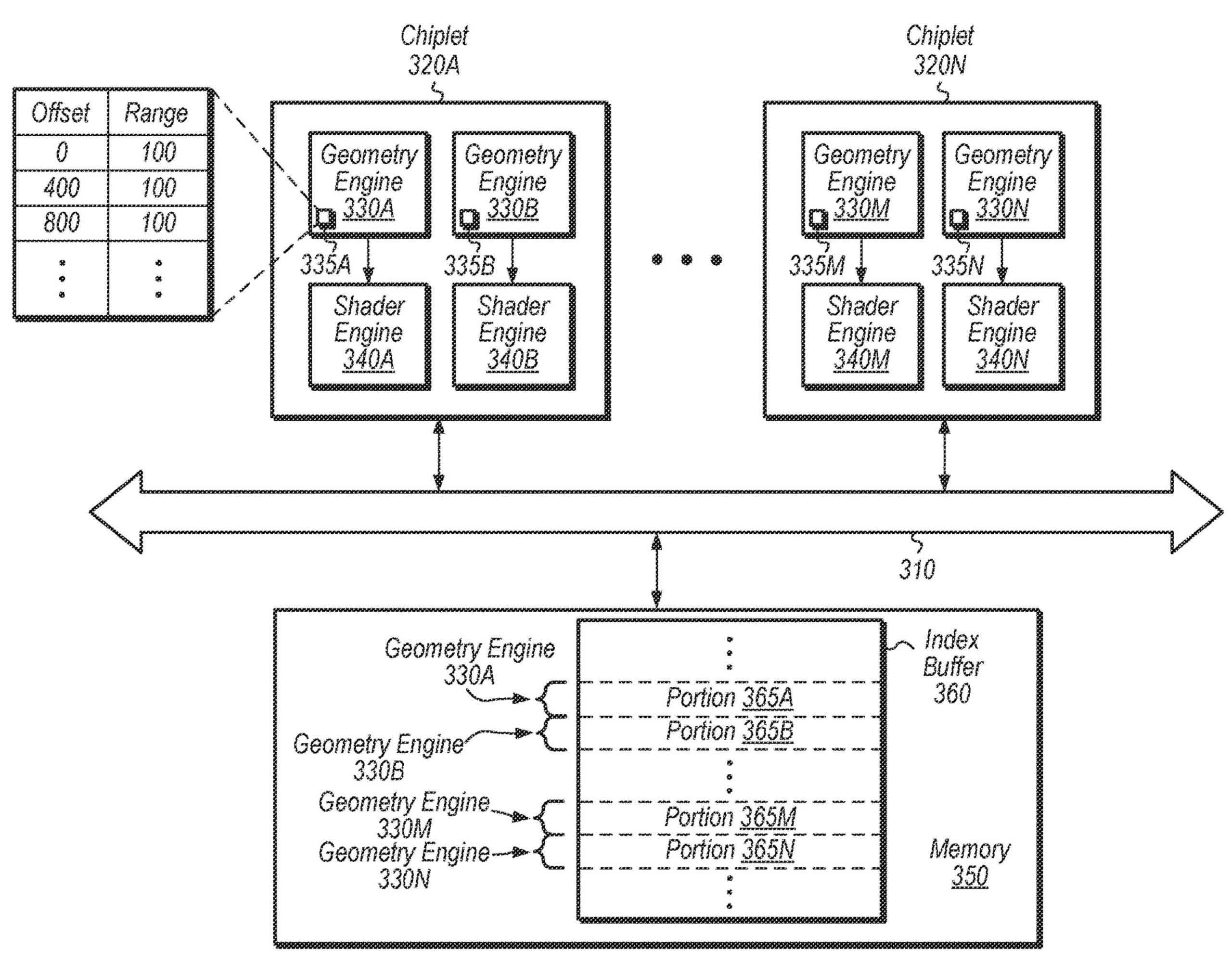

The patent, titled "Distributed Geometry," describes a system that splits graphics tasks across multiple dies without a central unit to direct the others. Questions remain regarding how the dies would stay in sync. Patents don't always become actual products, of course, but the document hints at AMD's commitment to a chiplet-based future. If AMD pursues the design outlined in the patent, it would likely first emerge in the RDNA 5 lineup, expected to appear in 2025.

RDNA 4 is expected to launch sometime next year, but if rumors prove true, the GPU family will only consist of mid-range cards headlined by a model that could outperform the $900 Radeon RX 7900 XT (and Nvidia's GeForce RTX 4080) for about half the price.

Meanwhile, Nvidia is rumored to be pursuing chiplets for the compute GPUs in its next architecture, but consumer products, including the upcoming GeForce RTX 5000 series, will likely remain monolithic.

https://www.techspot.com/news/101121-amd-patent-potentially-describes-future-gpu-chiplet-design.html