Something to look forward to: If you're in the market for a decent graphics card but don't want to join the Green Team, AMD has you covered pretty well as of late. The 5700 and 5700 XT are both great cards for their price, and soon, the RX 5500 will be joining their ranks.

Whereas the 5700 and 5700 XT perform well in the 1440p arena, AMD has positioned the 7nm RX 5500 as the king of 1080p gaming at a (presumably) reasonable price point.

The first RX 5500s will be available in pre-built desktops and notebooks, though the latter will ship with the tweaked RX 5500M. A discrete version of the card will follow at some point, but we don't have a specific release window for now.

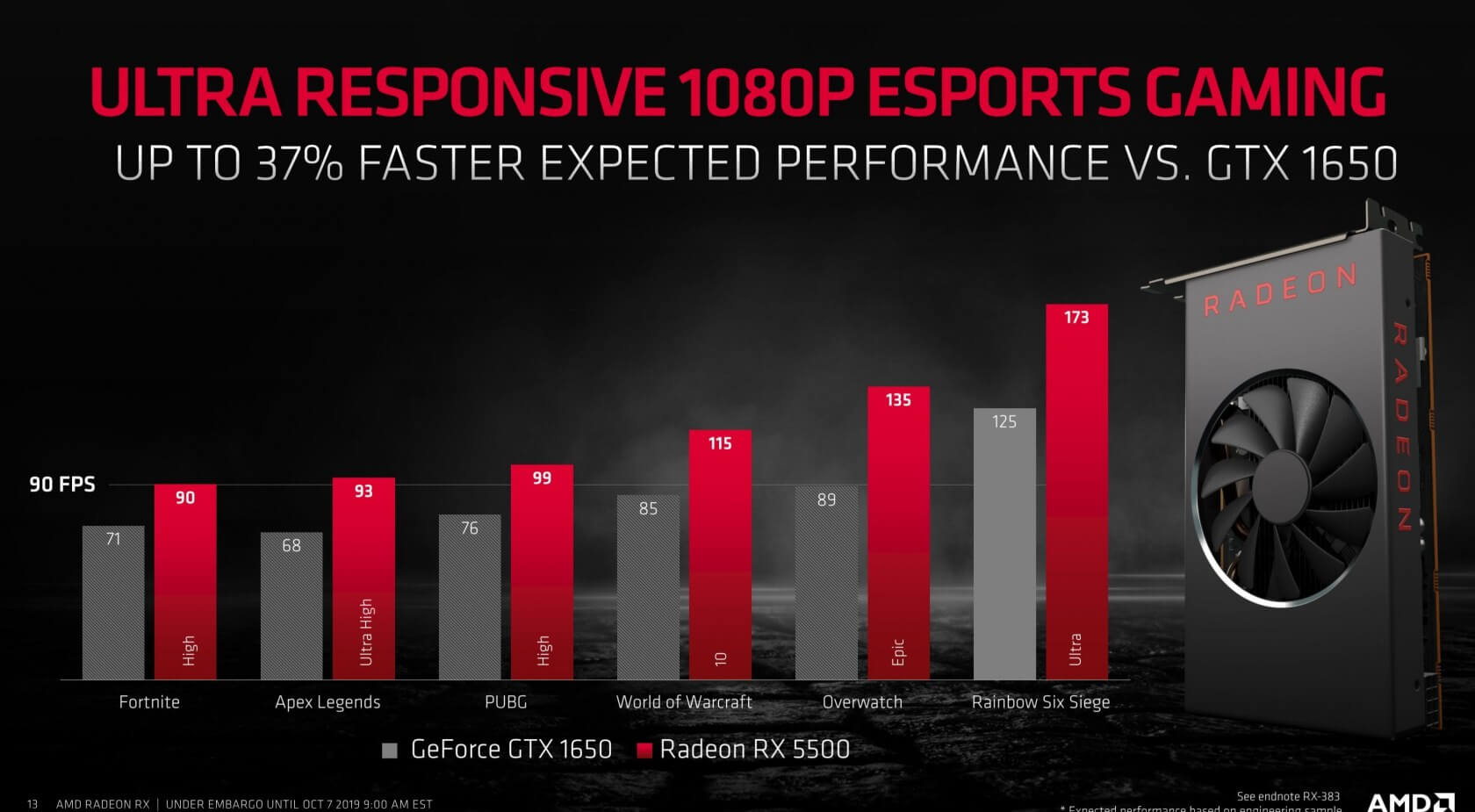

Preliminary information aside, what exactly does the 5500 bring to the table? According to AMD, it boasts "up to" 37 percent faster performance on average than its competition (Nvidia's GTX 1650).

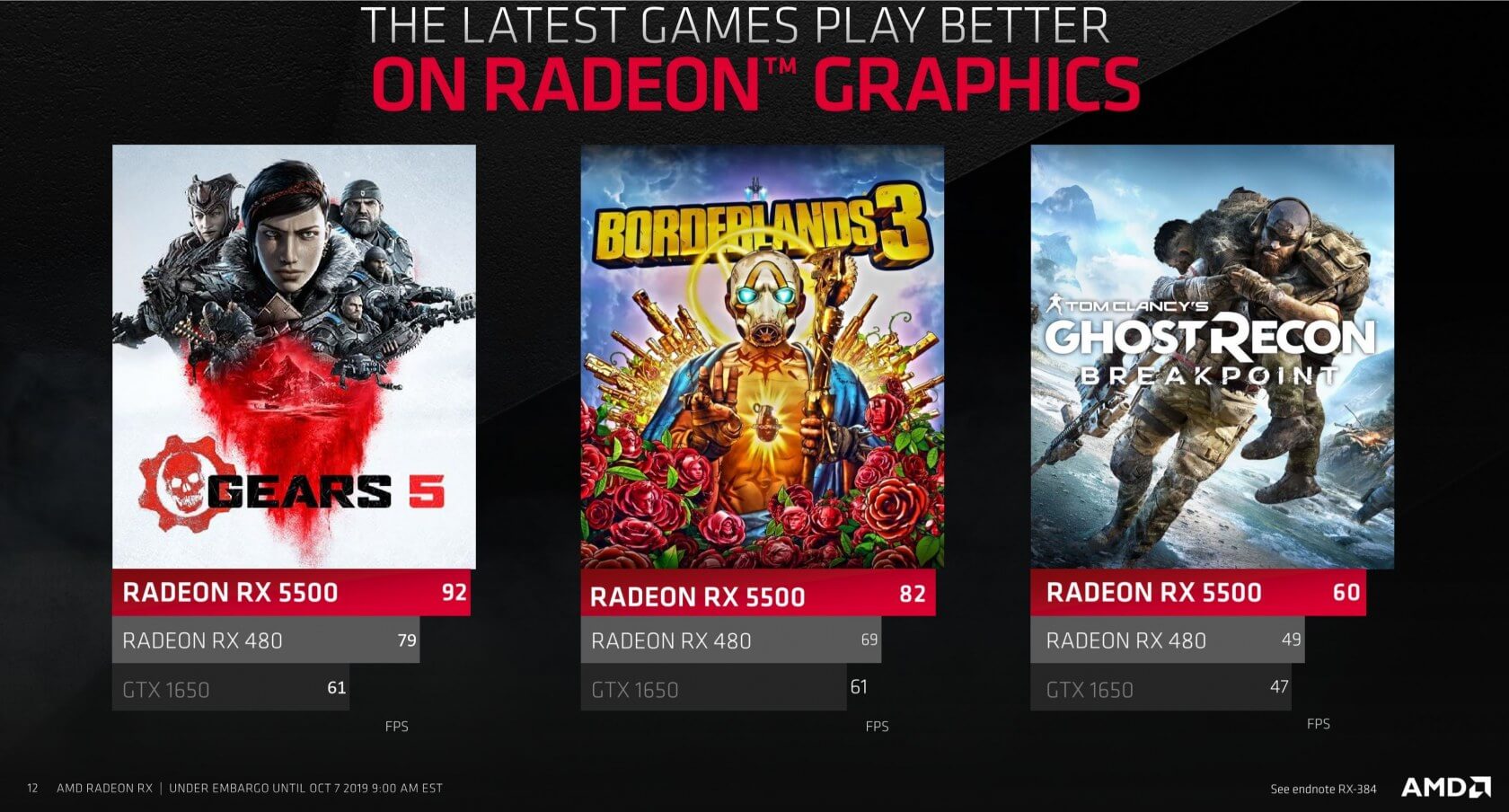

In more concrete numbers, AMD says the RX 5500 can deliver up to 60 FPS in "select" AAA titles (such as Gears 5 and Ghost Recon Breakpoint), and up to 90 FPS in eSports games (Fortnite, Apex Legends, Overwatch). As usual, we recommend taking manufacturer claims with a grain of salt until third-party benchmarks (such as our own) hit the web.

The 5500 features standard clock speeds of up to 1717MHz and boost clocks of up to 1845MHz, PCIe 4.0 support, as well as a 128-bit memory interface and 8GB of GDDR6 memory. Further, AMD says the RX 5500 will have access to many of the same features the 5700 and 5700 XT do, including Radeon Image Sharpening and Radeon Anti-Lag.

We don't know how much the discrete version of AMD's RX 5500 will cost at launch, but ideally, it shouldn't be too far off from the GTX 1650's price tag. If it were much pricier, AMD's performance comparisons wouldn't make much sense. For reference, the 1650 will currently run you about $150-$170, depending on where you look.
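To put a rough number on that pricing logic, here is a minimal sketch that works out the price at which the RX 5500 would merely match the GTX 1650's performance per dollar. It assumes AMD's best-case "up to" 37 percent figure holds across the board and that raw performance per dollar is the only thing that matters. Both are simplifications, and the `break_even_price` helper is our own illustration, not anything AMD has published.

```python
def break_even_price(competitor_price: float, speedup: float) -> float:
    """Price at which a faster card matches the competitor's performance per dollar.

    speedup is a fraction, e.g. 0.37 for "37 percent faster".
    """
    return competitor_price * (1.0 + speedup)


# AMD's "up to" claim, treated here as a best-case upper bound (assumption).
CLAIMED_SPEEDUP = 0.37

# The GTX 1650 street price range quoted above.
for gtx_1650_price in (150, 170):
    ceiling = break_even_price(gtx_1650_price, CLAIMED_SPEEDUP)
    print(f"GTX 1650 at ${gtx_1650_price}: RX 5500 break-even near ${ceiling:.2f}")
```

Anything priced above the resulting figures would leave the RX 5500 behind the 1650 on performance per dollar even if AMD's most optimistic number held up, and real-world averages are likely to land lower than a best-case claim.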

Again, we recommend waiting for reviews before making a purchasing decision, but if you absolutely must snag a 5500 for yourself ASAP, the first machines with the card installed will come from HP and Lenovo this November, with Acer alternatives following in December. Notably, these rigs qualify for AMD's recently announced "Raise the Game" bundle deal.

Until then, you can look forward to the MSI Alpha 15's release later this month. The gaming notebook will include the RX 5500M, a stripped-down version of the standard RX 5500 with a base clock speed of up to 1448MHz, a boost clock of up to 1645MHz, and 4GB of GDDR6 VRAM.

https://www.techspot.com/news/82238-amd-new-radeon-rx-5500-boasts-up-37.html