In a nutshell: Intel's Core i9-13900K will reportedly feature higher clock speeds than its predecessor and twice as many E-cores, which should boost multi-threaded performance considerably. However, it will still be built on the Intel 7 node, so these gains will likely make it even more power-hungry and harder to cool than current Alder Lake chips.

Hardware reviewer ExtremePlayer has posted benchmarks of a Core i9-13900K qualification sample they managed to obtain. Unlike the engineering samples we've seen before, this CPU runs at final clock speeds, so it should perform nearly identically to shipping products.

This late sample features a 5.5 GHz all-P-core turbo clock and boosts up to a whopping 5.8 GHz when only two cores are loaded. In both cases, that's 300 MHz faster than the specially binned i9-12900KS.

> 13900K QS (Q1EQ) Tested https://t.co/Lo6w72LUNR
>
> ST up 10% (Avg)
> MT up 35% (Avg)
> IPC: ADL≈RPL pic.twitter.com/AGXLyHUHxi
>
> — HXL (@9550pro) July 13, 2022

Raptor Lake will rely on the same Intel 7 manufacturing process as Alder Lake, so the higher clock speeds come at the cost of higher power consumption and, in turn, more heat. During testing, ExtremePlayer saw package power consumption exceed 400 W, and even a 360 mm AIO cooler could not keep the CPU from throttling.

The Core i9-13900K does shine in synthetic tests, delivering a 10 percent performance boost in single-threaded benchmarks over the current-gen i9-12900K. This improvement appears to come mainly from the higher clock speeds, as the IPC difference between the two chips was within the margin of error.

Meanwhile, multi-threaded performance is up an impressive 35 percent on average, largely thanks to the eight extra E-cores. These small cores also boost up to 4.3 GHz, 400 MHz higher than those in the 12900K.

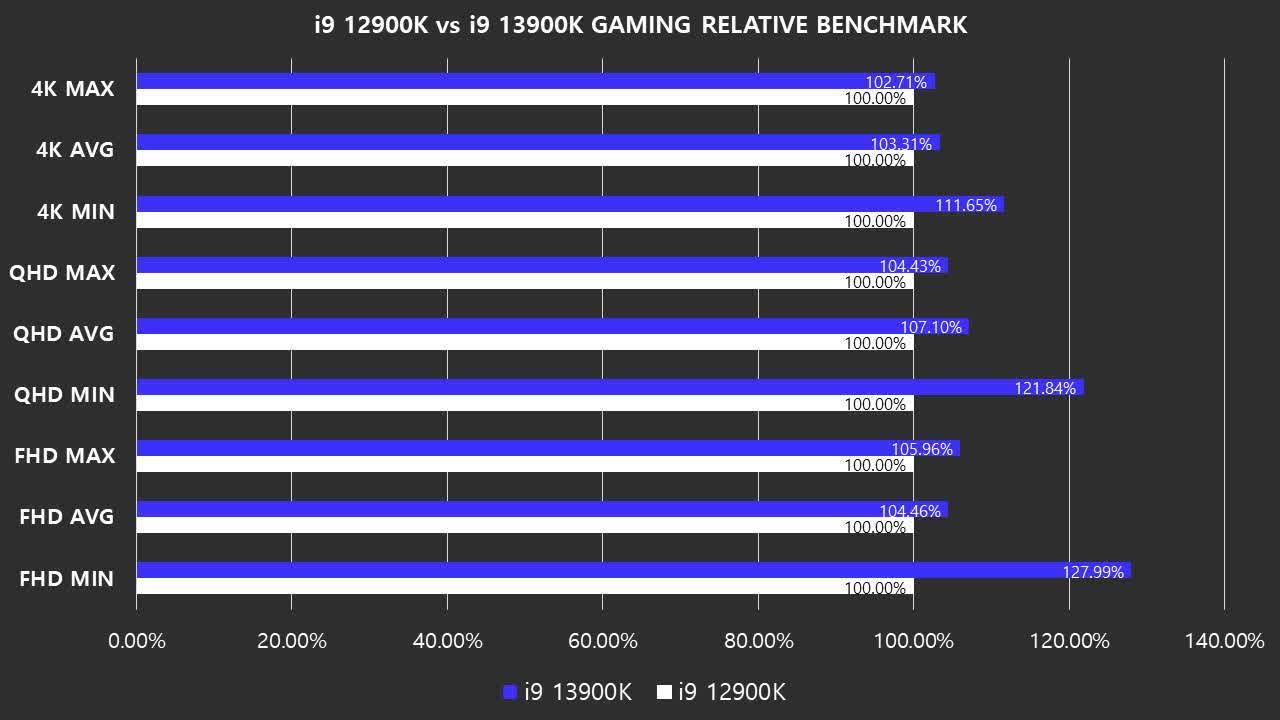

Gaming performance was also tested using 32GB of DDR5-6400 memory and an RTX 3090 Ti. At 1080p, the Raptor Lake processor delivered roughly 28 percent higher minimum frame rates, while average frame rates were only about 4 percent higher.

Intel might announce the Raptor Lake lineup at its Innovation event set for September 27, with retail availability expected in October.

https://www.techspot.com/news/95330-early-core-i9-13900k-sample-shows-massive-improvement.html