In a nutshell: Nvidia isn’t known for sneaking out new graphics cards without announcing them to the world, even when they offer only minor upgrades over their predecessors, but it looks as if a new GTX 1650 packing GDDR6 memory has arrived without much fanfare.

Speaking to PC Gamer, an Nvidia rep said the company released the new card because “the industry is running out of GDDR5, so we’ve transitioned the product to GDDR6.”

An early review of the new GTX 1650 version appeared on Expreview (via Videocardz). It uses the same TU117-300 GPU with 896 CUDA cores as its predecessor, though the base clock in the newer card drops from 1485 MHz to 1410 MHz, while the boost clock falls from 1665 MHz to 1590 MHz.

The GPU clocks might be lower, but the updated card comes with 12 Gbps memory modules instead of the 8 Gbps found in the GDDR5 version, pushing the maximum bandwidth from 128 GB/s to 192 GB/s.
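For readers who want to check the bandwidth figures, here is a minimal sketch of the arithmetic. It assumes the GTX 1650 uses a 128-bit memory bus, which the article does not state, and the function name is purely illustrative. Peak bandwidth is the per-pin data rate multiplied by the bus width, divided by eight to convert bits to bytes, which works out to 128 and 192 gigabytes per second.

```python
def peak_bandwidth_gb_per_s(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in gigabytes per second.

    data_rate_gbps: effective per-pin data rate in gigabits per second
    bus_width_bits: memory bus width in bits
    """
    # Total gigabits per second across the bus, divided by 8 bits per byte.
    return data_rate_gbps * bus_width_bits / 8


# Assumption: 128-bit bus (not stated in the article).
BUS_WIDTH_BITS = 128

gddr5 = peak_bandwidth_gb_per_s(8, BUS_WIDTH_BITS)   # GDDR5 version, 8 Gbps modules
gddr6 = peak_bandwidth_gb_per_s(12, BUS_WIDTH_BITS)  # GDDR6 version, 12 Gbps modules
increase_pct = (gddr6 - gddr5) / gddr5 * 100

print(f"GDDR5: {gddr5:.0f} GB/s, GDDR6: {gddr6:.0f} GB/s, increase: {increase_pct:.0f}%")
```

Under that assumption, the faster modules give the card 50 percent more peak memory bandwidth, which helps explain why it can come out ahead despite the lower GPU clocks.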

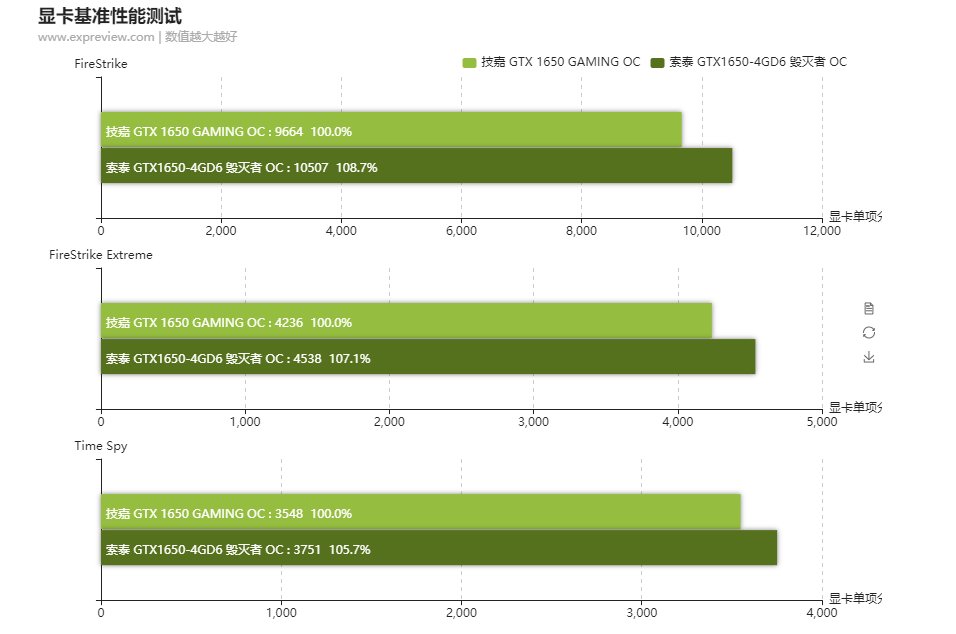

3DMark performance

Overall, Expreview found that the latest GTX 1650 is 5 to 8 percent faster in synthetic benchmarks and 2 to 10 percent faster in most games than its GDDR5-sporting predecessor.

We weren’t overly impressed with the GTX 1650, giving it a score of 60 in our review; the GTX 1650 Super, which uses GDDR6, is a much better buy.

Most modern graphics cards now use GDDR6. The GTX 1660 is one exception, but buyers will likely opt for the GTX 1660 Ti or GTX 1660 Super, both of which use GDDR6 instead of GDDR5.

https://www.techspot.com/news/84685-nvidia-releases-gtx-1650-gddr6-due-industry-running.html